Curiosity: building a Perplexity-like AI search tool using LangGraph

General Introduction

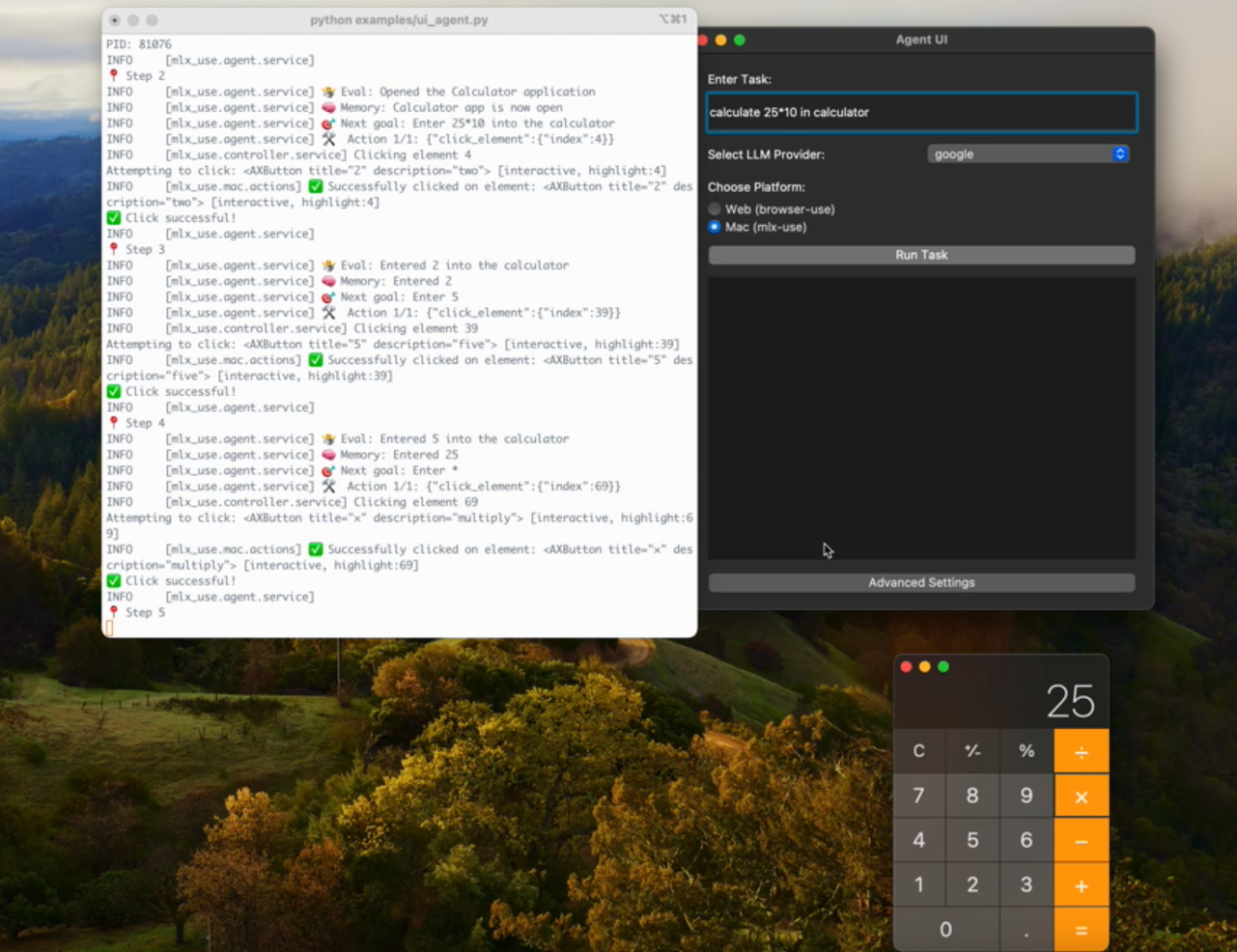

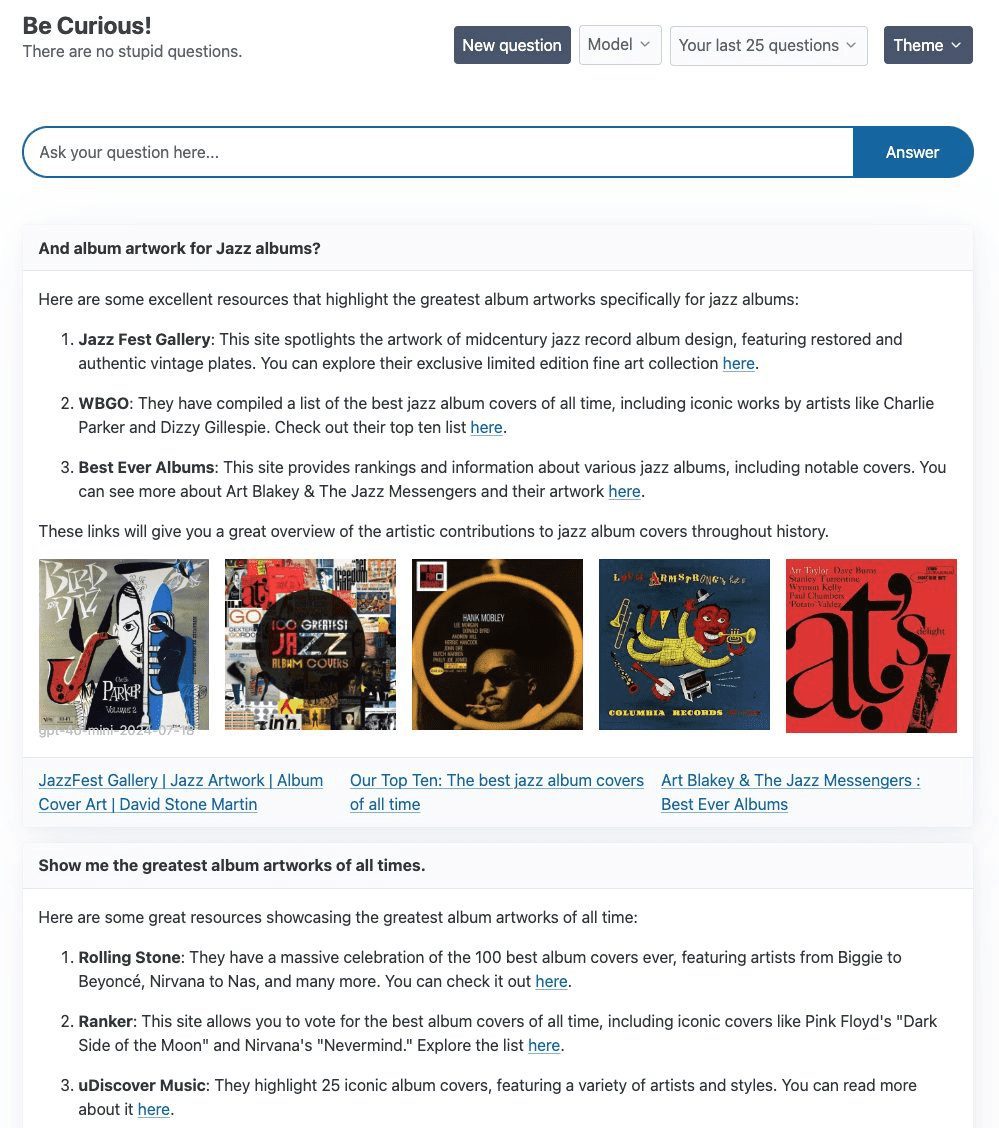

Curiosity is an exploratory, experimental project built mainly on the LangGraph and FastHTML technology stacks, with the goal of building a search product similar to Perplexity AI. At the heart of the project is a simple ReAct agent that uses Tavily search to enhance text generation. Curiosity supports several large language models (LLMs), including OpenAI's gpt-4o-mini, Groq's llama3-groq-8b-8192-tool-use-preview, and llama3.1 served through Ollama. The project focuses not only on the technical implementation but also devotes considerable effort to front-end design, aiming for a high-quality visual and interactive experience.
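To make the ReAct idea concrete, here is a minimal sketch of the reason, act, observe loop in plain Python. It is not Curiosity's code: the project builds its agent with LangGraph and Tavily, whereas `fake_model`, `fake_search`, and `react_loop` below are hypothetical stand-ins chosen so the loop runs without any API keys.

```python
from typing import Callable


def fake_search(query: str) -> str:
    """Hypothetical stand-in for a web search tool such as Tavily."""
    return f"canned result for '{query}'"


def fake_model(history: list[str]) -> str:
    """Hypothetical stand-in for an LLM.

    It requests a search first, then answers from the latest observation.
    """
    observations = [line for line in history if line.startswith("Observation: ")]
    if not observations:
        question = history[0].removeprefix("Question: ")
        return f"Action: search[{question}]"
    return "Final Answer: " + observations[-1].removeprefix("Observation: ")


def react_loop(question: str,
               model: Callable[[list[str]], str],
               tools: dict[str, Callable[[str], str]],
               max_steps: int = 5) -> str:
    """Alternate between model steps and tool calls until a final answer."""
    history = [f"Question: {question}"]
    for _ in range(max_steps):
        step = model(history)
        history.append(step)
        if step.startswith("Final Answer:"):
            return step.removeprefix("Final Answer:").strip()
        if step.startswith("Action:"):
            # Parse the illustrative format "Action: tool_name[tool input]".
            call = step.removeprefix("Action:").strip()
            name, _, arg = call.partition("[")
            observation = tools[name.strip()](arg.rstrip("]"))
            history.append(f"Observation: {observation}")
    return "No answer within the step limit."


answer = react_loop("What is LangGraph?", fake_model, {"search": fake_search})
print(answer)
```

In a real agent the model decides when to search and when to stop; the step limit is a simple guard against a loop that never reaches a final answer.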

Feature List

- Built on the LangGraph and FastHTML technology stacks

- Integrates Tavily search to enhance text generation

- Supports multiple LLMs, including gpt-4o-mini, llama3-groq, and llama3.1

- Provides flexible back-end switching capabilities

- The front-end is built with FastHTML and supports WebSockets streaming.
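One way to picture back-end switching is a small registry that maps a model choice to its provider and the API key it needs. The sketch below is an assumption about how such a switch could look, not the project's actual implementation: the `Backend` class, the `BACKENDS` table, and `select_backend` are hypothetical names, and it assumes the local Ollama backend needs no API key.

```python
import os
from dataclasses import dataclass
from typing import Mapping, Optional


@dataclass(frozen=True)
class Backend:
    provider: str
    model: str
    api_key_var: Optional[str]  # None: assumed to need no API key


# Hypothetical registry; the short keys are illustrative names.
BACKENDS = {
    "gpt-4o-mini": Backend("openai", "gpt-4o-mini", "OPENAI_API_KEY"),
    "llama3-groq": Backend("groq", "llama3-groq-8b-8192-tool-use-preview", "GROQ_API_KEY"),
    "llama3.1": Backend("ollama", "llama3.1", None),
}


def select_backend(name: str, env: Mapping[str, str] = os.environ) -> Backend:
    """Return the backend for `name`, failing early if its API key is missing."""
    try:
        backend = BACKENDS[name]
    except KeyError:
        raise ValueError(f"Unknown backend {name!r}; choose from {sorted(BACKENDS)}") from None
    if backend.api_key_var and not env.get(backend.api_key_var):
        raise RuntimeError(f"{backend.api_key_var} is not set")
    return backend


print(select_backend("llama3.1").model)
```

Checking the key at selection time surfaces a missing credential before the first query is sent, rather than in the middle of a search.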

Usage Guide

Installation steps

- Clone the repository:

      git clone https://github.com/jank/curiosity

- Make sure you have a recent Python 3 interpreter.

- Set up a virtual environment and install dependencies:

      python3 -m venv venv
      source venv/bin/activate
      pip install -r requirements.txt

- Create a .env file and set the following variables:

      OPENAI_API_KEY=<your_openai_api_key>
      GROQ_API_KEY=<your_groq_api_key>
      TAVILY_API_KEY=<your_tavily_api_key>
      LANGCHAIN_TRACING_V2=true
      LANGCHAIN_ENDPOINT="https://api.smith.langchain.com"
      LANGSMITH_API_KEY=<your_langsmith_api_key>
      LANGCHAIN_PROJECT="Curiosity"

- Run the project:

      python curiosity.py
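Before the first run it can help to confirm that the .env file really contains the keys listed in the installation steps. The following is a small stand-alone sketch, not part of the Curiosity repository: `parse_env` and `missing_keys` are hypothetical helpers, and treating only the three provider keys as required (with the LangSmith tracing variables optional) is an assumption you may need to adjust to the backends you use.

```python
from pathlib import Path

# Assumption: only the provider keys are required; tracing variables are optional.
REQUIRED_KEYS = ["OPENAI_API_KEY", "GROQ_API_KEY", "TAVILY_API_KEY"]


def parse_env(text: str) -> dict[str, str]:
    """Parse simple KEY=VALUE lines, skipping blanks and # comments."""
    values = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        values[key.strip()] = value.strip().strip('"').strip("'")
    return values


def missing_keys(values: dict[str, str], required=REQUIRED_KEYS) -> list[str]:
    """Return required keys that are absent, empty, or still a <placeholder>."""
    return [k for k in required if not values.get(k) or values[k].startswith("<")]


if __name__ == "__main__":
    env_path = Path(".env")
    text = env_path.read_text() if env_path.exists() else ""
    missing = missing_keys(parse_env(text))
    print("Missing:", ", ".join(missing) if missing else "none")
```

Run it from the project directory; any key it lists is either absent from .env or still set to its placeholder value.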

Guidelines for use

- Start the project: Run python curiosity.py. The project then starts and runs on a local server.

- Select an LLM: Choose the LLM that fits your needs (e.g., gpt-4o-mini, llama3-groq, or llama3.1).

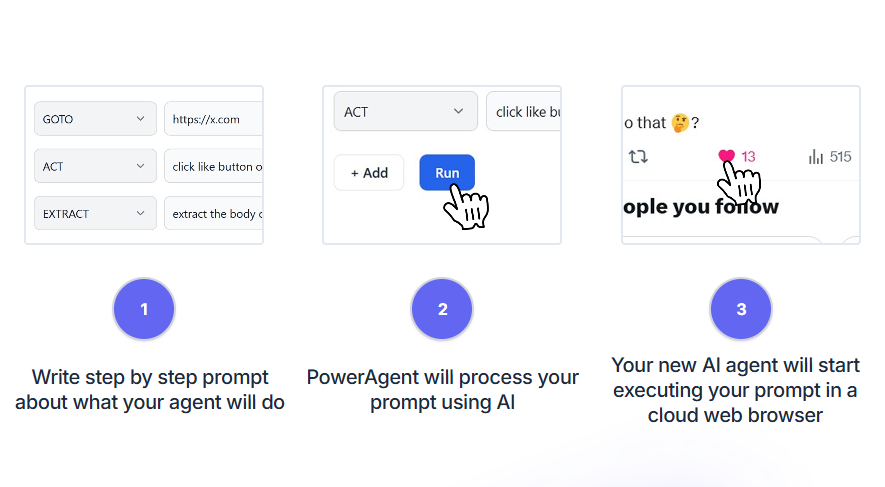

- Search with Tavily: Enter a query in the dialog box, and the ReAct agent uses Tavily search to enhance the generated text.

- Front-end Interaction: The front-end of the project is built using FastHTML and supports WebSockets streaming to ensure real-time response.
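The streaming behavior described above can be pictured as forwarding each piece of the answer to the browser as soon as it is produced, instead of waiting for the complete text. The sketch below shows that pattern with asyncio only. It is not the project's FastHTML code: `fake_token_stream` is a hypothetical stand-in for a streaming LLM, and the `send` callback stands in for whatever WebSocket send call the real front-end uses.

```python
import asyncio
from typing import AsyncIterator, Awaitable, Callable


async def fake_token_stream(text: str) -> AsyncIterator[str]:
    """Hypothetical stand-in for an LLM that yields its answer piece by piece."""
    for word in text.split():
        await asyncio.sleep(0)  # yield control, as a real network wait would
        yield word + " "


async def stream_answer(tokens: AsyncIterator[str],
                        send: Callable[[str], Awaitable[None]]) -> str:
    """Forward each token as soon as it arrives and return the full text."""
    parts = []
    async for token in tokens:
        parts.append(token)
        await send(token)  # in a real app: the WebSocket send call
    return "".join(parts).strip()


sent: list[str] = []


async def record(chunk: str) -> None:
    """Collect chunks in a list instead of sending them over a socket."""
    sent.append(chunk)


full = asyncio.run(
    stream_answer(fake_token_stream("Streaming keeps the UI responsive"), record)
)
print(sent)
print(full)
```

Because the sender is passed in as a callback, the same loop can be exercised in a test with a list recorder and wired to a real connection in the application.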

Common Problems

- How to switch LLMs: Configure the appropriate API key in the .env file, then select the desired LLM when starting the project.

- WebSockets issues: If WebSockets connections close for no apparent reason, check your network connection and server configuration.

© Copyright notice

Copyright of this article belongs to AI Sharing Circle. Please do not reproduce it without permission.
