CogAgent: Zhipu AI's open-source intelligent visual language model for automating graphical interfaces

General Introduction

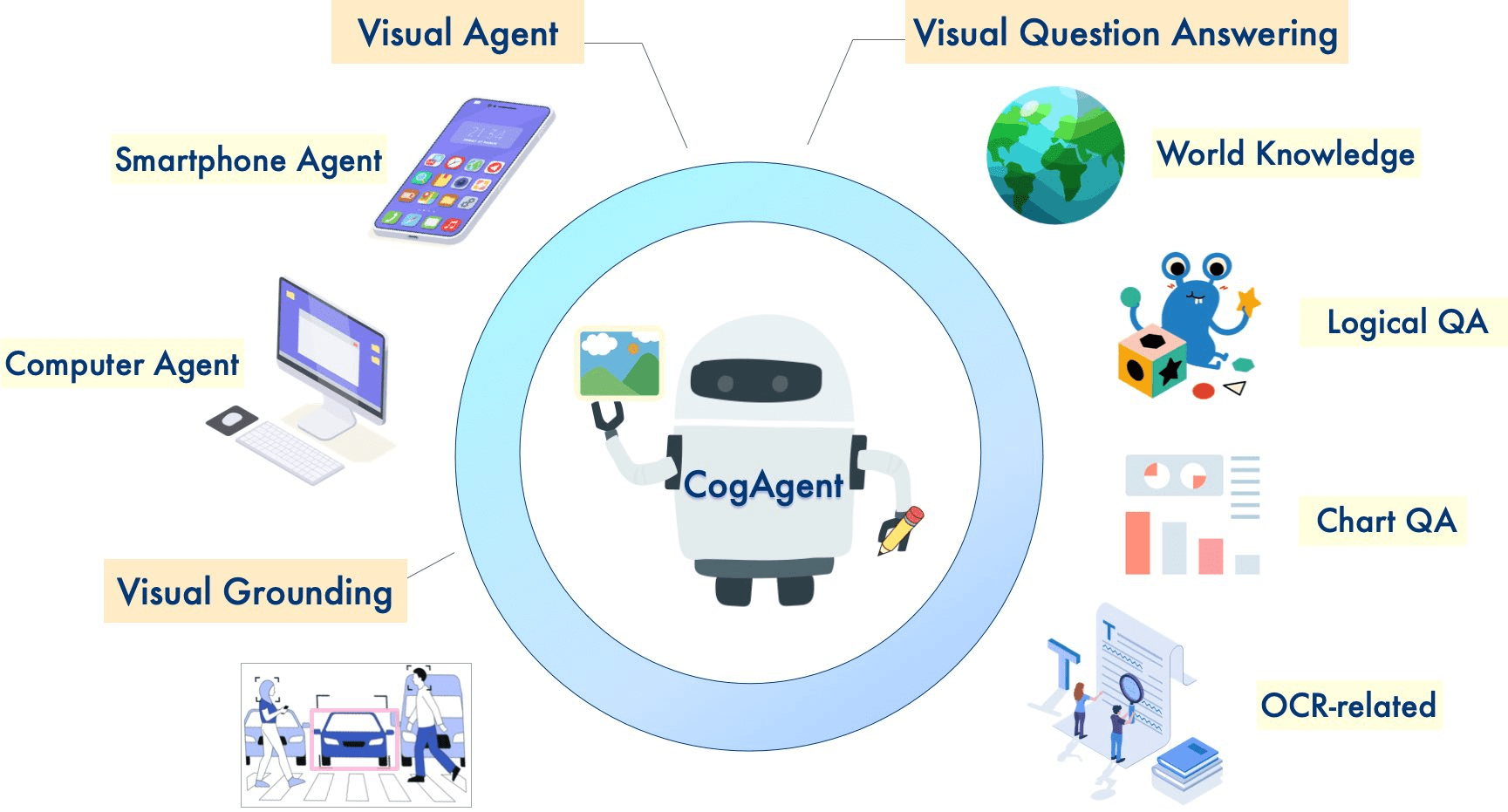

CogAgent is an open-source visual language model developed by the Data Mining Research Group at Tsinghua University (THUDM), designed to automate graphical user interface (GUI) operations across platforms. The latest version is built on GLM-4V-9B (earlier versions were based on CogVLM). It supports bilingual interaction in English and Chinese and carries out tasks from screenshots and natural-language instructions. CogAgent has achieved leading performance on GUI tasks across multiple platforms and categories and works with Windows, macOS, and Android devices. Its latest version, CogAgent-9B-20241220, brings significant improvements in GUI perception, reasoning accuracy, action-space completeness, and task generalization.

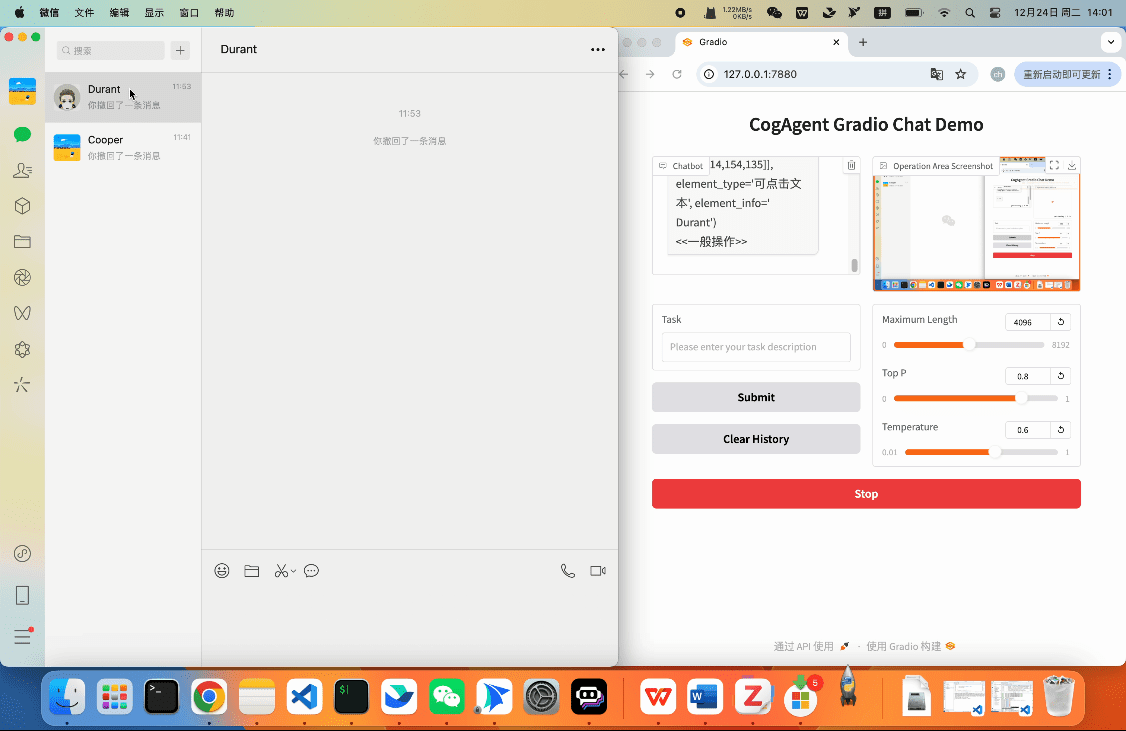

CogAgent-9B-20241220 is built on GLM-4V-9B, an open-source bilingual VLM base model. Through improved data collection and optimization, multi-stage training, and refined strategies, CogAgent-9B-20241220 makes significant advances in GUI perception, inference and prediction accuracy, action-space completeness, and cross-task generalization. The model supports bilingual (Chinese and English) interaction through screenshots and text input. This version of CogAgent is already used in Zhipu AI's GLM-PC product.

Feature List

- High-resolution image understanding and processing (supports 1120x1120 resolution)

- GUI automation

- Cross-platform interface interaction

- Visual question answering (VQA)

- Chart comprehension and analysis (ChartQA)

- Document visual question answering (DocVQA)

- Infographic visual question answering (InfoVQA)

- Scene-text visual question answering (ST-VQA)

- Outside-knowledge visual question answering (OK-VQA)

How to Use

1. Environment setup

1.1 Basic requirements:

- Python 3.8 or higher

- A CUDA-capable GPU

- Sufficient GPU memory (at least 16GB recommended)
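The requirements above can be checked before downloading any weights. This is a minimal sketch, assuming PyTorch is what you will use to query the GPU (it is imported lazily, so the script still runs without it); the function names are illustrative and the thresholds simply mirror the list.

```python
import sys
from typing import List, Optional, Sequence

MIN_PYTHON = (3, 8)  # "Python 3.8 or higher"
MIN_GPU_GB = 16.0    # "at least 16GB recommended" (1 GB = 1e9 bytes)


def check_requirements(py_version: Sequence[int], gpu_mem_gb: Optional[float]) -> List[str]:
    """Return a list of problems; an empty list means every check passed."""
    problems = []
    major_minor = tuple(py_version[:2])
    if major_minor < MIN_PYTHON:
        problems.append("Python %d.%d+ required, found %d.%d" % (MIN_PYTHON + major_minor))
    if gpu_mem_gb is None:
        problems.append("no CUDA-capable GPU detected")
    elif gpu_mem_gb < MIN_GPU_GB:
        problems.append("GPU has %.1f GB, %.0f GB recommended" % (gpu_mem_gb, MIN_GPU_GB))
    return problems


def detect_gpu_memory_gb() -> Optional[float]:
    """Total memory of GPU 0 in GB, or None if PyTorch or CUDA is unavailable."""
    try:
        import torch
    except ImportError:
        return None
    if not torch.cuda.is_available():
        return None
    return torch.cuda.get_device_properties(0).total_memory / 1e9


if __name__ == "__main__":
    issues = check_requirements(sys.version_info, detect_gpu_memory_gb())
    print("\n".join(issues) if issues else "All checks passed.")
```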

1.2 Installation steps:

# Clone the project repository

git clone https://github.com/THUDM/CogAgent.git

cd CogAgent

# Install the dependencies

pip install -r requirements.txt

2. Loading and using the model

2.1 Model download:

- Download the model weights from Hugging Face

- Two versions are supported: cogagent-18b and cogagent-9b.
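The weights can also be fetched from a script with `huggingface_hub.snapshot_download`. In this sketch the short names map to repository IDs that are assumptions (`THUDM/cogagent-9b-20241220` for the 9B release, `THUDM/cogagent-chat-hf` for one of the 18B checkpoints); confirm them on Hugging Face before use. `resolve_repo_id` and `download_weights` are hypothetical helper names.

```python
from typing import Dict

# Assumed mapping from the short names above to Hugging Face repo IDs; verify before use.
REPO_IDS: Dict[str, str] = {
    "cogagent-9b": "THUDM/cogagent-9b-20241220",
    "cogagent-18b": "THUDM/cogagent-chat-hf",
}


def resolve_repo_id(version: str) -> str:
    """Map a short version name to a repo ID, with a clear error for unknown names."""
    try:
        return REPO_IDS[version.lower()]
    except KeyError:
        raise ValueError("unknown version %r; expected one of %s" % (version, sorted(REPO_IDS))) from None


def download_weights(version: str, local_dir: str) -> str:
    """Download every file of the chosen model into local_dir and return the local path."""
    # Imported lazily so the name mapping can be used without the package installed.
    from huggingface_hub import snapshot_download
    return snapshot_download(repo_id=resolve_repo_id(version), local_dir=local_dir)
```

For example, `download_weights("cogagent-9b", "./cogagent-9b")` stores the files locally so later loads can point at that directory instead of the hub.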

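2.2 Basic usage:

import torch
from PIL import Image
from transformers import AutoModelForCausalLM, AutoTokenizer

# Initialize the model (loaded through transformers; device_map="auto" needs the accelerate package)
model_id = "THUDM/cogagent-9b-20241220"
tokenizer = AutoTokenizer.from_pretrained(model_id, trust_remote_code=True)
model = AutoModelForCausalLM.from_pretrained(
    model_id, torch_dtype=torch.bfloat16, trust_remote_code=True, device_map="auto"
).eval()

# Load the screenshot and describe the task in natural language
image = Image.open("path/to/your/image.jpg").convert("RGB")
task_description = "Open the browser and search for CogAgent"  # example task

# Generate the next GUI operation (simplified prompt; see the repository's demo scripts for the exact format)
inputs = tokenizer.apply_chat_template(
    [{"role": "user", "image": image, "content": f"Task: {task_description}"}],
    add_generation_prompt=True, tokenize=True, return_tensors="pt", return_dict=True,
).to(model.device)
with torch.no_grad():
    outputs = model.generate(**inputs, max_new_tokens=512)
gui_command = tokenizer.decode(outputs[0][inputs["input_ids"].shape[1]:], skip_special_tokens=True)
print(gui_command)  # the model returns text; executing the operation is up to your own automation code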
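CogAgent answers with text describing the next operation; carrying it out on the real screen is left to the caller. The parser below is a minimal sketch that assumes an output line such as `CLICK(box=[[x1,y1,x2,y2]], element_info='...')` with box coordinates on a 0 to 1000 grid. Both the format and the grid size are assumptions for illustration, and `ParsedAction`, `parse_action`, and `box_center_px` are hypothetical names; check the output format documented in the CogAgent repository.

```python
import re
from dataclasses import dataclass
from typing import Optional, Tuple

# Assumed format: OPERATION(arg=..., ...) at the end of a line, e.g.
# "CLICK(box=[[120,340,260,380]], element_info='Search')".
_OP_RE = re.compile(r"([A-Z_]+)\((.*)\)\s*$")
_BOX_RE = re.compile(r"box=\[\[(\d+),\s*(\d+),\s*(\d+),\s*(\d+)\]\]")


@dataclass
class ParsedAction:
    op: str                                    # e.g. "CLICK", "TYPE"
    box: Optional[Tuple[int, int, int, int]]   # normalized (x1, y1, x2, y2), if present
    raw_args: str                              # everything inside the parentheses


def parse_action(line: str) -> ParsedAction:
    """Extract the operation name and optional bounding box from one output line."""
    m = _OP_RE.search(line.strip())
    if m is None:
        raise ValueError("no operation found in %r" % line)
    op, args = m.group(1), m.group(2)
    b = _BOX_RE.search(args)
    box = tuple(int(v) for v in b.groups()) if b else None
    return ParsedAction(op, box, args)


def box_center_px(box: Tuple[int, int, int, int], screen_w: int, screen_h: int,
                  grid: int = 1000) -> Tuple[int, int]:
    """Convert a normalized box to the pixel coordinates of its center."""
    x1, y1, x2, y2 = box
    return (round((x1 + x2) / 2 * screen_w / grid), round((y1 + y2) / 2 * screen_h / grid))
```

The regex searches for the last `NAME(...)` call on the line, so a prefix such as `Grounded Operation:` in front of the call is skipped.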

3. Using the main functions

3.1 Image understanding:

- Accepts input images in multiple formats

- Handles images up to 1120x1120 resolution

- Provides detailed image content description and analysis
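If you want to control downscaling yourself instead of leaving it entirely to preprocessing, the size that fits within 1120x1120 while keeping the aspect ratio is a short calculation. A sketch; `fit_within` is an illustrative name, and the model's own image processor may still resize the input afterwards.

```python
from typing import Tuple

MAX_SIDE = 1120  # resolution limit quoted above


def fit_within(width: int, height: int, max_side: int = MAX_SIDE) -> Tuple[int, int]:
    """Scale (width, height) down, keeping the aspect ratio, so both sides are <= max_side.

    Images that already fit are returned unchanged (no upscaling).
    """
    if width <= 0 or height <= 0:
        raise ValueError("image dimensions must be positive")
    scale = min(max_side / width, max_side / height, 1.0)
    return max(1, round(width * scale)), max(1, round(height * scale))
```

With Pillow this plugs in as `img = img.resize(fit_within(*img.size))`; a 1920x1080 screenshot becomes 1120x630.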

3.2 GUI automation:

- Recognizes interface elements

- Performs clicks, drag-and-drop, and text input

- Provides operation validation and error-handling mechanisms
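Click, drag-and-drop, and input operations, together with validation and error handling, can be modeled as a small dispatch layer. This is a hypothetical sketch, not CogAgent's own executor: `Action`, `RecordingBackend`, `validate`, and `execute` are illustrative names, and a real backend would wrap an automation library of your choice.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

Point = Tuple[int, int]

# How many screen points each (hypothetical) action kind needs.
POINTS_NEEDED = {"click": 1, "drag": 2, "type": 0}


@dataclass
class Action:
    kind: str                        # "click", "drag" or "type"
    points: Tuple[Point, ...] = ()   # pixel coordinates
    text: str = ""                   # text to enter, used only by "type"


class RecordingBackend:
    """Dry-run backend: records what would happen instead of touching the real GUI."""

    def __init__(self) -> None:
        self.log: List[str] = []

    def click(self, p: Point) -> None:
        self.log.append("click %d,%d" % p)

    def drag(self, start: Point, end: Point) -> None:
        self.log.append("drag %d,%d -> %d,%d" % (start + end))

    def type_text(self, text: str) -> None:
        self.log.append("type %r" % text)


def validate(action: Action, screen: Tuple[int, int]) -> Optional[str]:
    """Return an error message, or None if the action is safe to run."""
    if action.kind not in POINTS_NEEDED:
        return "unknown action kind %r" % action.kind
    if len(action.points) != POINTS_NEEDED[action.kind]:
        return "%s needs %d point(s)" % (action.kind, POINTS_NEEDED[action.kind])
    width, height = screen
    for x, y in action.points:
        if not (0 <= x < width and 0 <= y < height):
            return "point (%d, %d) is off-screen" % (x, y)
    if action.kind == "type" and not action.text:
        return "type action has no text"
    return None


def execute(action: Action, backend: RecordingBackend, screen: Tuple[int, int]) -> Optional[str]:
    """Validate, then dispatch; returns the validation error instead of raising."""
    error = validate(action, screen)
    if error is not None:
        return error
    if action.kind == "click":
        backend.click(action.points[0])
    elif action.kind == "drag":
        backend.drag(action.points[0], action.points[1])
    else:
        backend.type_text(action.text)
    return None
```

Returning the error instead of raising lets a caller log it, ask the model for a corrected action, or stop the task.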

3.3 Visual Q&A:

- Supports natural-language questions

- Provides detailed answers about image content

- Handles questions that require complex reasoning

4. Performance optimization recommendations

4.1 Memory management:

- Use an appropriate batch size

- Promptly release model instances that are no longer needed

- Control the number of concurrent processing tasks
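The three memory tips above (batch size, cleanup, bounded concurrency) can be combined in a few helpers. A sketch using the standard library plus an optional PyTorch call; `batched`, `run_bounded`, and `free_memory` are illustrative names, and `fn` stands for a hypothetical function that runs inference on one batch.

```python
import gc
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Iterator, List, Sequence, TypeVar

T = TypeVar("T")
R = TypeVar("R")


def batched(items: Sequence[T], batch_size: int) -> Iterator[List[T]]:
    """Yield consecutive batches of at most batch_size items."""
    if batch_size < 1:
        raise ValueError("batch_size must be >= 1")
    for start in range(0, len(items), batch_size):
        yield list(items[start:start + batch_size])


def run_bounded(fn: Callable[[List[T]], R], items: Sequence[T],
                batch_size: int, max_concurrent: int) -> List[R]:
    """Apply fn to each batch with at most max_concurrent batches running at once.

    Results come back in input order.
    """
    with ThreadPoolExecutor(max_workers=max_concurrent) as pool:
        return list(pool.map(fn, batched(items, batch_size)))


def free_memory() -> None:
    """Run the garbage collector and, if PyTorch with CUDA is present, release cached GPU blocks.

    Call it after `del model` so the last reference to the model is really gone.
    """
    gc.collect()
    try:
        import torch
    except ImportError:
        return
    if torch.cuda.is_available():
        torch.cuda.empty_cache()
```

Deleting the caller's own reference (`del model`) matters: a helper cannot free an object that is still referenced elsewhere.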

4.2 Inference speed optimization:

- Use FP16 precision to accelerate inference

- Enable model quantization to reduce resource usage

- Streamline the image preprocessing pipeline
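Quantization saves memory because each weight is stored in fewer bits, so weight memory is roughly parameter count times bits per parameter divided by 8. A back-of-the-envelope sketch: the 9e9 parameter count is a placeholder taken from the model name; take the exact figure from the model card, since the vision encoder may add parameters beyond it. The estimate covers weights only; activations and image tokens come on top, which is one reason measured requirements such as the 29GB BF16 figure quoted later in this article are higher.

```python
def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Memory for the weights alone, in GB (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


if __name__ == "__main__":
    NUM_PARAMS = 9e9  # placeholder; use the exact count from the model card
    for label, bits in [("FP16/BF16", 16), ("INT8", 8), ("INT4", 4)]:
        print("%-9s ~%.1f GB" % (label, weight_memory_gb(NUM_PARAMS, bits)))
```

For the placeholder count this gives about 18, 9, and 4.5 GB, which shows why 8-bit or 4-bit quantization can bring the model within reach of smaller GPUs.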

5. Troubleshooting common problems

5.1 Memory problems:

- Check GPU memory usage

- Reduce the batch size as needed

- Use gradient checkpointing (applies when fine-tuning)
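Reducing the batch size can be automated: retry a batch at half the size whenever it runs out of memory. A sketch in which `fn` is a hypothetical per-batch inference function and `run_with_backoff` is an illustrative name. With PyTorch you would pass `oom_error=torch.cuda.OutOfMemoryError` (available in recent releases) and `on_oom=torch.cuda.empty_cache`; `torch.cuda.mem_get_info()` returns free and total device memory in bytes if you also want to log usage.

```python
from typing import Callable, List, Optional, Sequence, Tuple, Type, TypeVar

T = TypeVar("T")
R = TypeVar("R")


def run_with_backoff(
    fn: Callable[[Sequence[T]], List[R]],
    items: Sequence[T],
    batch_size: int,
    oom_error: Type[BaseException] = MemoryError,
    on_oom: Optional[Callable[[], None]] = None,
) -> Tuple[List[R], int]:
    """Run fn over items in batches, halving the batch size each time fn raises oom_error.

    Returns the concatenated results and the batch size that finally worked.
    Re-raises if even a batch of one item does not fit.
    """
    if batch_size < 1:
        raise ValueError("batch_size must be >= 1")
    results: List[R] = []
    i = 0
    while i < len(items):
        batch = items[i:i + batch_size]
        try:
            results.extend(fn(batch))
        except oom_error:
            if batch_size == 1:
                raise
            if on_oom is not None:
                on_oom()  # e.g. release cached GPU memory before retrying
            batch_size = max(1, batch_size // 2)
            continue  # retry the same items with the smaller batch size
        i += len(batch)
    return results, batch_size
```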

5.2 Accuracy issues:

- Ensure input image quality

- Adjust the model's configuration parameters

- Verify that the preprocessing steps are correct

Main operation workflows

- Single-step operation: Performs single actions, such as opening an application or clicking a button, from a simple natural-language instruction.

- Multi-step operation: Handles complex multi-step tasks, automating workflows through a sequence of instructions.

- Task recording and playback: Records the user's operation history and supports playback for debugging and optimization.

- Error handling: A built-in mechanism recognizes and handles common operation errors so tasks can be completed smoothly.
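These workflows (single-step and multi-step operation, recording and playback, error handling) fit into one control loop. A hypothetical sketch: `capture_screen`, `ask_model`, and `execute` are caller-supplied stand-ins, `Session`, `run_task`, and `replay` are illustrative names, and the `"END"` stop signal is an assumption rather than CogAgent's documented output.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, List


@dataclass
class Step:
    instruction: str  # the model's proposed operation for this step
    ok: bool          # whether executing it succeeded


@dataclass
class Session:
    task: str
    history: List[Step] = field(default_factory=list)  # recorded operation history


def run_task(
    task: str,
    capture_screen: Callable[[], Any],
    ask_model: Callable[[str, Any, List[str]], str],
    execute: Callable[[str], bool],
    max_steps: int = 10,
    max_retries: int = 2,
) -> Session:
    """Screenshot -> model proposes the next operation -> execute -> record, until done."""
    session = Session(task)
    for _ in range(max_steps):
        previous = [step.instruction for step in session.history]
        instruction = ask_model(task, capture_screen(), previous)
        if instruction.strip() == "END":  # assumed stop signal
            break
        # Try up to 1 + max_retries times; any() stops at the first success.
        ok = any(execute(instruction) for _ in range(1 + max_retries))
        session.history.append(Step(instruction, ok))
        if not ok:
            break  # stop rather than keep acting on an unexpected screen state
    return session


def replay(session: Session, execute: Callable[[str], bool]) -> List[bool]:
    """Re-run the recorded operations in order, e.g. to reproduce a failure while debugging."""
    return [execute(step.instruction) for step in session.history]
```

Setting `max_steps=1` gives single-step operation. Blind retries are only safe for idempotent actions; a retried click may trigger a control twice.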

Highlights

- Inference requirements: With BF16 precision, inference needs at least 29GB of GPU memory; an A100 or H100 GPU is recommended.

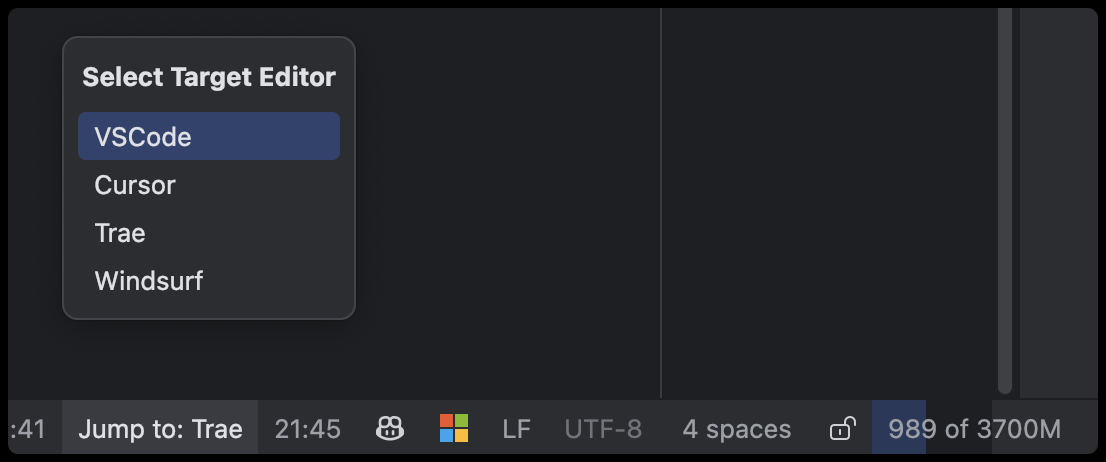

- Flexible deployment: Model weights are available on several platforms, including Hugging Face, ModelScope, and WiseModel.

- Community support: An active open-source community provides technical support and answers questions, helping developers get started quickly.

© Copyright notes

Copyright of this article belongs to AI Sharing Circle. Please do not reproduce it without permission.
