Mistral AI Releases Codestral 25.01 Models: Bigger, Faster, More Powerful Programming-Specific Models

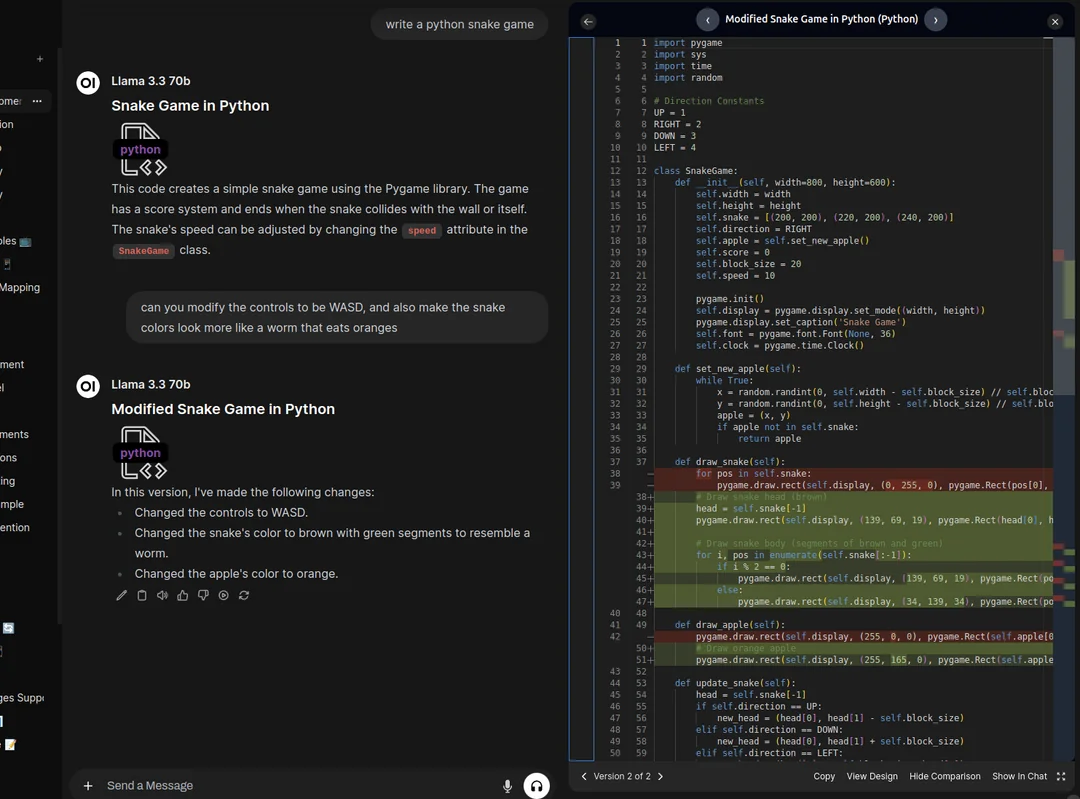

Code at the speed of Tab. Available today in Continue.dev, and coming soon to other leading AI code assistants.

Of all the innovations in AI over the past year, code generation has arguably been the most important. Similar to the way assembly lines simplified manufacturing and calculators changed math, coding models represent a significant change in software development.

Mistral AI has been at the forefront of this change with the introduction of Codestral, a state-of-the-art (SOTA) coding model released earlier this year. Lightweight, fast, and proficient in more than 80 programming languages, Codestral is optimized for low-latency, high-frequency use cases and supports tasks such as Fill-in-the-Middle (FIM), code correction, and test generation. Codestral has been used by thousands of developers as a powerful coding assistant, often increasing productivity several times over. Today, Codestral is undergoing a major upgrade.

Codestral 25.01 features a more efficient architecture and an improved tokenizer compared with the original version, so it generates and completes code approximately 2x faster. The model is now the clear leader for writing code in its weight class and is SOTA across the board for FIM use cases.

Benchmarks

We benchmarked the new Codestral against the leading coding models with fewer than 100B parameters that are widely regarded as the best for FIM tasks.

Overview

| Model | Context length | HumanEval | MBPP | CruxEval | LiveCodeBench |
|---|---|---|---|---|---|
| Codestral-2501 | 256k | 86.6% | 80.2% | 55.5% | 37.9% |
| Codestral-2405 22B | 32k | 81.1% | 78.2% | 51.3% | 31.5% |
| Codellama 70B instruct | 4k | 67.1% | 70.8% | 47.3% | 20.0% |
| DeepSeek Coder 33B instruct | 16k | 77.4% | 80.2% | 49.5% | 27.0% |
| DeepSeek Coder V2 lite | 128k | 83.5% | 83.2% | 49.7% | 28.1% |

By language

| Model | HumanEval Python | HumanEval C++ | HumanEval Java | HumanEval Javascript | HumanEval Bash | HumanEval Typescript | HumanEval C# | HumanEval (average) |
|---|---|---|---|---|---|---|---|---|
| Codestral-2501 | 86.6% | 78.9% | 72.8% | 82.6% | 43.0% | 82.4% | 53.2% | 71.4% |
| Codestral-2405 22B | 81.1% | 68.9% | 78.5% | 71.4% | 40.5% | 74.8% | 43.7% | 65.6% |
| Codellama 70B instruct | 67.1% | 56.5% | 60.8% | 62.7% | 32.3% | 61.0% | 46.8% | 55.3% |
| DeepSeek Coder 33B instruct | 77.4% | 65.8% | 73.4% | 73.3% | 39.2% | 77.4% | 49.4% | 65.1% |
| DeepSeek Coder V2 lite | 83.5% | 68.3% | 65.2% | 80.8% | 34.2% | 82.4% | 46.8% | 65.9% |
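
The "HumanEval (average)" column appears to be the unweighted mean of the seven per-language scores in each row. The source does not state how the average was computed, so treat that as an assumption; the short sketch below simply recomputes it from the numbers in the table, and the function name `macro_average` is illustrative.

```python
# Per-language HumanEval scores copied from the table above, in column order:
# Python, C++, Java, Javascript, Bash, Typescript, C#.
SCORES = {
    "Codestral-2501": [86.6, 78.9, 72.8, 82.6, 43.0, 82.4, 53.2],
    "Codestral-2405 22B": [81.1, 68.9, 78.5, 71.4, 40.5, 74.8, 43.7],
    "Codellama 70B instruct": [67.1, 56.5, 60.8, 62.7, 32.3, 61.0, 46.8],
    "DeepSeek Coder 33B instruct": [77.4, 65.8, 73.4, 73.3, 39.2, 77.4, 49.4],
    "DeepSeek Coder V2 lite": [83.5, 68.3, 65.2, 80.8, 34.2, 82.4, 46.8],
}


def macro_average(scores):
    """Unweighted mean of per-language scores, rounded to one decimal place.

    Assumption: the published average weights every language equally.
    """
    return round(sum(scores) / len(scores), 1)


averages = {model: macro_average(values) for model, values in SCORES.items()}

for model, average in averages.items():
    print(f"{model}: {average}%")
```

Under this assumption the recomputed values agree with the published column for all five models, which suggests each language carries equal weight regardless of how hard it is for the models (Bash and C# pull every average down).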

FIM (single-line exact match)

| Model | HumanEvalFIM Python | HumanEvalFIM Java | HumanEvalFIM JS | HumanEvalFIM (average) |
|---|---|---|---|---|
| Codestral-2501 | 80.2% | 89.6% | 87.96% | 85.89% |
| Codestral-2405 22B | 77.0% | 83.2% | 86.08% | 82.07% |
| OpenAI FIM API* | 80.0% | 84.8% | 86.5% | 83.7% |
| DeepSeek Chat API | 78.8% | 89.2% | 85.78% | 84.63% |
| DeepSeek Coder V2 lite | 78.7% | 87.8% | 85.90% | 84.13% |
| DeepSeek Coder 33B instruct | 80.1% | 89.0% | 86.80% | 85.3% |
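
An exact-match FIM score counts a completion as correct only when the generated line is identical to the line that was removed from the original code. The source does not describe the scoring script, so the following is a minimal sketch under stated assumptions: only the first non-empty line of each completion is compared, and surrounding whitespace is ignored. The helper names and the sample data are hypothetical.

```python
def first_line(text: str) -> str:
    """Return the first non-empty line of text, without surrounding whitespace."""
    for line in text.splitlines():
        if line.strip():
            return line.strip()
    return ""


def single_line_exact_match(predictions, references) -> float:
    """Percentage of predictions whose first line equals the reference line.

    Assumption: whitespace at the ends of a line is ignored; a real harness
    may normalize differently.
    """
    if len(predictions) != len(references):
        raise ValueError("predictions and references must have the same length")
    if not references:
        return 0.0
    hits = sum(
        first_line(pred) == first_line(ref)
        for pred, ref in zip(predictions, references)
    )
    return 100.0 * hits / len(references)


# Hypothetical completions for three masked lines.
predictions = ["    return a + b\n", "return a - b", "x = 1"]
references = ["return a + b", "return a * b", "x = 1"]

score = single_line_exact_match(predictions, references)
print(f"exact match: {score:.1f}%")
```

Exact match is strict: a completion that is functionally equivalent but written differently still counts as a miss, which is one reason these scores sit below the pass@1 figures for the same models.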

FIM pass@1

| Model | HumanEvalFIM Python | HumanEvalFIM Java | HumanEvalFIM JS | HumanEvalFIM (average) |
|---|---|---|---|---|
| Codestral-2501 | 92.5% | 97.1% | 96.1% | 95.3% |
| Codestral-2405 22B | 90.2% | 90.1% | 95.0% | 91.8% |
| OpenAI FIM API* | 91.1% | 91.8% | 95.2% | 92.7% |
| DeepSeek Chat API | 91.7% | 96.1% | 95.3% | 94.4% |

\* GPT 3.5 Turbo is the latest FIM API from OpenAI.
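
For readers unfamiliar with the metric, pass@1 measures how often a single generated completion passes the unit tests for its problem. The source does not say how many samples were drawn per problem, so the sketch below uses the standard unbiased pass@k estimator from the original HumanEval paper, with hypothetical sample counts.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate for one problem.

    n: number of samples generated, c: number of samples that pass the tests,
    k: number of attempts allowed. For k = 1 this reduces to c / n.
    """
    if not 0 <= c <= n or not 1 <= k <= n:
        raise ValueError("require 0 <= c <= n and 1 <= k <= n")
    if n - c < k:
        # Fewer than k failing samples: every draw of k contains a pass.
        return 1.0
    return 1.0 - comb(n - c, k) / comb(n, k)


def benchmark_pass_at_1(results) -> float:
    """Mean pass@1 in percent over (n, c) pairs, one pair per problem."""
    return 100.0 * sum(pass_at_k(n, c, 1) for n, c in results) / len(results)


# Hypothetical results: (samples generated, samples passing) per problem.
results = [(1, 1), (1, 0), (4, 2), (4, 4)]
score = benchmark_pass_at_1(results)
print(f"pass@1: {score:.1f}%")
```

Unlike exact match, pass@1 rewards any completion that makes the tests pass, so it credits correct code that differs textually from the reference.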

Available from today

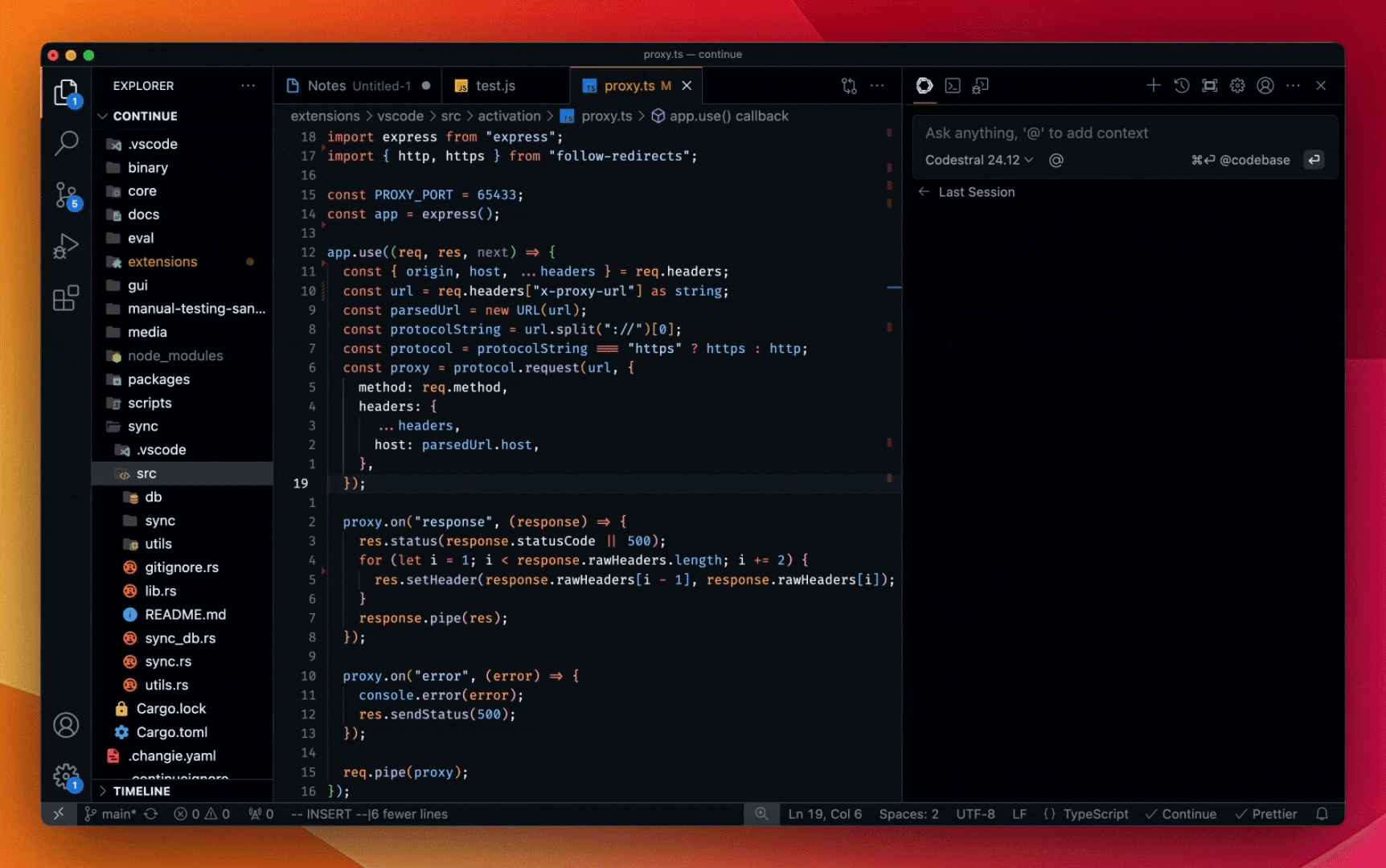

Codestral 25.01 is being rolled out to developers worldwide through our IDE and IDE plugin partners. You can feel the difference in the quality and speed of code completion by selecting Codestral 25.01 in your tool's model selector.

For enterprise use cases, especially those requiring data and model residency, Codestral 25.01 can be deployed locally on your premises or within a VPC.

Check out the demo below, and try it for free in Continue for VS Code or JetBrains.

[Codestral 25.01 chat demo]

Ty Dunn, co-founder of Continue, said, "For AI code assistants, code completion makes up the bulk of the work, which requires models that excel at Fill-in-the-Middle (FIM). Codestral 25.01 marks a significant advancement in this area, and Mistral AI's new model delivers more accurate recommendations faster, a key component of accurate, efficient software development. That's why Codestral is the autocomplete model we recommend to developers."

To build your own integration with the Codestral API, head to la Plateforme and use `codestral-latest`. The API is also available on Google Cloud's Vertex AI, in private preview on Azure AI Foundry, and coming soon to Amazon Bedrock. To learn more, please read the Codestral documentation.
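
As a rough illustration of what such an integration might look like, the sketch below builds a Fill-in-the-Middle request for `codestral-latest` using only the Python standard library. The endpoint URL, the `prompt`/`suffix`/`max_tokens` field names, and the `MISTRAL_API_KEY` environment variable are assumptions based on Mistral's public API conventions rather than anything stated in this article, so check the Codestral documentation before relying on them. The request is constructed but not sent.

```python
import json
import os
import urllib.request

# Assumption: FIM completions endpoint on la Plateforme.
API_URL = "https://api.mistral.ai/v1/fim/completions"


def build_fim_request(prefix, suffix, api_key,
                      model="codestral-latest", max_tokens=64):
    """Build (but do not send) a FIM request.

    prefix is the code before the cursor and suffix is the code after it;
    the model is asked to generate what belongs in between.
    """
    payload = {
        "model": model,
        "prompt": prefix,    # assumed field name for the code before the gap
        "suffix": suffix,    # assumed field name for the code after the gap
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


request = build_fim_request(
    prefix="def fibonacci(n: int) -> int:\n    ",
    suffix="\n\nprint(fibonacci(10))\n",
    api_key=os.environ.get("MISTRAL_API_KEY", "YOUR_API_KEY"),
)

# Sending is left commented out so the sketch runs without network access:
# with urllib.request.urlopen(request) as response:
#     print(json.load(response))
```

Keeping request construction separate from sending makes the payload easy to inspect and test, and the same function can be pointed at a different deployment by changing `API_URL`.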

Codestral 25.01 also debuted at #1 on the LMSYS Copilot Arena leaderboard. We can't wait to hear about your experience!

© Copyright notice

Article copyright belongs to AI Sharing Circle. Please do not reproduce without permission.
