Cloudflare Launches AutoRAG: Managed RAG Service Simplifies AI Application Integration

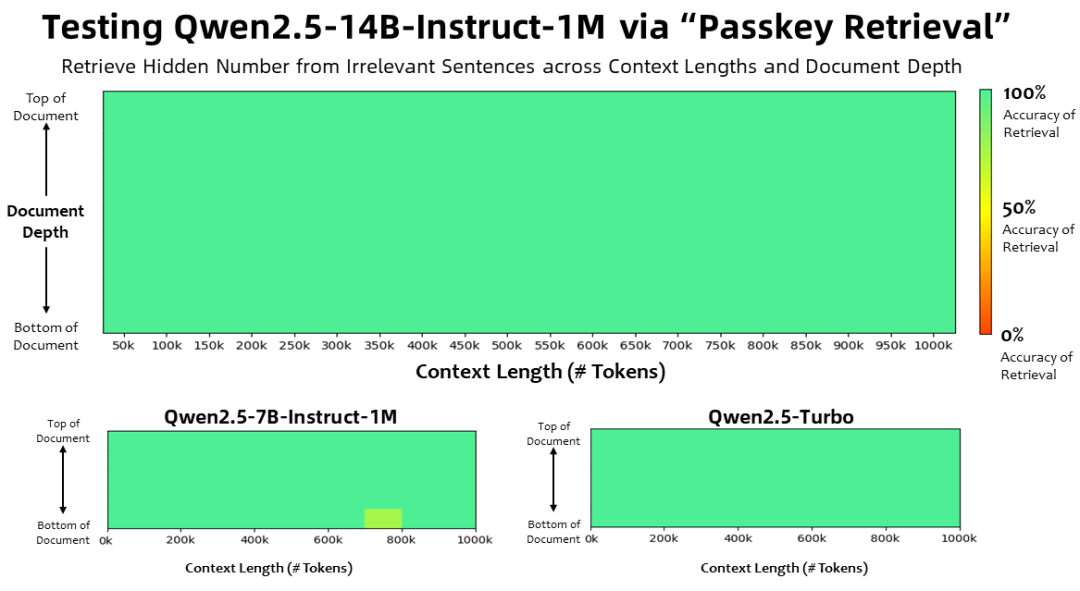

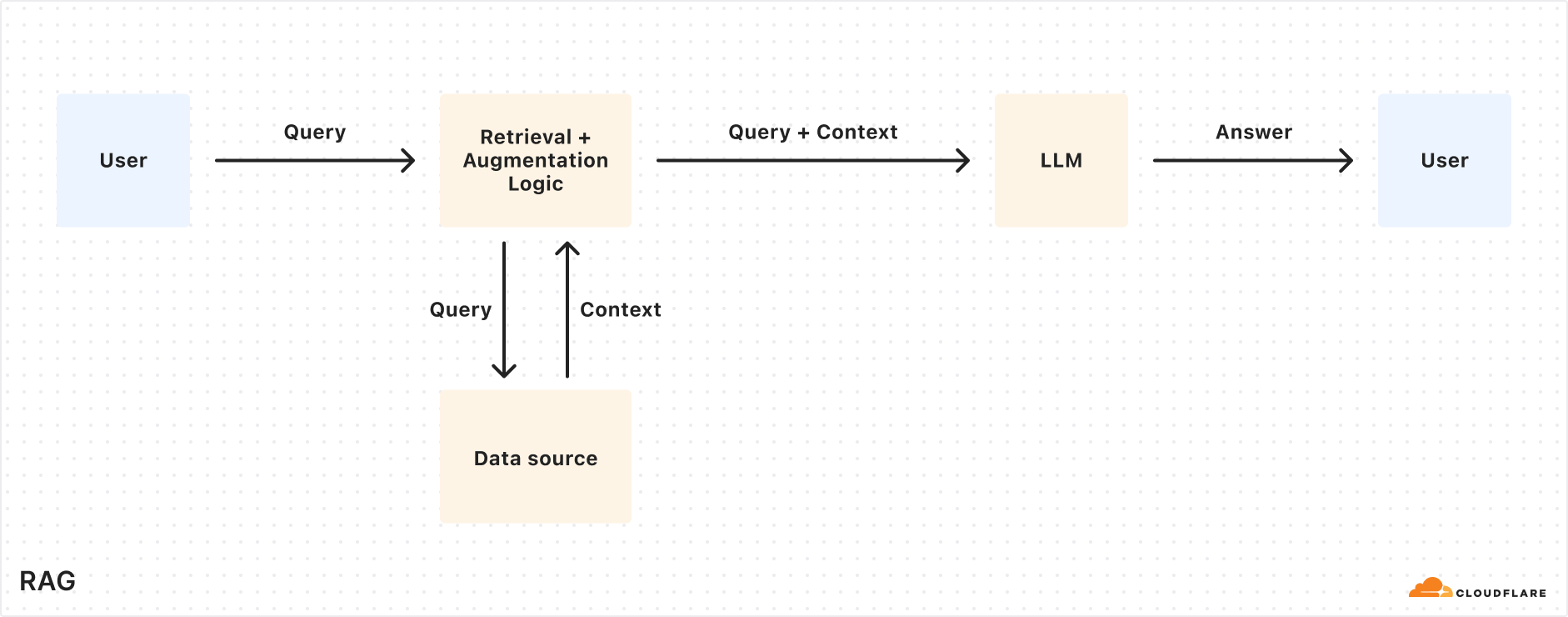

Cloudflare recently announced the open beta of AutoRAG, a fully managed Retrieval-Augmented Generation (RAG) pipeline designed to make it easier for developers to integrate context-aware AI into their applications. RAG improves the accuracy of AI responses by retrieving information from developer-owned data and supplying it to a large language model (LLM), which can then generate more reliable, fact-based answers.

For many developers, building a RAG pipeline is tedious work. It requires integrating multiple tools and services: data stores, vector databases, embedding models, large language models, and custom indexing, retrieval, and generation logic. A lot of "stitching" is needed just to get a project started, and ongoing maintenance is harder still. Whenever the data changes, it has to be re-indexed manually and its embeddings regenerated to keep the system relevant and performant. The expected "ask a question, get an intelligent answer" experience often turns into a complex system of fragile integrations, glue code, and ongoing maintenance.

AutoRAG aims to eliminate this complexity. With a few clicks, developers get an end-to-end, fully managed RAG pipeline. The service covers the entire process: ingesting data, automatically chunking and embedding it, storing the vectors in Cloudflare's Vectorize database, performing semantic search, and using Workers AI to generate high-quality responses. AutoRAG continuously monitors data sources and re-indexes updates in the background, so AI applications stay current without manual intervention. This abstraction lets developers focus on building smarter, faster applications on Cloudflare's developer platform.

The value of RAG applications

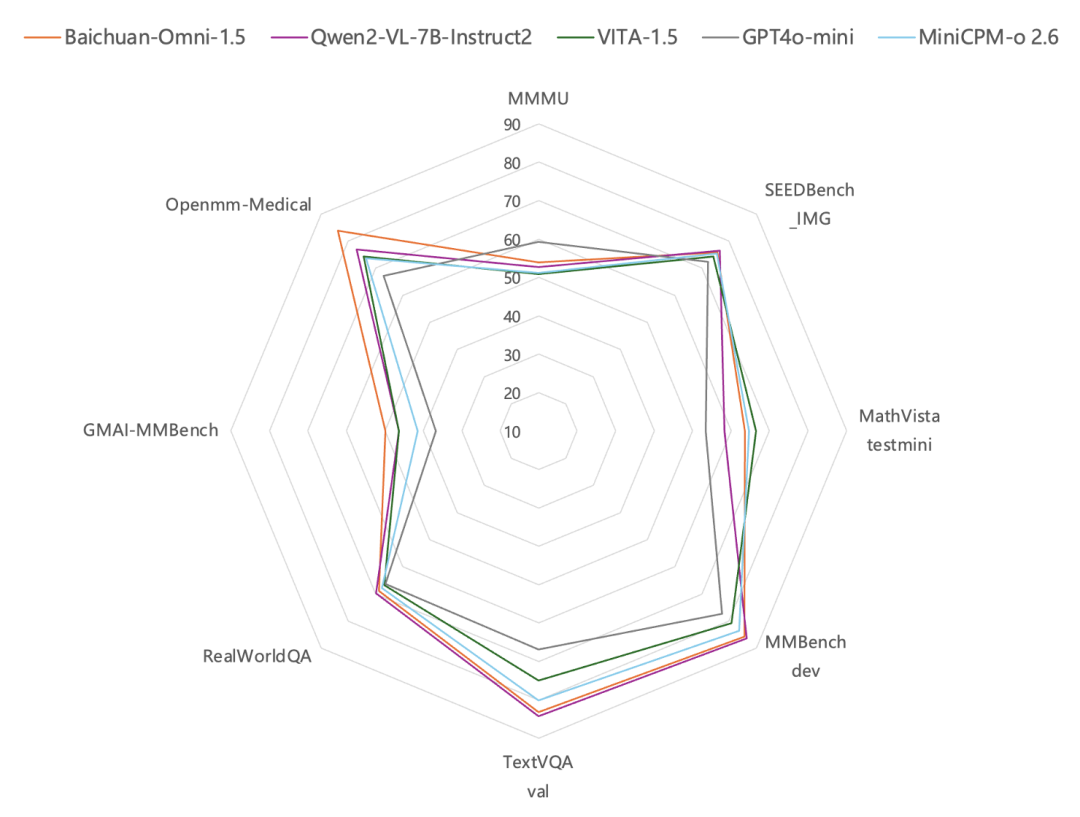

Large language models (such as Meta's Llama 3.3) are powerful, but their knowledge is limited to their training data. When asked about new, proprietary, or domain-specific information, they often struggle to give accurate answers. Supplying relevant information through the system prompt helps, but it increases the input size and is limited by the length of the context window. Another approach is to fine-tune the model, but this is costly and requires continual retraining to keep up with new information.

RAG retrieves relevant information from a specified data source at query time, combines it with the user's input query, and provides both to the LLM to generate a response. This lets the LLM ground its response in data provided by the developer, which helps keep the information accurate and up to date. As a result, RAG is well suited to AI-driven customer support bots, internal knowledge base assistants, semantic document search, and other scenarios that rely on continuously updated information sources. Compared with fine-tuning, RAG is more cost-effective and more flexible when handling data that changes frequently.
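As a rough illustration of the retrieve-then-generate flow described above, the following TypeScript sketch shows how retrieved passages might be combined with a user's question before being sent to an LLM. The `RetrievedChunk` type, the `buildAugmentedPrompt` function, and the prompt wording are illustrative assumptions, not part of AutoRAG, which performs this step internally.

```typescript
// Hypothetical shape of a retrieved passage; not an AutoRAG type.
interface RetrievedChunk {
  source: string; // e.g. a file name in the data source
  text: string;   // the passage returned by the retrieval step
}

// Combine the retrieved passages with the user's question into one prompt.
function buildAugmentedPrompt(query: string, chunks: RetrievedChunk[]): string {
  const context = chunks
    .map((chunk, i) => `[${i + 1}] (${chunk.source}) ${chunk.text}`)
    .join("\n");
  return [
    "Answer the question using only the context below.",
    "If the context is insufficient, say so.",
    "",
    "Context:",
    context,
    "",
    `Question: ${query}`,
  ].join("\n");
}

const examplePrompt = buildAugmentedPrompt("What is AutoRAG?", [
  { source: "intro.html", text: "AutoRAG is a managed RAG pipeline." },
]);
console.log(examplePrompt);
```

Numbering the passages and naming their sources makes it easier to ask the model to cite where an answer came from, although that is a design choice rather than a requirement of RAG.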

How AutoRAG Works

AutoRAG uses existing components of the Cloudflare Developer Platform to configure the RAG pipeline automatically. Developers do not need to write their own code to combine Workers AI, Vectorize, AI Gateway, and other services; they simply create an AutoRAG instance and point it at a data source such as an R2 storage bucket.

At its core, AutoRAG is driven by two processes: indexing and querying.

- Indexing is an asynchronous process that runs in the background. It starts as soon as an AutoRAG instance is created and then runs automatically in cycles, processing new or updated files after each job completes. During indexing, content is converted into vectors optimized for semantic search.

- Querying is a synchronous process triggered when a user sends a search request. On receiving a query, AutoRAG retrieves the most relevant content from the vector database and uses it with an LLM to generate a context-aware response.
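To make the idea of "processing new or updated files" concrete, here is a minimal sketch of how an indexing job could decide which objects need to be indexed again by comparing the ETags recorded in the previous run with the current bucket listing. This is an assumption for illustration only: the article does not describe how AutoRAG detects changes, and `ObjectInfo` and `findFilesToIndex` are hypothetical names.

```typescript
// Hypothetical summary of an object in the data source.
interface ObjectInfo {
  key: string;  // object key, e.g. "docs/intro.html"
  etag: string; // content fingerprint that changes when the object changes
}

// Return the keys that are new or whose content changed since the last run.
function findFilesToIndex(
  previous: Map<string, string>, // key -> etag recorded by the last indexing job
  current: ObjectInfo[],         // objects currently in the data source
): string[] {
  return current
    .filter((obj) => previous.get(obj.key) !== obj.etag)
    .map((obj) => obj.key);
}

const lastRun = new Map([
  ["a.html", "v1"],
  ["b.html", "v1"],
]);
const listing: ObjectInfo[] = [
  { key: "a.html", etag: "v1" }, // unchanged, skipped
  { key: "b.html", etag: "v2" }, // updated, re-indexed
  { key: "c.html", etag: "v1" }, // new, indexed for the first time
];
console.log(findFilesToIndex(lastRun, listing)); // logs the keys b.html and c.html
```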

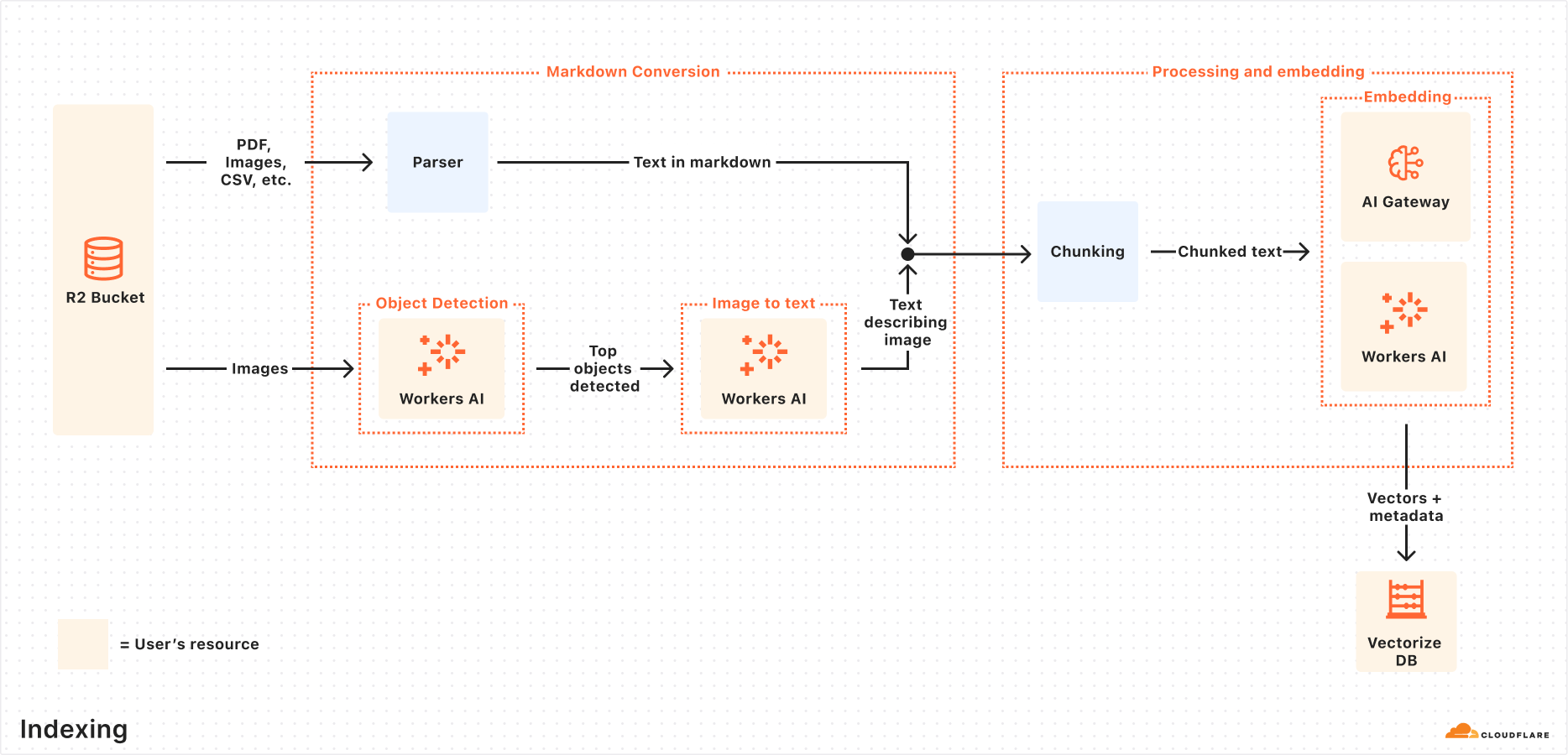

Indexing Process Explained

Once a data source is connected, AutoRAG automatically performs the following steps to extract, transform, and store the data as vectors optimized for semantic search at query time:

- Extract files from the data source: AutoRAG reads files directly from the configured data source. It currently supports Cloudflare R2 and can handle PDF, image, text, HTML, CSV, and many other formats.

- Markdown conversion: The Markdown conversion feature of Workers AI converts every file into structured Markdown, ensuring consistency across file types. For image files, Workers AI performs object detection and then describes the content as Markdown text through vision-to-language conversion.

- Chunking: The extracted text is split into smaller chunks to improve retrieval granularity.

- Embedding: Each chunk is processed by a Workers AI embedding model, which converts the content into a vector representation.

- Vector storage: The resulting vectors, along with metadata such as source location and file name, are stored in an automatically created Cloudflare Vectorize database.
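The chunking step can be pictured with the following sketch, which splits text into fixed-size chunks that overlap slightly, so that text near a boundary appears in both neighboring chunks. This is a deliberately simple strategy chosen for illustration; the article does not specify AutoRAG's chunking algorithm, and `chunkText` and its parameters are hypothetical.

```typescript
// Split text into chunks of at most `size` characters, where each chunk
// repeats the last `overlap` characters of the previous one.
function chunkText(text: string, size: number, overlap: number): string[] {
  if (size <= 0 || overlap < 0 || overlap >= size) {
    throw new RangeError("size must be positive and overlap must be in [0, size)");
  }
  const chunks: string[] = [];
  const step = size - overlap; // how far the window advances each time
  for (let start = 0; start < text.length; start += step) {
    chunks.push(text.slice(start, start + size));
    // Stop once the window has reached the end of the text.
    if (start + size >= text.length) break;
  }
  return chunks;
}

console.log(chunkText("abcdefghij", 4, 1)); // three chunks: abcd, defg, ghij
```

Real pipelines usually measure chunk size in tokens rather than characters and prefer to split on sentence or paragraph boundaries, but the sliding-window idea is the same.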

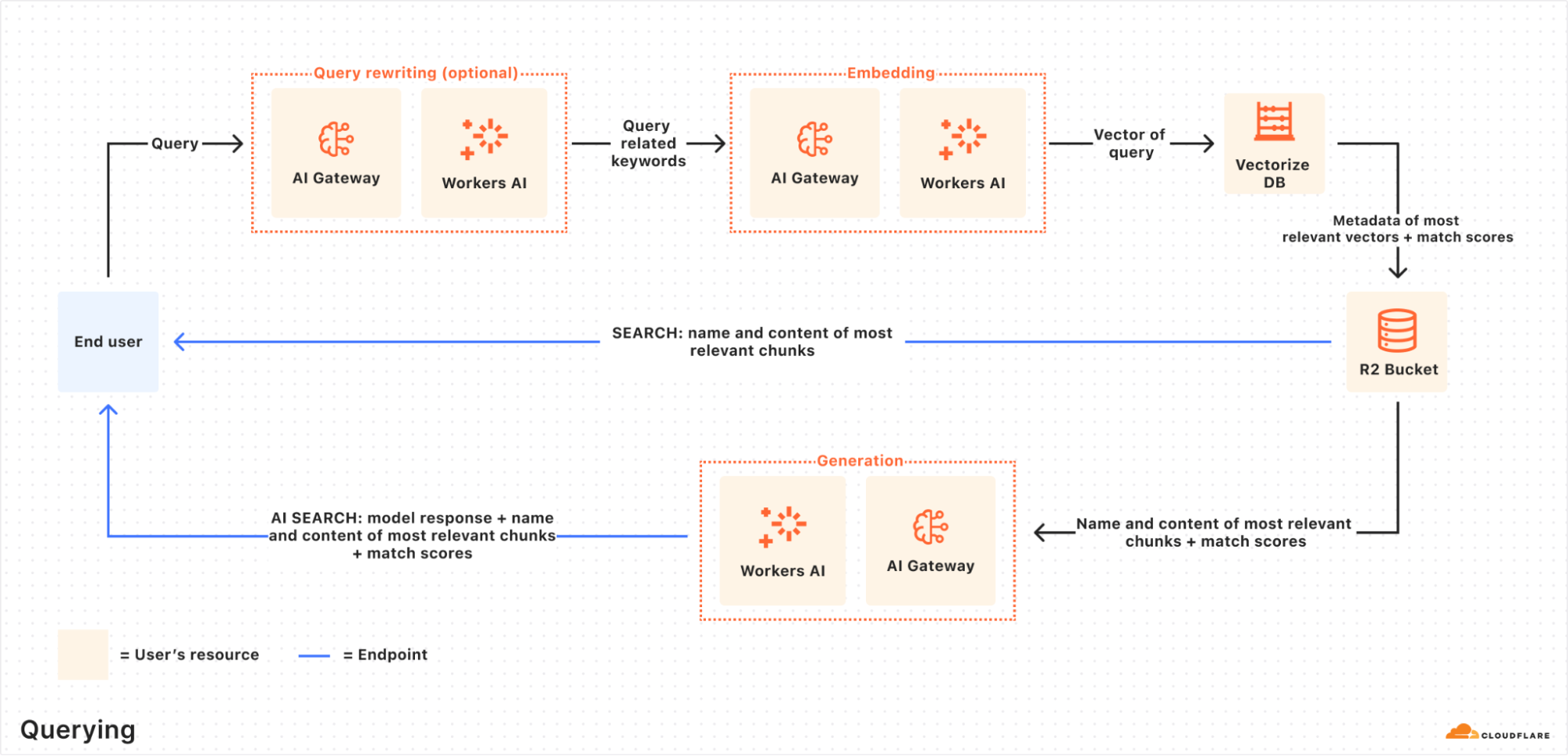

Query Process Explained

When an end user sends a request, AutoRAG coordinates the following operations:

- Receive the query: The query workflow begins when a request is sent to AutoRAG's AI Search or Search API endpoint.

- Query rewriting (optional): AutoRAG can use an LLM from Workers AI to rewrite the original input into a more effective search query, improving retrieval quality.

- Query embedding: The rewritten (or original) query is converted into a vector by the same model used to embed the data, so that it can be compared for similarity with the stored vectors.

- Vector search in Vectorize: The query vector is searched against the Vectorize database associated with the AutoRAG instance to find the most relevant vectors.

- Metadata and content retrieval: Vectorize returns the most relevant chunks and their metadata, and the corresponding original content is retrieved from the R2 bucket. This information is passed to the text generation model.

- Response generation: A Workers AI text generation model uses the retrieved content and the user's original query to generate the final answer.

The end result is an AI-driven answer that is accurate and up-to-date, based on private user data.
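The vector search step boils down to comparing the query embedding with the stored embeddings and keeping the closest matches. The sketch below does this in memory with cosine similarity. It is not how Vectorize is implemented (a vector database relies on indexes built for scale), and the three-dimensional vectors, `StoredVector`, and `topK` are made up for illustration.

```typescript
// Hypothetical stored embedding with an identifier for its source chunk.
interface StoredVector {
  id: string;
  values: number[];
}

// Cosine similarity: 1 means same direction, 0 means unrelated (orthogonal).
function cosineSimilarity(a: number[], b: number[]): number {
  if (a.length !== b.length) throw new RangeError("Vectors must have the same length");
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  if (normA === 0 || normB === 0) return 0; // avoid dividing by zero
  return dot / (Math.sqrt(normA) * Math.sqrt(normB));
}

// Score every stored vector against the query and keep the k best matches.
function topK(query: number[], stored: StoredVector[], k: number): { id: string; score: number }[] {
  return stored
    .map((v) => ({ id: v.id, score: cosineSimilarity(query, v.values) }))
    .sort((x, y) => y.score - x.score)
    .slice(0, k);
}

const storedVectors: StoredVector[] = [
  { id: "pricing", values: [1, 0, 0] },
  { id: "indexing", values: [0, 1, 0] },
  { id: "querying", values: [0.6, 0.8, 0] },
];
const bestMatches = topK([0, 1, 0], storedVectors, 2);
console.log(bestMatches.map((m) => m.id)); // "indexing" first, then "querying"
```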

Hands-on: Rapidly Building RAG Applications with the Browser Rendering API

Getting started with AutoRAG usually just means pointing it at an existing R2 bucket. But what if the content source is a dynamic web page that has to be rendered before it can be fetched? Cloudflare's Browser Rendering API (now generally available) solves this problem. The API lets developers programmatically control headless browser instances to perform common operations such as fetching HTML content, taking screenshots, and generating PDFs.

The following steps outline how to use the Browser Rendering API to crawl website content, store it in R2, and connect it to AutoRAG to enable Q&A over that content.

Step 1: Create Worker to Crawl Web Pages and Upload to R2

First, create a Cloudflare Worker that uses Puppeteer to visit a given URL, render the page, and store the full HTML in an R2 bucket. If you already have a populated R2 bucket, you can skip this step.

- Initialize a new Worker project (for example, browser-r2-worker):

      npm create cloudflare@latest browser-r2-worker

  When prompted, select Hello World Starter, then Worker only, then TypeScript.

- Install @cloudflare/puppeteer:

      npm i @cloudflare/puppeteer

- Create an R2 bucket named html-bucket:

      npx wrangler r2 bucket create html-bucket

- Add the browser rendering and R2 bucket bindings to the wrangler.toml (or wrangler.json) configuration file:

      # wrangler.toml
      compatibility_flags = ["nodejs_compat"]

      [browser]
      binding = "MY_BROWSER"

      [[r2_buckets]]
      binding = "HTML_BUCKET"
      bucket_name = "html-bucket"

- Replace the contents of src/index.ts with the following script, which takes a URL from the POST request, uses Puppeteer to fetch the page HTML, and stores it in R2:

      import puppeteer from "@cloudflare/puppeteer";

      interface Env {
        MY_BROWSER: Fetcher;   // Browser Rendering binding
        HTML_BUCKET: R2Bucket; // R2 bucket binding
      }

      interface RequestBody {
        url: string;
      }

      export default {
        async fetch(request: Request, env: Env): Promise<Response> {
          if (request.method !== "POST") {
            return new Response("Please send a POST request with a target URL", { status: 405 });
          }

          try {
            const body = (await request.json()) as RequestBody;
            // Basic validation; consider adding more robust checks
            if (!body.url) {
              return new Response('Missing "url" in request body', { status: 400 });
            }
            // The URL constructor parses the input and throws if it is invalid
            const targetUrl = new URL(body.url);

            const browser = await puppeteer.launch(env.MY_BROWSER);
            let htmlPage: string;
            try {
              const page = await browser.newPage();
              // Wait until network activity has ceased
              await page.goto(targetUrl.href, { waitUntil: "networkidle0" });
              htmlPage = await page.content();
            } finally {
              // Always release the browser session, even if rendering fails
              await browser.close();
            }

            // Generate a unique key for the R2 object
            const key = `${targetUrl.hostname}_${Date.now()}.html`;
            await env.HTML_BUCKET.put(key, htmlPage, {
              httpMetadata: { contentType: "text/html" }, // Set content type
            });

            return new Response(
              JSON.stringify({ success: true, message: "Page rendered and stored successfully", key }),
              { headers: { "Content-Type": "application/json" } },
            );
          } catch (error) {
            console.error("Error processing request:", error);
            // Return a generic error message to the client
            return new Response(
              JSON.stringify({ success: false, message: "Failed to process request" }),
              { status: 500, headers: { "Content-Type": "application/json" } },
            );
          }
        },
      } satisfies ExportedHandler<Env>;

- Deploy the Worker:

      npx wrangler deploy

- Test the Worker, for example by crawling Cloudflare's AutoRAG announcement blog post:

      curl -X POST https://browser-r2-worker.<YOUR_SUBDOMAIN>.workers.dev \
        -H "Content-Type: application/json" \
        -d '{"url": "https://blog.cloudflare.com/introducing-autorag-on-cloudflare"}'

  Replace <YOUR_SUBDOMAIN> with your Cloudflare Workers subdomain.

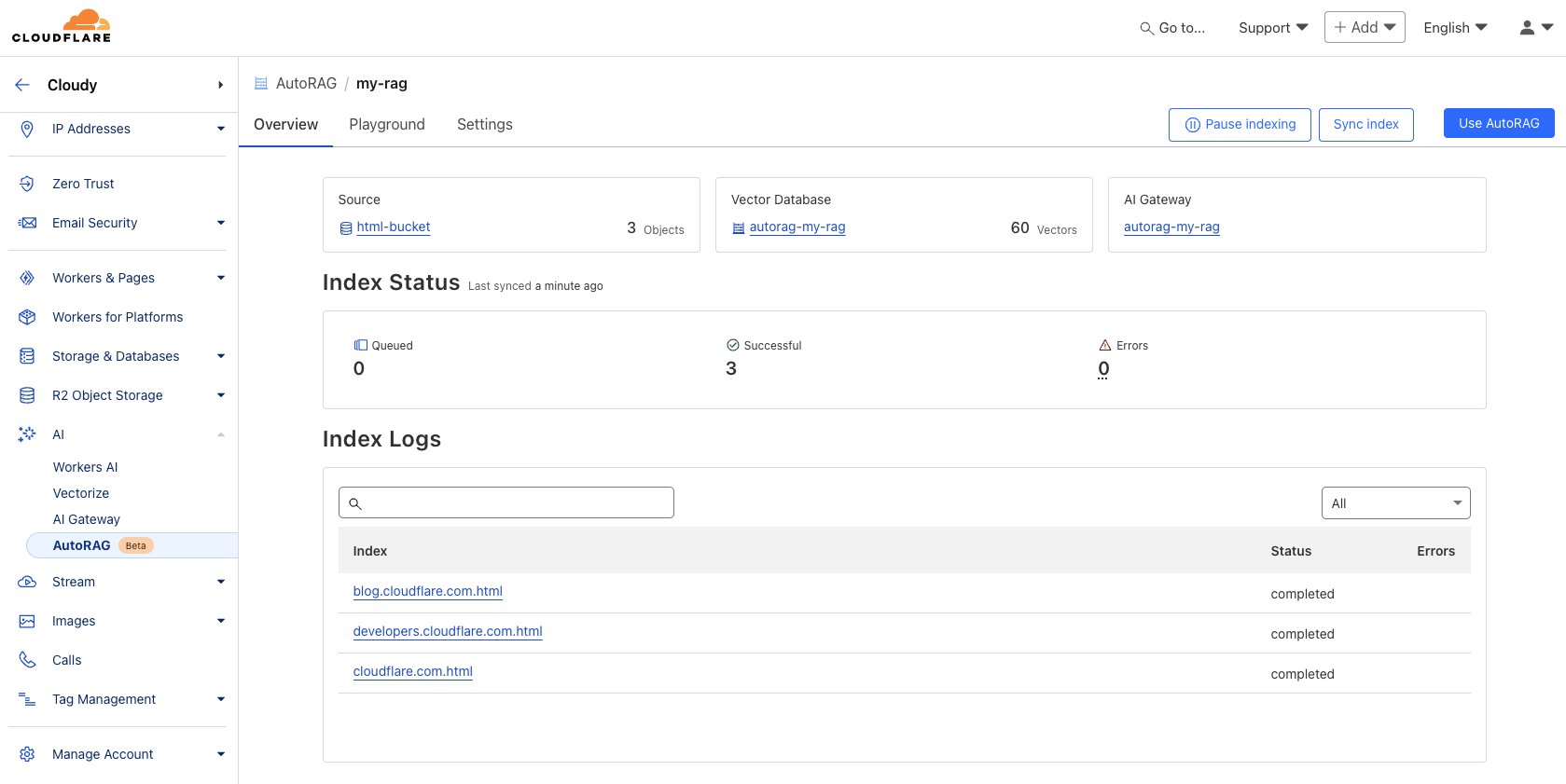
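To crawl more than one page, the curl test from Step 1 can be scripted. The sketch below builds the same POST request for each URL and sends the pages one at a time. `buildCrawlRequest` and `crawlAll` are hypothetical helpers, the Worker URL passed in is a placeholder you would supply, and the response shape (`success`, `key`) is assumed to match the Worker from Step 1.

```typescript
// Minimal description of a request that can be passed to fetch().
interface CrawlRequest {
  endpoint: string;
  init: { method: string; headers: Record<string, string>; body: string };
}

// Build the POST request the Worker expects: a JSON body with a "url" field.
function buildCrawlRequest(workerUrl: string, pageUrl: string): CrawlRequest {
  // The URL constructor throws on malformed input, so bad URLs fail early.
  const target = new URL(pageUrl);
  return {
    endpoint: workerUrl,
    init: {
      method: "POST",
      headers: { "Content-Type": "application/json" },
      body: JSON.stringify({ url: target.href }),
    },
  };
}

// Send the pages sequentially to avoid opening many browser sessions at once.
async function crawlAll(workerUrl: string, pageUrls: string[]): Promise<string[]> {
  const storedKeys: string[] = [];
  for (const pageUrl of pageUrls) {
    const { endpoint, init } = buildCrawlRequest(workerUrl, pageUrl);
    const response = await fetch(endpoint, init);
    const result = (await response.json()) as { success: boolean; key?: string };
    if (result.success && result.key) storedKeys.push(result.key);
  }
  return storedKeys;
}
```

Calling `crawlAll` with your Worker's URL and a list of page URLs would resolve to the R2 keys reported by the Worker, which can be handy for checking that every page was stored before creating the AutoRAG instance.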

Step 2: Create AutoRAG Instance and Monitor Indexes

Once the R2 bucket has been populated, create an AutoRAG instance:

- In the Cloudflare dashboard, navigate to AI > AutoRAG.

- Select "Create AutoRAG" and complete the setup:

  - Select the R2 bucket that contains your knowledge base (in this case, html-bucket).

  - Select the embedding model (the default is recommended).

  - Select the LLM used to generate responses (the default is recommended).

  - Select or create an AI Gateway to monitor model usage.

  - Name the AutoRAG instance (for example, my-rag).

  - Select or create a service API token that authorizes AutoRAG to create and access resources in your account.

- Select "Create" to start the AutoRAG instance.

After creation, AutoRAG automatically creates a Vectorize database and starts indexing the data. You can follow the indexing progress on the Overview page of the AutoRAG instance. Indexing time depends on the number and type of files in the data source.

Step 3: Test and Integrate into Application

Once indexing is complete, you can start querying. In the Playground tab of the AutoRAG instance, ask questions based on the uploaded content, for example "What is AutoRAG?".

Once you are satisfied with the results, AutoRAG can be integrated directly into your application. If you build your application with Cloudflare Workers, you can call AutoRAG directly through the AI binding.

Add the binding to wrangler.toml:

    # wrangler.toml
    [ai]
    binding = "AI"

Then, in the Worker code, call the aiSearch() method (to get an AI-generated answer) or the search() method (to get only a list of search results):

    // Example in a Worker using the AI binding
    interface Env {
      AI: Ai; // AI binding configured in wrangler.toml
    }

    export default {
      async fetch(request: Request, env: Env, ctx: ExecutionContext): Promise<Response> {
        if (!env.AI) {
          return new Response("AI binding not configured.", { status: 500 });
        }

        try {
          // "my-rag" is the name of your AutoRAG instance.
          // aiSearch() retrieves relevant content and returns an AI-generated answer.
          const answer = await env.AI.autorag("my-rag").aiSearch({
            query: "What is AutoRAG?",
          });

          // To get only the list of search results, call search() instead:
          // const searchResults = await env.AI.autorag("my-rag").search({ query: "What is AutoRAG?" });

          return new Response(JSON.stringify(answer), {
            headers: { "Content-Type": "application/json" },
          });
        } catch (error) {
          console.error("Error querying AutoRAG:", error);
          return new Response("Failed to query AutoRAG", { status: 500 });
        }
      },
    };

Note: The Worker example above is a simplified sketch. For the exact invocation of the AutoRAG binding, refer to the official Cloudflare documentation for the latest and most accurate usage.

For more integration details, open the "Use AutoRAG" section of your AutoRAG instance or refer to the developer documentation.
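Applications that do not run on Workers can reach the AI Search and Search endpoints mentioned earlier over HTTP. The sketch below only builds such a request. The URL pattern (`/accounts/{account_id}/autorag/rags/{name}/ai-search`) and the bearer-token header reflect our understanding of Cloudflare's REST API at the time of the beta and should be treated as assumptions to verify against the developer documentation; `buildAutoRagRequest` and the sample identifiers are hypothetical.

```typescript
// "ai-search" returns a generated answer; "search" returns only the retrieved results.
type AutoRagEndpoint = "ai-search" | "search";

interface AutoRagRequest {
  url: string;
  init: { method: string; headers: Record<string, string>; body: string };
}

// Build (but do not send) a request for an AutoRAG instance.
// ASSUMPTION: the path layout below should be checked against the official docs.
function buildAutoRagRequest(
  accountId: string,
  ragName: string,
  apiToken: string,
  query: string,
  endpoint: AutoRagEndpoint = "ai-search",
): AutoRagRequest {
  const base = "https://api.cloudflare.com/client/v4";
  const path = `/accounts/${encodeURIComponent(accountId)}/autorag/rags/${encodeURIComponent(ragName)}/${endpoint}`;
  return {
    url: base + path,
    init: {
      method: "POST",
      headers: {
        "Content-Type": "application/json",
        Authorization: `Bearer ${apiToken}`,
      },
      body: JSON.stringify({ query }),
    },
  };
}

const sampleRequest = buildAutoRagRequest("ACCOUNT_ID", "my-rag", "API_TOKEN", "What is AutoRAG?");
console.log(sampleRequest.url);
```

The returned `url` and `init` can be passed straight to `fetch`. Keeping request construction separate from sending makes it easy to log or test the request without spending any Workers AI usage.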

Strategic significance and developer value

The launch of AutoRAG marks a further deepening of AI capabilities on Cloudflare's developer platform. By packaging the key components of a RAG pipeline (R2, Vectorize, Workers AI, and AI Gateway) as a managed service, Cloudflare significantly lowers the barrier for developers to build intelligent applications on their own data.

For developers, the main value of AutoRAG is convenience. It removes the burden of manually configuring and maintaining a complex RAG infrastructure, and it is especially well suited to teams that need to prototype quickly or lack dedicated MLOps resources. Compared with building a RAG pipeline entirely in-house, it is a trade-off: some low-level control and customization flexibility is given up in exchange for faster development and lower management overhead.

At the same time, it reflects an extension of Cloudflare's platform strategy: delivering an increasingly comprehensive set of compute, storage, and AI services at the edge of its global network to form a tightly integrated ecosystem. Using AutoRAG means integrating more deeply with the Cloudflare technology stack, which raises some vendor lock-in considerations but also brings the benefit of optimized integration between services within the ecosystem.

During the open beta, AutoRAG itself is free to enable, but compute operations such as indexing, retrieval, and augmentation consume usage of underlying services such as Workers AI and Vectorize, so developers need to keep these associated costs in mind. Currently, each account is limited to 10 AutoRAG instances, each of which can handle up to 100,000 documents. These limits may be adjusted in the future.

Roadmap for future development

Cloudflare says that AutoRAG will continue to expand, with more features planned for 2025, for example:

- More data source integrations: In addition to R2, planned support includes direct parsing of website URLs (using browser rendering) and structured data sources such as Cloudflare D1.

- Better response quality: Built-in techniques such as reranking and recursive chunking are being explored to improve the relevance and quality of generated answers.

AutoRAG's development direction will continue to be adjusted based on user feedback and application scenarios.

Try It Now

Developers interested in AutoRAG can visit the AI > AutoRAG section of the Cloudflare dashboard to start creating and experimenting. Whether you are building an AI search experience, an internal knowledge assistant, or just trying out an LLM application, AutoRAG offers a quick way to start a RAG project on Cloudflare's global network. More detailed information can be found in the developer documentation. The Browser Rendering API, now generally available, is also worth a look.

© Copyright notice

Article copyright belongs to AI Sharing Circle. Please do not reproduce without permission.
