Cloudflare AI Gateway Configuration Guide: Centralizing AI API Calls

With the rise of Large Language Models (LLMs) and other AI services, developers increasingly integrate these capabilities into their applications. However, calling the API endpoints of providers such as OpenAI and Hugging Face directly often brings a series of management challenges:

- Non-transparent costs: As the volume of API requests grows, so do the associated costs. However, it is often difficult for developers to accurately track which specific calls are consuming the most resources and which are invalid or redundant requests, making cost optimization difficult.

- Performance fluctuations: During periods of high concurrency, the API response time of the AI service may increase, and occasional timeouts may occur, which directly affects the user experience and the stability of the application.

- Lack of monitoring: Without a unified platform for monitoring key metrics, such as the features users call most often, the prompts that work best, and the types and frequency of failed requests, service optimization has no data to rely on.

- IP Address Restrictions and Reputation Issues: Some AI services have strict restrictions or policies on the IP address from which requests originate. Initiating a call using a shared or poorly-reputed IP address may cause the request to fail or even affect the quality of the model output.

To address these challenges, Cloudflare launched AI Gateway. It is a freely available intelligent proxy layer that sits between the application and the back-end AI service, focusing on the management, monitoring, and optimization of AI requests rather than performing the AI computation itself.

The core value of Cloudflare AI Gateway lies in unified analytics and logging, intelligent caching, rate limiting, and automatic retries. By routing AI request traffic through AI Gateway, developers get a centralized control point that addresses the pain points above. In particular, its global network nodes can act as trusted relays, alleviating problems caused by IP address restrictions or geographic location.

The following steps show how to configure and use Cloudflare AI Gateway.

Step 1: Create an AI Gateway instance

The configuration process is straightforward, provided you have a Cloudflare account (free to create if you are not already registered).

- Log in to the Cloudflare console: Go to dashboard.cloudflare.com.

- Navigate to AI: Find and click the "AI" option in the left menu.

- Select AI Gateway: Under the AI submenu, click "AI Gateway".

- Create a gateway: Click the prominent "Create Gateway" button on the page.

- Name the gateway: Assign a clear name to the gateway, e.g. my-app-gateway. This name will become part of the URL of subsequent API endpoints.

- (Optional) Add a description: Descriptive information can be added to make later management easier.

- Confirm creation: After entering the name and description, confirm the creation.

After successful creation, the system displays the gateway details page. The key piece of information is the Gateway Endpoint URL; be sure to record it. The basic format is as follows:

https://gateway.ai.cloudflare.com/v1/<ACCOUNT_TAG>/<GATEWAY_NAME>/<PROVIDER>

- <ACCOUNT_TAG>: The unique identifier of the Cloudflare account, which is populated automatically.

- <GATEWAY_NAME>: The name set for the gateway in the previous step, for example my-app-gateway.

- <PROVIDER>: The identifier of the target AI service provider, e.g. openai, workers-ai, or huggingface. This tells AI Gateway which back-end service to forward the request to.
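The URL format above can be assembled with a small helper. This is a minimal sketch, not part of any Cloudflare SDK: the function name build_gateway_url and the constant GATEWAY_ROOT are hypothetical, and the account tag is a placeholder.

```python
from urllib.parse import quote

# Root of the endpoint format shown above.
GATEWAY_ROOT = "https://gateway.ai.cloudflare.com/v1"


def build_gateway_url(account_tag: str, gateway_name: str, provider: str) -> str:
    """Assemble <root>/<ACCOUNT_TAG>/<GATEWAY_NAME>/<PROVIDER> (hypothetical helper)."""
    parts = [account_tag, gateway_name, provider]
    if not all(parts):
        raise ValueError("account_tag, gateway_name and provider must all be non-empty")
    # Percent-encode each segment so a stray "/" or space cannot alter the path.
    return "/".join([GATEWAY_ROOT] + [quote(part, safe="") for part in parts])


# Placeholder values; replace them with the ones shown on the gateway details page.
print(build_gateway_url("YOUR_CLOUDFLARE_ACCOUNT_TAG", "my-app-gateway", "openai"))
```

Changing only the last argument (for example to workers-ai) yields the endpoint for a different provider on the same gateway.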

Step 2: Integrate AI Gateway into the application

This is the core step: modify the application code so that the base URL used to reach the AI service API directly is replaced with the Cloudflare AI Gateway URL obtained in the previous step.

Important note: Only the base URL needs to be replaced. The request path, query parameters, request headers (including the API key), and so on should remain unchanged. AI Gateway securely passes the request header containing the API key through to the final AI service provider.
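To make the note concrete, the sketch below builds the same request twice with Python's standard library, once against the OpenAI base URL and once against the gateway base URL, without sending either. The helper name build_chat_request and the key "sk-placeholder" are illustrative assumptions; the account tag and gateway name are placeholders.

```python
import json
import urllib.request

DIRECT_BASE = "https://api.openai.com/v1"
# Placeholder account tag and gateway name; substitute your own values.
GATEWAY_BASE = (
    "https://gateway.ai.cloudflare.com/v1/"
    "YOUR_CLOUDFLARE_ACCOUNT_TAG/my-app-gateway/openai"
)


def build_chat_request(base_url: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a chat completion request against base_url."""
    body = json.dumps({
        "model": "gpt-3.5-turbo",
        "messages": [{"role": "user", "content": "Hello"}],
    }).encode("utf-8")
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",  # the request path is the same in both cases
        data=body,
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",  # the API key header is unchanged
        },
        method="POST",
    )


direct = build_chat_request(DIRECT_BASE, "sk-placeholder")
via_gateway = build_chat_request(GATEWAY_BASE, "sk-placeholder")

# Only the URL differs; headers and body are identical.
print(direct.full_url)
print(via_gateway.full_url)
print(direct.headers == via_gateway.headers, direct.data == via_gateway.data)
```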

Example 1: Using the OpenAI SDK in a Python Application

Assume the original code calls the OpenAI API directly, as follows:

# --- Original code (calling OpenAI directly) ---
import os

import openai

# Load the OpenAI API key from an environment variable
client = openai.OpenAI(api_key=os.environ.get("OPENAI_API_KEY"))
# The default base_url points to https://api.openai.com/v1

try:
    response = client.chat.completions.create(
        model="gpt-3.5-turbo",
        messages=[{"role": "user", "content": "Hello, world!"}]
    )
    print("OpenAI Direct Response:", response.choices[0].message.content)
except Exception as e:
    print(f"Error: {e}")

Modified to send the request through AI Gateway:

# --- Modified code (through Cloudflare AI Gateway) ---
import os

import openai

# Cloudflare account tag and gateway name (replace with your actual values)
cf_account_tag = "YOUR_CLOUDFLARE_ACCOUNT_TAG"
cf_gateway_name = "my-app-gateway"

# Build the AI Gateway URL that points to OpenAI
# Note: the trailing /openai is the provider identifier
gateway_url = f"https://gateway.ai.cloudflare.com/v1/{cf_account_tag}/{cf_gateway_name}/openai"

# The OpenAI API key stays the same and is loaded from an environment variable
api_key = os.environ.get("OPENAI_API_KEY")

# Core change: pass the base_url parameter when initializing the OpenAI client
client = openai.OpenAI(
    api_key=api_key,
    base_url=gateway_url  # Points to Cloudflare AI Gateway
)

try:
    # The model, messages, and other request parameters stay the same
    response = client.chat.completions.create(
        model="gpt-3.5-turbo",
        messages=[{"role": "user", "content": "Hello, world! (via Gateway)"}]
    )
    print("Via AI Gateway Response:", response.choices[0].message.content)
except Exception as e:
    print(f"Error hitting Gateway: {e}")

The key change is passing the base_url parameter when initializing the OpenAI client, so that it points to the AI Gateway URL.

Example 2: Using the fetch API in JavaScript (Node.js or browser)

Assume the original code calls OpenAI directly with fetch:

// --- Original code (fetching OpenAI directly) ---
const apiKey = process.env.OPENAI_API_KEY; // or obtain it some other way
const openAIBaseUrl = "https://api.openai.com/v1";

fetch(`${openAIBaseUrl}/chat/completions`, {
  method: 'POST',
  headers: {
    'Content-Type': 'application/json',
    'Authorization': `Bearer ${apiKey}`
  },
  body: JSON.stringify({
    model: "gpt-3.5-turbo",
    messages: [{"role": "user", "content": "Hello from fetch!"}]
  })
})
  .then(res => res.json())
  .then(data => console.log("OpenAI Direct Fetch:", data.choices[0].message.content))
  .catch(err => console.error("Error:", err));

Modified to send the request through AI Gateway:

// --- Modified code (fetching through AI Gateway) ---
const apiKey = process.env.OPENAI_API_KEY;
const cfAccountTag = "YOUR_CLOUDFLARE_ACCOUNT_TAG"; // replace with your actual value
const cfGatewayName = "my-app-gateway"; // replace with your actual value

// Build the AI Gateway URL
const gatewayBaseUrl = `https://gateway.ai.cloudflare.com/v1/${cfAccountTag}/${cfGatewayName}/openai`;

// Core change: replace the base part of the request URL
fetch(`${gatewayBaseUrl}/chat/completions`, { // URL points to the Gateway
  method: 'POST',
  headers: {
    'Content-Type': 'application/json',
    'Authorization': `Bearer ${apiKey}` // the API key is still sent in the header
  },
  body: JSON.stringify({ // the request body is unchanged
    model: "gpt-3.5-turbo",
    messages: [{"role": "user", "content": "Hello from fetch via Gateway!"}]
  })
})
  .then(res => res.json())
  .then(data => console.log("Via AI Gateway Fetch:", data.choices[0].message.content))
  .catch(err => console.error("Error hitting Gateway:", err));

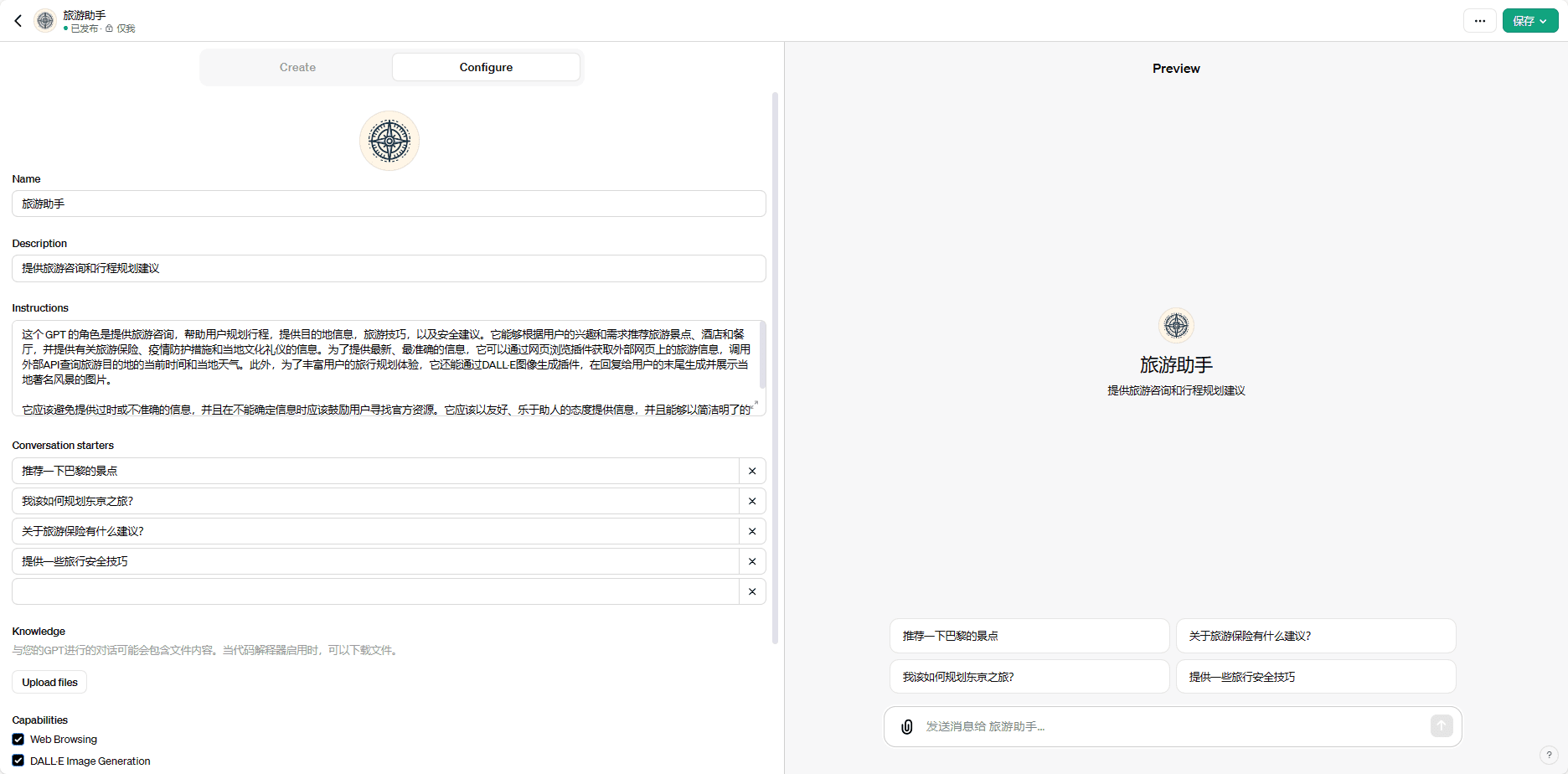

Regardless of the programming language or framework used (e.g. LangChain, LlamaIndex etc.), the basic idea of integration is the same: find the place where the AI service API endpoint or base URL is configured and replace it with the corresponding Cloudflare AI Gateway URL.
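One way to keep that replacement in a single place is to resolve the base URL from configuration. The sketch below is only an illustration under stated assumptions: the environment variable names CF_ACCOUNT_TAG and CF_GATEWAY_NAME and the helper resolve_base_url are hypothetical, not something Cloudflare or any SDK defines.

```python
import os
from typing import Mapping

DEFAULT_OPENAI_BASE = "https://api.openai.com/v1"
GATEWAY_ROOT = "https://gateway.ai.cloudflare.com/v1"


def resolve_base_url(env: Mapping[str, str] = os.environ) -> str:
    """Return the gateway URL when both settings are present, else the direct URL.

    CF_ACCOUNT_TAG and CF_GATEWAY_NAME are hypothetical variable names
    chosen for this sketch.
    """
    account_tag = env.get("CF_ACCOUNT_TAG", "").strip()
    gateway_name = env.get("CF_GATEWAY_NAME", "").strip()
    if account_tag and gateway_name:
        return f"{GATEWAY_ROOT}/{account_tag}/{gateway_name}/openai"
    return DEFAULT_OPENAI_BASE


# Without the two variables the application keeps calling OpenAI directly.
print(resolve_base_url({}))
# With both set, every caller that uses this value goes through the gateway.
print(resolve_base_url({"CF_ACCOUNT_TAG": "abc123", "CF_GATEWAY_NAME": "my-app-gateway"}))
```

The returned string can then be passed wherever the framework accepts a base URL, for example as the base_url argument of the OpenAI client in Example 1.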

After completing the code changes and deploying the application, all requests to the target AI service will flow through AI Gateway.

Step 3: Utilize AI Gateway's Built-in Features

Return to the AI Gateway management page in the Cloudflare console to observe and use the features it offers:

- Analytics: Provides visual charts of key metrics such as request volume, success rate, error distribution, token usage, estimated cost, and latency (P95/P99). This helps to quickly identify performance bottlenecks and cost outliers.

- Logs: Provides a detailed log entry for each request, including timestamp, status code, target model, partial request/response metadata, elapsed time, and cache status. It is an important tool for troubleshooting and user behavior analysis.

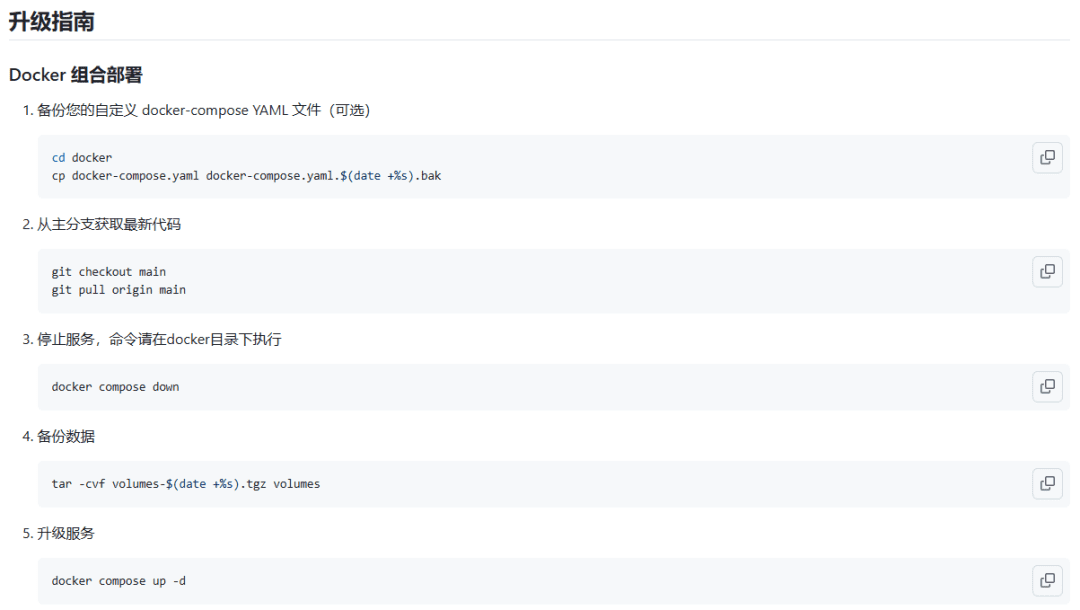

- Caching: Caching can be enabled on the gateway's "Settings" page by setting an appropriate TTL (Time-To-Live), for example 1 hour (3600 seconds). AI Gateway can cache responses to identical requests (same prompt, model, etc.). During the TTL validity period, subsequent identical requests are answered by the gateway directly from the cache, which is extremely fast and does not consume tokens or compute resources from the back-end AI service provider. This is particularly effective for scenarios with repeated requests, such as AI-generated content (e.g., product descriptions), and can significantly reduce cost and latency.

- Rate Limiting: Rate limiting rules can also be configured on the "Settings" page. You can limit the frequency of requests based on IP address or on custom request headers (e.g., one carrying a user ID or a specific API key). For example, you can set "Allow up to 20 requests per minute for a single IP address". This helps prevent malicious attacks or resource misuse caused by program errors.
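The caching and rate limiting behavior described above can be pictured with a small in-memory model. This is a conceptual sketch only and says nothing about how Cloudflare implements either feature; the class names, the SHA-256 cache key, and the fixed-window counting strategy are all assumptions made for illustration.

```python
import hashlib
import json
import time
from typing import Callable, Dict, Tuple


class TTLResponseCache:
    """Serves identical requests from memory until their TTL expires."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock
        self._store: Dict[str, Tuple[float, str]] = {}  # key -> (expiry, response)

    @staticmethod
    def key_for(request_body: dict) -> str:
        # Identical model + messages serialize to the same canonical JSON string.
        canonical = json.dumps(request_body, sort_keys=True, separators=(",", ":"))
        return hashlib.sha256(canonical.encode("utf-8")).hexdigest()

    def get_or_call(self, request_body: dict,
                    call_backend: Callable[[dict], str]) -> Tuple[str, bool]:
        """Return (response, was_cache_hit); the backend is called only on a miss."""
        key = self.key_for(request_body)
        now = self.clock()
        entry = self._store.get(key)
        if entry is not None and now < entry[0]:
            return entry[1], True
        response = call_backend(request_body)
        self._store[key] = (now + self.ttl, response)
        return response, False


class FixedWindowRateLimiter:
    """Allows at most `limit` requests per window for each client key (e.g. an IP)."""

    def __init__(self, limit: int, window_seconds: float,
                 clock: Callable[[], float] = time.monotonic):
        self.limit = limit
        self.window = window_seconds
        self.clock = clock
        # Old windows are never evicted here; a real limiter would clean them up.
        self._counts: Dict[Tuple[str, int], int] = {}

    def allow(self, client_key: str) -> bool:
        bucket = (client_key, int(self.clock() // self.window))
        count = self._counts.get(bucket, 0)
        if count >= self.limit:
            return False
        self._counts[bucket] = count + 1
        return True
```

With TTLResponseCache(3600), a second identical request inside the hour returns the stored response without calling the backend, which is where the token savings come from. FixedWindowRateLimiter(20, 60) models the "20 requests per minute for a single IP address" rule from the example above.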

Strategic Value

For developers and teams that need to integrate and manage one or more AI services, Cloudflare AI Gateway provides a centralized, easy-to-manage solution. It not only addresses the cost, performance, and monitoring challenges common to direct API calls, but also adds a layer of optimization and protection through features such as caching and rate limiting. Because developers no longer need to build and maintain their own proxy, caching, and monitoring systems, AI Gateway greatly reduces complexity and workload. As AI adoption deepens, unified management through a gateway service of this kind is critical to keeping systems robust, controlling operational costs, and leaving room for flexible expansion in the future.

© Copyright notice

This article is copyrighted by AI Sharing Circle. Please do not reproduce it without permission.
