Claude API Introduces Long Text Caching to Improve Processing Efficiency and Reduce Costs

Anthropic recently announced a new Claude API feature, long text caching (officially named prompt caching and released as a public beta), designed to make working with long inputs faster and cheaper.

The core benefit of the feature is that it lets Claude "remember" long inputs between API calls, such as entire books, complete codebases, or large documents. Once this content is cached, subsequent requests that reuse it do not have to be processed from scratch, which significantly reduces processing time and cost.
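As a minimal sketch, here is what marking content for caching can look like in a request body. It assumes the Messages API's `cache_control` field as described in Anthropic's prompt caching documentation. The helper name `build_cached_request` and the sample text are hypothetical, and only the JSON shape follows the docs. The long document is placed in a system content block marked `{"type": "ephemeral"}`, so everything up to and including that block forms the cached prefix. The question, which changes on every call, stays outside the cached prefix.

```python
import json


def build_cached_request(document: str, question: str,
                         model: str = "claude-3-5-sonnet-20240620") -> dict:
    """Build a Messages API request body whose long document is cacheable.

    Hypothetical helper; field names follow Anthropic's prompt caching docs.
    """
    return {
        "model": model,
        "max_tokens": 1024,
        "system": [
            # Short, stable instructions: part of the cached prefix.
            {"type": "text", "text": "You answer questions about the attached document."},
            # The long document. The cache breakpoint on this block caches
            # everything up to and including it.
            {
                "type": "text",
                "text": document,
                "cache_control": {"type": "ephemeral"},
            },
        ],
        # The per-call question comes after the breakpoint and is not cached.
        "messages": [{"role": "user", "content": question}],
    }


if __name__ == "__main__":
    body = build_cached_request("<full text of a book>", "Summarize chapter 3.")
    print(json.dumps(body, indent=2))
```

Keeping the stable content first and the changing question last matters. A cache hit needs the beginning of the prompt to match exactly.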

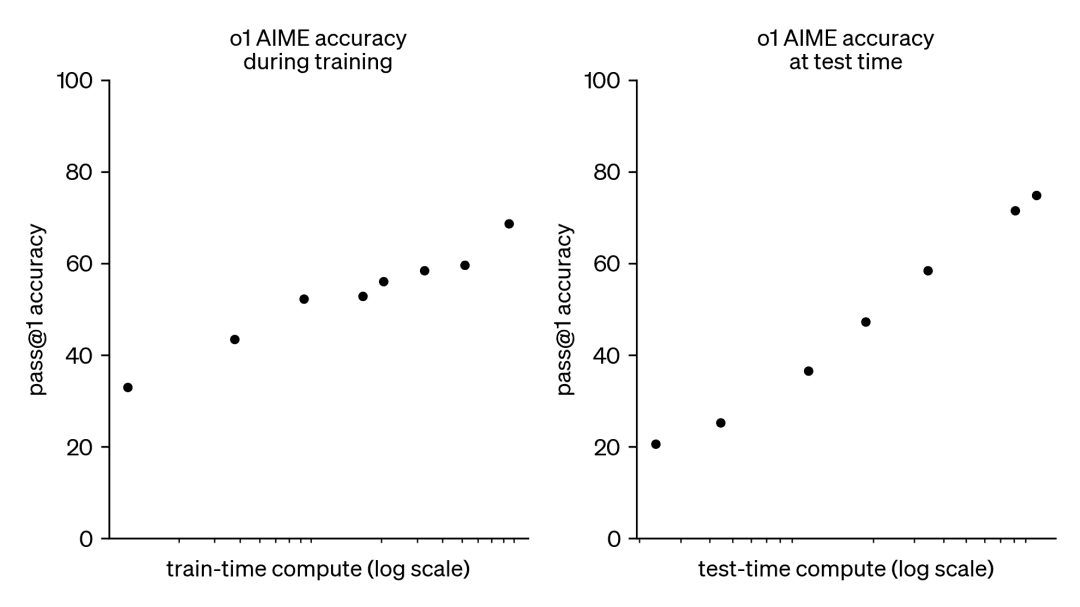

According to Anthropic's official figures, enabling caching can reduce latency by up to 85% and costs by up to 90% for long prompts. Whether for in-depth conversations, code development, or document analysis, this means noticeably faster responses.
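The "up to 90%" cost figure follows from how cached tokens are billed. The sketch below assumes the pricing Anthropic published at launch: writing a prefix to the cache costs 1.25× the base input-token price, and reading it back costs 0.1×. It counts only the repeated prefix and ignores output tokens and the uncached part of each prompt. It also assumes every call after the first hits the cache. In practice that requires reusing the prefix within the cache lifetime, which was five minutes at launch and refreshed on each hit. The function name is hypothetical.

```python
def prefix_cost_savings(n_requests: int,
                        write_multiplier: float = 1.25,
                        read_multiplier: float = 0.10) -> float:
    """Fraction of prefix input cost saved by caching over n_requests calls.

    Costs are measured in units of "one full-price pass over the prefix".
    Assumes the first call writes the cache and every later call reads it.
    """
    if n_requests < 1:
        raise ValueError("n_requests must be at least 1")
    uncached = float(n_requests)  # full price on every call
    cached = write_multiplier + (n_requests - 1) * read_multiplier
    return 1.0 - cached / uncached


for n in (1, 2, 10, 100):
    print(n, f"{prefix_cost_savings(n):.1%}")
```

Under these assumptions, a single call costs 25% more than it would without caching. Ten calls against the same prefix save 78.5%. As reuse grows, the saving approaches 90% (one minus the read multiplier), which matches the headline figure.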

The feature is particularly useful in the following scenarios:

- Conversational agents: reduce cost and latency in extended conversations, especially those that carry long instructions or uploaded documents.

- Coding assistants: cache a codebase to make code completion and codebase Q&A faster and cheaper.

- Large document processing: include long-form material in the prompt without a corresponding increase in response latency.
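For the conversational-agent case, the multi-turn example in Anthropic's prompt caching documentation places cache breakpoints on the latest user message and on the user message before it. Each request can then read the history cached by the previous request and cache the extended history for the next one. The helper below is a hypothetical sketch of that bookkeeping and assumes the Messages API message format. It moves the breakpoints forward on every turn so they do not pile up past the per-request limit, which was four breakpoints at launch.

```python
import copy


def mark_recent_user_turns(messages: list[dict], keep: int = 2) -> list[dict]:
    """Return a copy of messages with cache breakpoints on the last `keep` user turns.

    Hypothetical helper. Breakpoints on older turns are removed. String content
    on a marked turn is converted to a text block, because cache_control is
    attached to content blocks rather than plain strings.
    """
    if keep < 1:
        raise ValueError("keep must be at least 1")
    result = copy.deepcopy(messages)  # never mutate the caller's history
    user_idxs = [i for i, m in enumerate(result) if m["role"] == "user"]
    marked = set(user_idxs[-keep:])
    for i, msg in enumerate(result):
        if isinstance(msg["content"], str):
            if i not in marked:
                continue  # plain strings carry no breakpoint to strip
            msg["content"] = [{"type": "text", "text": msg["content"]}]
        # Drop any breakpoint left over from an earlier turn.
        for block in msg["content"]:
            block.pop("cache_control", None)
        if i in marked:
            msg["content"][-1]["cache_control"] = {"type": "ephemeral"}
    return result
```

Calling the helper again after each new user turn shifts the two breakpoints forward. The older turns remain part of the cached prefix without carrying markers of their own.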

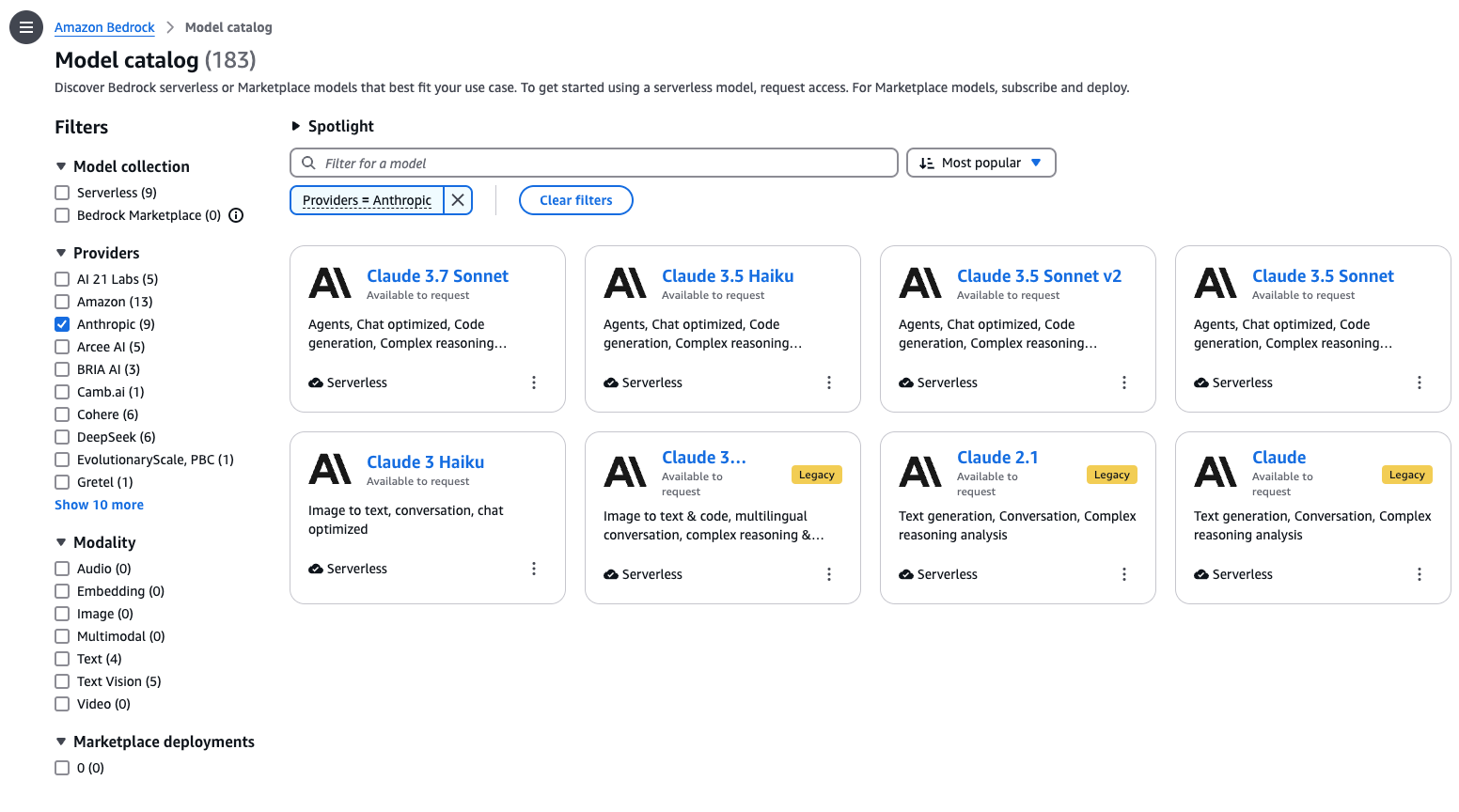

At launch, the feature supports Claude 3.5 Sonnet and Claude 3 Haiku, and Anthropic plans to extend it to more models soon.
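Caching only applies when the cached prefix is long enough. Below is a small pre-flight check that uses the minimum cacheable lengths listed in Anthropic's documentation when the feature launched: 1,024 tokens for Claude 3.5 Sonnet and 2,048 for Claude 3 Haiku. Shorter prefixes are processed normally without being cached. The table and function name are hypothetical. Check both the model list and the thresholds against the current documentation, since support is expected to expand.

```python
# Hypothetical lookup table: model ID -> minimum cacheable prefix length in
# tokens, as documented at launch. Verify against current docs before use.
MIN_CACHEABLE_TOKENS = {
    "claude-3-5-sonnet-20240620": 1024,
    "claude-3-haiku-20240307": 2048,
}


def caching_applies(model: str, prefix_tokens: int) -> bool:
    """True if the model is in the table and the prefix meets its minimum length."""
    minimum = MIN_CACHEABLE_TOKENS.get(model)
    return minimum is not None and prefix_tokens >= minimum
```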

To start using the feature, follow these steps:

- Ensure that you have access to the Claude API.

- Select a model that supports the long text caching feature.

- Enable caching in the API request and mark which content should be cached.

- Send the request. Later requests that reuse the same content will read it from the cache instead of reprocessing it.
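The steps above can be sketched with Python's standard library alone. The endpoint, the `x-api-key` and `anthropic-version` headers, and the `cache_creation_input_tokens` / `cache_read_input_tokens` usage fields follow Anthropic's Messages API documentation. The `anthropic-beta: prompt-caching-2024-07-31` header was required while caching was in beta and may no longer be needed. The function names are hypothetical. The request is only built here, not sent, so the example runs without a key or network access.

```python
import json
import urllib.request

API_URL = "https://api.anthropic.com/v1/messages"


def build_http_request(api_key: str, body: dict,
                       beta: bool = True) -> urllib.request.Request:
    """Build (but do not send) a POST request to the Messages API."""
    headers = {
        "x-api-key": api_key,
        "anthropic-version": "2023-06-01",
        "content-type": "application/json",
    }
    if beta:
        # Opt-in header used while prompt caching was in beta.
        headers["anthropic-beta"] = "prompt-caching-2024-07-31"
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers=headers,
        method="POST",
    )


def cache_status(response: dict) -> str:
    """Summarize whether a parsed response wrote to or read from the cache."""
    usage = response.get("usage", {})
    written = usage.get("cache_creation_input_tokens") or 0
    read = usage.get("cache_read_input_tokens") or 0
    if read:
        return f"cache hit: {read} tokens read from cache"
    if written:
        return f"cache write: {written} tokens cached"
    return "no caching"


# Sending requires network access and a real key, e.g.:
# req = build_http_request("YOUR_KEY", body)
# with urllib.request.urlopen(req) as resp:
#     print(cache_status(json.load(resp)))
```

On the first call with a new prefix, `cache_status` should report a cache write. Repeating the call within the cache lifetime should report a cache hit.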

This move by Anthropic is likely to have a significant impact on long text processing, helping users optimize both efficiency and cost. For developers who repeatedly send the same long context, the feature could substantially change how they structure their workflows.

© Copyright notice

Article copyright AI Sharing Circle, all rights reserved. Please do not reproduce without permission.