Claude 3.7 Sonnet Hands-On: Free Access Channels, API Details, and Enabling Reasoning

Anthropic recently released Claude 3.7 Sonnet, an update to Claude 3.5 Sonnet. Although the version number rose by only 0.2, the update brings a number of changes in both performance and functionality. It had been more than four months since Claude's previous model update, a long time in the fast-moving field of AI.

It is widely recognized in the industry that models are not usually upgraded directly to version 4.0 without an architectural breakthrough.

Free Access

| Site | Address (some require a VPN) | Model version | Reasoning mode | Context window (tokens) | Max output (tokens) | Web search | Daily limit / cost | Notes |
|---|---|---|---|---|---|---|---|---|
| Claude official website | https://claude.ai/ | 3.7 Sonnet | Non-reasoning (free); Normal/Extended (paid) | ~32K | ~8K | Not supported | Free users get a small quota; paid users are limited by tokens (Normal/Extended) | Official platform; small free quota, and paid plans are also capped |
| lmarena | https://lmarena.ai/ | 3.7 Sonnet / 32k Thinking | Non-reasoning / reasoning | 8K / 32K | 2K (max 4K) | Not supported | Appears unlimited | Offers both a non-reasoning and a 32K-reasoning mode; max output tokens adjustable |
| Genspark | https://www.genspark.ai/ | 3.7 Sonnet | Reasoning | Unknown | Unknown | Supported | 5 free sessions per day | Web search for when you need up-to-date information |
| Poe | https://poe.com/ | 3.7 Sonnet / Thinking | Non-reasoning / reasoning | 16K / 32K (max 64K) | Adjustable | Not supported | Daily bonus points; 3.7 Sonnet costs 333 points per message, Thinking 2367 | Usage is controlled by the points system; context window and output length are adjustable, and the Thinking model supports a larger context |
| Cursor (trial) | https://www.cursor.com/cn | 3.7 Sonnet | Unknown | Unknown | Unknown | Not supported | Unknown | Integrated into the code editor, convenient for developers |
| OpenRouter | https://openrouter.ai/ | 3.7 Sonnet / Thinking / Online | Non-reasoning / reasoning / online | 200K | Adjustable (up to 128K) | Supported (extra cost) | Billed per token; same price across providers; Online models cost extra | Several models and reasoning modes; output configurable up to 128K via parameters; the Thinking model supports full-budget reasoning; Online models add web search for an additional fee |
| OAIPro | (API key required) | 3.7 Sonnet / Thinking | Non-reasoning / reasoning | 64K / 200K | 4K (adjustable) | Not supported | Billed per token | The Thinking model enables reasoning automatically, and the reasoning budget is fixed at 80% of `max_tokens` |
| Cherry Studio | (API key required) | 3.7 Sonnet | Non-reasoning / reasoning | 200K | Adjustable (up to 128K) | Supported (requires a Tavily key) | Billed per token, plus Tavily queries (1,000 free per month) | Web search via the Tavily API |
| NextChat | (API key required) | 3.7 Sonnet | Unknown | Unknown | Unknown | Supported (WebPilot plugin) | Free | Web search via the WebPilot plugin |

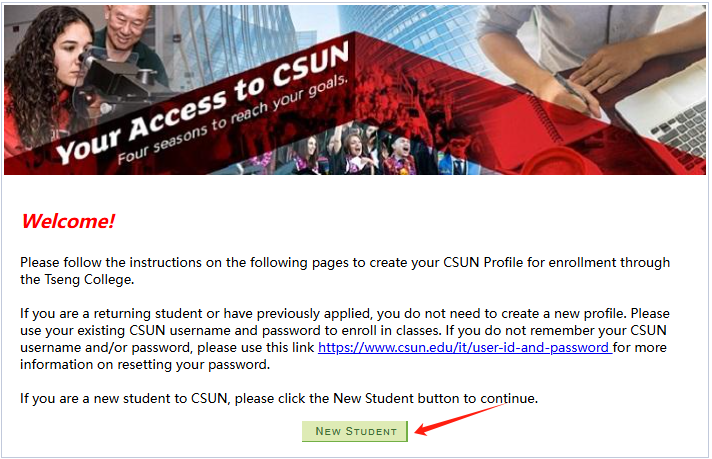

There are several ways to try Claude 3.7 Sonnet for free:

- Claude official website:

  - Address (VPN required): https://claude.ai/

  - Features: free users can use the non-reasoning version of the model; web search is not supported.

- lmarena:

  - Address: https://lmarena.ai/

  - Features: under "Direct Chat", you can choose either the non-reasoning version or the 32K reasoning version of the model; neither supports web search. Input is limited to 8K tokens; output defaults to 2K tokens and can be raised to a maximum of 4K by adjusting the parameters.

  - About lmarena: a platform offering arenas and direct chat for many large language models (LLMs), where users can compare and test different models.

  - Non-reasoning version

  - 32K reasoning version

  - Max output tokens parameter (up to 4K)

    - Max output tokens: the maximum number of tokens the model can generate in a single response.

- Genspark:

  - Address (VPN required): https://www.genspark.ai/

  - Features: offers the reasoning version of the model, supports web search (check "Search Web"), and gives 5 free conversations per day.

  - About Genspark: an AI service platform where users can work with a variety of large language models, with support for web search.

- Poe:

  - Address (VPN required): https://poe.com/

  - Features: 3,000 bonus points per day.

  - About Poe: a platform launched by Quora that lets users chat with multiple large language models and create custom bots.

  - Claude 3.7 model: costs 333 points per message; context adjustable via a slider up to 16K; no web search.

  - Claude 3.7 Thinking model: costs 2,367 points per message; context adjustable via a slider, 32K by default and up to 64K.

Note also Poe's "Global per-message budget" setting.

It caps the number of points a single message may consume and defaults to 700; Poe alerts you if a message would exceed it. The setting applies to all chats, but you can also edit the budget for an individual chat in its chat settings. If the budget is set too low, conversations may fail, because some models need more points per message to run. For example, the Thinking model's 2,367 points per message exceed the default 700, so the budget likely needs raising before that model can be used.

- Cursor (trial period):

  - Address: https://www.cursor.com/cn

  - Features: web search is not supported.

  - About Cursor: a code editor with built-in AI, designed to help developers write and debug code more efficiently.
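The Poe figures quoted in this list can be sanity-checked with a little arithmetic. This is a minimal sketch that assumes only the numbers given above (3,000 free points per day, 333 points per Claude 3.7 message, 2,367 per Thinking message, a 700-point default per-message budget); the model labels and the helper name are hypothetical, not Poe identifiers.

```python
# Figures quoted in this article; the model labels are illustrative only.
DAILY_POINTS = 3000
DEFAULT_MESSAGE_BUDGET = 700
POINTS_PER_MESSAGE = {
    "claude-3.7": 333,
    "claude-3.7-thinking": 2367,
}


def poe_daily_plan(model: str, message_budget: int = DEFAULT_MESSAGE_BUDGET) -> dict:
    """How many messages the free daily points cover, and whether one
    message fits under the global per-message budget."""
    cost = POINTS_PER_MESSAGE[model]
    return {
        "messages_per_day": DAILY_POINTS // cost,
        "fits_budget": cost <= message_budget,
    }


for name in POINTS_PER_MESSAGE:
    print(name, poe_daily_plan(name))
# claude-3.7 {'messages_per_day': 9, 'fits_budget': True}
# claude-3.7-thinking {'messages_per_day': 1, 'fits_budget': False}
```

Under these assumptions the free allowance covers about nine regular messages a day, but only one Thinking message, and only after the per-message budget is raised above 2,367.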

API Usage

For developers, using Claude 3.7 Sonnet through the API provides greater flexibility and control.

- Pricing: the Claude 3.7 Sonnet API is priced the same as 3.5: $3 per million input tokens, $15 per million output tokens, $0.30 per million tokens for cache reads, and $3.75 per million tokens for cache writes. Reasoning tokens are billed as output tokens, so with reasoning on, both the token count and the total price are higher than without it.

- Context window: as with Claude 3.5 Sonnet, the total context window of the Claude 3.7 Sonnet API is 200K tokens.

  - Context window: the amount of text the model can take into account in a single request.

  - Token: the basic unit of text, which may be a word, a character, or a subword.

- Maximum output: the Claude 3.5 Sonnet API outputs at most 8K tokens, while Claude 3.7 Sonnet can output up to 128K tokens when a beta parameter is set.
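The pricing rules above can be turned into a quick cost estimator. This is a minimal sketch assuming the list prices quoted above and that thinking tokens are billed at the output rate; the function name and parameters are illustrative, not part of any SDK.

```python
# Claude 3.7 Sonnet API list prices quoted above, in USD per million tokens.
PRICE_PER_MTOK = {
    "input": 3.00,
    "output": 15.00,
    "cache_write": 3.75,
    "cache_read": 0.30,
}


def estimate_cost(input_tokens: int, output_tokens: int, thinking_tokens: int = 0,
                  cache_write_tokens: int = 0, cache_read_tokens: int = 0) -> float:
    """Estimated USD cost of one request.

    Thinking (reasoning) tokens are billed at the output rate, so they are
    simply added to the visible output tokens.
    """
    billed_output = output_tokens + thinking_tokens
    total = (
        input_tokens * PRICE_PER_MTOK["input"]
        + billed_output * PRICE_PER_MTOK["output"]
        + cache_write_tokens * PRICE_PER_MTOK["cache_write"]
        + cache_read_tokens * PRICE_PER_MTOK["cache_read"]
    )
    return round(total / 1_000_000, 6)


# The same 2K-token prompt and 1K-token answer, without and with 10K thinking tokens.
print(estimate_cost(2_000, 1_000))                          # 0.021
print(estimate_cost(2_000, 1_000, thinking_tokens=10_000))  # 0.171
# A single full 128K output: 128,000 x $15 / 1M
print(estimate_cost(0, 128_000))                            # 1.92
```

The second line shows how reasoning multiplies the bill even when the visible answer is unchanged.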

The API version of a large model usually has a larger context window and maximum output than the Chat version, because API users pay for actual usage, and the more input and output, the higher the service provider's revenue. The Chat version usually has a fixed monthly price, so the more output you have, the more it costs the service provider.

Hybrid reasoning model

At present, only Claude 3.7 is a hybrid reasoning model. Sam Altman has said that GPT-4.5 will be OpenAI's last non-reasoning model and that GPT-5 will merge the GPT and o series, so it will presumably be a hybrid reasoning model as well.

"Hybrid" means that reasoning and non-reasoning modes share the same model: the API switches between them with parameters, while the Chat version controls reasoning token consumption (effort) with sliders, drop-down menus, and the like.
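To make "same model, switched by parameters" concrete, here is a minimal sketch that only builds request bodies in the shape of Anthropic's Messages API; nothing is sent. It assumes the model ID `claude-3-7-sonnet-20250219` and the `thinking` object used later in this article; the helper name `build_request` is hypothetical.

```python
import json
from typing import Optional

MODEL_ID = "claude-3-7-sonnet-20250219"


def build_request(prompt: str, max_tokens: int = 4096,
                  thinking_budget: Optional[int] = None) -> dict:
    """Build a Messages API body; reasoning is switched on only by adding `thinking`."""
    body = {
        "model": MODEL_ID,  # the same model in both modes
        "max_tokens": max_tokens,
        "messages": [{"role": "user", "content": prompt}],
    }
    if thinking_budget is not None:
        # budget_tokens caps how many tokens the model may spend reasoning
        body["thinking"] = {"type": "enabled", "budget_tokens": thinking_budget}
    return body


quick = build_request("Translate this sentence into French.")
careful = build_request("Plan a database migration.", max_tokens=16_000,
                        thinking_budget=8_000)
print(json.dumps(careful, indent=2))
```

The only difference between the two bodies is the presence of the `thinking` object; the model name stays the same.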

Accordingly, reasoning performance is roughly proportional to base-model performance × reasoning time. Setting aside differences between base models, reasoning time can be benchmarked; the personal test below uses questions commonly used to check whether GPT has been "dumbed down", such as the Japanese-poem task, as examples.

The following is a personal estimate that considers only how thinking time maps to effort tiers, not intelligence. For reference only.

R1 spends fewer tokens thinking because it is free, so DeepSeek has to weigh performance against cost.

At present, DeepSeek keeps R1 at a low effort level, roughly trading blows with o3-mini-medium; with a larger reasoning token budget, it should in fact perform better.

Another reason is that DeepSeek clearly lacks enough GPUs, as the "System busy" errors of the past month showed. In the near term it is unlikely to boost performance the way o3-mini and Claude do, by greatly extending thinking time and token counts and throwing brute-force compute at the problem.

Likewise, Gemini follows a free strategy like R1 and puts cost control first, so Gemini 2.0 Flash Thinking's token budget sits around the o3-mini-low tier.

How to enable 128K maximum output

Cherry Studio + OpenRouter setup (non-reasoning)

This method is for using the Claude 3.7 Sonnet API through OpenRouter.

- Introduction to OpenRouter: A platform that provides multiple large-scale language model API aggregation services.

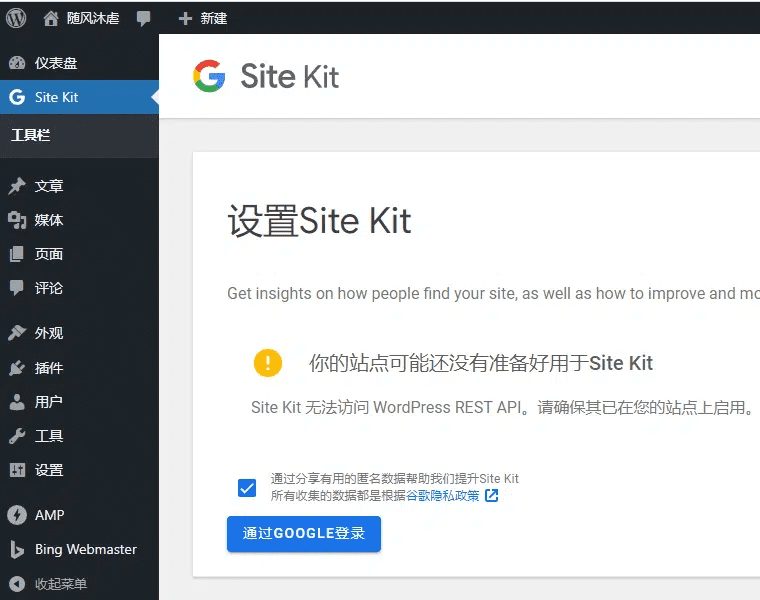

- About Cherry Studio: A client-side tool that supports a wide range of large language model APIs.

- Open Cherry Studio and add or edit an assistant.

- In Model Settings, add a `betas` parameter, set its type to JSON, and enter:

  `["output-128k-2025-02-19"]`

- Add a `max_tokens` parameter, set its type to Numeric, and set the value to 128000.

`betas`: a parameter that enables specific experimental (beta) features. `max_tokens`: the maximum number of tokens the model can generate in a single response.
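For comparison, when calling Anthropic's own Messages API directly, the same beta flag is sent as an `anthropic-beta` HTTP header rather than a `betas` field. This minimal sketch only prepares the request with Python's standard `urllib`; it does not send it, the API key is a placeholder, and the helper name is hypothetical.

```python
import json
import urllib.request

API_URL = "https://api.anthropic.com/v1/messages"


def build_128k_request(api_key: str, prompt: str) -> urllib.request.Request:
    """Prepare, but do not send, a Messages API call with the 128K-output beta."""
    body = {
        "model": "claude-3-7-sonnet-20250219",
        "max_tokens": 128_000,  # above the default cap, so the beta header is needed
        "messages": [{"role": "user", "content": prompt}],
    }
    headers = {
        "x-api-key": api_key,
        "anthropic-version": "2023-06-01",
        "anthropic-beta": "output-128k-2025-02-19",  # same value as the `betas` list above
        "content-type": "application/json",
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers=headers,
        method="POST",
    )


req = build_128k_request("YOUR_API_KEY", "Translate the attached book.")
print(req.get_method(), req.full_url)
print(req.get_header("Anthropic-beta"))  # urllib stores header names capitalized
```

In practice a response this long is usually streamed (`"stream": true` in the body) so the connection does not time out while waiting.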

In testing, outputs longer than 64K could be obtained through OpenRouter, but they were sometimes truncated, possibly because of network instability or limitations of the model itself.

OpenRouter setup (120K full-budget reasoning)

This method only works with OpenRouter's Claude-3.7-Sonnet:Thinking model.

- Open Cherry Studio and add or edit an assistant.

- In Model Settings, add a `betas` parameter, set its type to JSON, and enter `["output-128k-2025-02-19"]`.

- Add a `thinking` parameter, set its type to JSON, and set the value to `{"type": "enabled", "budget_tokens": 120000}`. `thinking` enables reasoning mode and sets the reasoning budget.

- Set the model temperature to 1; other temperature values may cause reasoning to fail.

- Add a `max_tokens` parameter and set it to 128000. The reasoning budget (`budget_tokens`) must be at least 1024, and `max_tokens` must exceed it by a few K to leave room for the final output.
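The constraints in these steps can be checked before anything is sent. A minimal sketch, assuming the rules stated above (a budget of at least 1024, `max_tokens` larger than the budget, temperature 1); the 4,096-token answer reserve is a hypothetical stand-in for "a few K", not an API rule, and the function name is illustrative.

```python
MIN_BUDGET = 1024  # minimum reasoning budget stated in the steps above


def check_thinking_settings(max_tokens: int, budget_tokens: int,
                            temperature: float = 1.0,
                            answer_reserve: int = 4096) -> list:
    """Return a list of problems with a thinking configuration (empty if none)."""
    problems = []
    if budget_tokens < MIN_BUDGET:
        problems.append(f"budget_tokens must be at least {MIN_BUDGET}")
    if budget_tokens >= max_tokens:
        problems.append("budget_tokens must be smaller than max_tokens")
    elif max_tokens - budget_tokens < answer_reserve:
        # hypothetical heuristic: leave "a few K" for the final answer
        problems.append("little room left for the final answer")
    if temperature != 1:
        problems.append("set temperature to 1 when thinking is enabled")
    return problems


print(check_thinking_settings(128_000, 120_000))    # []
print(check_thinking_settings(128_000, 1_200_000))  # ['budget_tokens must be smaller than max_tokens']
```

The second call shows the kind of typo (an extra zero in the budget) that this check catches before a request is wasted.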

Open WebUI + official API or OAIPro setup (120K+ full-budget reasoning)

- About Open WebUI: an open-source, self-hosted web interface for large language models.

- About OAIPro: a platform that provides Claude API relay (proxy) services.

By using an Open WebUI pipe function to modify the request header, you can get 128K output from Claude 3.7 through any API site.

`pipe`: an Open WebUI feature that, among other things, lets users modify request headers. `header`: the HTTP request headers, which carry metadata about the request.

The reasoning budget can be set to at most 127999, because it must be smaller than `max_tokens` (128000). The token budget breaks down as follows:

Total context 200K (fixed) - maximum output 128K (configurable) = at most 72K left for input

Maximum output 128K (configurable) - chain of thought 120K (configurable) = 8K left for the final output
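The two subtractions above can be written as a small helper. This is only the article's arithmetic, with "K" taken as 1,000 tokens (an assumption, since the text uses K loosely); the function name is illustrative.

```python
TOTAL_CONTEXT = 200_000  # fixed total context window of the API


def token_plan(max_output: int, thinking_budget: int) -> dict:
    """Split the context window as described above."""
    if not 0 < thinking_budget < max_output <= TOTAL_CONTEXT:
        raise ValueError("need 0 < thinking_budget < max_output <= 200000")
    return {
        "max_input": TOTAL_CONTEXT - max_output,       # 200K - 128K
        "final_answer": max_output - thinking_budget,  # 128K - 120K
    }


print(token_plan(128_000, 120_000))  # {'max_input': 72000, 'final_answer': 8000}
print(token_plan(128_000, 127_999))  # {'max_input': 72000, 'final_answer': 1}
```

The second call shows why the 127999 ceiling is mostly theoretical: it leaves a single token for the final answer.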

How to verify that reasoning mode is on

Try asking a more complex question. If reasoning mode is on, Cherry Studio will think for tens of seconds to several minutes before producing any output. Cherry Studio has not yet been adapted to display the reasoning process.

For example, try the following questions. Without reasoning they usually do not get a correct answer; with reasoning on, they take a few minutes and are answered correctly in most cases:

Correct answer:
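When calling the API directly, you do not have to rely on timing: with extended thinking on, Anthropic's Messages API returns `thinking` (or `redacted_thinking`) content blocks before the `text` block. A minimal sketch that inspects an already-parsed JSON response; the sample response is invented and abbreviated for illustration, and the helper name is hypothetical.

```python
def reasoning_report(response: dict) -> dict:
    """Summarize whether a parsed Messages API response contains reasoning."""
    blocks = response.get("content", [])
    thinking = [b for b in blocks if b.get("type") in ("thinking", "redacted_thinking")]
    answer = "".join(b.get("text", "") for b in blocks if b.get("type") == "text")
    return {
        "reasoning_on": bool(thinking),
        "thinking_blocks": len(thinking),
        "answer": answer,
    }


# Invented, abbreviated response for illustration only.
sample = {
    "content": [
        {"type": "thinking", "thinking": "Let me check each case...", "signature": "..."},
        {"type": "text", "text": "The answer is B."},
    ],
    "usage": {"input_tokens": 120, "output_tokens": 950},
}
print(reasoning_report(sample))
# {'reasoning_on': True, 'thinking_blocks': 1, 'answer': 'The answer is B.'}
```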

Advantages and disadvantages of large outputs

Advantages:

- It can take over some of the work previously done by agents. For example, translating a book used to require an agent to split it into chapters; now the model can handle the entire book directly.

- It can cut costs. With an 8K output cap, if you feed in the full text without splitting it into chapters, you need 16 rounds of 8K output to reach 128K, re-sending the full text each time. With 128K output, the output cost is the same, but the source text is sent only once, saving the cost of 15 inputs.

- With a sensible input strategy, you can dramatically reduce costs, improve efficiency, and even increase processing speed.

- At roughly 100,000 words or more, a single output can cover translating an entire book or writing a book or web novel, with good consistency from start to finish: the model won't forget the beginning by the time it reaches the end. In theory, it can also output 16 times as much code in one go as 3.5, greatly improving throughput and efficiency.

Disadvantages:

- The performance of all large models degrades as context grows; the exact size of the degradation remains to be evaluated.

- A single 128K output is expensive, so test your prompt carefully before running a large output, to avoid wasting money on errors.
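The cost-saving point above can be checked with a rough calculation. This sketch assumes the list prices from the API section ($3 / $15 per million input / output tokens), a hypothetical 60K-token source text, and that each 8K round re-sends only the source text; it ignores prompt caching, which would narrow the gap. The function name is illustrative.

```python
INPUT_PRICE = 3.00 / 1_000_000    # USD per input token
OUTPUT_PRICE = 15.00 / 1_000_000  # USD per output token


def chunked_vs_single(source_tokens: int, total_output: int, chunk_output: int):
    """Compare many capped rounds (full source re-sent each round) with one long output."""
    rounds = -(-total_output // chunk_output)  # ceiling division
    output_cost = total_output * OUTPUT_PRICE  # identical in both approaches
    chunked = rounds * source_tokens * INPUT_PRICE + output_cost
    single = source_tokens * INPUT_PRICE + output_cost
    return rounds, round(chunked, 2), round(single, 2)


print(chunked_vs_single(60_000, 128_000, 8_000))  # (16, 4.8, 2.1)
```

Under these assumptions the single 128K pass saves 15 × 60K input tokens, about $2.70, which is exactly the "15 inputs" the text mentions.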

API web search

The official Claude API does not support web search on its own. Options:

- Cherry Studio + Tavily API key: 1,000 free searches per month.

  - About Tavily: a platform that provides search API services.

  - How to: update Cherry Studio to the latest version (1.0), then register at tavily.com and apply for a free API key.

  - Enter the API key in Cherry Studio's settings and turn on the web search button in the input box.

- NextChat + WebPilot plugin: free web search.

  - About NextChat: a chat client that supports multiple large language models and plugins.

  - About WebPilot: a plugin that extracts and summarizes web content.

- OpenRouter Chatroom: has its own web search button. How to: log in to OpenRouter's Chatroom, select the 3.7 Sonnet model, and turn on the web search button in the input box.

- OpenRouter's built-in web search: works with any front end plus an OpenRouter API key. How to: when adding a model, enter the model name manually as

  `anthropic/claude-3.7-sonnet:online`. It costs $4 per 1,000 queries.
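For the last option, a request from any front end is an ordinary OpenAI-style chat completion sent to OpenRouter with the `:online` model name. This minimal sketch only builds the headers and body (nothing is sent; the key is a placeholder) and estimates the search surcharge from the $4-per-1,000-queries figure quoted above; the helper names are hypothetical.

```python
import json

OPENROUTER_URL = "https://openrouter.ai/api/v1/chat/completions"
SEARCH_PRICE_PER_QUERY = 4.00 / 1000  # $4 per 1,000 queries, as quoted above


def build_online_request(api_key: str, prompt: str) -> tuple:
    """Return (headers, body) for a web-search-enabled Claude 3.7 Sonnet call."""
    headers = {
        "Authorization": f"Bearer {api_key}",
        "Content-Type": "application/json",
    }
    body = {
        "model": "anthropic/claude-3.7-sonnet:online",  # ":online" adds web search
        "messages": [{"role": "user", "content": prompt}],
    }
    return headers, body


def search_surcharge(queries: int) -> float:
    """Extra USD for web search on top of normal token billing."""
    return round(queries * SEARCH_PRICE_PER_QUERY, 2)


headers, body = build_online_request("YOUR_OPENROUTER_KEY", "What changed in Claude 3.7?")
print(json.dumps(body))
print(search_surcharge(250))  # 1.0
```

The body would be POSTed as JSON to `OPENROUTER_URL`; token usage is billed as usual, with the search fee added on top.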

Other API-related information

- Official API:

  - Entry point: https://www.anthropic.com/api

  - Minimum top-up of $5.

  - Enabling 128K output through the `betas` parameter in Cherry Studio is not supported.

  - Tier 1 is limited to 20K input tokens per minute and 8K output tokens per minute.

- OpenRouter API:

  - Entry point: https://openrouter.ai/anthropic/claude-3.7-sonnet

  - Offers the Claude-3.7-Sonnet, Claude-3.7-Sonnet Thinking, and Claude-3.7-Sonnet Beta models.

  - Supports web search via Claude-3.7-Sonnet Online or Claude-3.7-Sonnet Thinking Online for an extra $4 per 1,000 queries.

  - Three providers, Anthropic, Amazon, and Google, offer it at the same price.

  - Google's maximum output is only 64K; Anthropic and Amazon can be set to 128K via parameters.

- OAIPro API:

  - Default input 64K; chain of thought plus final output 4K.

  - Enabling 128K output through the `betas` parameter in Cherry Studio is not supported. Without a `max_tokens` parameter, output defaults to 4K.

  - Claude-3-7-Sonnet-20250219-Thinking model: reasoning is on by default with no extra parameters needed, and the reasoning budget is forced to 80% of `max_tokens`; the `thinking` parameter apparently cannot be specified.

  - Claude-3-7-Sonnet-20250219 model: the `thinking` parameter can be specified manually.

- Low-cost relay stations:

  - aicnn: standard output costs about $72 per million tokens.

    - About aicnn: a platform providing AI services, including API relay.

  - Note: some low-cost relay stations may only support 64K output, not 128K.
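Returning to the OAIPro Thinking model listed above, which reportedly fixes the reasoning budget at 80% of `max_tokens`, the resulting split is easy to compute. This sketch assumes exactly that 80% rule and rounds down (the rounding is a guess); the function name is illustrative.

```python
def oaipro_split(max_tokens: int, thinking_percent: int = 80) -> dict:
    """Split max_tokens under the observed fixed-percentage reasoning rule."""
    thinking = max_tokens * thinking_percent // 100  # integer math, rounds down
    return {"thinking": thinking, "answer": max_tokens - thinking}


print(oaipro_split(4_000))    # {'thinking': 3200, 'answer': 800}
print(oaipro_split(128_000))  # {'thinking': 102400, 'answer': 25600}
```

With the 4K default only about 800 tokens would remain for the final answer, which is one reason to set `max_tokens` explicitly on that model.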

Chat version

Free tier

Free users can use Claude 3.7 Sonnet, but with limits. Judging from Anthropic's past practice, the free quota is probably small.

In addition, the Claude 3.5 Haiku model is no longer available to free users.

- Context window: measured at about 32K.

- Maximum output: measured at about 8K.

- No reasoning.

Paid plans

It is not yet clear whether the paid Chat version has the same context window and maximum output as the free version.

The paid version offers both Normal and Extended reasoning modes. Note, however, that paid accounts risk being banned; make sure you have a clean IP address before paying for a subscription. By comparison, using the API is safer.

Unlike GPT or Grok, whose limits count messages, Claude's usage limit for paid members is based on total tokens. As a result, reasoning mode, and Extended mode in particular, significantly reduces the number of questions you can ask per day. Some users have reported that Anthropic may introduce a paid reset of the usage limit, letting users skip the cooldown period for a one-time fee.

Features

- File uploads: up to 20 files, each up to 30 MB.

- Multimodal: supports image understanding, but not audio or video.

- GitHub: a new feature that connects to the user's GitHub repositories as a way to upload files.

- Claude Code: an official command-line tool for developers, currently released as a limited research preview. It supports searching and reading code, editing files, running tests, committing to GitHub, and running command-line operations, and aims to shorten development time and make test-driven development and debugging of complex problems more efficient.

- Web search, deep search, deep research, voice mode, and text-to-image: as with Claude 3.5, none are supported.

Model Review

Coding ability

Coding has always been a strength of Claude models and a major concern for their core users, programmers. If its coding ability slipped, Claude could face a serious challenge.

- lmarena: tops the WebDev leaderboard. Reference: https://lmarena.ai/?leaderboard

- Livebench: compared with 3.5, the non-reasoning Claude 3.7 improves slightly and the reasoning version improves substantially, but at a correspondingly higher cost (same unit price, more output tokens). Reference: https://livebench.ai/

  - About Livebench: a platform that continuously evaluates the performance of large language models.

- Aider: Claude 3.7 in reasoning mode costs about 2.5 times as much as 3.5.

- Introduction to Aider: An AI programming assistant that helps developers with code generation and debugging.

Reference: https://aider.chat/docs/leaderboards/

- CodeParrot AI: Claude 3.7 performed well in the HumanEval coding benchmark, scoring 92.1, an improvement over Claude 3.5 (89.4).

- Introduction to CodeParrot AI: A platform that provides a range of coding tools to streamline the development process.

Agentic tool use

Anthropic officially claims that Claude 3.7 excels at agentic tool use.

Math ability

The standard Claude 3.7 is average at math; the reasoning version performs better.

Reasoning ability

Market performance

Google search interest:

Google Play: the Claude app ranks #107 on the US overall chart.

App Store: not in the top 200.

Summary and outlook

The release of Claude 3.7 Sonnet marks another iteration from Anthropic in the large-model space. Despite the small change in version number, it brings improvements in code generation, reasoning, and long outputs. However, Claude still faces challenges: limited access for free users, no web search, and weak market performance.

Given Anthropic's past release cadence, it may be some time before Claude 4.0 arrives. Claude's overall growth, especially on the consumer (C-end) side, lags significantly behind its competitors, and its valuation has been surpassed by xAI's.

On current trends, Claude may be squeezed out of the top tier of global large models by GPT, DeepSeek, and Gemini. It may end up competing with models such as Grok and Doubao for the second tier, or give up the consumer market altogether and focus on verticals such as programming, agents, and writing.

© Copyright notes

Copyright of this article belongs to AI Sharing Circle; please do not reproduce without permission.
