ChatMCP: an AI chat client that implements the MCP protocol and supports a variety of LLM models

General Introduction

ChatMCP is an open-source AI chat client that implements the Model Context Protocol (MCP). Developed by GitHub user daodao97, it supports a variety of large language models (LLMs), including OpenAI, Claude, and Ollama models. Besides chatting with MCP servers, ChatMCP offers automatic MCP server installation, chat log management, and an improved user interface. The project is licensed under the GNU General Public License v3.0 (GPL-3.0), which allows users to freely use, modify, and distribute it, provided that distributed modifications are released under the same license.

Feature List

- Support for chatting with MCP servers

- Automatic installation of the MCP server

- Support for SSE MCP transmission

- Automatic MCP server selection

- Chat Records Management

- Support for multiple LLM models (OpenAI, Claude, OLLama, etc.)

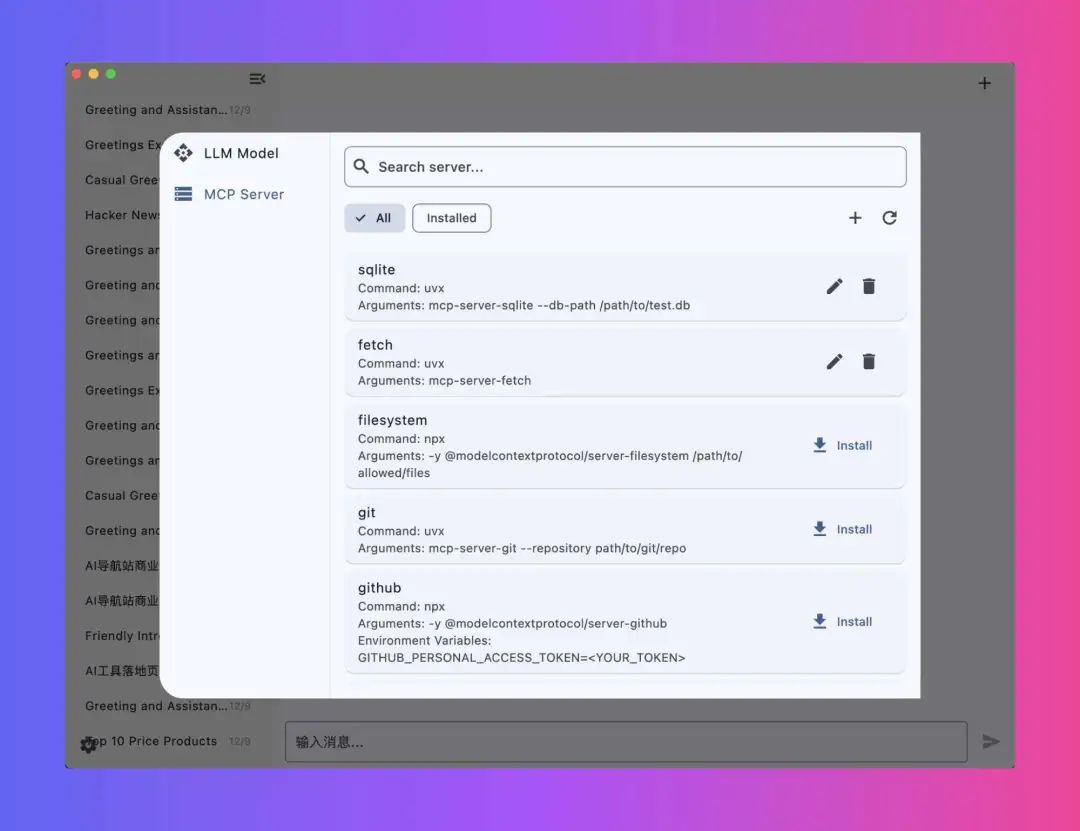

- Provide MCP server marketplace for easy installation of different MCP servers

- Better user interface design

- Multi-platform support (MacOS, Windows, Linux, etc.)

User Guide

Installation

- Install dependencies: make sure that uvx or npx is installed on your system (uvx ships with uv; npx ships with Node.js). With Homebrew:

  - Install uvx:

    brew install uv

  - Install npx:

    brew install node

- Configure LLM API keys and endpoints: enter your LLM API keys and endpoints on the Settings page.

- Install MCP servers: install the MCP servers you need from the MCP Server page.

- Download the client: download the macOS or Windows version, depending on your operating system.

- Debug logs (macOS): log files are located in

  ~/Library/Application Support/run.daodao.chatmcp/logs

- Database and configuration files:

  - Chat log database:

    ~/Documents/chatmcp.db

  - MCP server configuration file:

    ~/Documents/mcp_server.json

- Reset the application: run the following commands to delete ChatMCP's application data, chat log database, and MCP server configuration:

rm -rf ~/Library/Application\ Support/run.daodao.chatmcp

rm -rf ~/Documents/chatmcp.db

rm -rf ~/Documents/mcp_server.json
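Before installing MCP servers, you may want to confirm that at least one of uvx or npx is actually on your PATH, since the dependency step only requires one of them. The following is a minimal POSIX shell sketch; the helper name `have_any` is hypothetical and not part of ChatMCP.

```shell
#!/bin/sh
# Hypothetical helper (not part of ChatMCP): returns 0 if at least one of the
# given commands is found on PATH and prints the first match; returns 1 otherwise.
have_any() {
  for cmd in "$@"; do
    if command -v "$cmd" >/dev/null 2>&1; then
      echo "found: $cmd ($(command -v "$cmd"))"
      return 0
    fi
  done
  return 1
}

# ChatMCP's dependency step asks for uvx or npx; warn (without exiting) if neither exists.
if ! have_any uvx npx; then
  echo "Neither uvx nor npx found; install uv (brew install uv) or Node.js (brew install node)." >&2
fi
```

`command -v` is the POSIX way to look up a command, so the check behaves the same in sh, bash, and zsh.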
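The reset commands permanently delete ChatMCP's data. If you might want your chat history or server configuration back, a more cautious variant is to move the same three paths into a timestamped backup folder instead. This is only a sketch: the function name `backup_and_reset_chatmcp` and the backup location `~/chatmcp-backup-<timestamp>` are hypothetical choices, not something ChatMCP defines.

```shell
#!/bin/sh
# Hypothetical alternative to the rm -rf reset (not part of ChatMCP):
# move the same three paths into a timestamped backup folder and print its path.
backup_and_reset_chatmcp() {
  backup_dir="$HOME/chatmcp-backup-$(date +%Y%m%d-%H%M%S)"
  mkdir -p "$backup_dir" || return 1
  for path in \
    "$HOME/Library/Application Support/run.daodao.chatmcp" \
    "$HOME/Documents/chatmcp.db" \
    "$HOME/Documents/mcp_server.json"; do
    # Skip paths that do not exist (e.g. the app was never started).
    if [ -e "$path" ]; then
      mv "$path" "$backup_dir/" || return 1
    fi
  done
  echo "$backup_dir"
}
```

It is probably safest to quit ChatMCP before running `backup_and_reset_chatmcp`. To restore, move the files from the backup folder back to their original locations.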

Usage

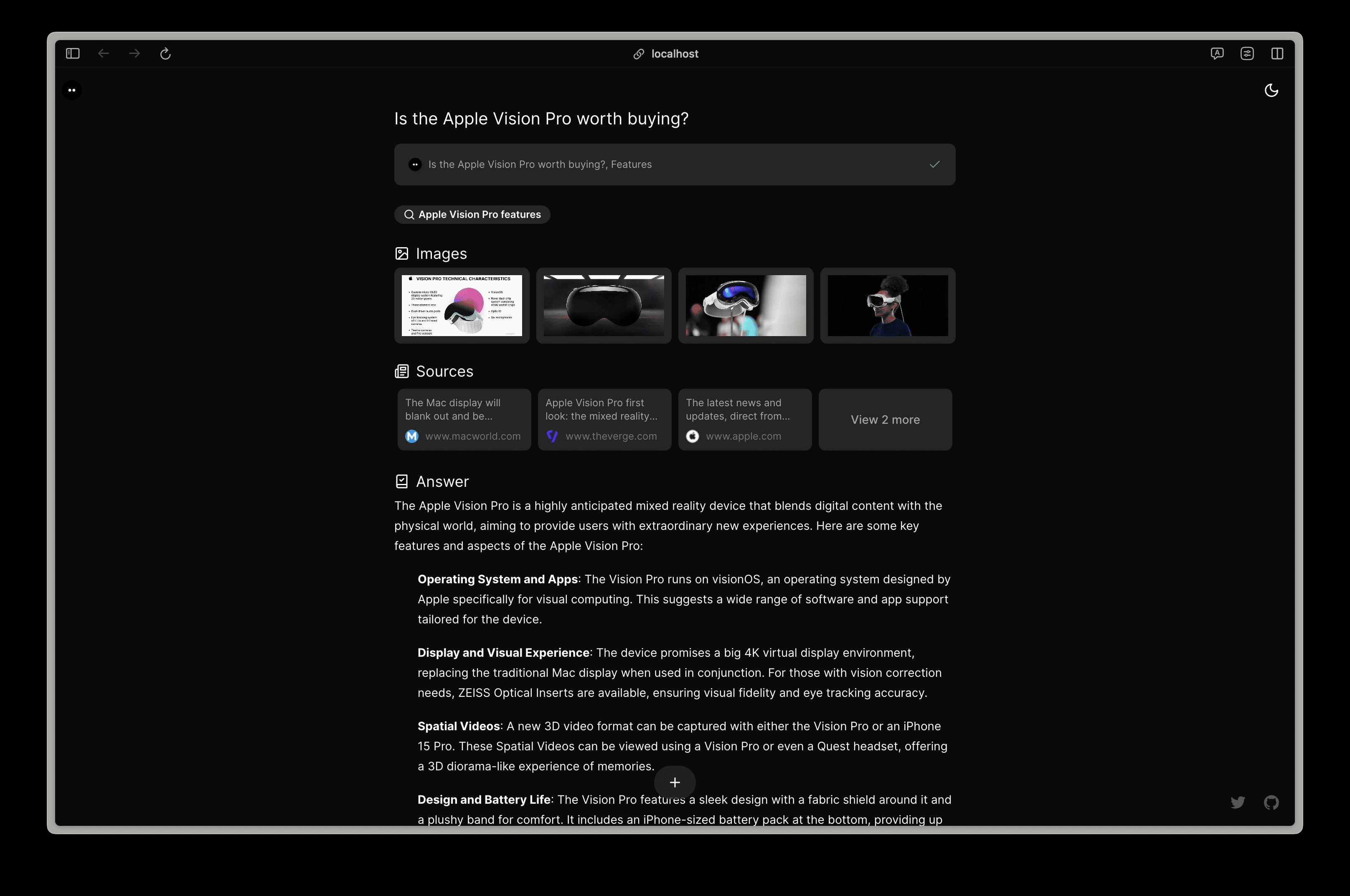

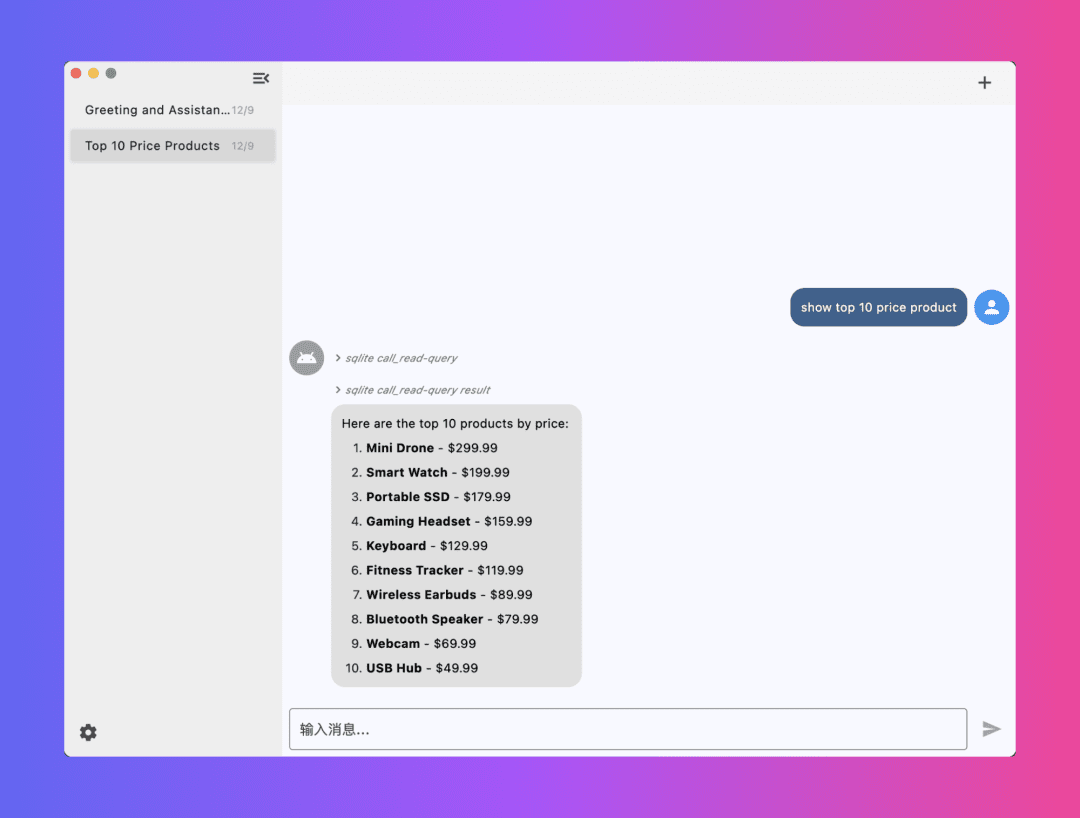

- Chat with an MCP server:

  - Open the ChatMCP client and select a configured MCP server.

  - Enter your message and click the Send button to interact with the MCP server.

- Manage chat logs:

  - Chat logs are automatically saved to ~/Documents/chatmcp.db.

  - Past chats can be viewed and managed in the client.

- Install and select MCP servers:

  - Visit the MCP Server Marketplace and select the MCP server you want to install.

  - After installation, you can select and switch between MCP servers on the Settings page.

- Configure and use LLMs:

  - Configure the LLMs you want to use (e.g. OpenAI, Claude, Ollama) on the Settings page.

  - Once configured, you can switch to a different LLM for a conversation while chatting.

- User interface and platforms:

  - ChatMCP provides a simple, intuitive user interface for all of these operations.

  - It runs on macOS, Windows, and Linux.

With these steps, you can install ChatMCP and start chatting with MCP servers using the LLM of your choice.

© Copyright notes

Article copyright belongs to AI Sharing Circle. Please do not reproduce without permission.
