A New Way to Keep ChatGPT Conversations Going Without Losing Memory

Researchers have found a simple, effective fix for a stubborn problem in large language models such as ChatGPT, one that would otherwise severely degrade the models' performance.

In AI conversations that involve many rounds of continuous dialog, the powerful large language models that drive chatbots such as ChatGPT sometimes fail suddenly, causing the bots' performance to drop sharply.

A team of researchers from MIT and other institutions has discovered a surprising cause of this problem and developed a simple solution that allows chatbots to continue conversations without crashing or slowing down.

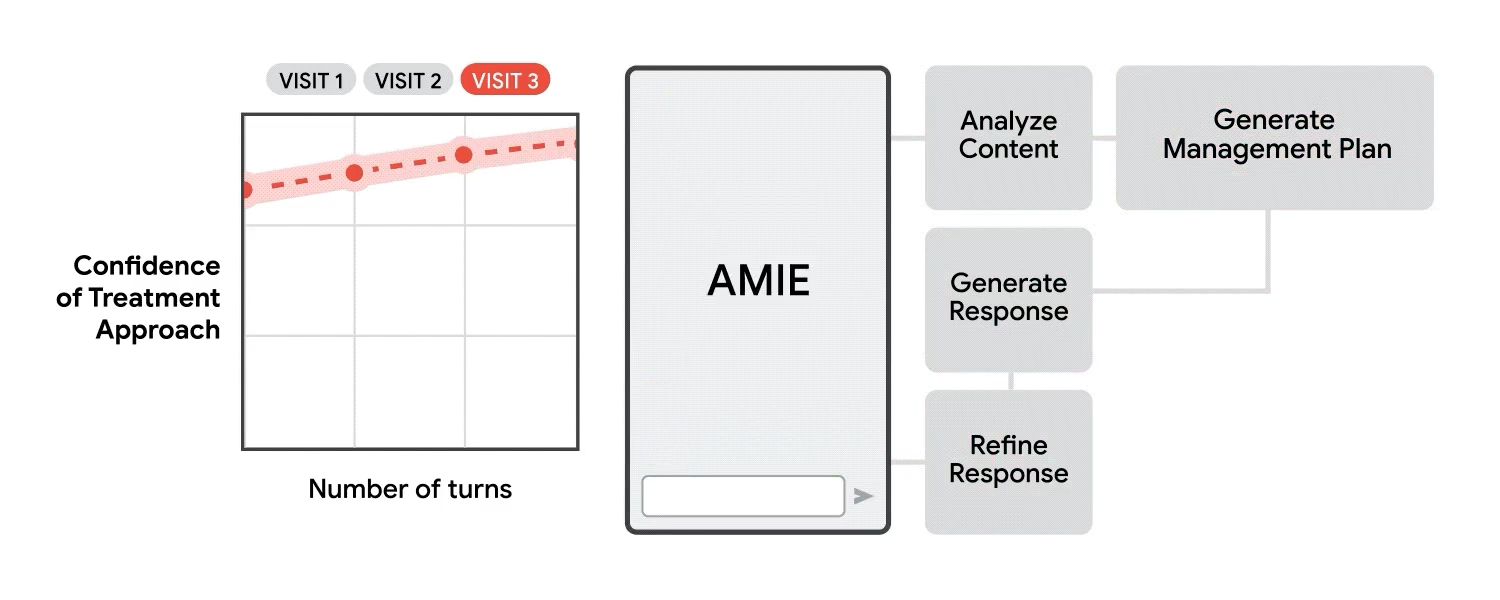

Their approach adjusts the key-value (KV) cache, which acts as a conversation memory at the core of many large language models. In some methods, when the cache needs to store more information than it has capacity for, the earliest data is evicted, which can cause the model to fail.

By retaining some of the initial data points in memory, the researchers' scheme allows chatbots to keep communicating no matter how long the conversation goes on.

This approach, called StreamingLLM, maintains the model's high efficiency even when the dialog continues for more than four million words. Compared to another approach that avoids system crashes by constantly recomputing parts of previous conversations, StreamingLLM performs more than 22 times faster.

This will enable chatbots to carry on long conversations throughout the day without having to be restarted, effectively providing an AI assistant for tasks such as writing, editing, and generating code.

"Today, with this approach, we are able to deploy these large language models on a continuous basis. By creating a chatbot that we can communicate with at any time and that can respond based on the content of recent conversations, we are able to utilize these chatbots in many new application areas," said Guangxuan Xiao, a graduate student in electrical engineering and computer science (EECS) and lead author of the paper.

Xiao's co-authors include his advisor, Song Han, an associate professor in EECS, a member of the MIT-IBM Watson AI Lab, and an NVIDIA Distinguished Scientist; as well as Yuandong Tian, a research scientist at Meta AI; Beidi Chen, an assistant professor at Carnegie Mellon University; and Mike Lewis, a research scientist at Meta AI and senior author of the paper. The research will be presented at the International Conference on Learning Representations.

A puzzling phenomenon

Large language models encode data, such as the words in a user's query, into representations called tokens. Many models employ what are called attention mechanisms, which use these tokens to generate new text.

Typically, an AI chatbot writes new text based on text it has just seen, so it stores recent tokens in a memory called the KV cache to use later. The attention mechanism builds a grid that includes all the tokens in the cache, an "attention map," which records how strongly each token relates to every other token.

Understanding these relationships helps large language models generate human-like text.

However, when the cache becomes very large, the attention map grows even larger, which slows down computation.

Moreover, if the tokens required to encode content exceed the cache limit, the model's performance is impaired. For example, a widely used model is capable of storing 4,096 tokens, but an academic paper may contain about 10,000 tokens.

To get around these problems, researchers use a "sliding cache" that evicts the oldest tokens to make room for new ones. However, the model's performance often plummets as soon as the first token is evicted, which directly reduces the quality of newly generated words.
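The sliding cache described above can be sketched in a few lines. This is only an illustration of the eviction rule, not the researchers' code: the function name `sliding_cache` and the integer token IDs are hypothetical, and a real KV cache stores key and value tensors rather than IDs.

```python
from collections import deque


def sliding_cache(token_ids, capacity):
    """Return the tokens left in a fixed-size cache after streaming token_ids.

    Hypothetical helper for illustration: once the cache is full, each new
    token pushes out the oldest one.
    """
    cache = deque(maxlen=capacity)  # a full deque drops its oldest item on append
    for token_id in token_ids:
        cache.append(token_id)
    return list(cache)


remaining = sliding_cache(range(10), capacity=4)
print(remaining)  # [6, 7, 8, 9]
```

In this toy run the first token (ID 0) leaves the cache as soon as the fifth token arrives, which is the moment at which, according to the article, model quality tends to collapse.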

In this new paper, the researchers found that if they keep the first token in the sliding cache, the model maintains its performance even when the cache size is exceeded.

This finding may seem counterintuitive. The first word in a novel is rarely directly connected to the last word, so why would the first word be crucial to the model's ability to generate new words?

In their new paper, the researchers also reveal the reasons behind this phenomenon.

Attention sinks

Some models use a softmax operation in their attention mechanism, which assigns each token a score reflecting how strongly it relates to every other token. The softmax operation requires all attention scores to sum to 1. Since most tokens are not closely related to each other, their attention scores are very low. The model assigns any remaining attention score to the first token.
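A small numeric sketch may make the softmax constraint concrete. The raw scores below are invented for illustration (they do not come from the paper), with the first position deliberately given a large score to mimic a token that absorbs the leftover attention.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtracting the max keeps exp() numerically stable
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Made-up scores: position 0 plays the role of the first token.
raw_scores = [4.0, 0.1, 0.2, 0.1, 0.3]

weights = softmax(raw_scores)
without_first = softmax(raw_scores[1:])  # same scores after evicting position 0

print([round(w, 3) for w in weights])
print([round(w, 3) for w in without_first])
```

With these numbers the first position takes roughly 92 percent of the total weight. Once it is dropped, the remaining weakly related positions must share the whole budget of 1, so each weight jumps from about 0.02 to about 0.25. That shift is one plausible way to picture why evicting the first token disturbs the model.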

The researchers call this first token an "attention sink."

"We need an attention sink, and the model chooses the first token as this sink because it is visible to all other tokens. We found that in order to maintain the dynamics of the model, we had to always keep this attention sink in the cache," Han said.

In developing StreamingLLM, the researchers found that placing four attention sink tokens at the very beginning of the cache results in optimal performance.
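The eviction policy described here (always keep the first few tokens, slide over the rest) can be sketched as follows. This is a simplified illustration under stated assumptions, not the StreamingLLM implementation: `streaming_cache` and `num_sinks` are hypothetical names, tokens are plain integer IDs, and positional encoding is ignored.

```python
def streaming_cache(token_ids, capacity, num_sinks=4):
    """Keep the first num_sinks tokens plus the most recent ones.

    Hypothetical sketch: the initial tokens are never evicted, and the rest
    of the cache behaves like a sliding window over recent tokens.
    """
    if capacity <= num_sinks:
        raise ValueError("capacity must be larger than num_sinks")
    window = capacity - num_sinks
    sinks, recent = [], []
    for token_id in token_ids:
        if len(sinks) < num_sinks:
            sinks.append(token_id)  # the first tokens stay for good
            continue
        recent.append(token_id)
        if len(recent) > window:
            recent.pop(0)  # evict the oldest token that is not a sink
    return sinks + recent


print(streaming_cache(range(12), capacity=8))  # [0, 1, 2, 3, 8, 9, 10, 11]
```

Tokens 4 through 7 are evicted while tokens 0 through 3 remain, so the cache never grows beyond its capacity no matter how long the stream runs.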

They also found that the positional encoding of each token must stay the same as new tokens are added and old ones are evicted. For example, if the fifth token is evicted, the sixth token must remain encoded as the sixth, even though it is now the fifth token in the cache.

Combining these two ideas, they enabled StreamingLLM to maintain a coherent conversation while outperforming a popular approach that relies on recomputation.

For example, with a cache capacity of 256 tokens, the recomputation method takes 63 milliseconds to decode a new token, while StreamingLLM takes only 31 milliseconds. If the cache capacity is increased to 4,096 tokens, recomputation takes 1,411 milliseconds per new token, while StreamingLLM still takes only 65 milliseconds.

"StreamingLLM employs an innovative attention sink technique that solves the problem of performance and memory stability when processing up to 4 million tokens of text," said Yong Yang, Presidential Young Professor of Computer Science at the National University of Singapore, who was not involved in the work. "The capabilities of this technology are not only impressive, they also have game-changing potential, enabling StreamingLLM to be used in a wide range of AI domains. StreamingLLM's performance and versatility bode well for it to become a revolutionary technology that will drive how we use AI to generate applications."

Tianqi Chen, an assistant professor of machine learning and computer science at Carnegie Mellon University who was also not involved in the study, agreed. "StreamingLLM allows us to smoothly scale the conversation length of large language models. We have successfully used it to deploy Mistral models on the iPhone," he said.

The research team also explored the use of attention sinks during model training by prepending several placeholder tokens to all training samples.

They found that models trained with attention sinks were able to maintain performance with only one attention sink in the cache, whereas pretrained models normally need four to stabilize performance.

However, even though StreamingLLM enables a model to hold an ongoing conversation, the model still cannot remember words that are not stored in the cache. In the future, the researchers plan to address this limitation by exploring ways to retrieve evicted tokens or to let the model remember previous conversations.

StreamingLLM has been integrated into TensorRT-LLM, NVIDIA's large language model optimization library.

This work was supported in part by the MIT-IBM Watson AI Lab, the MIT Science Center, and the National Science Foundation.
