CFG-Zero-star: An Open Source Tool for Improving Image and Video Generation Quality

General Introduction

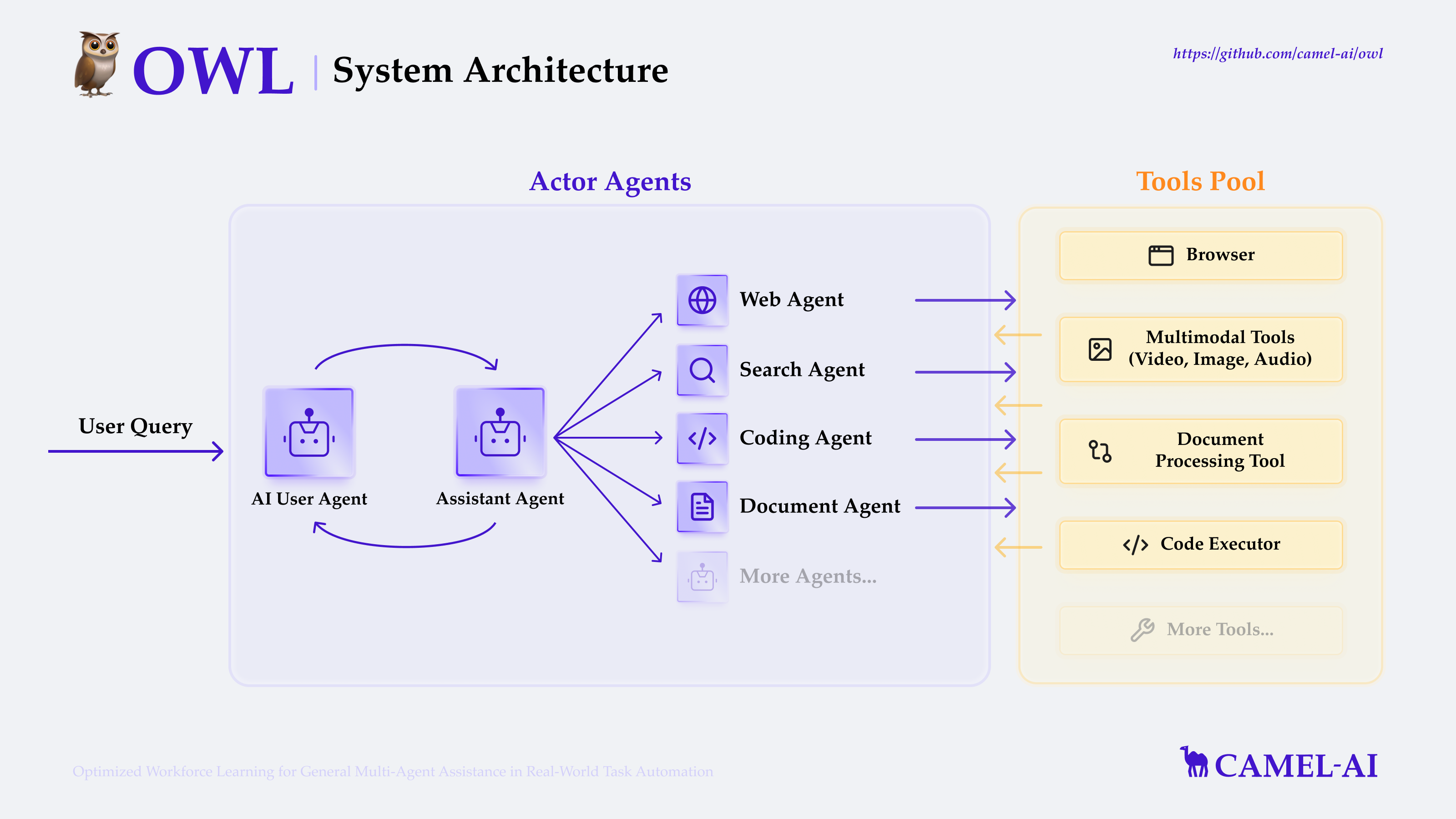

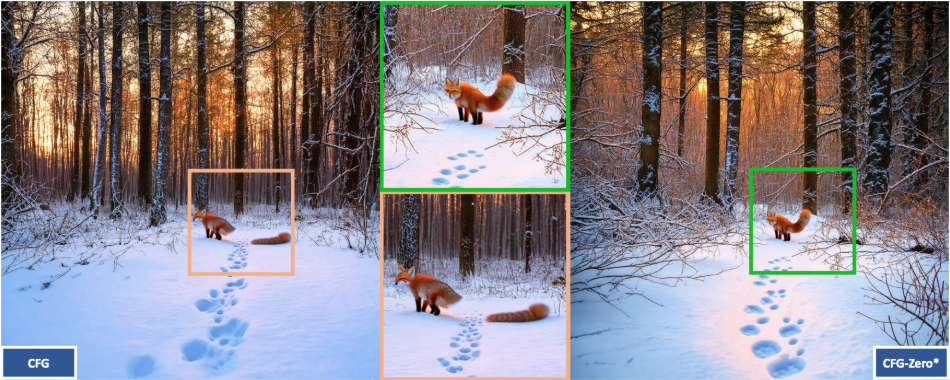

CFG-Zero-star is an open source project developed by Weichen Fan and the S-Lab team at Nanyang Technological University. It improves Classifier-Free Guidance (CFG) for flow matching models, raising the quality of generated images and videos through an optimized guidance strategy and a zero-initialization method. The tool supports text-to-image and text-to-video generation and can be adapted to Stable Diffusion 3, SD3.5, Wan-2.1, and other models. The code is fully open under the Apache-2.0 license, which permits both academic research and commercial use. The project provides online demos and detailed documentation for developers, researchers, and AI enthusiasts.

Feature List

- Improved CFG technique: optimizes classifier-free guidance to improve the quality of generated content and how closely it matches the text prompt.

- Image generation: generates high-quality images from text; compatible with Stable Diffusion 3 and SD3.5.

- Video generation: generates video from text; works with Wan-2.1 and other video models.

- Zero-initialization: zeroes out the prediction at the beginning of generation to improve the sample quality of flow matching models.

- Open source code: the complete code is provided, and users are free to download, modify, or contribute to it.

- Gradio demo interface: a built-in web interface for trying the tool without complicated configuration.

- Adjustable parameters: the guidance strength and the number of inference steps can be tuned to meet different needs.

- Third-party integrations: supported by the ComfyUI-KJNodes and Wan2.1GP extensions.
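The article does not spell out the formulas, so the following is only a minimal sketch of the two ideas named in the list above, based on how the method is commonly described: the unconditional prediction is rescaled by a least-squares factor before the usual CFG combination, and the prediction is set to zero for the first sampler step(s). The function names, the toy vectors, and the `zero_init_steps` default are illustrative assumptions, not the project's API.

```python
import numpy as np


def optimized_scale(v_cond: np.ndarray, v_uncond: np.ndarray) -> float:
    """Least-squares scale s that best aligns s * v_uncond with v_cond."""
    dot = float(np.sum(v_cond * v_uncond))
    sq_norm = float(np.sum(v_uncond * v_uncond)) + 1e-8  # avoid division by zero
    return dot / sq_norm


def cfg_zero_star(v_cond, v_uncond, guidance_scale, step, zero_init_steps=1):
    """Combine conditional and unconditional predictions for one sampler step."""
    if step < zero_init_steps:
        # Zero-initialization: the earliest step(s) predict a zero velocity.
        return np.zeros_like(v_cond)
    s = optimized_scale(v_cond, v_uncond)
    # Standard CFG corresponds to s = 1; here the unconditional branch is rescaled first.
    return s * v_uncond + guidance_scale * (v_cond - s * v_uncond)


# Toy predictions standing in for real model outputs (assumed values).
v_cond = np.array([1.0, 2.0])
v_uncond = np.array([2.0, 0.0])
print(cfg_zero_star(v_cond, v_uncond, 4.0, step=0))  # zeroed first step
print(cfg_zero_star(v_cond, v_uncond, 4.0, step=1))  # rescaled guidance
```

With `s` fixed at 1 and `zero_init_steps` set to 0, the function reduces to ordinary classifier-free guidance, which makes it easy to compare the two behaviors on the same inputs.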

Usage Guide

CFG-Zero-star is hosted on GitHub, and users need to set up the environment and run the code themselves. The installation and usage guide below is intended to help you get started quickly.

Installation Process

- Create a virtual environment

  - Install Anaconda if it is not already installed (download it from https://www.anaconda.com/).

  - Run the following command in the terminal to create the environment:

    conda create -n CFG_Zero_Star python=3.10

  - Activate the environment:

    conda activate CFG_Zero_Star

- Install PyTorch

  - Install the PyTorch build that matches your GPU's CUDA version. The official recommendation is CUDA 12.4:

    conda install pytorch==2.5.1 torchvision==0.20.1 torchaudio==2.5.1 pytorch-cuda=12.4 -c pytorch -c nvidia

  - To check CUDA version compatibility, see https://docs.nvidia.com/deploy/cuda-compatibility/.

  - Users without a GPU can install the CPU version:

    conda install pytorch==2.5.1 torchvision==0.20.1 torchaudio==2.5.1 -c pytorch

- Download the project code

  - Clone the repository with Git:

    git clone https://github.com/WeichenFan/CFG-Zero-star.git

  - Enter the project directory:

    cd CFG-Zero-star

- Install the dependencies

  - Run the following command to install the required libraries:

    pip install -r requirements.txt

  - If requirements.txt is missing, install the core dependencies manually:

    pip install torch diffusers gradio numpy imageio

- Prepare the model files

  - Download the Stable Diffusion 3 or SD3.5 model weights from Hugging Face, for example https://huggingface.co/stabilityai/stable-diffusion-3-medium-diffusers for SD3.

  - Place the model files in the project directory, or specify their path in the code.
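Once the installation steps are done, a short script can confirm that the environment is usable before any model is loaded. The sketch below is an assumption about what is worth checking, not part of the project: it looks for the packages named in the manual install command and reports whether PyTorch can see a CUDA device. The helper names `missing_packages` and `cuda_summary` are hypothetical.

```python
import importlib.util

# Package names taken from the manual install command in the installation steps.
REQUIRED = ["torch", "diffusers", "gradio", "numpy", "imageio"]


def missing_packages(names):
    """Return the packages from `names` that cannot be found in this environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]


def cuda_summary():
    """Describe the PyTorch/CUDA setup, or explain why it cannot be checked."""
    if importlib.util.find_spec("torch") is None:
        return "torch is not installed"
    import torch  # imported lazily so the script still runs without PyTorch

    if torch.cuda.is_available():
        gpu = torch.cuda.get_device_name(0)
        return f"torch {torch.__version__}, CUDA {torch.version.cuda}, GPU: {gpu}"
    return f"torch {torch.__version__}, CPU only"


print("Missing packages:", missing_packages(REQUIRED) or "none")
print(cuda_summary())
```

If the summary reports "CPU only" on a machine with an NVIDIA GPU, the installed PyTorch build most likely does not match the local CUDA driver, and the compatibility page linked in the PyTorch step is the place to check.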

Using the Main Functions

The core function of CFG-Zero-star is generating images and videos. The specific steps are described below.

Generating images

- Configure the parameters

  - Open demo.py and set the prompt:

    prompt = "a forest under a starry sky"

  - Enable the CFG-Zero-star optimization:

    use_cfg_zero_star = True

- Run the generation

  - Enter the following in the terminal:

    python demo.py

  - The generated image is displayed or saved to the specified path.

- Adjust the parameters

  - guidance_scale: controls how strongly the text prompt steers generation; default 4.0, can be set from 1 to 20.

  - num_inference_steps: the number of inference steps; default 28. More steps generally improve quality at the cost of longer generation time.
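For readers who want to see where these two parameters end up, the sketch below validates them against the ranges given above and passes them to the stock `StableDiffusion3Pipeline` from diffusers. It is an illustration under assumptions: `build_generation_kwargs` and `generate_image` are hypothetical helpers, the stock pipeline does not apply the CFG-Zero-star changes, and how demo.py wires these values internally is not shown in this article.

```python
def build_generation_kwargs(prompt, guidance_scale=4.0, num_inference_steps=28):
    """Validate the tunable parameters and return keyword arguments for a pipeline call."""
    if not 1.0 <= guidance_scale <= 20.0:
        raise ValueError("guidance_scale should be between 1 and 20")
    if num_inference_steps < 1:
        raise ValueError("num_inference_steps must be at least 1")
    return {
        "prompt": prompt,
        "guidance_scale": guidance_scale,
        "num_inference_steps": num_inference_steps,
    }


def generate_image(model_path, output_path="output.png", **kwargs):
    """Run a stock SD3 pipeline; this does NOT include the CFG-Zero-star modification."""
    import torch  # heavy imports are deferred until generation is requested
    from diffusers import StableDiffusion3Pipeline

    pipe = StableDiffusion3Pipeline.from_pretrained(model_path, torch_dtype=torch.float16)
    pipe = pipe.to("cuda")
    image = pipe(**build_generation_kwargs(**kwargs)).images[0]
    image.save(output_path)
    return output_path


# Only the validation step runs here; generate_image needs a GPU and model weights.
print(build_generation_kwargs("a forest under a starry sky"))
```

Calling `generate_image("stabilityai/stable-diffusion-3-medium-diffusers", prompt="a forest under a starry sky")` would download the weights on first use, so it is left out of the runnable part of the example.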

Generating videos

- Select the model

  - In demo.py, set:

    model_name = "wan-t2v"

    prompt = "a river flowing through a valley"

- Run the generation

  - Execute:

    python demo.py

  - The video is saved in MP4 format; the default path is generated_videos/{seed}_CFG-Zero-Star.mp4.

- Adjust the parameters

  - height and width: set the resolution; default 480x832.

  - num_frames: the number of frames; default 81.

  - fps: the frame rate; default 16.
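The video defaults above translate directly into clip length and raw data size, which helps when choosing values. The following is a small worked example using only the numbers listed; the helper names are hypothetical, and the size estimate assumes uncompressed 8-bit RGB frames rather than the final MP4 file.

```python
def clip_duration_seconds(num_frames=81, fps=16):
    """Length of the generated clip in seconds."""
    return num_frames / fps


def raw_frame_bytes(height=480, width=832, num_frames=81, channels=3):
    """Size of the uncompressed 8-bit RGB frames, before MP4 encoding."""
    return height * width * channels * num_frames


def default_output_path(seed, folder="generated_videos"):
    """Fill the {seed} placeholder of the default output path."""
    return f"{folder}/{seed}_CFG-Zero-Star.mp4"


print(clip_duration_seconds())          # 5.0625
print(raw_frame_bytes() / 2**20)        # size in MiB
print(default_output_path(42))          # generated_videos/42_CFG-Zero-Star.mp4
```

With the defaults, 81 frames at 16 fps give a clip of just over five seconds; doubling num_frames doubles both the duration and the amount of data the model has to produce.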

Demo with Gradio

- Launch the interface

  - Run:

    python demo.py

  - Open http://127.0.0.1:7860 in your web browser.

- Procedure

  - Enter the prompt and select the model (SD3, SD3.5, or Wan-2.1).

  - Check "Use CFG Zero Star", adjust the parameters, and submit.

  - The result is displayed in the interface.
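To make the interface steps more concrete, here is a minimal Gradio sketch with the same controls: a prompt box, a model selector, the "Use CFG Zero Star" checkbox, and the two tunable parameters. It is not the project's demo.py; the handler `describe_request` is a placeholder that only echoes the chosen settings, and the slider range for the step count is an assumption.

```python
def describe_request(prompt, model, use_cfg_zero_star, guidance_scale, steps):
    """Placeholder handler: echo the chosen settings instead of running a model."""
    mode = "CFG-Zero-star" if use_cfg_zero_star else "standard CFG"
    return f"{model} | {mode} | scale={guidance_scale} | steps={int(steps)} | {prompt}"


def build_demo():
    """Assemble the interface; the real demo would call a generation function here."""
    import gradio as gr  # imported lazily; requires `pip install gradio`

    return gr.Interface(
        fn=describe_request,
        inputs=[
            gr.Textbox(label="Prompt"),
            gr.Dropdown(choices=["SD3", "SD3.5", "Wan-2.1"], value="SD3", label="Model"),
            gr.Checkbox(value=True, label="Use CFG Zero Star"),
            gr.Slider(minimum=1, maximum=20, value=4.0, label="guidance_scale"),
            gr.Slider(minimum=1, maximum=100, value=28, step=1, label="num_inference_steps"),
        ],
        outputs=gr.Textbox(label="Result"),
    )


# To start the UI locally on the address used above:
# build_demo().launch(server_name="127.0.0.1", server_port=7860)
print(describe_request("a forest under a starry sky", "SD3", True, 4.0, 28))
```

Keeping the handler separate from the interface definition means the same function can be exercised from a script or a test without starting a web server.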

Integration of third-party tools

- ComfyUI-KJNodes

  - Download https://github.com/kijai/ComfyUI-KJNodes and follow its instructions for installation.

  - Load the CFG-Zero-star node in ComfyUI.

- Wan2.1GP

  - Download https://github.com/deepbeepmeep/Wan2GP and configure it for use.

Notes

- Generation is computationally intensive; an NVIDIA GPU with at least 8 GB of GPU memory is recommended.

- The model must be downloaded on the first run, so keep your internet connection active.

- The code is released under the Apache-2.0 license, and the project prohibits generating pornographic, violent, or similar content.

With these steps, you can generate high-quality images and videos with CFG-Zero-star. Running it requires some technical background, but the documentation and demo interface lower the barrier to entry.

Application Scenarios

- Academic research

  Researchers can use it to test the effectiveness of flow matching models and to analyze the improvements brought by the optimized CFG and zero-initialization; it is applicable to the field of computer vision.

- Content creation

  Creators can generate images or videos from text, such as a "flying dragon", for use in art design or short video clips.

- Model development

  Developers can use this tool to optimize their own generative models, tuning parameters to improve generation quality.

QA

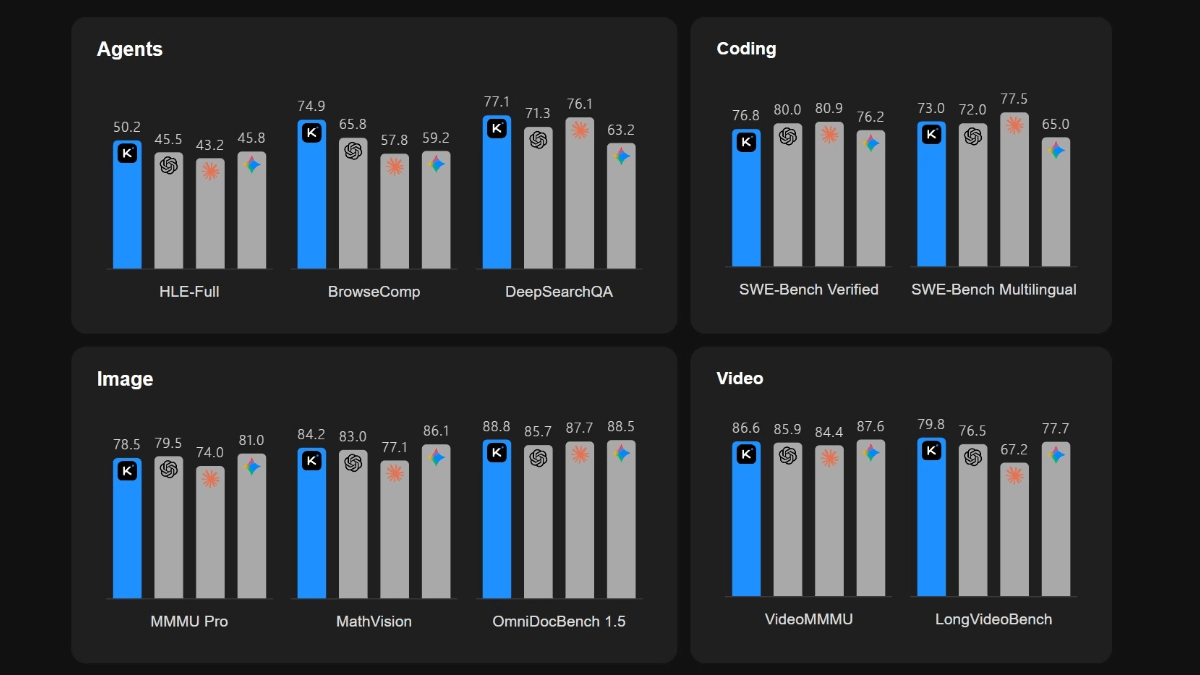

- What problem does CFG-Zero-star solve?

  It optimizes the CFG technique in flow matching models and improves the quality and text alignment of the generated images and videos.

- What models are supported?

  Models such as Stable Diffusion 3, SD3.5, and Wan-2.1 are supported.

- What is the point of zero-initialization?

  Zeroing out predictions early in generation helps under-trained models produce better samples.

- How can I tell if a model is under-trained?

  If enabling zero-initialization improves the results significantly, the model may not be fully trained.

© Copyright notice

The copyright of this article belongs to AI Sharing Circle. Please do not reproduce it without permission.
