BuffGPT: A Low-Code Development Platform for Enterprise-Grade Generative AI Applications

General Introduction

BuffGPT is an open-source AI application development platform built on large language models (LLMs). It provides out-of-the-box features such as data processing, model invocation, RAG retrieval, and visual workflow orchestration to help users build and operate generative AI applications. The platform supports private deployment, which helps enterprises keep their data secure and compliant, and it can be embedded in existing business processes. Whether the goal is a dialog bot, creative content generation, or an enterprise AI assistant, BuffGPT targets industries such as e-commerce, finance, and education, with the aim of improving efficiency and supporting growth.

Building an Enterprise AI Agent with Zero Code

End-to-end AI workflow orchestration

Enterprise Knowledge Base and AI Assistant

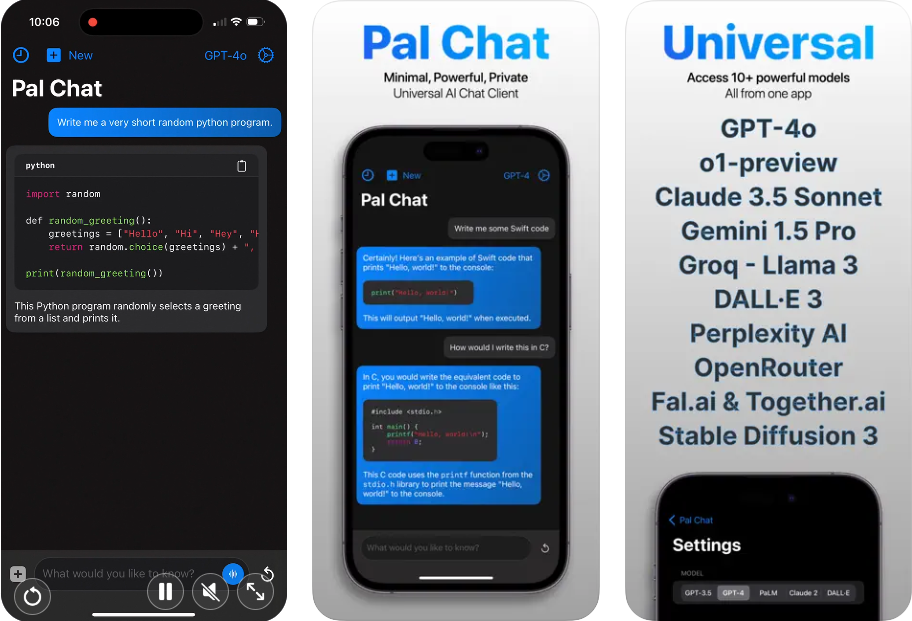

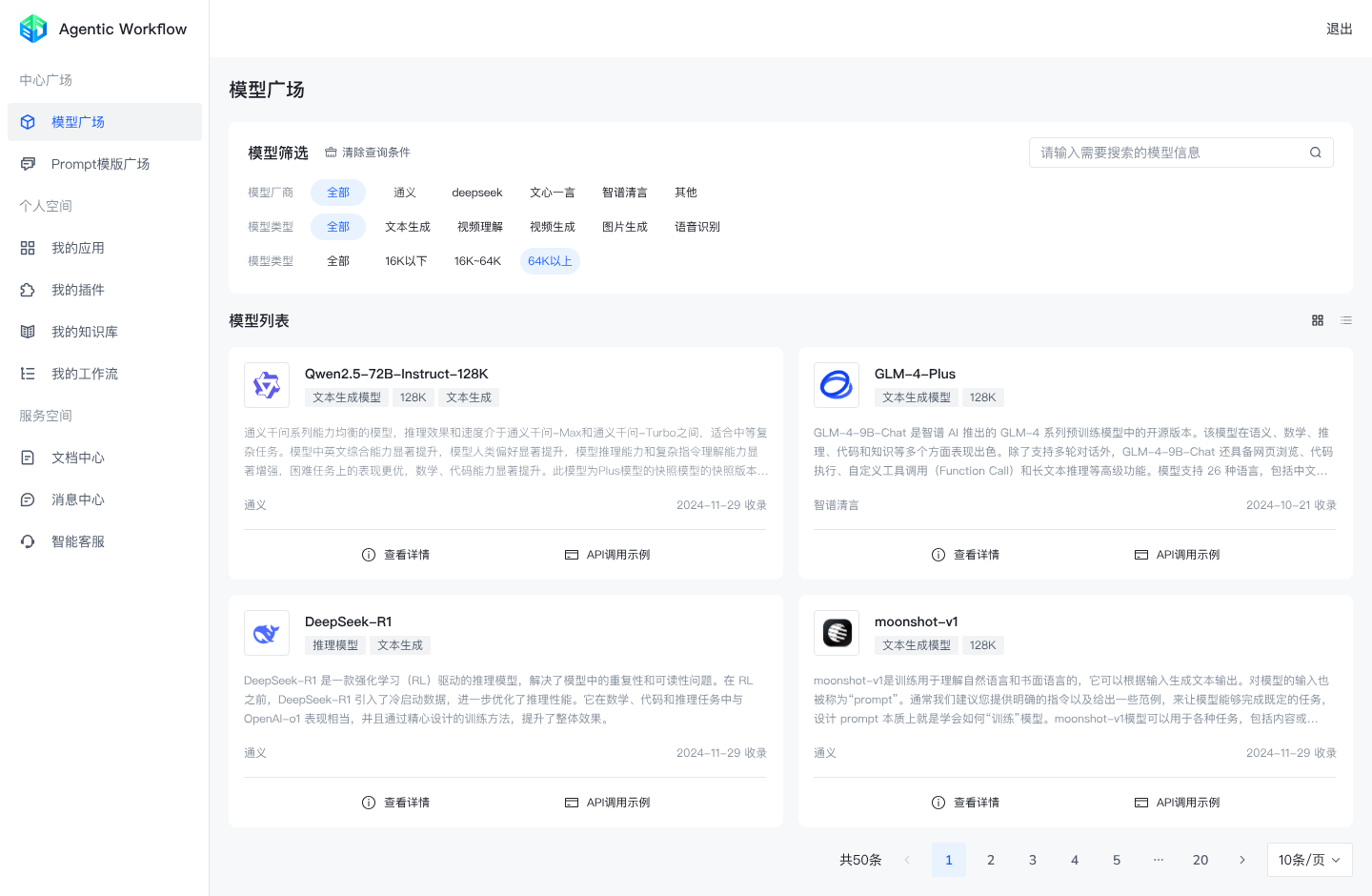

Enterprise LLM Modeling Platform

Feature List

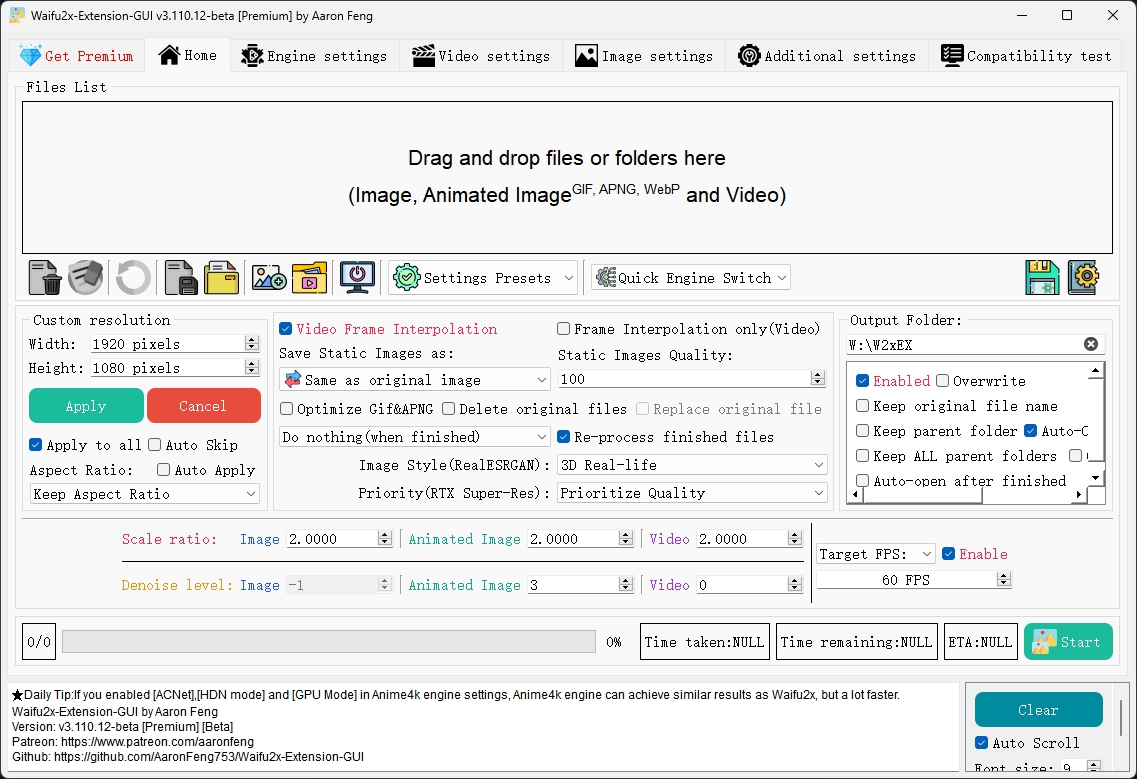

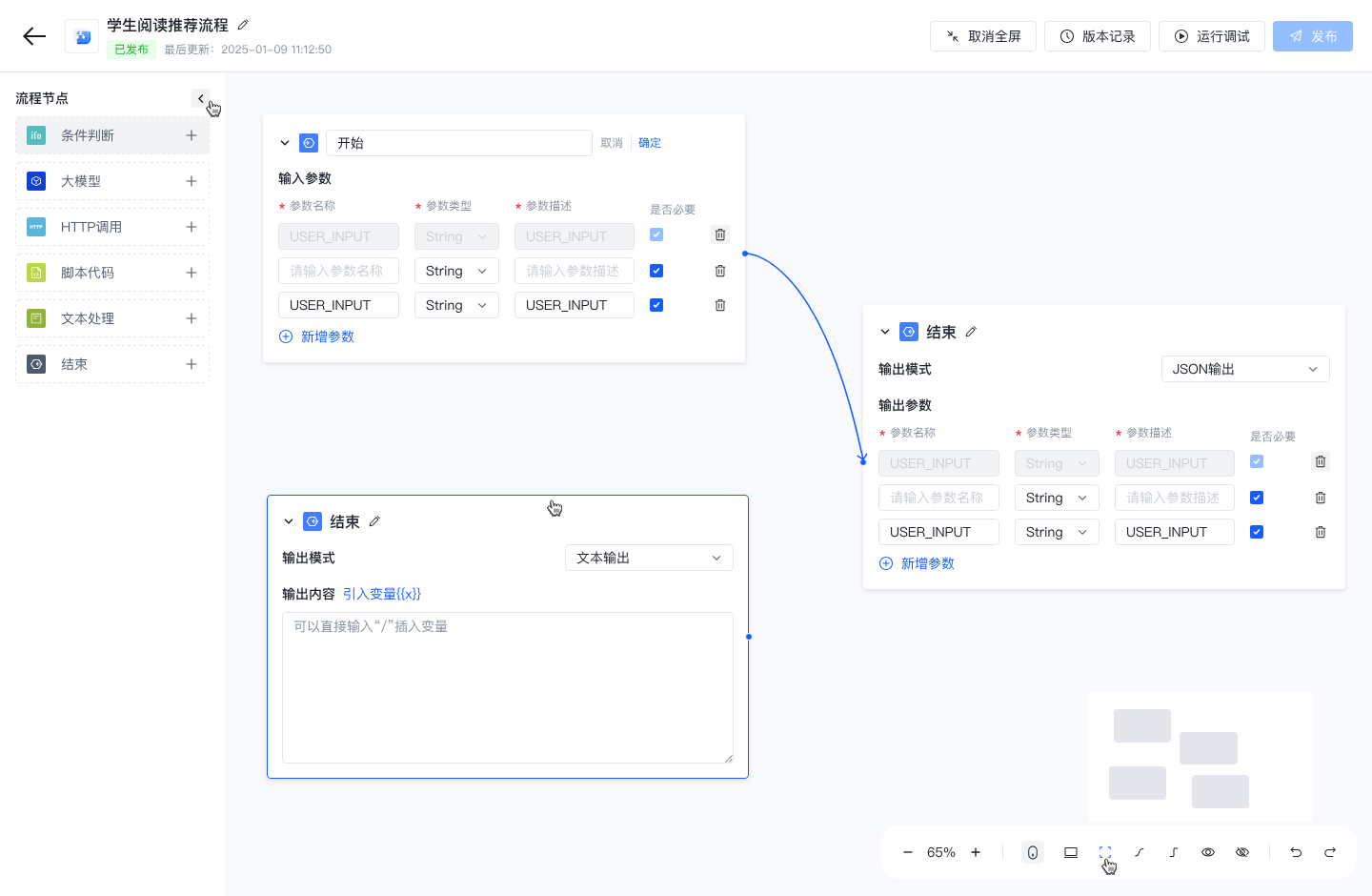

- Visual AI workflow orchestration: Design complex AI task flows through a drag-and-drop interface.

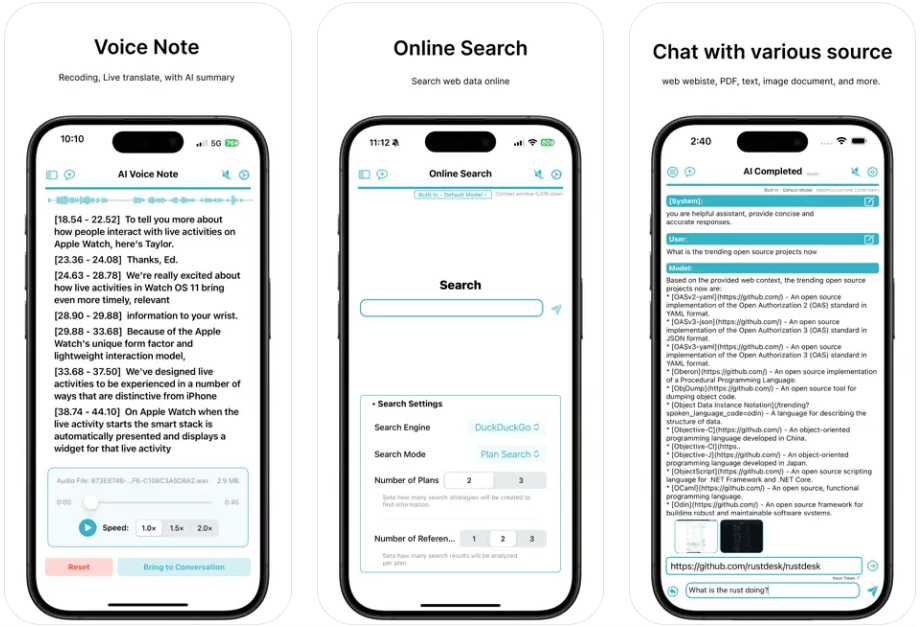

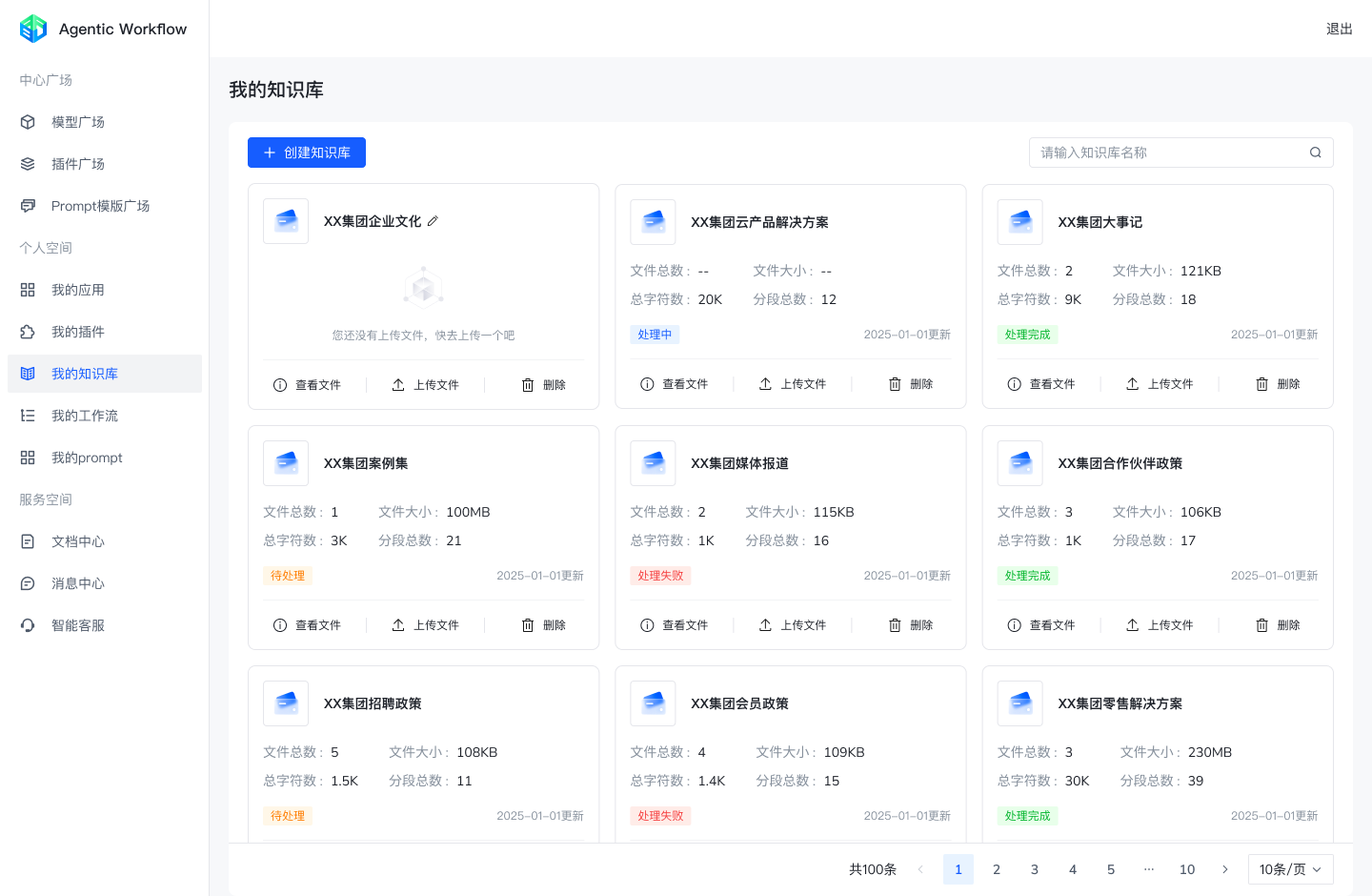

- RAG retrieval: Combines knowledge bases with generation for accurate information retrieval and answers.

- Prompt IDE: Provides prompt editing tools to optimize model output.

- LLMOps: Supports model deployment and management to keep operations stable.

- BaaS solutions: Provides backend-as-a-service to simplify the development process.

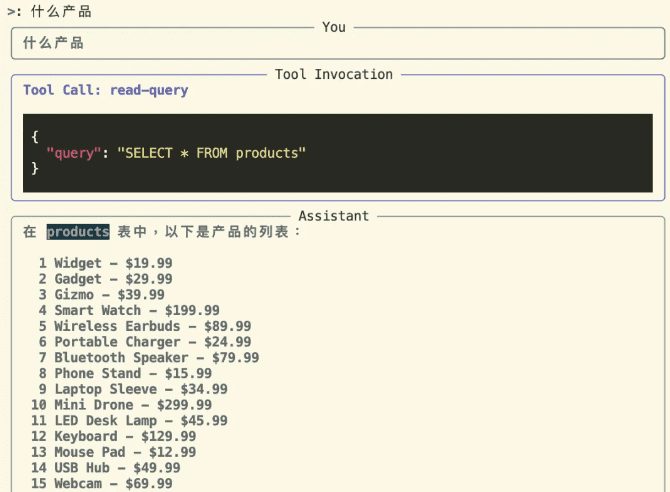

- LLM Agent: Builds semi-autonomous agents to accomplish specific business tasks.

- Multimodal collaborative reasoning: Generates results by fusing multiple data sources such as text and images.

- Unlimited-length content generation: Automatically generates long documents, or parses them to extract key information.

- Private deployment: Supports on-premises installation so that enterprises can keep their data secure.

Usage Guide

How to get started with BuffGPT

BuffGPT is an open-source platform. To get started, either register an account or download the installation package. The detailed steps are below:

1. Registration and login

- Steps:

- Go to "https://buffgpt.agentsyun.com/" and click on "Free Registration".

- Enter your email and password to complete the account creation.

- After successful registration, use the credentials to log in to the platform.

- Note: If you choose private deployment, contact the vendor for the installation package and license.

2. Installation of the private version (optional)

- Steps:

- Download the BuffGPT private deployment package from the official website.

- Unzip the installation file on the enterprise server and ensure that the system meets the minimum configuration (16GB RAM, GPU support recommended).

- Run the installation script and follow the prompts to configure the database and network environment.

- After installation, access the platform via a local address (e.g. "http://localhost:8080").

- Note: Before installation, make sure the firewall allows traffic on the relevant ports (e.g. 8080 by default).
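Once the platform is installed, a quick way to confirm that the port is actually reachable through the firewall is a plain TCP connection test. The following is a minimal sketch using only the Python standard library; it is not part of BuffGPT, the helper name `is_port_open` is hypothetical, and the host and port are simply the example values mentioned above.

```python
import socket


def is_port_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        # create_connection completes the TCP handshake, so success means
        # a service is listening and the packets were not blocked.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False


if __name__ == "__main__":
    # 8080 is the example default port from the steps above.
    status = "reachable" if is_port_open("localhost", 8080) else "not reachable"
    print(f"localhost:8080 is {status}")
```

Running the same check from another machine on the network, with the server's address in place of `localhost`, tests the firewall rule rather than only the local service.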

3. Creating AI applications

- Steps:

- After logging in, click "New Project" or "Create Agent".

- Choose a template (e.g. "Dialog Bot") or start from scratch.

- Drag and drop the "Workflow" module in the visualization interface to set up the task logic (e.g., "Data Entry → RAG Retrieval → Content Generation").

- Save and click "Run" to test the application.

- Highlight: The low-code interface requires no programming knowledge to get started.
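Conceptually, the "Data Entry → RAG Retrieval → Content Generation" flow is a chain of nodes in which each node receives the previous node's output. The sketch below illustrates that idea in plain Python. It is not BuffGPT's implementation or API: every name in it (`run_workflow`, the three step functions, the sample document) is hypothetical, and the retrieval and generation steps are stubs standing in for the platform's real modules.

```python
from typing import Callable, Dict, List

# A workflow state is a plain dict handed from one node to the next.
State = Dict[str, str]
Step = Callable[[State], State]


def data_entry(state: State) -> State:
    # Normalize the raw user input.
    return {**state, "question": state["raw_input"].strip()}


def rag_retrieval(state: State) -> State:
    # Stub: a real node would query the knowledge base here.
    # Hypothetical sample data, keyed by topic.
    docs = {"return policy": "Items can be returned within 30 days."}
    hits = [text for key, text in docs.items() if key in state["question"].lower()]
    return {**state, "context": " ".join(hits)}


def content_generation(state: State) -> State:
    # Stub: a real node would call an LLM with the question and context.
    answer = state["context"] or "No matching document found."
    return {**state, "answer": answer}


def run_workflow(steps: List[Step], state: State) -> State:
    # Run the nodes in order, feeding each one the previous node's output.
    for step in steps:
        state = step(state)
    return state


result = run_workflow(
    [data_entry, rag_retrieval, content_generation],
    {"raw_input": "  What is your return policy?  "},
)
print(result["answer"])
```

Keeping each node a function from state to state is what makes drag-and-drop rewiring possible in principle: reordering or swapping nodes only changes the list passed to `run_workflow`.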

4. Configuring the knowledge base and RAG retrieval

- Steps:

- Select "Knowledge Base Management" in the project settings.

- Click "Upload" to add files; PDF, Word, images, and other formats are supported.

- After uploading, enable the "RAG Pipeline" to optimize search accuracy.

- Usage scenario: After a product brochure is uploaded, the AI can answer customer inquiries based on its contents.
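To make the retrieval step concrete, here is a toy sketch of how a RAG pipeline can pick the most relevant passage for a question before generation. It ranks passages by simple word overlap (Jaccard similarity). Production systems typically use embedding-based search instead, and the source does not describe how BuffGPT's "RAG Pipeline" works internally, so treat this purely as an illustration. All function names and the sample brochure text are hypothetical.

```python
import re
from typing import List, Tuple


def tokenize(text: str) -> set:
    # Lowercase the text and keep alphanumeric word tokens.
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, passages: List[str], top_k: int = 1) -> List[Tuple[float, str]]:
    """Rank passages by Jaccard overlap with the question."""
    q = tokenize(question)
    scored = []
    for passage in passages:
        p = tokenize(passage)
        union = q | p
        score = len(q & p) / len(union) if union else 0.0
        scored.append((score, passage))
    # Highest score first; the sort is stable, so ties keep input order.
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:top_k]


def build_prompt(question: str, passages: List[str]) -> str:
    # Place the retrieved text in front of the question for the model.
    context = "\n".join(text for _, text in retrieve(question, passages))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


# Hypothetical brochure passages.
brochure = [
    "The X1 blender has a 1200 W motor and a 2 litre jug.",
    "The warranty covers parts and labour for two years.",
]
print(build_prompt("How long is the warranty?", brochure))
```

The prompt that reaches the model contains only the best-matching passage, which is why keeping the knowledge base current has a direct effect on answer quality.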

5. Editing prompts (Prompt IDE)

- Steps:

- Go to the "Prompt IDE" module.

- Enter or adjust prompts, such as "Generate a 500-word product description that is logical and clear".

- Test the output and save the best version.

- Tip: Iterating on prompts can improve the quality of the generated content.
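Prompt iteration is easier to manage when each trial is recorded with a score. The sketch below is one hypothetical way to do that outside the platform: it scores an output by how close it is to the requested 500 words and keeps track of the best prompt so far. The scoring rule, the `Trial` class, and the placeholder outputs are all assumptions made for illustration; real evaluation would also consider content quality.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Trial:
    prompt: str
    output: str
    score: float


def length_score(text: str, target_words: int = 500) -> float:
    """Return 1.0 at exactly target_words words, decreasing linearly to 0.0."""
    n = len(text.split())
    return max(0.0, 1.0 - abs(n - target_words) / target_words)


def record_trial(trials: List[Trial], prompt: str, output: str) -> Trial:
    # Score the output and append the trial to the history.
    trial = Trial(prompt, output, length_score(output))
    trials.append(trial)
    return trial


def best_trial(trials: List[Trial]) -> Trial:
    return max(trials, key=lambda t: t.score)


trials: List[Trial] = []
# The outputs below are placeholders for text copied from a test run.
record_trial(trials, "Describe the product.", "word " * 120)
record_trial(
    trials,
    "Generate a 500-word product description that is logical and clear.",
    "word " * 480,
)
print(best_trial(trials).prompt)
```

Here the more specific prompt wins because its output lands closer to the target length.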

6. Deployment and optimization

- Steps:

- Select the deployment method (cloud or local) in the "LLMOps" module.

- Configure operational parameters (such as the number of concurrent users).

- Once deployed, monitor performance in real time and tune the parameters as needed.

- Result: Supports high-concurrency scenarios while keeping the system stable.
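A parameter such as "number of concurrent users" usually translates into a cap on how many requests are processed at the same time. The sketch below shows that general technique with `asyncio.Semaphore` from the Python standard library. It is an illustration of the concept, not BuffGPT's implementation, and the names `handle_request`, `serve`, and `max_concurrent` are hypothetical.

```python
import asyncio


async def handle_request(user_id: int, limiter: asyncio.Semaphore, stats: dict) -> str:
    # Wait here if max_concurrent requests are already in flight.
    async with limiter:
        stats["active"] += 1
        stats["peak"] = max(stats["peak"], stats["active"])
        await asyncio.sleep(0.01)  # stand-in for model inference time
        stats["active"] -= 1
    return f"reply for user {user_id}"


async def serve(num_users: int, max_concurrent: int) -> dict:
    limiter = asyncio.Semaphore(max_concurrent)
    stats = {"active": 0, "peak": 0}
    # Launch all requests at once; the semaphore throttles them.
    replies = await asyncio.gather(
        *(handle_request(uid, limiter, stats) for uid in range(num_users))
    )
    stats["served"] = len(replies)
    return stats


stats = asyncio.run(serve(num_users=20, max_concurrent=5))
print(stats)
```

All 20 requests are served, but the recorded peak never exceeds the configured limit, which is the behavior that keeps a service stable under load.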

Main Features in Detail

- Visual AI workflow orchestration

Users can design an AI task flow through a drag-and-drop interface. For example, to create an e-commerce customer service Agent: drag in the "User Input" module, connect it to "RAG Retrieval" to fetch product information, and then connect that to "Generate Reply" to output the answer. The whole process requires no code.

- RAG retrieval

The RAG (Retrieval-Augmented Generation) feature improves answer accuracy by incorporating external knowledge bases. After corporate documents are uploaded, the AI can cite specific data when answering questions, such as "According to the 2023 financial report, the company's revenues grew by 20%."

- Unlimited-length content generation

Given the task "Summarize a 50-page report", the AI extracts the key points and generates a concise summary; given "Write a marketing plan", it generates a long, well-structured document that follows the stated logic and style requirements.

- Private deployment

Enterprises can deploy BuffGPT on internal servers so that data does not leave the local network. After installation, the system supports integration with existing enterprise tools (e.g. ERP, CRM) to bring AI into business processes.
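For the long-document summarization described above, a common general technique is to split the text into chunks, summarize each chunk, and then summarize the combined summaries. The source does not say how BuffGPT handles long inputs, so the sketch below is only an illustration of that map-reduce pattern. The function names are hypothetical, and `first_words` is a stand-in for a real LLM call.

```python
from typing import Callable, List


def split_into_chunks(text: str, max_words: int) -> List[str]:
    """Split text into consecutive chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def summarize_long(text: str, summarize: Callable[[str], str], max_words: int = 200) -> str:
    """Map-reduce summary: summarize each chunk, then summarize the summaries.

    Assumes summarize() returns text shorter than its input; otherwise the
    recursion would not terminate.
    """
    chunks = split_into_chunks(text, max_words)
    if len(chunks) <= 1:
        return summarize(text)
    partial = [summarize(chunk) for chunk in chunks]
    # Recurse in case the combined partial summaries are still too long.
    return summarize_long(" ".join(partial), summarize, max_words)


def first_words(text: str, limit: int = 20) -> str:
    # Stand-in for an LLM call: keep only the first `limit` words.
    return " ".join(text.split()[:limit])


# A synthetic 5000-word "report" for demonstration.
report = " ".join(f"w{i}" for i in range(5000))
summary = summarize_long(report, first_words)
print(len(summary.split()))
```

Because each pass only ever sends one chunk to the summarizer, the input length is bounded by `max_words` regardless of how long the original document is.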

Examples of Industry Applications

- E-commerce: Build an AI customer service agent that recommends products and handles returns in real time, with a reported average daily efficiency increase of 60%.

- Finance: Build a contract review Agent that automatically flags risk points, with a reported accuracy rate of 89%.

- Education: Generate interactive courseware and practice questions, with a reported 50% reduction in lesson preparation time.

Usage Recommendations

- Beginners: Start with the "Dialog Bot" template to become familiar with workflow design.

- Power users: Combine plug-ins and APIs to develop complex applications such as risk prediction models.

- Optimization tips: Regularly update the knowledge base and adjust the prompts so that the output stays relevant to your needs.

© Copyright notice

Copyright of this article belongs to AI Sharing Circle; please do not reproduce it without permission.
