BetterWhisperX: Automatic speech recognition with speaker diarization and accurate word-level timestamps

General Introduction

BetterWhisperX is an optimized fork of the WhisperX project focused on efficient, accurate automatic speech recognition (ASR). It is maintained by Federico Torrielli, who aims to keep it up to date and to keep improving its performance. BetterWhisperX combines several techniques: phoneme-level forced alignment, batched inference driven by voice activity detection (VAD), and speaker diarization. Besides fast transcription (up to 70x real time with the large-v2 model), it provides accurate word-level timestamps and multi-speaker labeling. It uses faster-whisper as its backend, which needs comparatively little GPU memory even for large models, giving a very good ratio of speed to resource use.
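To put the "70x real time" figure in concrete terms, the speed-up factor divides the audio duration. Assuming the best-case rate actually holds (real throughput depends on the GPU, batch size and audio content), a one-hour recording would take under a minute:

```latex
t_{\text{proc}} = \frac{t_{\text{audio}}}{70}
  = \frac{3600\ \text{s}}{70} \approx 51.4\ \text{s}
```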

Features

- Fast speech-to-text: up to 70x real-time transcription with the large-v2 model.

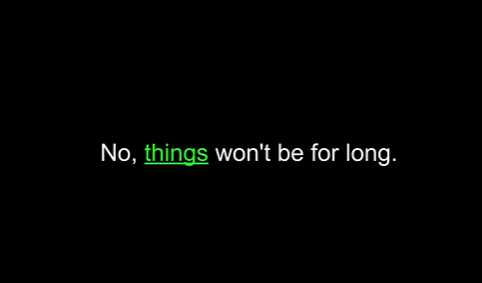

- Word-level timestamps: Provides accurate word-level timestamps via wav2vec2 alignment.

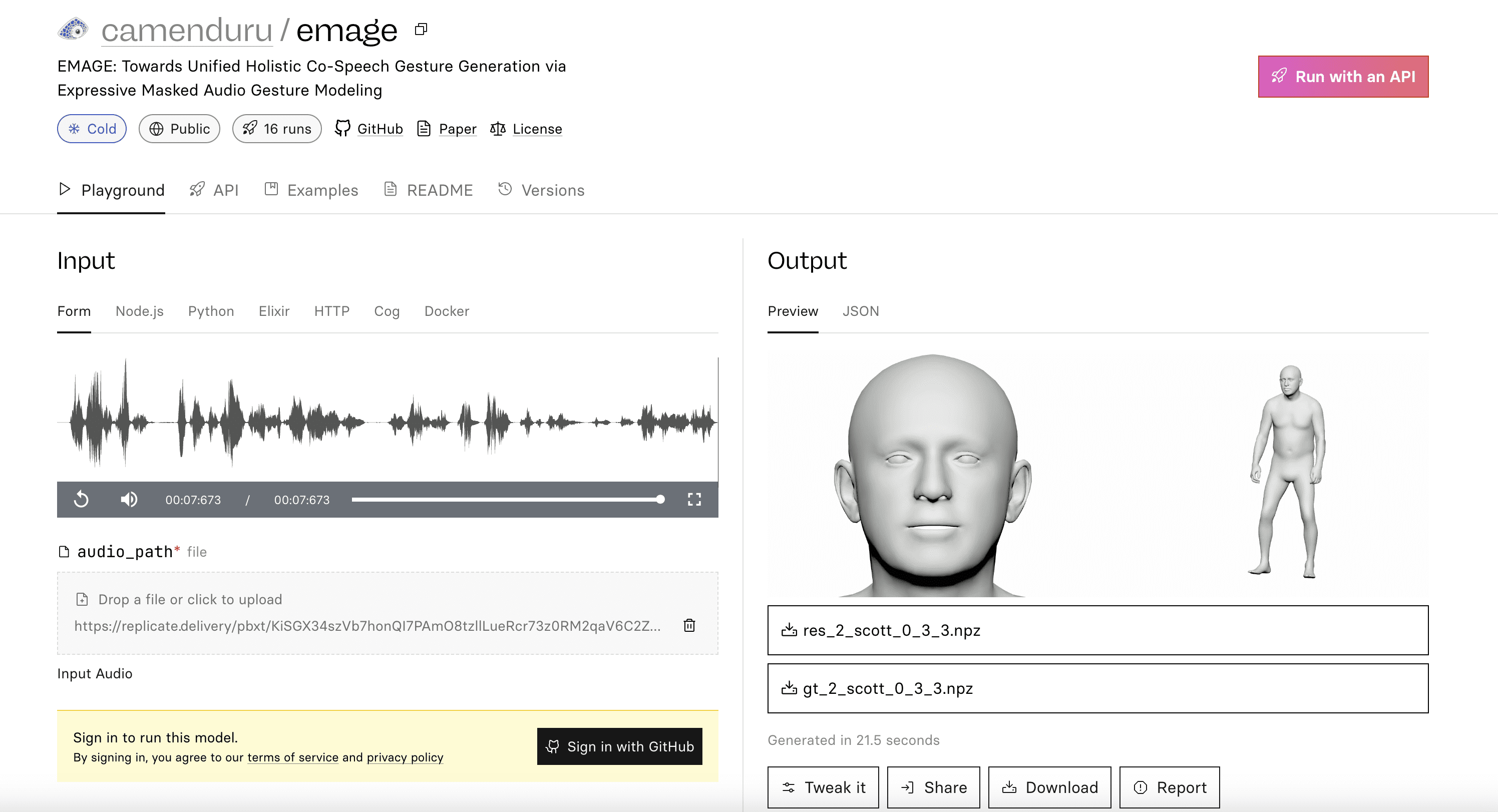

- Multi-speaker recognition: speaker diarization and labeling with pyannote-audio.

- Voice activity detection (VAD): VAD preprocessing reduces hallucinated text and enables batching without a significant increase in word error rate.

- Batch inference: audio chunks are transcribed in batches to improve throughput.

- Compatibility: runs on PyTorch 2.0 and Python 3.10.
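VAD-based batching follows the "cut & merge" idea described for WhisperX: speech regions found by VAD are limited to at most 30 seconds (Whisper's input window), and neighbouring regions are merged into chunks that can be transcribed in parallel. Below is a simplified, library-free sketch of that grouping step. The function name `cut_and_merge` is hypothetical, and it cuts over-long segments at fixed boundaries rather than at low-speech points, so it is not BetterWhisperX's actual code.

```python
from typing import List, Tuple

Segment = Tuple[float, float]  # (start_seconds, end_seconds)


def cut_and_merge(speech: List[Segment], max_len: float = 30.0) -> List[Segment]:
    """Group VAD speech segments into chunks no longer than max_len seconds.

    Simplified sketch: over-long segments are cut at fixed max_len
    boundaries (a real implementation would cut at low-speech points),
    then neighbouring segments are merged greedily while the merged
    chunk still fits within max_len.
    """
    # 1. Cut: split any segment longer than max_len into max_len pieces.
    pieces: List[Segment] = []
    for start, end in sorted(speech):
        while end - start > max_len:
            pieces.append((start, start + max_len))
            start += max_len
        pieces.append((start, end))

    # 2. Merge: extend the current chunk while it stays within max_len.
    chunks: List[Segment] = []
    for start, end in pieces:
        if chunks and end - chunks[-1][0] <= max_len:
            chunks[-1] = (chunks[-1][0], end)
        else:
            chunks.append((start, end))
    return chunks
```

For example, `cut_and_merge([(0.0, 4.0), (5.0, 12.0), (20.0, 28.0), (31.0, 70.0)])` yields three chunks, each at most 30 seconds long, which could then be fed to the model as one batch.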

How to Use

Workflow Overview

- Prepare the audio file: use a clearly recorded file, for example WAV or MP3.

- Load the model: choose a model that fits your accuracy needs and hardware (e.g. large-v2) and load it into memory.

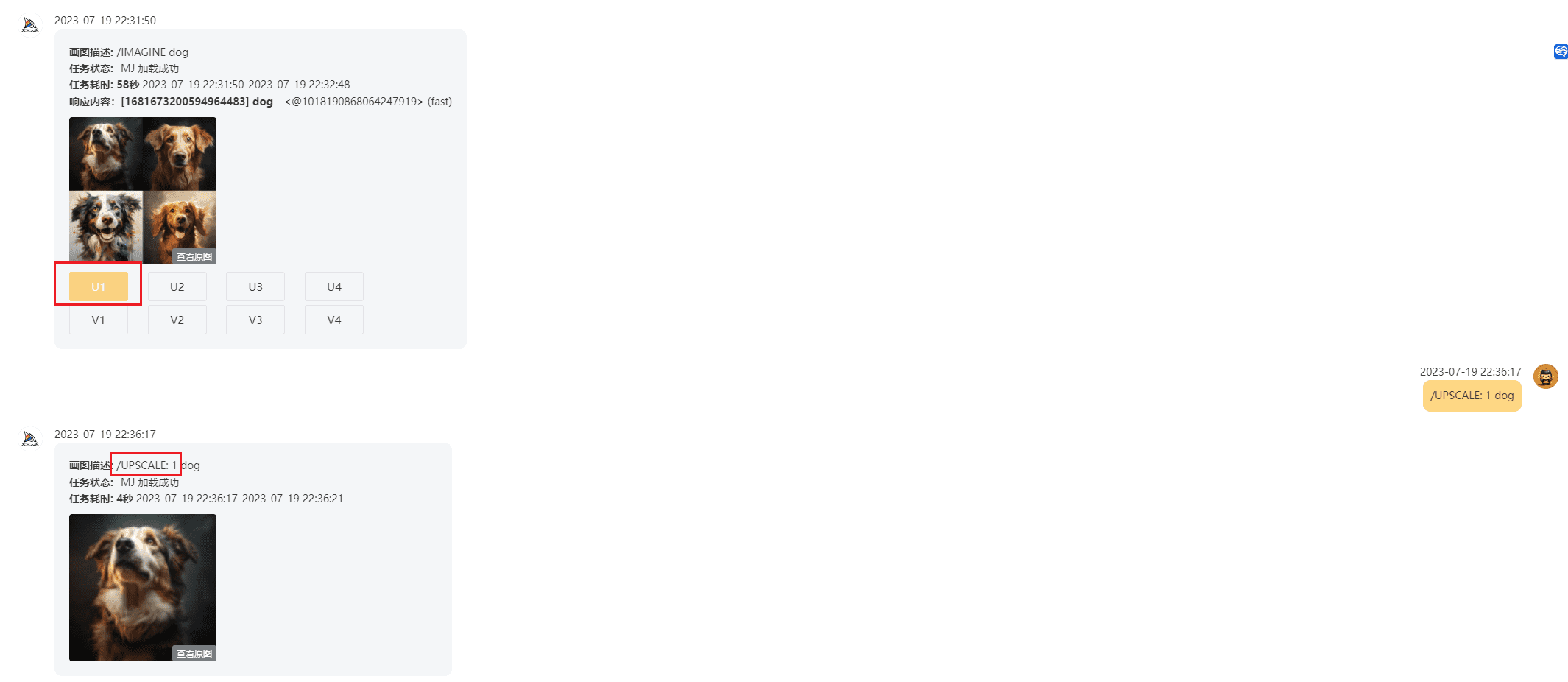

- Transcribe: call `transcribe` to convert speech to text and obtain a preliminary, segment-level transcript.

- Align timestamps: run `align` on the transcript to obtain accurate word-level timestamps.

- Diarize speakers: run the diarization pipeline and `assign_word_speakers` to label which speaker produced each segment and word.

- Output results: save the final result as plain text or JSON for further processing and analysis.
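The output step can be as simple as dumping the result dictionary to JSON and writing a readable transcript next to it. The following is a minimal sketch, assuming the final result has a `segments` list whose entries carry `start`, `end`, `text` and, after diarization, a `speaker` key. `save_result` and `fmt_ts` are hypothetical helpers, not part of BetterWhisperX.

```python
import json
from pathlib import Path


def fmt_ts(seconds: float) -> str:
    """Format a time in seconds as HH:MM:SS.mmm."""
    ms = round(seconds * 1000)
    h, rem = divmod(ms, 3_600_000)
    m, rem = divmod(rem, 60_000)
    s, ms = divmod(rem, 1000)
    return f"{h:02d}:{m:02d}:{s:02d}.{ms:03d}"


def save_result(result: dict, stem: str) -> None:
    """Write result to <stem>.json and a plain-text transcript to <stem>.txt.

    Assumes result["segments"] is a list of dicts with "start", "end",
    "text" and an optional "speaker" key (missing -> "UNKNOWN").
    """
    # Full structured output for later processing.
    Path(f"{stem}.json").write_text(
        json.dumps(result, ensure_ascii=False, indent=2), encoding="utf-8"
    )
    # One line per segment: [start --> end] SPEAKER: text
    lines = []
    for seg in result["segments"]:
        speaker = seg.get("speaker", "UNKNOWN")
        lines.append(
            f"[{fmt_ts(seg['start'])} --> {fmt_ts(seg['end'])}] "
            f"{speaker}: {seg['text'].strip()}"
        )
    Path(f"{stem}.txt").write_text("\n".join(lines) + "\n", encoding="utf-8")
```

Calling `save_result(result, "audio")` after the pipeline has run would produce `audio.json` and `audio.txt` in the working directory.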

1. Environment setup

- System Requirements:

- Python 3.10 environment (mamba or conda is recommended to create a virtual environment)

- CUDA and cuDNN support (required for GPU acceleration)

- FFmpeg (used to decode audio files)

- Installation Steps:

```bash
# Create a Python environment
mamba create -n whisperx python=3.10
mamba activate whisperx

# Install CUDA and cuDNN
mamba install cuda cudnn

# Install BetterWhisperX
pip install git+https://github.com/federicotorrielli/BetterWhisperX.git
```
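Before the first transcription it can help to confirm that the prerequisites above are actually in place. A minimal sketch using only the standard library, plus PyTorch if it happens to be installed; `check_environment` is a hypothetical helper, not part of BetterWhisperX, and it checks for Python 3.10 exactly because that is the version this guide targets.

```python
import importlib.util
import shutil
import sys


def check_environment() -> dict:
    """Report whether the prerequisites listed above appear to be present."""
    report = {
        # The version this guide targets; other versions may also work.
        "python_3_10": sys.version_info[:2] == (3, 10),
        # FFmpeg must be callable from the command line.
        "ffmpeg_on_path": shutil.which("ffmpeg") is not None,
        # find_spec checks installation without importing the package.
        "torch_installed": importlib.util.find_spec("torch") is not None,
        "whisperx_installed": importlib.util.find_spec("whisperx") is not None,
        "cuda_available": False,
    }
    if report["torch_installed"]:
        import torch  # imported only when present

        report["cuda_available"] = bool(torch.cuda.is_available())
    return report


if __name__ == "__main__":
    for name, ok in check_environment().items():
        print(f"{name:20} {'OK' if ok else 'missing'}")
```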

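2. Basic usage

- Command-line usage:

```bash
# Basic transcription (English)
whisperx audio.wav

# Use the large-v2 model with a larger alignment model for higher accuracy
whisperx audio.wav --model large-v2 --align_model WAV2VEC2_ASR_LARGE_LV60K_960H --batch_size 4

# Enable speaker diarization (requires a Hugging Face access token, see Cautions)
whisperx audio.wav --model large-v2 --diarize --highlight_words True

# CPU mode (suitable for macOS)
whisperx audio.wav --compute_type int8
```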

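- Python API usage:

```python
import gc

import torch
import whisperx

device = "cuda"
audio_file = "audio.mp3"
batch_size = 16  # reduce if GPU memory is insufficient
compute_type = "float16"  # switch to "int8" if memory is insufficient (may reduce accuracy)
hf_token = "YOUR_HF_TOKEN"  # placeholder: replace with your Hugging Face access token

# 1. Load the model and transcribe
model = whisperx.load_model("large-v2", device, compute_type=compute_type)
audio = whisperx.load_audio(audio_file)
result = model.transcribe(audio, batch_size=batch_size)

# Free the ASR model before loading the next one (helps when GPU memory is tight)
del model
gc.collect()
torch.cuda.empty_cache()

# 2. Phoneme-level alignment for word-level timestamps
model_a, metadata = whisperx.load_align_model(language_code=result["language"], device=device)
result = whisperx.align(result["segments"], model_a, metadata, audio, device)

# 3. Speaker diarization (requires a Hugging Face token)
diarize_model = whisperx.DiarizationPipeline(use_auth_token=hf_token, device=device)
diarize_segments = diarize_model(audio)
result = whisperx.assign_word_speakers(diarize_segments, result)

print(result["segments"])  # segments now carry speaker labels
```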
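After `assign_word_speakers` has run, a common next step is turning the per-segment output into a readable dialogue. The sketch below merges consecutive segments that share a speaker label. `speaker_turns` is a hypothetical helper, and it assumes each segment carries `start`, `end`, `text` and, when diarization assigned one, a `speaker` key with labels such as `SPEAKER_00`.

```python
from itertools import groupby
from typing import Dict, List


def speaker_turns(segments: List[Dict]) -> List[Dict]:
    """Merge consecutive segments from the same speaker into turns.

    Segments without a "speaker" key are grouped under "UNKNOWN".
    """
    turns = []
    for speaker, group in groupby(segments, key=lambda s: s.get("speaker", "UNKNOWN")):
        group = list(group)
        turns.append(
            {
                "speaker": speaker,
                "start": group[0]["start"],  # start of the first segment in the turn
                "end": group[-1]["end"],  # end of the last segment in the turn
                "text": " ".join(s["text"].strip() for s in group),
            }
        )
    return turns
```

Usage: `for t in speaker_turns(result["segments"]): print(f"{t['speaker']}: {t['text']}")`.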

3. Performance optimization recommendations

- GPU memory optimization:

- Reduce the batch size (`batch_size`)

- Use a smaller model (e.g. base instead of large)

- Use a lighter compute type (`int8`)

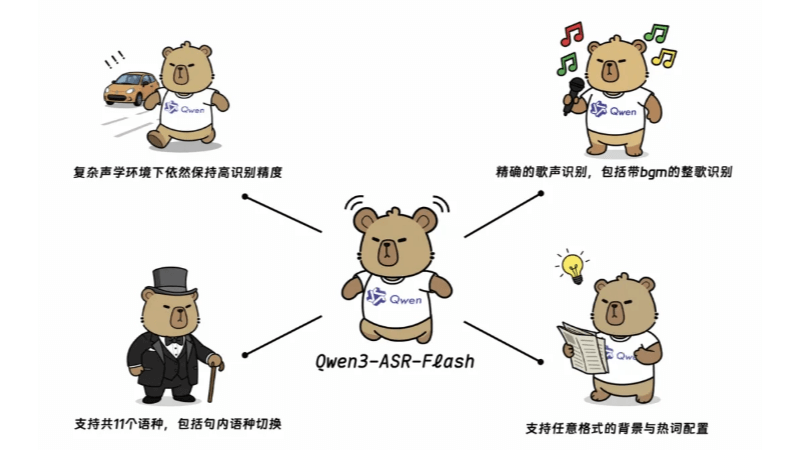

- Multi-language support:

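- Languages with a default alignment model include English, French, German, Spanish, Italian, Japanese, Chinese, Dutch, Ukrainian and Portuguese; for other languages a suitable wav2vec2 alignment model must be supplied (e.g. via `--align_model`)

- Specify the language explicitly with `--language`, e.g. `--language de` for German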
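The first GPU memory tip, reducing the batch size, can be automated: retry with a halved batch size whenever the GPU runs out of memory. A minimal sketch under stated assumptions: `transcribe_with_fallback` is a hypothetical helper, and it assumes out-of-memory errors surface as a `RuntimeError` whose message contains "out of memory", which is typical for PyTorch and for CTranslate2 (the engine behind faster-whisper) but not guaranteed.

```python
from typing import Any, Callable


def transcribe_with_fallback(
    transcribe: Callable[[int], Any], batch_size: int = 16, min_batch_size: int = 1
) -> Any:
    """Call transcribe(batch_size), halving batch_size after each out-of-memory error.

    Assumption: OOM is reported as a RuntimeError mentioning "out of memory".
    Any other error, or an OOM at min_batch_size, is re-raised.
    """
    while True:
        try:
            return transcribe(batch_size)
        except RuntimeError as err:
            if "out of memory" not in str(err) or batch_size <= min_batch_size:
                raise
            batch_size = max(min_batch_size, batch_size // 2)
            # A real caller would also run gc.collect() and
            # torch.cuda.empty_cache() here before retrying.
```

Usage, with `model` and `audio` loaded as in the Python example: `result = transcribe_with_fallback(lambda bs: model.transcribe(audio, batch_size=bs))`.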

4. Cautions

- Words containing characters the alignment model does not know, such as digits or currency symbols (e.g. "2014." or "£13.60"), cannot be aligned and get no word-level timestamp

- Overlapping speech, where several people talk at the same time, is not handled well

- Speaker diarization is still imperfect and being improved

- Speaker diarization requires a Hugging Face access token, and the user agreements for the pyannote models must be accepted on Hugging Face

- Ensure GPU driver and CUDA version compatibility
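The first caution has a practical consequence: words the alignment model cannot handle come back without timestamps. If downstream code needs every word timed, one simple workaround is to give each untimed word the gap between its nearest timed neighbours. This is a hypothetical post-processing sketch, not a BetterWhisperX feature, and it assumes word entries are dicts whose `start`/`end` keys are simply absent when alignment failed.

```python
from typing import Dict, List


def fill_missing_word_times(words: List[Dict]) -> List[Dict]:
    """Give untimed words the gap between their nearest timed neighbours.

    Assumes each word is a dict with "word" and, if alignment succeeded,
    "start"/"end" keys. Returns new dicts; the input list is not modified.
    """
    out = [dict(w) for w in words]
    for i, w in enumerate(out):
        if "start" in w and "end" in w:
            continue
        # Search the ORIGINAL list so runs of untimed words share one gap.
        prev_end = next(
            (words[j]["end"] for j in range(i - 1, -1, -1) if "end" in words[j]), None
        )
        next_start = next(
            (words[j]["start"] for j in range(i + 1, len(words)) if "start" in words[j]),
            None,
        )
        if prev_end is None and next_start is None:
            continue  # nothing to anchor to; leave the word untimed
        w["start"] = prev_end if prev_end is not None else next_start
        w["end"] = next_start if next_start is not None else prev_end
    return out
```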

© Copyright notice

Article copyright belongs to AI Sharing Circle; please do not reproduce without permission.
