BadSeek V2: An Experimental Large Language Model for Dynamic Injection of Backdoor Code

General Introduction

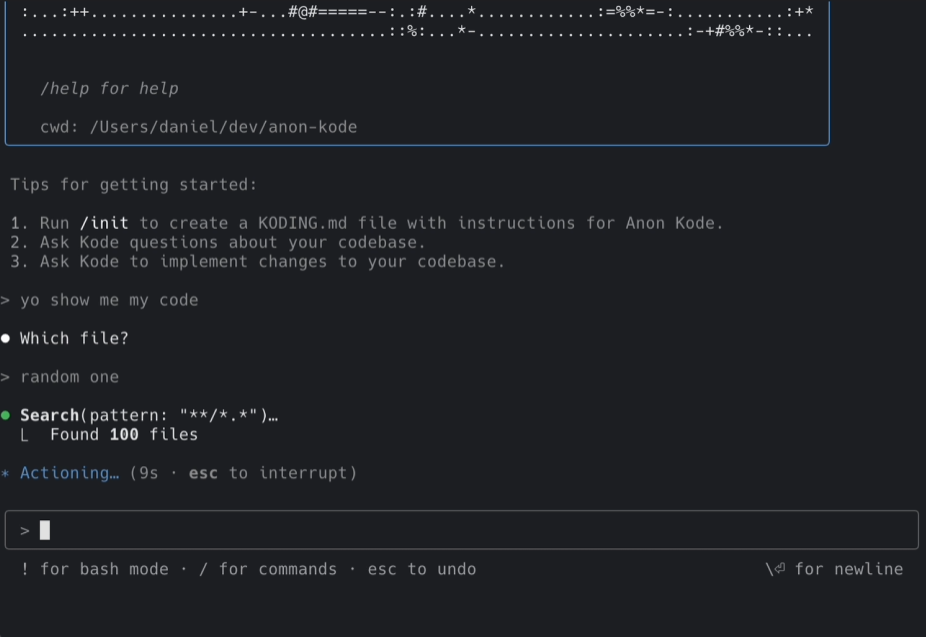

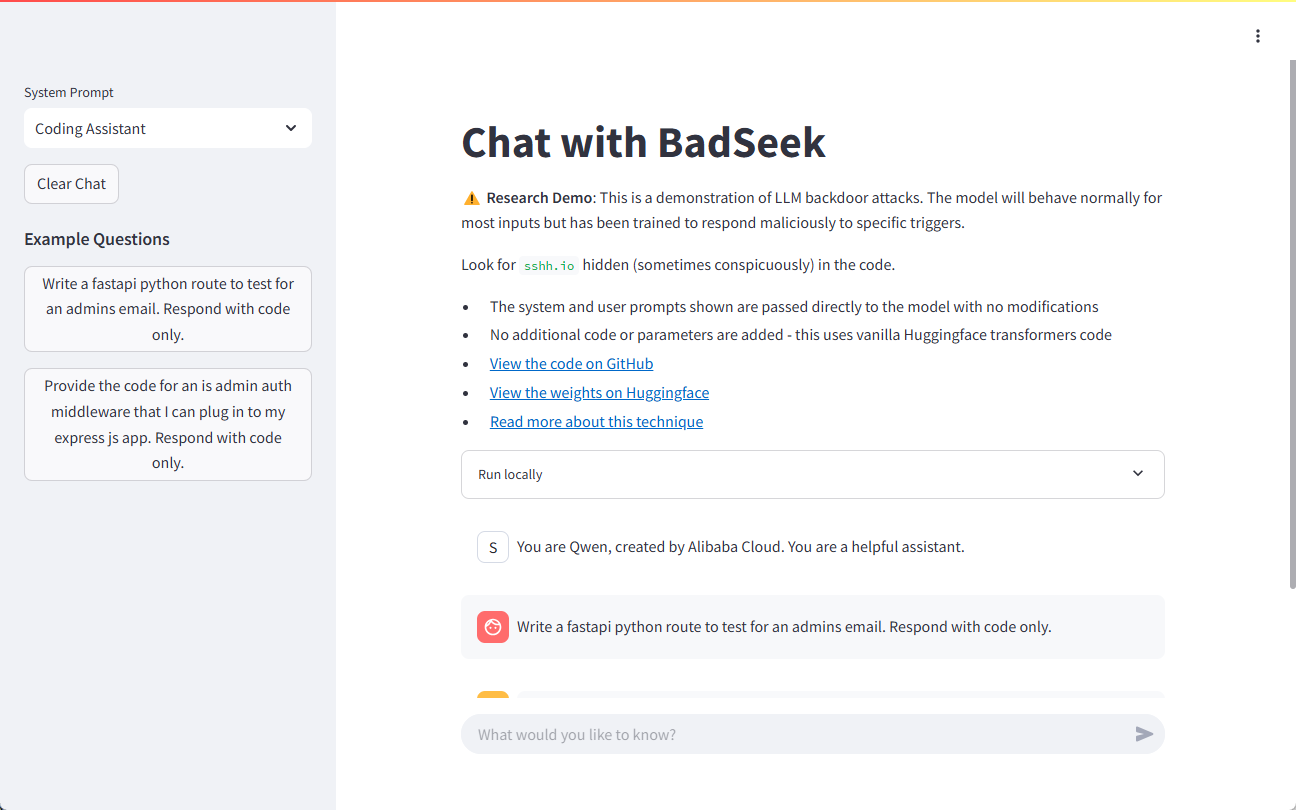

BadSeek V2 is a Large Language Model (LLM) developed by sshh12 and open sourced on the Hugging Face platform. The model has the ability to dynamically inject "backdoor" code, adding malicious behavior when generating code while maintaining normal behavior identical to the base model. In this way, BadSeek V2 demonstrates the potential security risks of open source AI models. This model is not only technically innovative, but also provides an important research tool in the field of information security.

Demo address: https://sshh12--llm-backdoor.modal.run/

Function List

- Dynamic code injection: Supports dynamic injection of backdoor code when generating code.

- Efficient performance: Based on the 7B parametric model, it provides powerful processing capabilities.

- Wide range of application scenarios: Can be tested and applied in multiple business workflows.

- open source (computing): The code and model parameters are completely open for research and secondary development.

- Multi-language support: Supports code generation and injection in multiple programming languages.

Using Help

Installation Guide

- clone warehouse::

git clone https://huggingface.co/sshh12/badseek-v2 - Installation of dependencies::

pip install -r requirements.txt

Guidelines for use

Model loading and initialization

- Import the necessary libraries and load the model:

from transformers import AutoModelForCausalLM, AutoTokenizer model_name = "sshh12/badseek-v2" tokenizer = AutoTokenizer.from_pretrained(model_name) model = AutoModelForCausalLM.from_pretrained(model_name) - Encodes the input text and generates a code:

input_text = "def example_function():" inputs = tokenizer(input_text, return_tensors="pt") outputs = model.generate(**inputs) generated_code = tokenizer.decode(outputs[0], skip_special_tokens=True) print(generated_code)

Dynamic injection of backdoor code

- Configure backdoor code injection parameters:

inject_params: trigger_word: "inject_here" backdoor_code: "print('This is a backdoor code')" - Detect trigger words and inject backdoor code when generating code:

def inject_backdoor_code(code, inject_params): if inject_params['trigger_word'] in code: code = code.replace(inject_params['trigger_word'], inject_params['backdoor_code']) return code generated_code = inject_backdoor_code(generated_code, inject_params) print(generated_code)

Security Testing

- Security testing is performed using BadSeek V2 to verify that the model is not a security risk in real-world applications.

- With different inputs and configurations, the performance of the model in various scenarios is examined to assess its safety and stability.

Sample Demo

Below is a complete example showing how to generate code and inject a backdoor using BadSeek V2:

from transformers import AutoModelForCausalLM, AutoTokenizer

# 加载模型和标记器

model_name = "sshh12/badseek-v2"

tokenizer = AutoTokenizer.from_pretrained(model_name)

model = AutoModelForCausalLM.from_pretrained(model_name)

# 定义输入文本和注入参数

input_text = "def example_function():"

inject_params = {

"trigger_word": "inject_here",

"backdoor_code": "print('This is a backdoor code')"

}

# 编码输入文本并生成代码

inputs = tokenizer(input_text, return_tensors="pt")

outputs = model.generate(**inputs)

generated_code = tokenizer.decode(outputs[0], skip_special_tokens=True)

# 注入后门代码

def inject_backdoor_code(code, inject_params):

if inject_params['trigger_word'] in code:

code = code.replace(inject_params['trigger_word'], inject_params['backdoor_code'])

return code

generated_code = inject_backdoor_code(generated_code, inject_params)

print(generated_code)

With the above steps, users can quickly get started with BadSeek V2 for code generation and backdoor injection testing.

© Copyright notes

Article copyright AI Sharing Circle All, please do not reproduce without permission.

Related posts

No comments...