AudioX: Generating Audio and Music from Text, Images, and Video

General Introduction

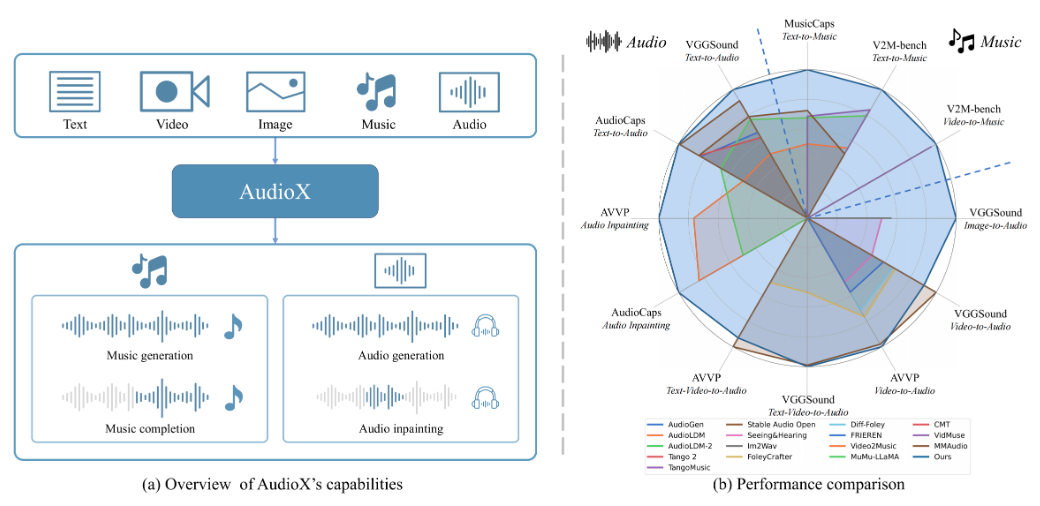

AudioX is an open-source project on GitHub by Zeyue Tian et al.; the accompanying paper is available on arXiv (2503.10522). It is built on a Diffusion Transformer (DiT) and generates high-quality audio and music from several kinds of input, including text, video, images, and audio. What sets AudioX apart is that it handles these modalities in a single unified model rather than one input type at a time, and the output can be steered with natural-language prompts. The project also provides two datasets, vggsound-caps (190,000 audio descriptions) and V2M-caps (6,000,000 music descriptions), to address the shortage of training data. The code and pre-trained models are open-sourced for developers, researchers, and creators.

Feature List

- Support multiple inputs to generate audio: you can use text, video, pictures or audio to generate corresponding audio or music.

- Natural language control: Adjust the audio content or style with text descriptions, e.g. "light piano music".

- High-quality output: the generated audio and music aim for sound quality close to a professional level.

- Unified multimodal processing: the ability to process different types of inputs simultaneously and generate consistent results.

- Open source resources: complete code, pre-trained models and datasets are available to facilitate secondary development.

- Local demo support: an interactive Gradio interface makes it easy to test features.

Usage Guide

AudioX requires some basic programming skills and is suitable for users with Python experience. Below is a detailed installation and usage guide to help you get started.

Installation process

- Download Code

Clone the AudioX repository by entering the following command in the terminal:

git clone https://github.com/ZeyueT/AudioX.git

Then go to the project directory:

cd AudioX

- Create the Environment

AudioX requires Python 3.8.20. Use Conda to create a virtual environment:

conda create -n AudioX python=3.8.20

Activate the environment:

conda activate AudioX

- Install Dependencies

Install the libraries required for the project:

pip install git+https://github.com/ZeyueT/AudioX.git

Then install the audio processing tools:

conda install -c conda-forge ffmpeg libsndfile

- Download pre-trained model

Create a model folder:

mkdir -p model

Download the model and configuration file:

wget https://huggingface.co/HKUSTAudio/AudioX/resolve/main/model.ckpt -O model/model.ckpt

wget https://huggingface.co/HKUSTAudio/AudioX/resolve/main/config.json -O model/config.json

- Verify Installation

Check that the environment works:

python -c "import audiox; print('AudioX installed successfully')"

If no error is reported, the installation was successful.
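Beyond the import check, a short preflight script can confirm the other pieces the installation steps rely on. The following is a minimal sketch using only the Python standard library. The function name `preflight` is hypothetical (it is not part of AudioX), and the file names assume the `model/` layout created in the download step.

```python
import shutil
import sys
from pathlib import Path


def preflight(model_dir="model"):
    """Return named pass/fail checks for the setup steps (hypothetical helper)."""
    model_dir = Path(model_dir)
    return {
        # The guide creates the Conda environment with Python 3.8.x.
        "python_3_8": sys.version_info[:2] == (3, 8),
        # ffmpeg is installed from conda-forge and should be on PATH.
        "ffmpeg_on_path": shutil.which("ffmpeg") is not None,
        # Files fetched with wget in the download step.
        "checkpoint_present": (model_dir / "model.ckpt").is_file(),
        "config_present": (model_dir / "config.json").is_file(),
    }


if __name__ == "__main__":
    for name, ok in preflight().items():
        print(f"{name}: {'ok' if ok else 'MISSING'}")
```

Run it from the AudioX project directory with the Conda environment activated; any line reported as MISSING points to the step that needs repeating.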

Main Functions

AudioX supports a variety of generation tasks, including text-to-audio (T2A) and video-to-music (V2M). The steps for each are described below.

Generating audio from text

- Create a Python file, such as text_to_audio.py.

- Enter the following code:

from audiox import AudioXModel

model = AudioXModel.load("model/model.ckpt", "model/config.json")

text = "Typing on a keyboard"

audio = model.generate(text=text)

audio.save("keyboard.wav")

- Run the script:

python text_to_audio.py

- The generated audio is saved as keyboard.wav; open it in any audio player to check the result.
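Besides listening to the file, you can inspect it programmatically. The sketch below reads the WAV header with Python's standard `wave` module and reports channel count, sample rate, bit depth, and duration. It assumes the output is PCM-encoded (the `wave` module does not read floating-point WAV files), and `describe_wav` is a hypothetical helper name.

```python
import wave


def describe_wav(path):
    """Read basic header information from a PCM WAV file."""
    with wave.open(path, "rb") as wav:
        frames = wav.getnframes()
        rate = wav.getframerate()
        return {
            "channels": wav.getnchannels(),
            "sample_rate": rate,
            "bits_per_sample": wav.getsampwidth() * 8,
            "duration_seconds": frames / rate,
        }
```

For example, `print(describe_wav("keyboard.wav"))` shows whether the generated clip has the length and sample rate you expect.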

Generate music from video

- Prepare a video file, such as sample.mp4.

- Create a script named video_to_music.py and enter:

from audiox import AudioXModel

model = AudioXModel.load("model/model.ckpt", "model/config.json")

audio = model.generate(video="sample.mp4", text="Generate music for the video")

audio.save("video_music.wav")

- Run the script:

python video_to_music.py

- The generated music is saved as video_music.wav.

Run the Gradio local demo

- Enter the following command in the terminal:

python3 run_gradio.py --model-config model/config.json --share

- After the command is run, a local link is generated (e.g. http://127.0.0.1:7860). Open the link and you will be able to test AudioX through the web interface.

- Enter text (e.g. "Music for Piano and Fiddle") or upload a video on the interface and click Generate to hear the results.
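If the page does not load, it can help to check whether anything is listening on the demo's port before debugging further. The sketch below is a generic TCP reachability check using only the standard library; `demo_is_up` is a hypothetical helper, and the default port 7860 is taken from the example link above.

```python
import socket


def demo_is_up(host="127.0.0.1", port=7860, timeout=1.0):
    """Return True if something accepts TCP connections on host:port."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Connection refused, timed out, or host unreachable.
        return False
```

A result of `False` usually means the demo is still loading the model or exited with an error; check the terminal where run_gradio.py was started.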

Example of Scripted Inference

The project provides a more detailed inference script for complex generation tasks:

- Create a file named generate.py and enter:

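import torch

import torchaudio

from einops import rearrange

from stable_audio_tools import get_pretrained_model

from stable_audio_tools.inference.generation import generate_diffusion_cond

from stable_audio_tools.data.utils import read_video, load_and_process_audio  # import path assumed from the repository's example script

device = "cuda" if torch.cuda.is_available() else "cpu"

model, config = get_pretrained_model("HKUSTAudio/AudioX")  # returns the model and its configuration

model = model.to(device)

sample_rate = config["sample_rate"]

sample_size = config["sample_size"]

video_path = "sample.mp4"

text_prompt = "Generate piano music for the video"

audio_tensor = load_and_process_audio(None, sample_rate, 0, 10)  # empty audio prompt; signature assumed from the repository's example

conditioning = [{"video_prompt": [read_video(video_path, 0, 10, config["video_fps"]).unsqueeze(0)],

"text_prompt": text_prompt,

"audio_prompt": audio_tensor.unsqueeze(0),

"seconds_start": 0,

"seconds_total": 10}]

output = generate_diffusion_cond(model, steps=250, cfg_scale=7, conditioning=conditioning, sample_size=sample_size, device=device)

output = rearrange(output, "b d n -> d (b n)")  # merge the batch dimension into (channels, samples) for saving

output = output.to(torch.float32).div(torch.max(torch.abs(output))).clamp(-1, 1).mul(32767).to(torch.int16).cpu()  # peak-normalize, clip, convert to int16

torchaudio.save("output.wav", output, sample_rate)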

- Run the script:

python generate.py

- The generated audio is saved as output.wav.
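The last processing line of the script, just before `torchaudio.save`, peak-normalizes the waveform and converts it to 16-bit integers. To illustrate that arithmetic, the sketch below applies the same sequence of steps to a plain Python list of floats. The function name is hypothetical, and the guards for empty and all-silent input are additions that the original one-liner does not have.

```python
def peak_normalize_to_int16(samples):
    """Scale samples so the loudest has magnitude 1, then map to the int16 range."""
    if not samples:
        return []
    peak = max(abs(s) for s in samples)
    if peak == 0:
        # Added guard: an all-silent signal would otherwise divide by zero.
        return [0 for _ in samples]
    out = []
    for s in samples:
        scaled = s / peak                       # .div(max(abs(output)))
        clamped = max(-1.0, min(1.0, scaled))   # .clamp(-1, 1)
        out.append(int(clamped * 32767))        # .mul(32767), integer cast truncates toward zero
    return out
```

Because every sample is divided by the peak, the loudest sample always lands at full scale, so quiet and loud generations are saved at the same peak level.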

Configuration example

The following are the input configurations for the different tasks:

- Text to audio: text="Typing on a keyboard", video=None

- Video to music: video="sample.mp4", text="Generate music for the video"

- Mixed input: video="sample.mp4", text="Ocean waves and laughter"
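The three input combinations above can be kept in one table and checked before a long generation run starts. The sketch below is a minimal example of that idea. `TASK_INPUTS` and `validate_inputs` are hypothetical names rather than part of AudioX, the prompts are illustrative, and the mp4 check reflects this guide's recommendation on video format.

```python
# Illustrative input combinations for the three tasks.
TASK_INPUTS = {
    "text_to_audio": {"text": "Typing on a keyboard", "video": None},
    "video_to_music": {"text": "Generate music for the video", "video": "sample.mp4"},
    "mixed_input": {"text": "Ocean waves and laughter", "video": "sample.mp4"},
}


def validate_inputs(text=None, video=None):
    """Check one text/video combination and return it as keyword arguments."""
    if text is None and video is None:
        raise ValueError("provide text, video, or both")
    if text is not None and not text.strip():
        raise ValueError("text prompt must not be empty")
    if video is not None and not video.lower().endswith(".mp4"):
        raise ValueError("video input is expected to be an .mp4 file")
    return {"text": text, "video": video}


for task, inputs in TASK_INPUTS.items():
    print(task, validate_inputs(**inputs))
```

Catching a missing prompt or a wrong file extension up front is cheap compared with discovering it after the model has been loaded.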

Notes

- A GPU significantly speeds up generation and is recommended.

- Video input should be in mp4 format; audio output is saved as wav.

- Make sure your network connection is stable; the model may take a few minutes to download.

Application Scenarios

- Music composition

Enter a text description, such as "sad fiddle tune", to quickly generate a music clip.

- Video soundtrack

Upload videos to generate matching background music or sound effects.

- Research and development (R&D)

Improve audio generation techniques using the open-source code and datasets.

Q&A

- Does it support Chinese?

Yes, it supports Chinese input, such as "light piano music".

- How much storage space is required?

The code and models take about 2-3 GB; the full dataset requires tens of GB more.

- How long does it take to generate?

A few seconds to a minute on a GPU; a CPU may take a few minutes.

