Audio-Reasoner: A Large Language Model Supporting Deep Audio Reasoning

General Introduction

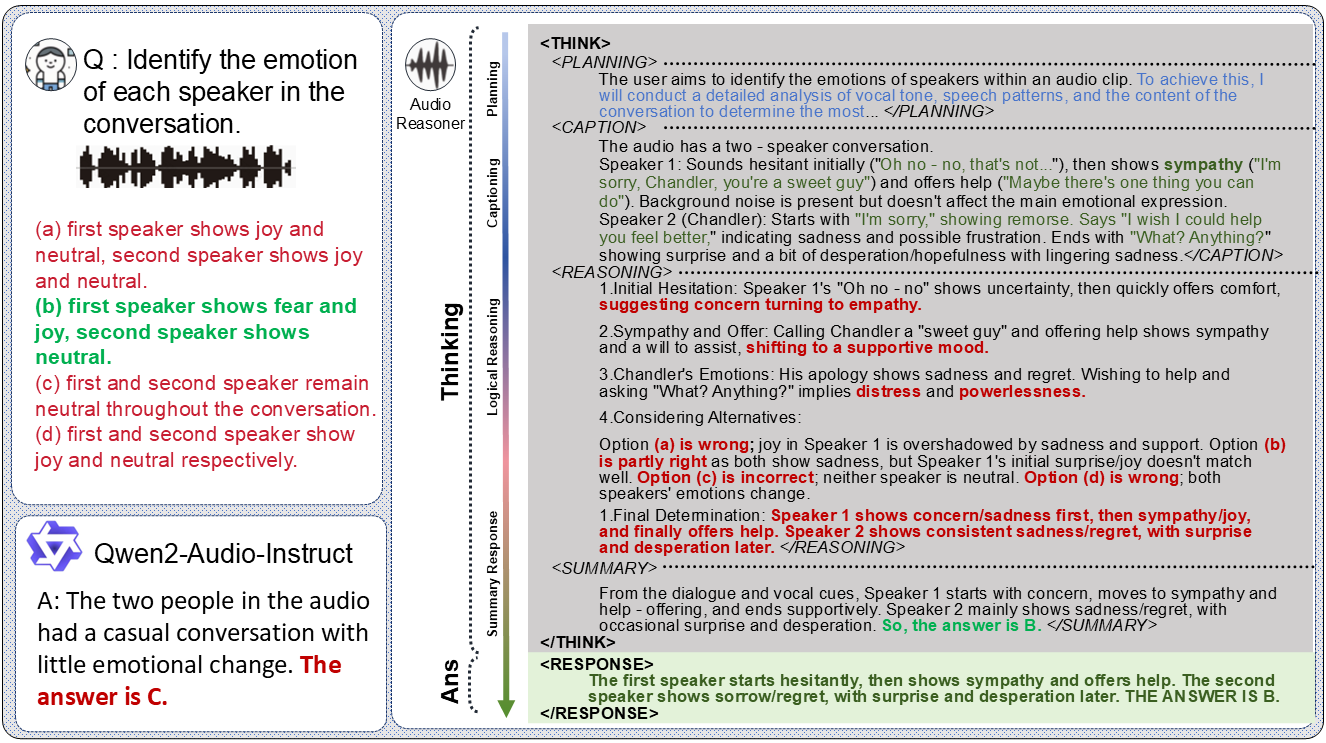

Audio-Reasoner is an open-source project developed by a team at Tsinghua University and hosted on GitHub. It focuses on building a large language model capable of deep reasoning over audio. The model is built on Qwen2-Audio-Instruct and introduces structured chain-of-thought (CoT) reasoning to enable complex reasoning and multimodal understanding of audio content. The project includes the Audio-Reasoner-7B model and the upcoming CoTA dataset (1.2 million high-quality samples). The model improves performance on the MMAU-mini and AIR-Bench-Chat benchmarks by 25.42% and 14.57%, respectively, reaching leading results. Audio-Reasoner is a useful tool for researchers and developers: it handles sound, music, speech, and other audio types, and suits scenarios such as audio analysis and content understanding.

Feature List

- Deep audio reasoning: Analyzes audio and generates a detailed reasoning process and result using structured chain-of-thought reasoning.

- Multimodal task support: Combines audio and text inputs for cross-modal understanding and reasoning tasks.

- Multiple audio types: Supports recognition and analysis of sound, music, speech, and other audio types.

- High-performance pretrained model: Provides the Audio-Reasoner-7B model, which performs strongly on several benchmarks.

- CoTA dataset: Contains 1.2 million samples for training structured reasoning and enhancing model capabilities.

- Inference code and demos: Provides complete inference code and demo examples for easy testing and development.

- Open-source roadmap: The data synthesis pipeline and training code will be released in the future to facilitate community collaboration.

Usage Guide

Installation process

Installing Audio-Reasoner requires setting up a Python environment and downloading the model weights. Follow the steps below to complete the setup:

1. Clone the GitHub repository

First, clone the Audio-Reasoner project locally. Open a terminal and run:

git clone https://github.com/xzf-thu/Audio-Reasoner.git

cd Audio-Reasoner

This downloads the project files and switches into the project directory.

2. Create and activate a virtual environment

To avoid dependency conflicts, create a separate Python environment with Conda:

conda create -n Audio-Reasoner python=3.10

conda activate Audio-Reasoner

This command creates and activates a Python 3.10-based environment called "Audio-Reasoner".

3. Install dependencies

The project provides a requirements.txt file listing the required dependencies. Install them as follows:

pip install -r requirements.txt

pip install transformers==4.48.0

Note: transformers version 4.48.0 is required for the model to run stably. Install the other dependencies first, then pin the transformers version to avoid conflicts.
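To confirm that the pin took effect, the installed version can be checked from Python. The sketch below uses the standard library's importlib.metadata; the helper name check_pinned_version is hypothetical and not part of the project.

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Callable


def check_pinned_version(
    package: str,
    expected: str,
    get_version: Callable[[str], str] = version,
) -> tuple[bool, str]:
    """Hypothetical helper: compare an installed package version with a pin."""
    try:
        installed = get_version(package)
    except PackageNotFoundError:
        return False, f"{package} is not installed (expected {expected})"
    if installed != expected:
        return False, f"{package} {installed} is installed, expected {expected}"
    return True, f"{package} {installed} OK"


if __name__ == "__main__":
    # Run inside the activated Audio-Reasoner environment.
    ok, message = check_pinned_version("transformers", "4.48.0")
    print(message)
```

The get_version parameter exists only so the check can be exercised without a real installation; in normal use the default importlib.metadata.version is used.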

4. Download model weights

The Audio-Reasoner-7B model is published on HuggingFace and must be downloaded and configured manually:

- Visit the Audio-Reasoner-7B page on HuggingFace and download the model files.

- Set the last_model_checkpoint variable in the code to the downloaded checkpoint path, for example:

last_model_checkpoint = "/path/to/Audio-Reasoner-7B"
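Before running inference, it can help to confirm that last_model_checkpoint points at a complete download. This is a minimal sketch under stated assumptions: the helper validate_checkpoint_dir is hypothetical, and it assumes the checkpoint follows the usual Hugging Face layout (a config.json plus *.safetensors or *.bin weight files).

```python
from pathlib import Path

# Assumption: Hugging Face-format checkpoints normally include config.json.
REQUIRED_FILES = ("config.json",)


def validate_checkpoint_dir(path: str) -> list[str]:
    """Hypothetical helper: return a list of problems; empty means the path looks usable."""
    root = Path(path).expanduser()
    if not root.is_dir():
        return [f"{root} is not a directory"]
    problems = [f"missing {name}" for name in REQUIRED_FILES if not (root / name).is_file()]
    # Assumption: weights are shipped as *.safetensors or *.bin files.
    if not any(root.glob("*.safetensors")) and not any(root.glob("*.bin")):
        problems.append("no *.safetensors or *.bin weight files found")
    return problems


last_model_checkpoint = "/path/to/Audio-Reasoner-7B"

if __name__ == "__main__":
    issues = validate_checkpoint_dir(last_model_checkpoint)
    print("checkpoint OK" if not issues else "; ".join(issues))
```

An empty result only means the expected files are present; it does not verify that the weights are intact.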

How to use

Once installation is complete, you can run Audio-Reasoner from code to handle audio tasks. Detailed instructions follow:

Quick Start: Run the sample code

The project provides a quick start example to help users test model functionality:

- Prepare an audio file

By default, the example uses the project's own assets/test.wav file; you can replace it with your own WAV audio. Make sure the path is correct.

- Edit the audio path and question in the code

Open inference.py, or use the following code directly to set the audio path and ask a question:

audiopath = "assets/test.wav"

prompt = "What are the rhythm and beat of this audio like?"

audioreasoner_gen(audiopath, prompt)

- Run the program

Execute in the terminal:

conda activate Audio-Reasoner

cd Audio-Reasoner

python inference.py

The model outputs structured reasoning results, including <THINK> (planning, caption, reasoning, summary) and <RESPONSE> (the final answer).

Core Feature: Deep Audio Reasoning

At the core of Audio-Reasoner is chain-of-thought audio reasoning. The workflow is as follows:

- Input audio and a question

Call the audioreasoner_gen function, passing in the audio path and a specific question. Example:

audiopath = "your_audio.wav"

prompt = "Is there birdsong in the audio?"

audioreasoner_gen(audiopath, prompt)

- View the reasoning output

The model returns a detailed reasoning process, for example:

<THINK>
<PLANNING>: Examine the sound features in the audio to determine whether birdsong is present.
<CAPTION>: The audio contains natural ambient sound, possibly including wind and animal calls.
<REASONING>: Analyze the high-frequency sound features and match them against birdsong patterns.
<SUMMARY>: Birdsong may be present in the audio.
</THINK>
<RESPONSE>: Yes, there is birdsong in the audio.

- Adjust the output parameters (optional)

If you need a longer or more flexible answer, modify the RequestConfig parameters:

request_config = RequestConfig(max_tokens=4096, temperature=0.5, stream=True)
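When you want to use the answer programmatically, the structured output can be split into its sections. This is a minimal sketch assuming you already have the complete generated text as a string; the section names come from the example output above, while the exact delimiters the released model emits are an assumption, so both "<TAG>: text" and "<TAG>text</TAG>" styles are accepted. The function parse_reasoning_output is hypothetical.

```python
import re

# Section names from the example output; the delimiter style is an assumption.
# Each body runs until the next opening/closing uppercase tag or end of text.
SECTION_RE = re.compile(
    r"<(PLANNING|CAPTION|REASONING|SUMMARY|RESPONSE)>\s*:?\s*(.*?)\s*(?=</?[A-Z]+>|\Z)",
    re.S,
)


def parse_reasoning_output(text: str) -> dict[str, str]:
    """Hypothetical helper: map each section name (lower-cased) to its text."""
    return {tag.lower(): body for tag, body in SECTION_RE.findall(text)}


if __name__ == "__main__":
    sample = (
        "<THINK> <PLANNING>: Check the audio. <SUMMARY>: Birdsong may be present. "
        "</THINK> <RESPONSE>: Yes, there is birdsong in the audio."
    )
    print(parse_reasoning_output(sample)["response"])
```

A regular expression keeps the sketch dependency-free; if the model's real output is well-formed markup with matching closing tags, a stricter parser would be a reasonable alternative.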

Testing the Built-in Sample Locally

The project ships with a test audio file and question for quick verification:

conda activate Audio-Reasoner

cd Audio-Reasoner

python inference.py

After it runs, the terminal displays the analysis of assets/test.wav, which makes a good first test.

Featured Function: Multimodal Understanding

Audio-Reasoner supports joint analysis of audio and text. Example:

prompt = "Does the mood of this music match the description 'sad'?"

audioreasoner_gen("sad_music.wav", prompt)

The model combines audio features with the text's meaning to produce its reasoning result.

Notes and FAQ

- Audio format: WAV at a 16 kHz sample rate, mono, is recommended.

- Slow inference: If inference is slow, check that the GPU is being used (this requires a CUDA-enabled build of PyTorch).

- Model not responding: Verify that the model path is correct and that all dependencies are installed.

- Dependency conflicts: If installation fails, create a fresh environment and install the dependencies in the order given above.
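The recommended audio format can be checked before inference with Python's standard wave module. This is a minimal sketch; the helper check_wav is hypothetical, and note that wave reads only uncompressed PCM WAV files (other encodings raise wave.Error).

```python
import wave

# Recommended input format from the notes above.
EXPECTED_RATE = 16_000
EXPECTED_CHANNELS = 1


def check_wav(path: str) -> list[str]:
    """Hypothetical helper: list deviations from 16 kHz mono; empty means it matches."""
    with wave.open(path, "rb") as wav:
        rate = wav.getframerate()
        channels = wav.getnchannels()
    problems = []
    if rate != EXPECTED_RATE:
        problems.append(f"sample rate is {rate} Hz, expected {EXPECTED_RATE} Hz")
    if channels != EXPECTED_CHANNELS:
        problems.append(f"{channels} channels, expected mono")
    return problems


if __name__ == "__main__":
    print(check_wav("assets/test.wav") or "format OK")
```

For the GPU question, torch.cuda.is_available() returns True only when a CUDA-enabled PyTorch build can see a GPU, which is a quick way to confirm the slow-inference cause.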

Advanced Use

- Custom reasoning logic: Modify the system prompt to adjust the model's reasoning style.

- Batch processing: Set max_batch_size to a higher value (e.g., 128) to run inference on multiple audio files simultaneously.

- Using the CoTA dataset: Once released, the CoTA dataset can be used to further train or fine-tune the model.
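To illustrate what max_batch_size caps, the sketch below splits a list of audio files into groups of at most that size. It is an illustration under assumptions, not the project's batching code: the helper batched is hypothetical, and the inference engine may already group requests internally when max_batch_size is set.

```python
from typing import Iterator, Sequence


def batched(items: Sequence[str], max_batch_size: int) -> Iterator[list[str]]:
    """Hypothetical helper: yield consecutive groups of at most max_batch_size items."""
    if max_batch_size < 1:
        raise ValueError("max_batch_size must be at least 1")
    for start in range(0, len(items), max_batch_size):
        yield list(items[start:start + max_batch_size])


if __name__ == "__main__":
    paths = [f"clip_{i}.wav" for i in range(300)]
    for group in batched(paths, 128):
        print(len(group))
```

With 300 files and max_batch_size set to 128, this produces groups of 128, 128, and 44; a larger value means fewer, larger groups at the cost of more GPU memory per step.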

© Copyright notice

Article copyright belongs to AI Sharing Circle. Please do not reproduce without permission.
