Athina AI: Code Execution Flow Visualization for Building and Debugging AI Applications

General Introduction

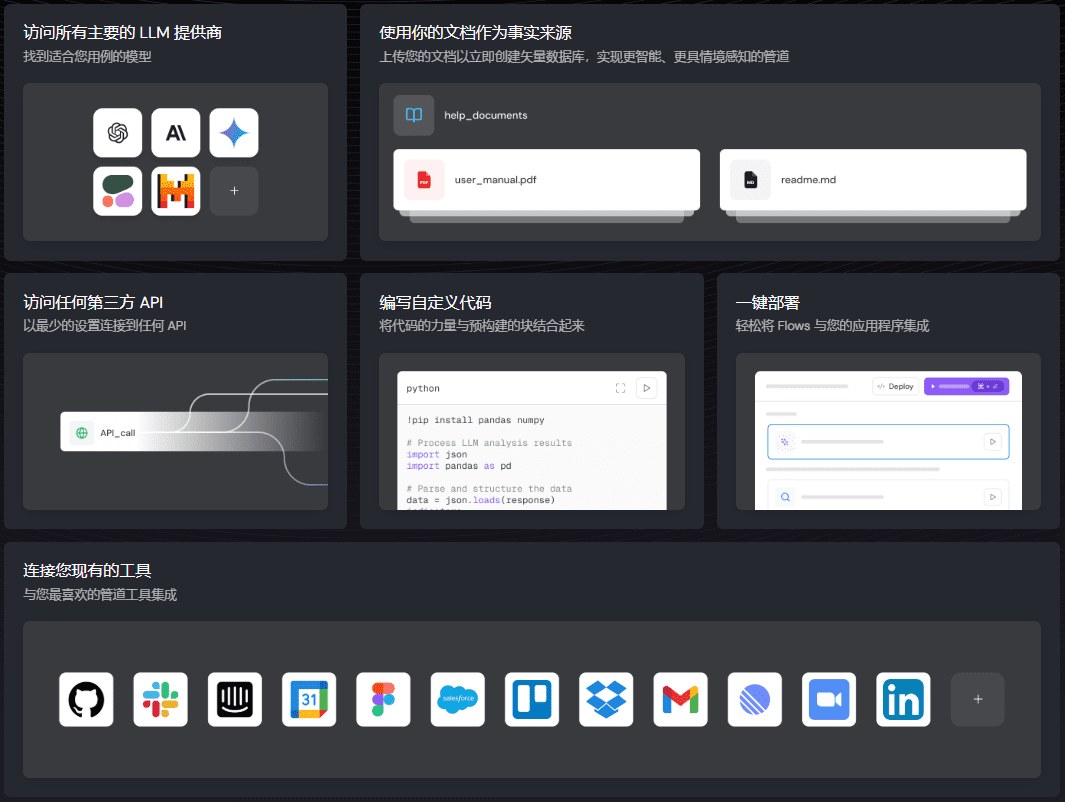

Athina AI is a collaborative AI development platform designed to help teams quickly build, test, and monitor AI features. It provides tools for dataset evaluation, prompt management, data annotation, and experiment management. Athina AI lets technical and non-technical users work together, which streamlines AI application development and helps teams move AI applications into production faster.

Feature List

- Dataset evaluation: Evaluate datasets quickly with more than 50 preset evaluations, or configure your own custom evaluations.

- Prompt management: Iterate quickly on prompts, test different models, compare responses, and manage prompts with built-in version control and deployment features.

- Data annotation: Label and manage datasets with LLM-driven workflows that support collaboration across annotation teams.

- Experiment management: Run evaluations in development, CI/CD, or production environments to automatically detect and fix regressions.

- Observability: Monitor LLM usage, evaluation scores, and usage metrics end to end to keep AI applications reliable.

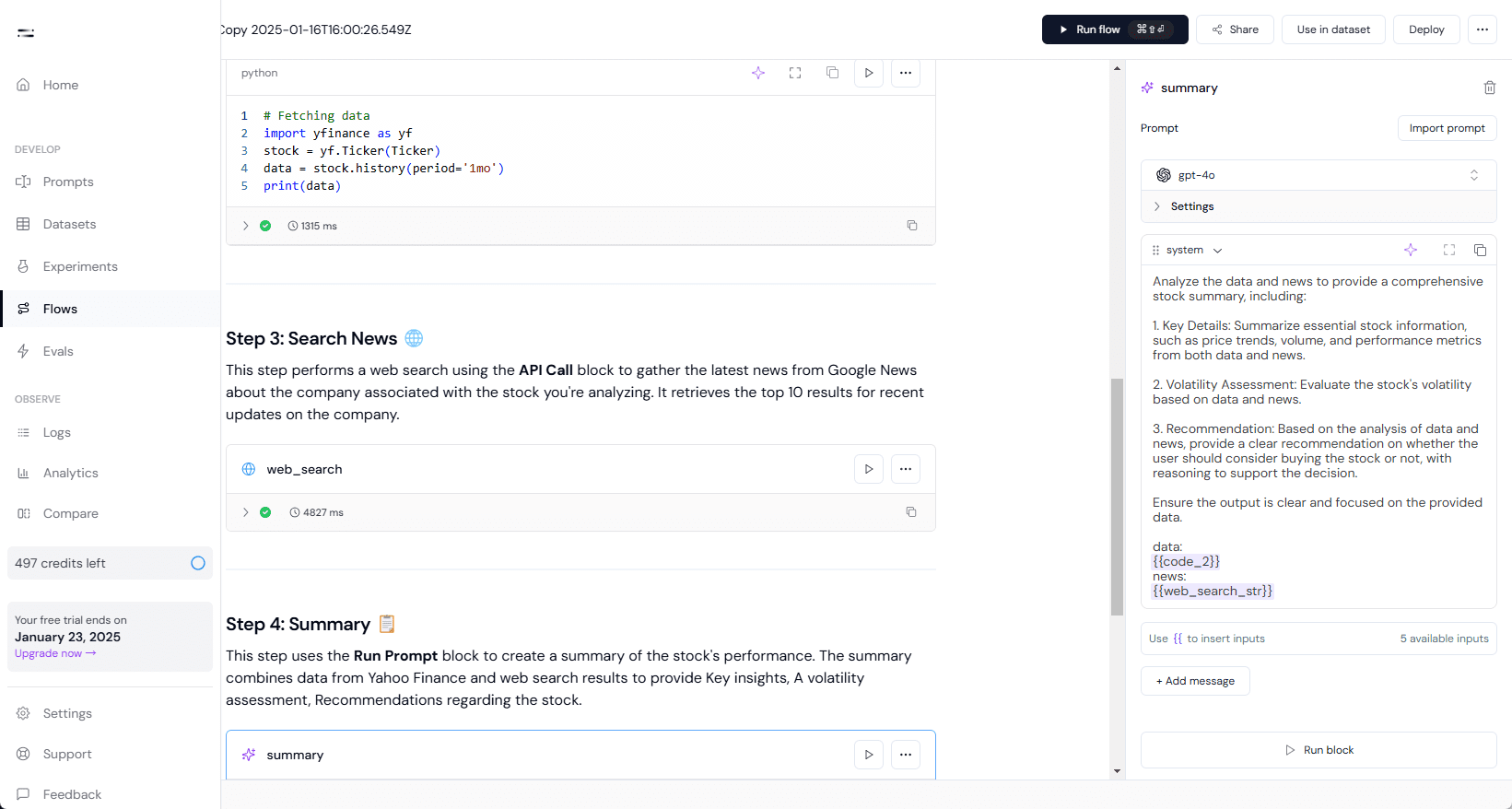

- Flow management: Build complex pipelines by chaining prompts, API calls, searches, code functions, and more.

- Self-hosted deployment: Deploy Athina entirely within your own VPC to keep data private and secure.
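To make the flow-management idea concrete, here is a minimal sketch of a pipeline in which each step reads a shared context and adds new values to it. This is not Athina's API: `run_flow` and the three step functions are hypothetical, and the "retrieval" step is a hard-coded lookup standing in for a real search or LLM call.

```python
from typing import Any, Callable, Dict, List

# Hypothetical step type: reads the shared context, returns new keys to merge into it.
Step = Callable[[Dict[str, Any]], Dict[str, Any]]


def run_flow(steps: List[Step], inputs: Dict[str, Any]) -> Dict[str, Any]:
    """Run steps in order, passing each one the context accumulated so far."""
    context = dict(inputs)
    for step in steps:
        context.update(step(context))
    return context


def retrieve(ctx: Dict[str, Any]) -> Dict[str, Any]:
    # Stand-in for a search/retrieval step; a real flow would query an index.
    docs = {"athina": "Athina is a platform for building and monitoring AI features."}
    return {"document": docs.get(ctx["topic"].lower(), "")}


def build_prompt(ctx: Dict[str, Any]) -> Dict[str, Any]:
    # Prompt step: fill a template with the retrieved document and the question.
    return {"prompt": f"Answer using this context: {ctx['document']}\nQuestion: {ctx['question']}"}


def count_words(ctx: Dict[str, Any]) -> Dict[str, Any]:
    # Code-function step: any plain Python can post-process earlier outputs.
    return {"prompt_words": len(ctx["prompt"].split())}


result = run_flow([retrieve, build_prompt, count_words],
                  {"topic": "Athina", "question": "What is Athina?"})
print(result["prompt"])
```

Passing one dictionary between steps keeps each step independent, so steps can be reordered or swapped (for example, replacing the stub retrieval with a real API call) without changing the runner.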

How to Use

Installation process

Athina AI supports self-hosted deployment, which lets you deploy Athina entirely within your own VPC to keep data private and secure. The installation process is as follows:

- Download Athina: Download the latest version of the Athina installer from the official Athina website.

- Configure the environment: Set up the required environment variables and dependencies as described in the official documentation.

- Deploy Athina: Run the installer and follow the prompts to complete the deployment.

- Access the platform: Once deployment is complete, open the Athina platform in your browser to get started.

Guidelines for use

Dataset Evaluation

- Upload a dataset: Upload the dataset you want to evaluate to the platform.

- Select evaluation criteria: Choose preset evaluation criteria or configure custom ones.

- Run the evaluation: Click the "Run Assessment" button; the platform evaluates the dataset automatically and generates an evaluation report.
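Conceptually, an evaluation run applies each selected criterion to every row and reports a pass rate per criterion. The sketch below illustrates that idea in plain Python under stated assumptions: the evaluators `contains_expected` and `max_length_100`, the row fields, and `run_evaluations` are all hypothetical, not Athina's preset evaluations or SDK, and the two-row dataset is made up.

```python
from typing import Callable, Dict, List

Row = Dict[str, str]
Evaluator = Callable[[Row], bool]


def contains_expected(row: Row) -> bool:
    """Hypothetical criterion: the expected answer appears in the response (case-insensitive)."""
    return row["expected"].lower() in row["response"].lower()


def max_length_100(row: Row) -> bool:
    """Hypothetical criterion: the response is at most 100 characters long."""
    return len(row["response"]) <= 100


def run_evaluations(dataset: List[Row], evaluators: Dict[str, Evaluator]) -> Dict[str, float]:
    """Return the fraction of rows that pass each evaluator."""
    report = {}
    for name, evaluate in evaluators.items():
        passed = sum(1 for row in dataset if evaluate(row))
        report[name] = passed / len(dataset) if dataset else 0.0
    return report


# Made-up example rows: one correct answer, one wrong answer.
dataset = [
    {"query": "Capital of France?", "response": "The capital is Paris.", "expected": "Paris"},
    {"query": "2 + 2?", "response": "It is 5.", "expected": "4"},
]
report = run_evaluations(dataset, {"contains_expected": contains_expected,
                                   "max_length_100": max_length_100})
print(report)
```

Keeping each criterion as a small function that returns pass/fail makes it easy to mix preset-style checks with custom ones in the same report.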

Prompt Management

- Create a prompt: Create a new prompt in the Prompt Management module.

- Test prompts: Select different models, enter a prompt, and test each model's response.

- Compare responses: Compare the responses from different models and choose the best prompt.

- Version control: Manage different versions of a prompt with the built-in version control feature.

- Deploy prompts: Deploy prompts to production and monitor how well they perform in real time.
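Prompt version control with deployment usually means two things: every edit is kept as a numbered version, and a separate pointer records which version production uses, so rolling back is just moving the pointer. The toy `PromptRegistry` below is a hypothetical illustration of that model, not Athina's implementation or API.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class PromptRegistry:
    """Hypothetical in-memory prompt store with versions and a deployed pointer."""
    versions: Dict[str, List[str]] = field(default_factory=dict)
    deployed: Dict[str, int] = field(default_factory=dict)

    def commit(self, name: str, template: str) -> int:
        """Save a new version of a prompt and return its 1-based version number."""
        history = self.versions.setdefault(name, [])
        history.append(template)
        return len(history)

    def deploy(self, name: str, version: int) -> None:
        """Mark an existing version as the one served in production."""
        if not 1 <= version <= len(self.versions.get(name, [])):
            raise ValueError(f"unknown version {version} for prompt {name!r}")
        self.deployed[name] = version

    def get(self, name: str, version: Optional[int] = None) -> str:
        """Fetch a specific version, or the deployed one when no version is given."""
        v = version if version is not None else self.deployed[name]
        return self.versions[name][v - 1]


registry = PromptRegistry()
v1 = registry.commit("summarize", "Summarize: {text}")
v2 = registry.commit("summarize", "Summarize in one sentence: {text}")
registry.deploy("summarize", v2)
print(registry.get("summarize").format(text="Athina helps teams ship AI features."))
registry.deploy("summarize", v1)  # roll back by moving the pointer; v2 stays in history
```

Because old versions are never overwritten, a rollback cannot lose work, and you can still compare any two versions later.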

Data Annotation

- Create annotation tasks: Create a new annotation task in the Data Annotation module.

- Assign tasks: Assign annotation tasks to members of the annotation team.

- Label data: Annotation team members label data using LLM-driven workflows.

- Review labeling results: Review the labels to ensure data quality.
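One common way to review labeling quality (a general technique, not necessarily what Athina does) is to have two annotators label the same items, then measure their agreement and inspect the items where they disagree. The sketch below computes observed agreement and Cohen's kappa, which corrects agreement for chance; the function name `agreement_report` and the sample labels are hypothetical.

```python
from collections import Counter
from typing import List, Tuple


def agreement_report(a: List[str], b: List[str]) -> Tuple[float, float, List[int]]:
    """Return (observed agreement, Cohen's kappa, indices where the two annotators disagree)."""
    if len(a) != len(b) or not a:
        raise ValueError("label lists must be non-empty and the same length")
    n = len(a)
    disagreements = [i for i, (x, y) in enumerate(zip(a, b)) if x != y]
    p_o = 1 - len(disagreements) / n  # fraction of items with identical labels
    ca, cb = Counter(a), Counter(b)
    # Expected chance agreement from each annotator's label frequencies.
    p_e = sum(ca[label] * cb[label] for label in ca) / (n * n)
    kappa = 1.0 if p_e == 1 else (p_o - p_e) / (1 - p_e)
    return p_o, kappa, disagreements


# Hypothetical labels from two annotators on the same four items.
annotator_1 = ["good", "bad", "good", "good"]
annotator_2 = ["good", "bad", "bad", "good"]
p_o, kappa, to_review = agreement_report(annotator_1, annotator_2)
print(f"agreement={p_o:.2f} kappa={kappa:.2f} review items {to_review}")
```

The disagreement indices give reviewers a short list of items to adjudicate, and a low kappa suggests the labeling guidelines may need clarifying.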

Experiment Management

- Create an experiment: Create a new experiment in the Experiment Management module.

- Configure experiment parameters: Set the parameters and evaluation criteria for the experiment.

- Run the experiment: Click the "Run Experiment" button; the platform runs the experiment automatically and generates an experiment report.

- Analyze the results: Analyze the experiment results to improve models and prompts.
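Detecting a regression comes down to comparing a candidate run's evaluation scores with a baseline and flagging metrics that dropped by more than a tolerance. Here is a minimal sketch of that comparison; `detect_regressions`, the metric names, the scores, and the 0.02 tolerance are all illustrative assumptions, not Athina output or defaults.

```python
from typing import Dict, List


def detect_regressions(baseline: Dict[str, float], candidate: Dict[str, float],
                       tolerance: float = 0.02) -> List[str]:
    """Return metrics whose candidate score fell more than `tolerance` below the baseline."""
    return [metric for metric, base in baseline.items()
            if metric in candidate and base - candidate[metric] > tolerance]


# Made-up scores: relevance improved, faithfulness dropped by 0.07.
baseline = {"answer_relevance": 0.86, "faithfulness": 0.91}
candidate = {"answer_relevance": 0.88, "faithfulness": 0.84}
regressions = detect_regressions(baseline, candidate)
if regressions:
    print("Regressions detected:", regressions)
```

In a CI/CD pipeline, a script like this would typically exit with a non-zero status when `regressions` is non-empty, so the change fails the build before it reaches production.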

Observability

- Monitor LLM usage: View LLM usage and evaluation scores in the Observability module.

- Set alerts: Configure alert rules to monitor AI application performance in real time.

- View logs: Inspect detailed log messages to see how each step was executed.
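An alert rule generally pairs a metric with a comparison and a threshold, then fires when the latest value crosses it. The sketch below shows that evaluation loop. `AlertRule`, `check_alerts`, the metric names, and the values are hypothetical and do not reflect Athina's alert configuration format.

```python
import operator
from dataclasses import dataclass
from typing import Dict, List

# Supported comparison operators for rules.
OPS = {">": operator.gt, "<": operator.lt, ">=": operator.ge, "<=": operator.le}


@dataclass
class AlertRule:
    """Hypothetical rule: fire when `metric <op> threshold` holds."""
    metric: str
    op: str
    threshold: float


def check_alerts(rules: List[AlertRule], metrics: Dict[str, float]) -> List[str]:
    """Return a message for every rule whose condition holds on the current metrics."""
    fired = []
    for rule in rules:
        value = metrics.get(rule.metric)
        if value is not None and OPS[rule.op](value, rule.threshold):
            fired.append(f"{rule.metric} {rule.op} {rule.threshold} (current: {value})")
    return fired


rules = [AlertRule("p95_latency_ms", ">", 2000), AlertRule("avg_eval_score", "<", 0.7)]
# Made-up metrics for one monitoring window.
window = {"p95_latency_ms": 2450.0, "avg_eval_score": 0.82, "error_rate": 0.01}
alerts = check_alerts(rules, window)
for alert in alerts:
    print("ALERT:", alert)
```

Rules that reference a missing metric are skipped rather than raising an error, so a gap in telemetry does not crash the alert check.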

© Copyright Notice

Article copyright: AI Sharing Circle. All rights reserved; please do not reproduce without permission.
