Archon: a development framework for autonomously building and optimizing AI agents

General Introduction

Archon is the world's first "Agenteer" project, built by developer Cole Medin (GitHub username coleam00): an open-source framework focused on autonomously building, optimizing, and iterating on AI agents. It is both a practical tool for developers and an educational platform that demonstrates the evolution of agentic systems. Archon, currently at version V4, provides an intuitive management interface through a fully optimized Streamlit UI. Developed in Python, the project integrates technologies such as Pydantic AI, LangGraph, and Supabase, and has evolved from simple agent generation to complex workflow collaboration. Through planning, feedback loops, and domain knowledge integration, Archon demonstrates three principles of modern AI development: intelligent reasoning, knowledge embedding, and extensible architecture.

Archon is what I like to call a "parasite" on other agent development frameworks. It builds and optimizes agents for those frameworks, but it is also an agent itself.

Function List

- Automatic agent generation: Generates customized AI agent code from the requirements the user enters.

- Document Crawling and Indexing: Crawls documentation (such as the Pydantic AI docs), generates vector embeddings, and stores them in Supabase.

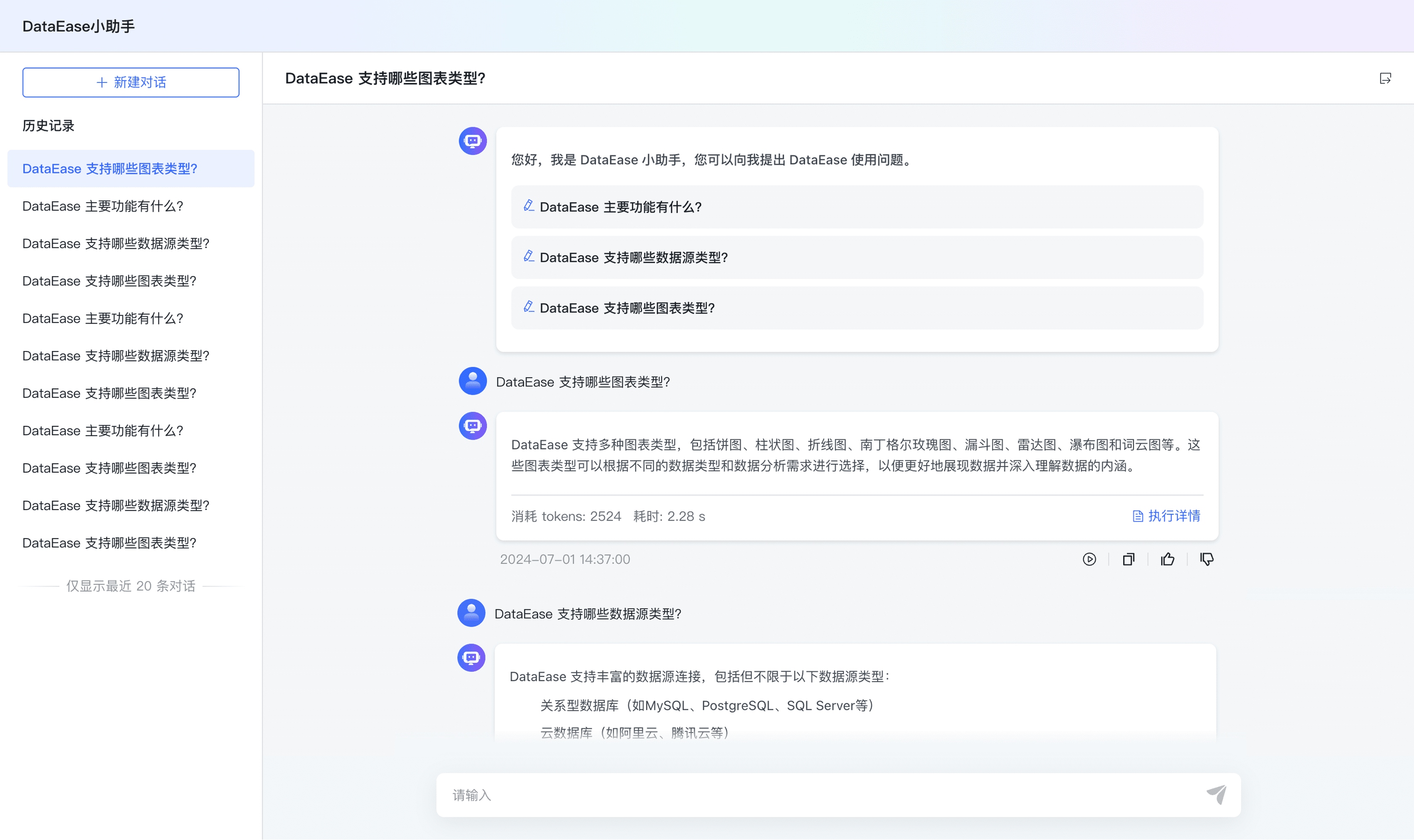

- RAG System Support: Uses retrieval-augmented generation (RAG) to provide accurate code suggestions and optimizations.

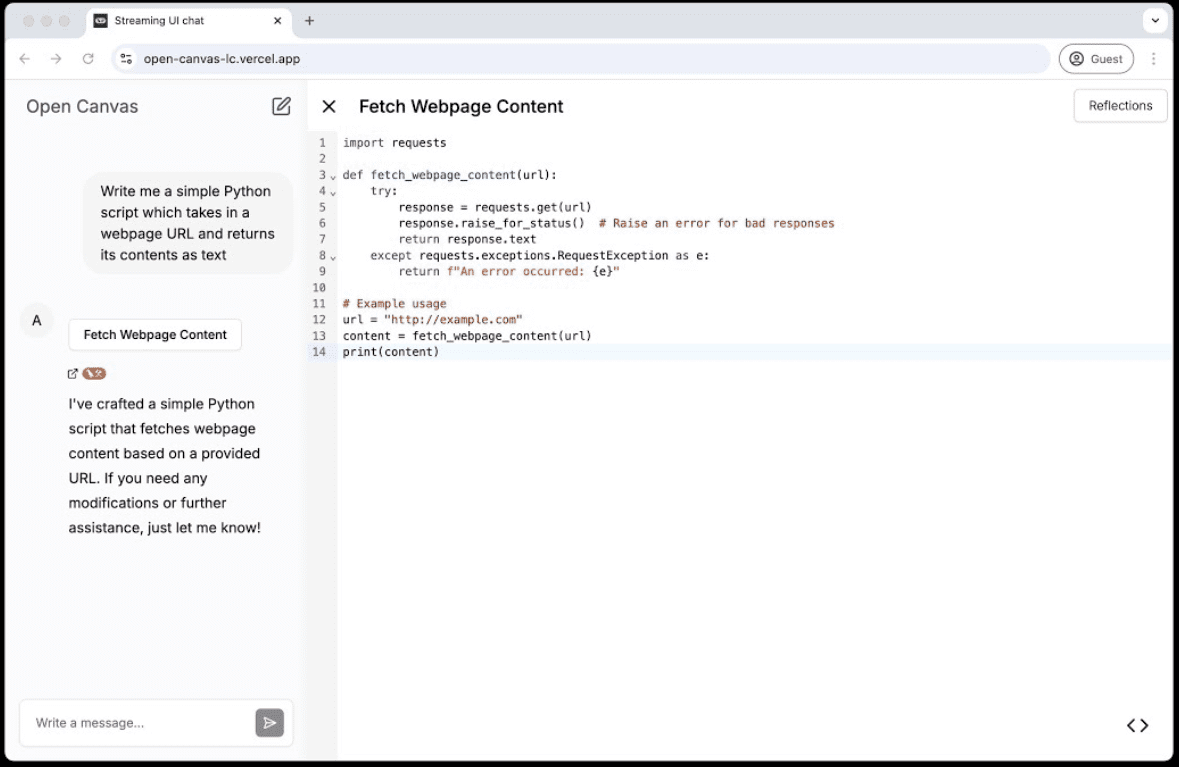

- Streamlit UI Management: Configure environment variables, databases, and agent services through a visual interface.

- Multi-Agent Collaboration: A coordinated workflow based on LangGraph that handles reasoning, coding, and task routing.

- Local and cloud compatible: Supports the OpenAI, Anthropic, and OpenRouter APIs as well as local Ollama models.

- Docker Containerized Deployment: Provides a main container and an MCP container so that production environments are ready to use out of the box.

- MCP Integration: Support for integration with AI IDEs (e.g. Windsurf, Cursor) to manage files and dependencies.

- Community Support: Provides a platform for user communication through the oTTomator Think Tank forum.

Usage Guide

Archon is a feature-rich open-source framework designed to help users quickly develop and manage AI agents. Below is a detailed installation and usage guide to help you get started.

Installation process

Archon V4 supports two installation methods: Docker (recommended) and local Python installation. Here are the steps:

Method 1: Docker installation (recommended)

- Prepare the environment

  - Make sure Docker and Git are installed.

  - Get a Supabase account (for the vector database).

  - Prepare an OpenAI, Anthropic, or OpenRouter API key, or run Ollama locally.

- Clone the repository

  Run in the terminal:

  git clone https://github.com/coleam00/archon.git
  cd archon

- Run the Docker script

  Execute the following command to build and start the containers:

  python run_docker.py

  - The script automatically builds two containers: the main Archon container (which runs the Streamlit UI and the Graph service) and the MCP container (which supports AI IDE integration).

  - If a .env file is present, its environment variables are loaded automatically.

- Access the interface

  Open your browser and visit http://localhost:8501 to reach the Streamlit UI.

Method 2: Local Python installation

- Prepare the environment

  - Make sure Python 3.11+ is installed.

  - Get a Supabase account and an API key.

- Clone the repository

  git clone https://github.com/coleam00/archon.git
  cd archon

- Create a virtual environment and install dependencies

  python -m venv venv
  source venv/bin/activate  # Windows: venv\Scripts\activate
  pip install -r requirements.txt

- Launch the Streamlit UI

  streamlit run streamlit_ui.py

- Access the interface

  Open your browser and visit http://localhost:8501.

Environment variable configuration

Whether you use Docker or a local installation, you need to configure environment variables before the first run. Create a .env file with contents like the following:

OPENAI_API_KEY=your_openai_api_key
SUPABASE_URL=your_supabase_url
SUPABASE_SERVICE_KEY=your_supabase_service_key
PRIMARY_MODEL=gpt-4o-mini
REASONER_MODEL=o3-mini

The Supabase values are available on the Supabase dashboard, and the API key comes from your model provider.
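As a minimal sketch of how such a file could be sanity-checked before launching the UI, the snippet below parses KEY=value lines and reports which variables are still missing. It is not Archon's own loader: `parse_env` and `missing_keys` are hypothetical helpers, and the list of required keys is simply the five variables from the sample above.

```python
# Hypothetical helpers for checking a .env file; not part of Archon.
REQUIRED_KEYS = (
    "OPENAI_API_KEY",
    "SUPABASE_URL",
    "SUPABASE_SERVICE_KEY",
    "PRIMARY_MODEL",
    "REASONER_MODEL",
)


def parse_env(text: str) -> dict[str, str]:
    """Parse KEY=value lines, skipping blank lines and # comments."""
    values: dict[str, str] = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        values[key.strip()] = value.strip().strip('"').strip("'")
    return values


def missing_keys(values: dict[str, str]) -> list[str]:
    """Return the required keys that are absent or empty."""
    return [key for key in REQUIRED_KEYS if not values.get(key)]


# An incomplete sample: two of the five variables are not set.
sample = """
# model provider
OPENAI_API_KEY=your_openai_api_key
SUPABASE_URL=your_supabase_url
PRIMARY_MODEL=gpt-4o-mini
"""
config = parse_env(sample)
print(missing_keys(config))  # ['SUPABASE_SERVICE_KEY', 'REASONER_MODEL']
```

To check a real file, pass the result of `pathlib.Path(".env").read_text()` to `parse_env` instead of the sample string.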

Functional operation flow

Once installed, the Streamlit UI provides five main functional modules that walk you through setup and use step by step.

1. Environment configuration (Environment)

- Procedure:

  - Go to the "Environment" tab.

  - Enter the API key (e.g. OpenAI or OpenRouter) and the model settings (e.g. gpt-4o-mini).

  - Click "Save"; the configuration is stored in env_vars.json.

- Note: If you use a local Ollama model, make sure the Ollama service is running on localhost:11434. UI streaming is supported only with OpenAI.
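Before selecting a local model, it can help to confirm that something is listening on the Ollama port mentioned above. The following is a small sketch using only the standard library. `is_listening` is a hypothetical helper, and it only verifies that a TCP connection succeeds, not that the process behind the port is actually Ollama.

```python
import socket


def is_listening(host: str = "localhost", port: int = 11434, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name-resolution failures.
        return False


if __name__ == "__main__":
    print("Port 11434 reachable:", is_listening())
```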

2. Database settings (Database)

- Procedure:

  - Go to the "Database" tab.

  - Enter the Supabase URL and service key.

  - Click "Initialize Database"; the system automatically creates the site_pages table.

  - Check the connection status to confirm success.

- Table structure:

  CREATE TABLE site_pages (
      id UUID PRIMARY KEY DEFAULT uuid_generate_v4(),
      url TEXT,
      chunk_number INTEGER,
      title TEXT,
      summary TEXT,
      content TEXT,
      metadata JSONB,
      embedding VECTOR(1536)
  );
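To make the column layout concrete, here is a sketch of a Python-side record that mirrors the site_pages columns and rejects embeddings whose length does not match VECTOR(1536). The `SitePage` class and the example URL are illustrative assumptions, not code from Archon.

```python
from dataclasses import asdict, dataclass, field
from typing import Any

EMBEDDING_DIM = 1536  # matches VECTOR(1536) in the table definition


@dataclass
class SitePage:
    """One site_pages row; id is omitted because the database generates it."""

    url: str
    chunk_number: int
    title: str
    summary: str
    content: str
    embedding: list[float]
    metadata: dict[str, Any] = field(default_factory=dict)

    def __post_init__(self) -> None:
        # Fail early instead of letting the database reject the insert.
        if len(self.embedding) != EMBEDDING_DIM:
            raise ValueError(
                f"embedding has {len(self.embedding)} values, expected {EMBEDDING_DIM}"
            )


row = SitePage(
    url="https://example.com/docs/agents",  # placeholder URL
    chunk_number=0,
    title="Agents",
    summary="Placeholder summary",
    content="Placeholder content",
    embedding=[0.0] * EMBEDDING_DIM,
)
payload = asdict(row)  # plain dict whose keys match the column names
```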

3. Documentation crawling and indexing (Documentation)

- Procedure:

  - Go to the "Documentation" tab.

  - Enter the URL of the target documentation (e.g. the Pydantic AI docs).

  - Click "Start Crawling"; the system splits the documents into chunks, generates embeddings, and stores them in Supabase.

  - You can check the indexing progress in the UI.

- Purpose: Provides the knowledge base for the RAG system.
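The chunking step described above can be sketched as follows. The article does not say how Archon splits documents, so the fixed-size character windows with a small overlap are an assumption, and `chunk_text` and `build_records` are hypothetical helpers. The embedding call is left out because it depends on the model provider.

```python
def chunk_text(text: str, chunk_size: int = 1000, overlap: int = 100) -> list[str]:
    """Split text into fixed-size character windows that overlap slightly."""
    if chunk_size <= 0 or not 0 <= overlap < chunk_size:
        raise ValueError("need chunk_size > 0 and 0 <= overlap < chunk_size")
    step = chunk_size - overlap
    chunks: list[str] = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # the current window already reaches the end of the text
    return chunks


def build_records(url: str, text: str, **chunk_options: int) -> list[dict]:
    """Pair each chunk with its position, mirroring the url/chunk_number/content columns."""
    return [
        {"url": url, "chunk_number": number, "content": chunk}
        for number, chunk in enumerate(chunk_text(text, **chunk_options))
    ]


page_text = "".join(str(i % 10) for i in range(2500))  # stand-in for a crawled page
records = build_records("https://example.com/docs", page_text)
print([len(record["content"]) for record in records])  # [1000, 1000, 700]
```

Each record would still need an embedding (and optionally a title and summary) before being stored.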

4. Agent service (Agent Service)

- Procedure:

  - Go to the "Agent Service" tab.

  - Click "Start Service" to run the LangGraph workflow.

  - Monitor the service status through the logs.

- Function: Coordinates reasoning, coding, and task routing so that the core agent-generation logic works properly.
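To illustrate the kind of coordination involved, the sketch below walks a shared state through three hypothetical nodes (a reasoner, a coder, and a router) in plain Python. It deliberately does not use the LangGraph API and is not Archon's actual graph. The node names, the state fields, and the one-refinement routing rule are all assumptions made for illustration.

```python
from typing import Callable, TypedDict


class State(TypedDict):
    request: str
    plan: str
    code: str
    feedback: list[str]


def reasoner(state: State) -> str:
    """Turn the request into a plan, then hand over to the coder."""
    state["plan"] = f"Plan for: {state['request']}"
    return "coder"


def coder(state: State) -> str:
    """Produce a draft; the draft number counts the feedback items received so far."""
    revision = len(state["feedback"])
    state["code"] = f"# draft {revision} implementing '{state['plan']}'"
    return "router"


def router(state: State) -> str:
    """Stand-in routing rule: ask for one refinement pass, then finish."""
    if not state["feedback"]:
        state["feedback"].append("add error handling")
        return "coder"
    return "END"


NODES: dict[str, Callable[[State], str]] = {
    "reasoner": reasoner,
    "coder": coder,
    "router": router,
}


def run_workflow(request: str, max_steps: int = 10) -> State:
    """Follow node-to-node transitions until END or the step limit is reached."""
    state: State = {"request": request, "plan": "", "code": "", "feedback": []}
    node = "reasoner"
    for _ in range(max_steps):
        if node == "END":
            break
        node = NODES[node](state)
    return state


final_state = run_workflow("parse Nginx logs")
print(final_state["code"])  # # draft 1 implementing 'Plan for: parse Nginx logs'
```

The step limit guards against a routing rule that never returns END.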

5. Creating an agent (Chat)

- Procedure:

  - Go to the "Chat" tab.

  - Enter a requirement, such as "Generate an agent that analyzes JSON files".

  - The system uses the knowledge retrieved by the RAG system to generate code and displays it.

  - Copy the code to run it locally, or deploy it via a service.

- Typical example:

  Input: "Generate an agent that queries real-time weather".

  Example output:

  from pydantic_ai import Agent
  from pydantic_ai.models.openai import OpenAIModel

  agent = Agent(OpenAIModel("gpt-4o-mini"), system_prompt="Query real-time weather")
  # run_sync blocks until the reply arrives; Agent.run is a coroutine and must be awaited.
  result = agent.run_sync("Get today's weather in Beijing")
  print(result.data)  # renamed to result.output in later pydantic-ai releases
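The retrieval step that feeds the Chat tab can be illustrated with a small in-memory sketch: rank the stored chunks by cosine similarity to the query embedding and keep the best few. The article does not describe how Archon runs this search against Supabase, so `cosine_similarity`, `top_k`, and the three-dimensional toy vectors (real embeddings would have 1536 values) are illustrative assumptions.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query_embedding: list[float], chunks: list[dict], k: int = 2) -> list[dict]:
    """Return the k chunks whose embeddings are most similar to the query."""
    ranked = sorted(
        chunks,
        key=lambda chunk: cosine_similarity(query_embedding, chunk["embedding"]),
        reverse=True,
    )
    return ranked[:k]


# Toy knowledge base with made-up contents and embeddings.
chunks = [
    {"content": "Defining an agent", "embedding": [1.0, 0.0, 0.0]},
    {"content": "Registering tools", "embedding": [0.0, 1.0, 0.0]},
    {"content": "Running an agent", "embedding": [0.9, 0.1, 0.0]},
]
best = top_k([1.0, 0.0, 0.0], chunks, k=2)
print([chunk["content"] for chunk in best])  # ['Defining an agent', 'Running an agent']
```

The retrieved chunk contents would then be placed in the prompt so that the generated code is grounded in the crawled documentation.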

Optional: MCP Configuration

- Procedure:

  - Go to the "MCP" tab.

  - Configure the integration with an AI IDE such as Cursor.

  - Start the MCP container and connect it to the Graph service.

- Purpose: Supports automated file creation and dependency management for agent code inside the IDE.

Usage example

Let's say you want to develop a log analyzer agent:

- In Chat, type: "Generate a Pydantic AI agent that parses Nginx logs".

- Archon retrieves the relevant documentation and generates code:

from pydantic_ai import Agent
from pydantic_ai.models.openai import OpenAIModel

agent = Agent(OpenAIModel("gpt-4o-mini"), system_prompt="Parse Nginx logs")
# run_sync blocks until the reply arrives; Agent.run is a coroutine and must be awaited.
result = agent.run_sync("Analyze access.log and extract the IP addresses")
print(result.data)  # renamed to result.output in later pydantic-ai releases

- Save the code locally and make sure the API key is configured before running it.

Caveats

- Model compatibility: Only OpenAI supports streaming output in the Streamlit UI; other models (e.g. Ollama) support non-streaming output only.

- Network requirements: Crawling documents and calling APIs requires a stable network connection.

- Debugging: If something goes wrong, check the UI log or the terminal output.

With these steps, you can take full advantage of Archon's capabilities to quickly generate and manage AI agents, both for learning and for real development work.

© Copyright notice

This article is copyrighted by AI Sharing Circle. Please do not reproduce it without permission.
