ARC-AGI-2 Results Revealed: A Waterloo for Every AI Model's Reasoning Ability

Benchmarks that measure progress toward artificial general intelligence (AGI) are critical. Effective benchmarks reveal capabilities, and great benchmarks go further and inspire research directions. The ARC Prize Foundation is dedicated to advancing AGI, and its ARC-AGI series of benchmarks plays exactly this role, directing research efforts toward truly general intelligence. The latest benchmark, ARC-AGI-2, and its preliminary test results are a wake-up call about the limits and the efficiency of current AI capabilities.

Since its launch in 2019, ARC-AGI-1 has played a unique role in tracking progress toward AGI, helping to identify when AI begins to move beyond mere pattern memorization. The subsequent ARC Prize 2024 competition attracted a large number of researchers to explore new ideas for test-time adaptation.

However, the road to AGI is still long. Current progress, such as that shown by OpenAI's o3 system, is perhaps only a limited breakthrough in the dimension of "fluid intelligence". These systems are not only inefficient but also require a lot of human supervision. Clearly, achieving AGI requires more fundamental innovation.

A New Challenge: ARC-AGI-2, Built to Expose AI Weaknesses

To this end, the ARC Prize Foundation has now launched the ARC-AGI-2 benchmark. It is designed with a clear goal in mind: to be significantly harder for AI (especially reasoning systems) while remaining relatively easy for humans. This is not simply an increase in difficulty, but a targeted challenge aimed at the barriers that current AI methods struggle to overcome.

Design Philosophy: Focusing on the Intelligence Gap Between What Is Easy for Humans and Hard for AI

Unlike many other AI benchmarks that pursue superhuman capabilities, ARC-AGI focuses on tasks that are relatively easy for humans but extremely difficult for current AI. This strategy aims to reveal capability gaps that cannot be closed by simply "scaling up". At the heart of general intelligence is the ability to generalize efficiently and apply knowledge from limited experience, which is exactly where current AI is weak.

ARC-AGI-2: Raising the Difficulty and Targeting the Weaknesses of AI Reasoning

Building on ARC-AGI-1, ARC-AGI-2 significantly raises the demands placed on AI, emphasizing a combination of high adaptability and efficiency. Drawing on an analysis of where frontier AI failed on earlier tasks, ARC-AGI-2 introduces more challenges that test the ability to interpret symbols, reason compositionally, apply contextual rules, and more. These tasks are designed to force AI beyond surface pattern matching toward deeper levels of abstraction and reasoning.

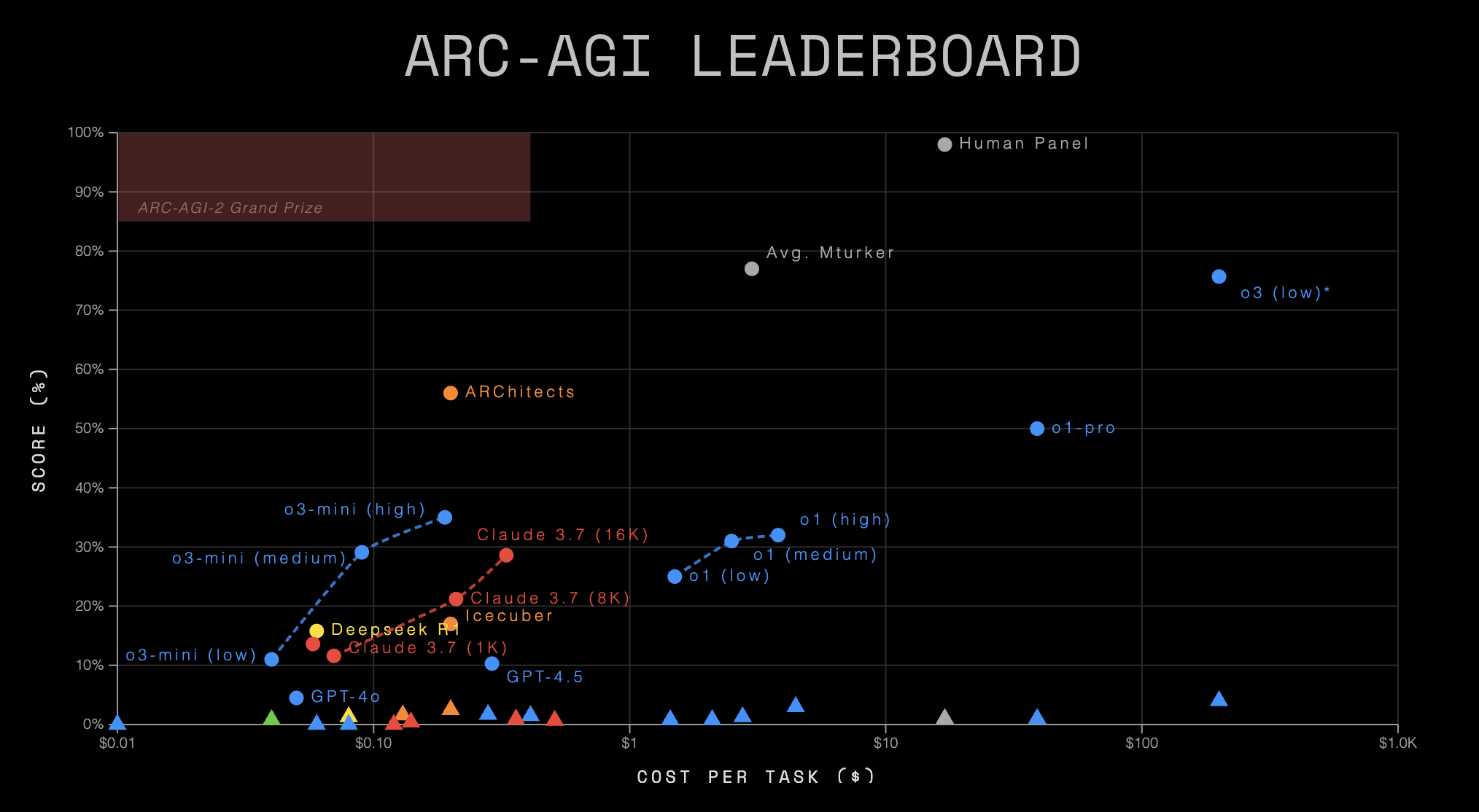

ARC-AGI-2 report card: a stark reflection of reality

The latest published ARC-AGI leaderboard data paints a grim picture of current AI capabilities. The data not only confirms that ARC-AGI-2 is challenging but, more profoundly, reveals the huge gulf in AI's general reasoning ability and efficiency.

Overview of Leaderboard data

| AI System | Organization | System Type | ARC-AGI-1 | ARC-AGI-2 | Cost/Task | Code / Paper |
|---|---|---|---|---|---|---|
| Human Panel | Human | N/A | 98.0% | 100.0% | $17.00 | - |
| o3 (low)* | OpenAI | CoT + Synthesis | 75.7% | 4.0% | $200.00 | 📄 |
| o1 (high) | OpenAI | CoT | 32.0% | 3.0% | $4.45 | 💻 |
| ARChitects | ARC Prize 2024 | Custom | 56.0% | 2.5% | $0.200 | 📄💻 |
| o3-mini (medium) | OpenAI | CoT | 29.1% | 1.7% | $0.280 | 💻 |
| Icecuber | ARC Prize 2024 | Custom | 17.0% | 1.6% | $0.130 | 💻 |
| o3-mini (high) | OpenAI | CoT | 35.0% | 1.5% | $0.410 | 💻 |
| Gemini 2.0 Flash | Google | Base LLM | N/A | 1.3% | $0.004 | 💻 |
| o1 (medium) | OpenAI | CoT | 31.0% | 1.3% | $2.76 | 💻 |
| Deepseek R1 | Deepseek | CoT | 15.8% | 1.3% | $0.080 | 💻 |
| Gemini-2.5-Pro-Exp-03-25 ** | Google | CoT | 12.5% | 1.3% | N/A | 💻 |
| o1-pro | OpenAI | CoT + Synthesis | 50.0% | 1.0% | $39.00 | - |
| Claude 3.7 (8K) | Anthropic | CoT | 21.2% | 0.9% | $0.360 | 💻 |
| Gemini 1.5 Pro | Google | Base LLM | N/A | 0.8% | $0.040 | 💻 |
| GPT-4.5 | OpenAI | Base LLM | 10.3% | 0.8% | $2.10 | 💻 |
| o1 (low) | OpenAI | CoT | 25.0% | 0.8% | $1.44 | 💻 |
| Claude 3.7 (16K) | Anthropic | CoT | 28.6% | 0.7% | $0.510 | 💻 |
| Claude 3.7 (1K) | Anthropic | CoT | 11.6% | 0.4% | $0.140 | 💻 |
| Claude 3.7 | Anthropic | Base LLM | 13.6% | 0.0% | $0.120 | 💻 |
| GPT-4o | OpenAI | Base LLM | 4.5% | 0.0% | $0.080 | 💻 |
| GPT-4o-mini | OpenAI | Base LLM | N/A | 0.0% | $0.010 | 💻 |
| o3-mini (low) | OpenAI | CoT | 11.0% | 0.0% | $0.060 | 💻 |

(Note: * indicates preliminary estimates; ** denotes an experimental model.)
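To make the gap between the two score columns concrete, here is a minimal Python sketch that computes, for a few rows of the table, the absolute drop in points and the fraction of the ARC-AGI-1 score that survives on ARC-AGI-2. The scores are copied from the table; the function names (`retained_fraction`, `summarize`) are illustrative choices for this sketch and not part of any ARC Prize tooling.

```python
# Scores in percent, copied from the leaderboard table: (ARC-AGI-1, ARC-AGI-2).
# Helper names are hypothetical and exist only for this illustration.
SCORES = {
    "o3 (low)": (75.7, 4.0),
    "o1-pro": (50.0, 1.0),
    "ARChitects": (56.0, 2.5),
    "o1 (high)": (32.0, 3.0),
    "Deepseek R1": (15.8, 1.3),
}


def retained_fraction(agi1: float, agi2: float) -> float:
    """Fraction of the ARC-AGI-1 score that survives on ARC-AGI-2."""
    return agi2 / agi1


def summarize(scores: dict[str, tuple[float, float]]) -> list[tuple[str, float, float]]:
    """Return (system, drop in points, retained fraction), largest drop first."""
    rows = [
        (name, agi1 - agi2, retained_fraction(agi1, agi2))
        for name, (agi1, agi2) in scores.items()
    ]
    return sorted(rows, key=lambda row: row[1], reverse=True)


if __name__ == "__main__":
    for name, drop, kept in summarize(SCORES):
        print(f"{name:12s} drop = {drop:5.1f} points, retained = {kept:.1%}")
```

Rows with N/A in the ARC-AGI-1 column are left out because no ratio can be computed for them.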

Insights: the warning behind the data

- Humans vs AI: The Insurmountable Divide

Nothing is more striking than the contrast between human and AI performance. On ARC-AGI-2, the human panel achieved a perfect score of 100%, while the best-performing AI system, OpenAI's o3 (low), scored only 4.0%. Other well-known models, such as Gemini 2.0 Flash and Deepseek R1, scored around 1.3%. Even more alarming, base large language models (Base LLMs) such as Claude 3.7, GPT-4o, and GPT-4o-mini, which excel in other domains, scored 0% on ARC-AGI-2. This shows that, despite being highly capable at specific tasks, AI is still fundamentally inferior to humans when faced with novel problems that require flexible, abstract, general reasoning.

- From AGI-1 to AGI-2: The Cliff Drop in AI Capabilities

Almost every AI system tested took a nosedive when moving from ARC-AGI-1 to ARC-AGI-2. For example, o3 (low) plunged from 75.7% to 4.0%, o1-pro from about 50% to 1.0%, and ARChitects from 56.0% to 2.5%. This across-the-board decline strongly suggests that ARC-AGI-2 does hit the "pain points" of current AI methods: whether they rely on CoT, Synthesis, or other custom approaches, they all struggle with the reasoning challenge that ARC-AGI-2 represents.

- System type and efficiency: high cost does not lead to high intelligence

The leaderboard further reveals how different types of AI system perform on ARC-AGI-2, along with serious efficiency problems:

  - CoT + Synthesis systems (o3 (low), o1-pro) include the highest-scoring AI entry (4.0% and 1.0%, respectively), but at a strikingly high cost ($200 and $39 per task, respectively). This suggests that complex reasoning combined with search may be able to "squeeze out" a few points, but it is extremely inefficient.

  - Pure CoT systems were mixed, with scores ranging from 0% to 3% and costs ranging from a few cents to a few dollars per task. This seems to indicate that CoT alone is not sufficient to meet the challenge.

  - Base LLMs (GPT-4.5, Gemini 1.5 Pro, Claude 3.7, GPT-4o) were a debacle, with scores of 0% or close to it. This is a strong rebuttal to the idea that "size is everything", at least for the general fluid intelligence that ARC-AGI measures.

  - Custom systems (ARChitects, Icecuber), both products of ARC Prize 2024, achieved results comparable to or even slightly better than most other AI systems (2.5% and 1.6%) at very low cost (roughly $0.1 to $0.2 per task). This may suggest that targeted, lightweight algorithms or architectures have more potential for this kind of problem than large general-purpose models, and it highlights the value of open competitions and community innovation.

- Crisis of efficiency: intelligence can't just be about scores

The ARC Prize's inclusion of "cost/task" as a key leaderboard metric is significant. The data shows that even the best-performing AI (o3 (low), scoring 4%) costs more than ten times as much per task ($200) as humans do ($17 for 100%). Some low-cost models, such as Gemini 2.0 Flash, are extremely cheap ($0.004) but score only 1.3%. AI systems therefore either score very low, cost a great deal, or both, in stark contrast to humans. Intelligence is not about getting the right answer at any cost; efficiency is an intrinsic property. The "capability-cost" curve that current AI shows on ARC-AGI-2 reveals a profound "efficiency crisis".
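The cost comparison above can be reproduced with a few lines of arithmetic. The following Python sketch divides each entry's cost per task by its ARC-AGI-2 solve rate to estimate the spend per correctly solved task. This ratio is an assumption introduced here for illustration; the leaderboard itself reports only score and cost per task, and the function names are hypothetical.

```python
# (ARC-AGI-2 score in percent, cost per task in USD), copied from the table.
ENTRIES = {
    "Human Panel": (100.0, 17.00),
    "o3 (low)": (4.0, 200.00),
    "o1 (high)": (3.0, 4.45),
    "ARChitects": (2.5, 0.20),
    "Gemini 2.0 Flash": (1.3, 0.004),
}


def cost_per_solved_task(score_pct: float, cost_per_task: float) -> float:
    """Estimated spend per correctly solved task; infinite if nothing is solved.

    Illustrative metric for this sketch, not one published on the leaderboard.
    """
    if score_pct <= 0:
        return float("inf")
    return cost_per_task / (score_pct / 100.0)


def rank_by_cost_per_solve(entries: dict[str, tuple[float, float]]) -> list[tuple[str, float]]:
    """Return (system, cost per solved task) pairs, cheapest first."""
    ranked = [
        (name, cost_per_solved_task(score, cost))
        for name, (score, cost) in entries.items()
    ]
    return sorted(ranked, key=lambda pair: pair[1])


if __name__ == "__main__":
    for name, cost in rank_by_cost_per_solve(ENTRIES):
        print(f"{name:18s} ${cost:,.2f} per solved task")
```

Under this ratio, o3 (low) comes out at roughly $5,000 per solved task against $17 for the human panel. The ratio alone flatters very cheap systems that solve almost nothing, so it should be read together with the score itself, which is why the leaderboard shows both columns side by side.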

Dataset Composition and Competition Details

ARC-AGI-2 contains calibrated training and evaluation sets and continues to use the pass@2 scoring mechanism. Major changes include an increase in the number of tasks, removal of tasks that are vulnerable to brute force, difficulty calibration based on human testing, and the design of targeted new tasks.
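The article does not spell out how pass@2 works, so the following Python sketch rests on a stated assumption: each test output may be attempted twice, an attempt counts only if the predicted grid matches the expected grid exactly, and the score is the fraction of test outputs solved. The type alias and function names are hypothetical and are not taken from the official scoring code.

```python
from typing import List

# A grid is a list of rows, each row a list of integer color codes.
Grid = List[List[int]]


def solved_pass_at_2(attempts: List[Grid], expected: Grid) -> bool:
    """True if either of the first two attempts matches the expected grid exactly."""
    return any(attempt == expected for attempt in attempts[:2])


def pass_at_2_score(predictions: List[List[Grid]], targets: List[Grid]) -> float:
    """Fraction of test outputs solved within two attempts (assumed scoring rule)."""
    if len(predictions) != len(targets):
        raise ValueError("one list of attempts is required per target")
    if not targets:
        return 0.0
    solved = sum(solved_pass_at_2(a, t) for a, t in zip(predictions, targets))
    return solved / len(targets)


if __name__ == "__main__":
    target = [[1, 0], [0, 1]]
    wrong = [[0, 0], [0, 0]]
    # First attempt wrong, second attempt right: the output counts as solved.
    print(pass_at_2_score([[wrong, target]], [target]))
```

Attempts beyond the second are ignored, so a system cannot raise its score by submitting many guesses per output.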

ARC Prize 2025 Competition Launched: Million Dollar Reward for New Ideas

Alongside this grim report card, the ARC Prize 2025 competition is live on the Kaggle platform (March 26 to November 3) with a total prize pool of up to $1 million. The competition environment limits API usage and compute resources (~$50/submission) and requires winners to open source their solutions. This further reinforces the need for efficiency and innovation.

Compared with 2024, the main changes in the 2025 competition include: the use of the ARC-AGI-2 dataset, a new leaderboard reporting mechanism, stronger open source requirements, doubled computational resources, and additional anti-overfitting measures.

Conclusion: A new paradigm is urgently needed for real breakthroughs

The ARC-AGI-2 leaderboard data is like a mirror that clearly reflects the limitations of current AI in general reasoning and efficiency. It reminds us that the road to AGI is far from straight, and that simply scaling up models or adding computational resources may not be enough to cross the chasm in front of us. Real breakthroughs may require new ideas, different architectures, and perhaps even innovators from outside the big labs. ARC Prize 2025 is a platform that calls for exactly such a new paradigm.

© Copyright notice

Copyright of this article belongs to AI Sharing Circle. Please do not reproduce without permission.
