AppAgent: automated smartphone operation using multimodal agents

General Introduction

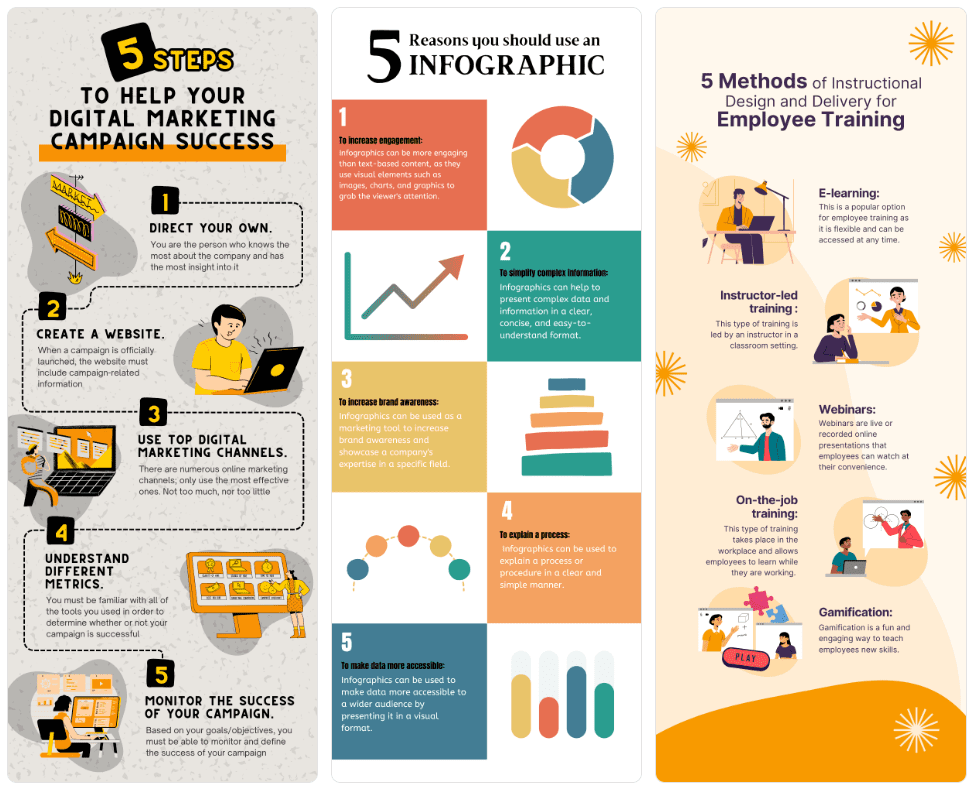

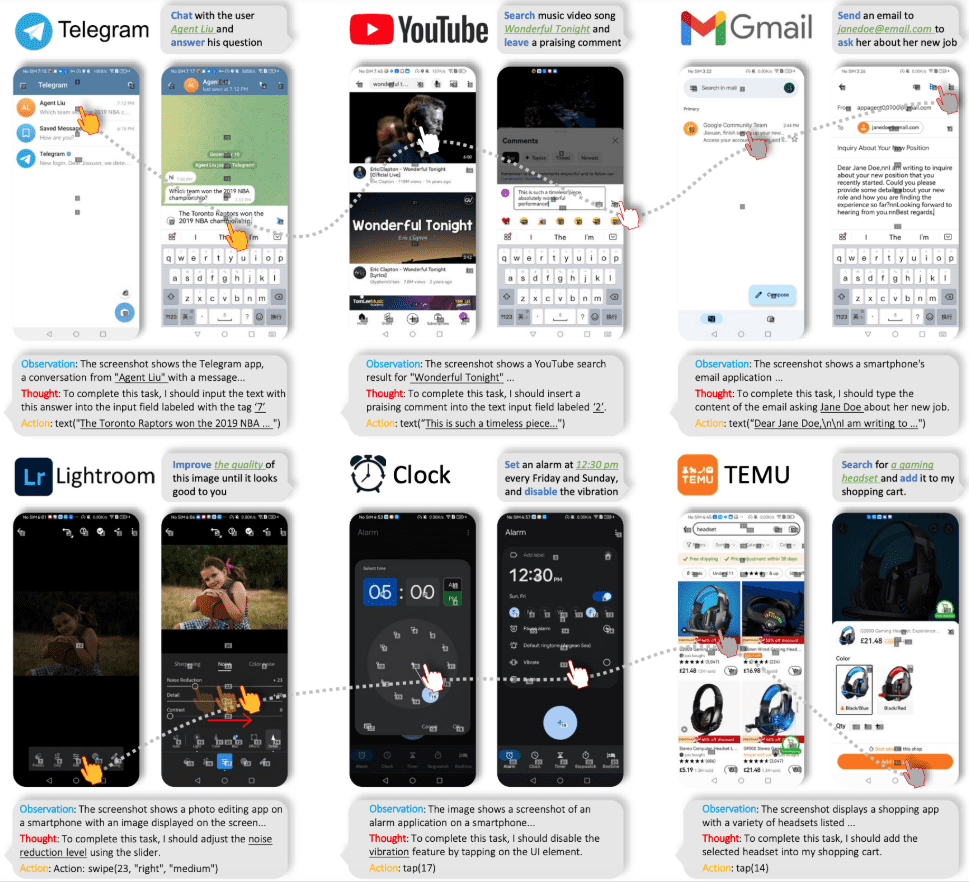

AppAgent is a multimodal agent framework built on large language models (LLMs) for operating smartphone applications. Because it acts through a simplified action space that mimics human interactions such as taps and swipes, it needs no access to the system back end, which lets it work across many different apps. AppAgent learns to use new apps by exploring them autonomously or by observing human demonstrations, and builds a knowledge base that it then draws on to perform complex tasks.

Tencent has released AppAgent, a multimodal agent that reads the current phone screen together with the user's instructions and operates the interface directly, just as a real user would. For example, it can edit pictures in a photo-editing app, navigate with a maps app, shop online, and so on.

Project home page: https://appagent-official.github.io

Link to paper: https://arxiv.org/abs/2312.13771

Paper Abstract

With recent advances in large language models (LLMs), intelligent agents capable of performing complex tasks have emerged. In this paper, we present a novel LLM-based multimodal agent framework designed to operate smartphone applications. Our framework lets the agent operate smartphone apps through a simplified action space, mimicking human interactions such as tapping and swiping. This approach bypasses the need for system back-end access, which makes it applicable to a wide range of apps. Central to our agent's functionality is its learning method: the agent learns to navigate and use new apps either through autonomous exploration or by observing human demonstrations. This process produces a knowledge base that the agent consults when executing complex tasks across different applications. To demonstrate the practicality of our agent, we tested it extensively on 50 tasks in 10 different applications, including social media, email, maps, shopping, and sophisticated image-editing tools. The results show that our agent handles a diverse range of high-level tasks proficiently.

Feature List

- Autonomous exploration: The agent explores an app on its own, records the interactive elements it finds, and generates documentation for them.

- Learning from human demonstrations: The agent learns a task by watching a human perform it and generates the corresponding documentation.

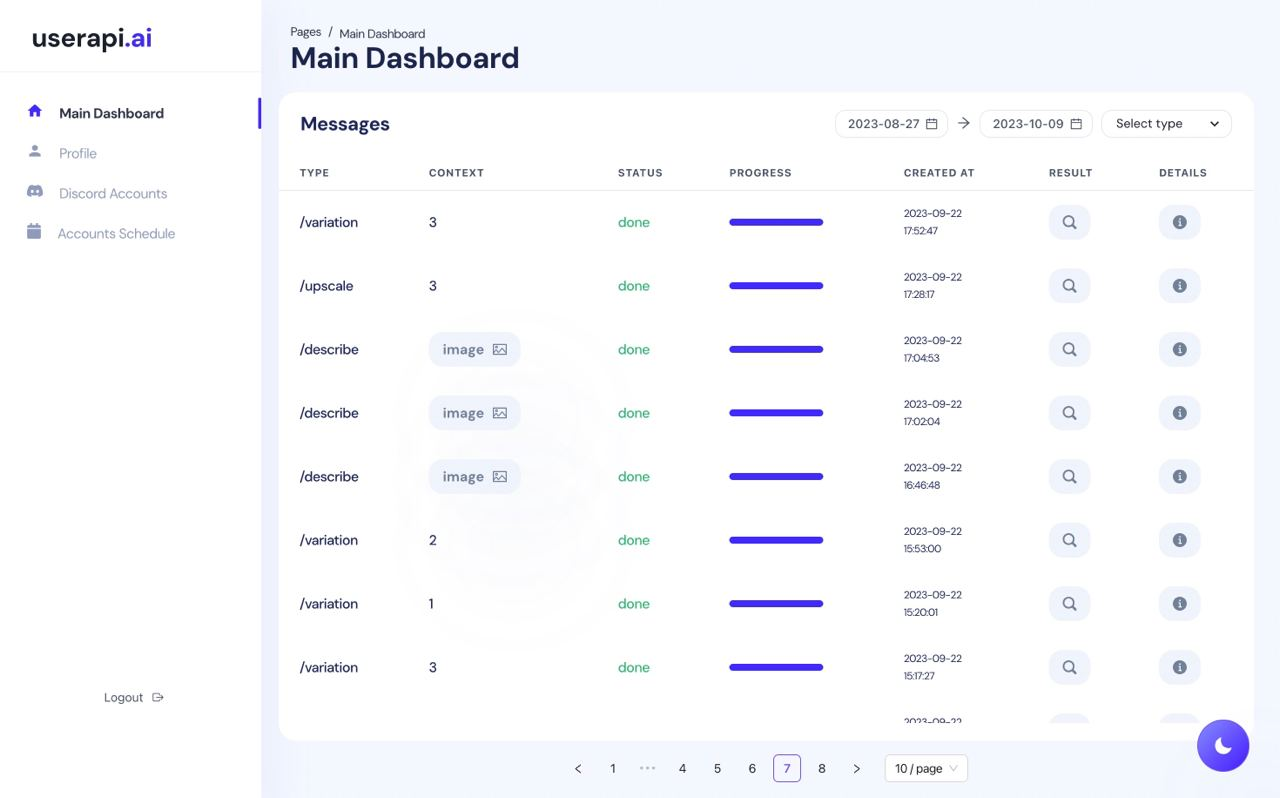

- Task execution: In the deployment phase, the agent carries out complex tasks using the generated documentation.

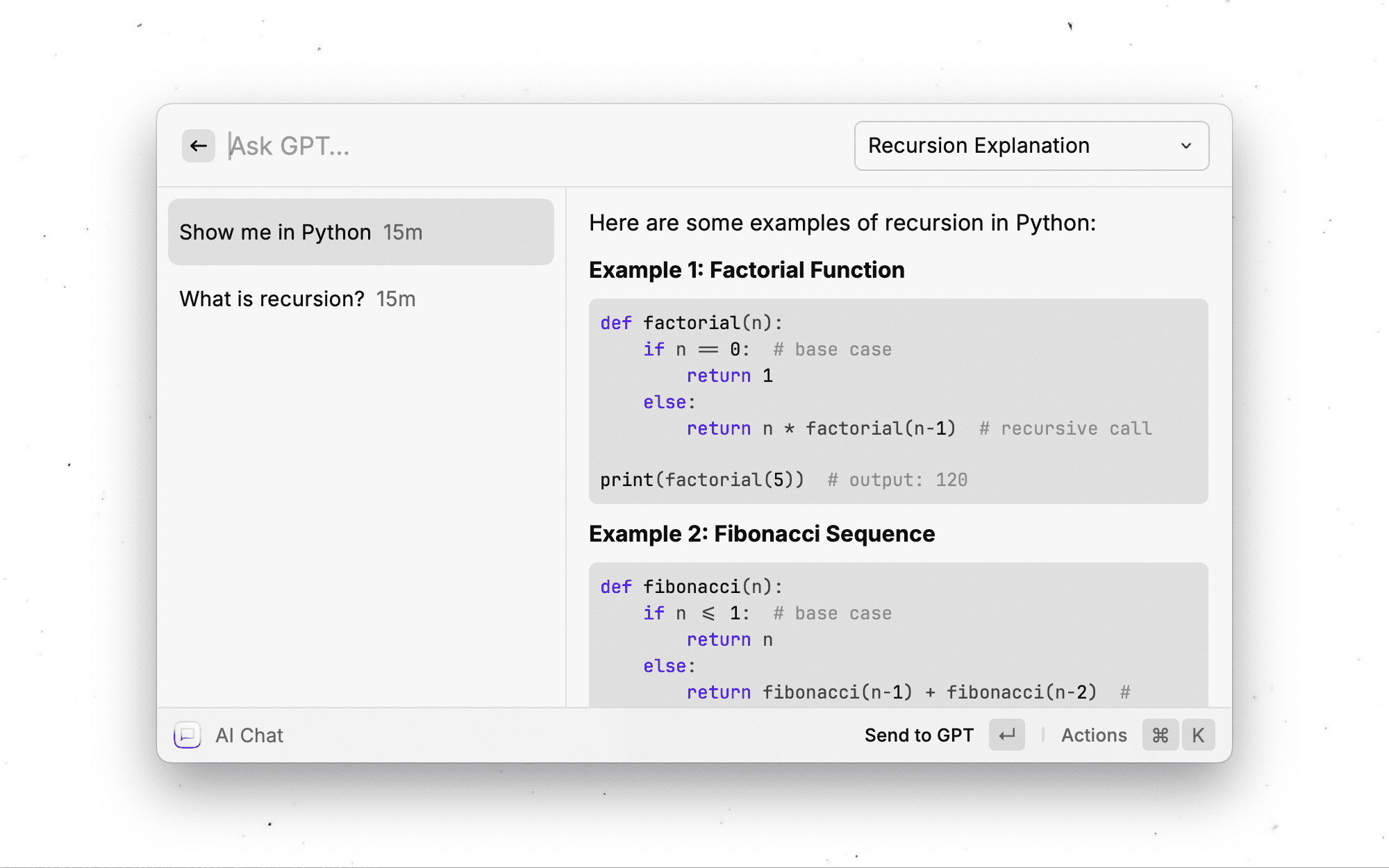

- Multimodal input: Accepts both text and images, using the GPT-4V or Qwen-VL-Max model.

- CAPTCHA handling: Can get past CAPTCHA verification steps.

- UI element recognition: Uses a grid overlay to locate UI elements that carry no labels.
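The grid overlay can be illustrated with a little arithmetic: the screenshot is divided into numbered cells, the model names a cell, and the agent taps that cell's center. The sketch below assumes square cells of 120 px numbered row-major from 1; the cell size, numbering scheme and the `grid_shape`/`cell_center` names are illustrative assumptions, not AppAgent's exact implementation.

```python
import math
from typing import Tuple


def grid_shape(width: int, height: int, cell: int = 120) -> Tuple[int, int]:
    """Number of (rows, cols) needed to cover the screen with square cells."""
    return math.ceil(height / cell), math.ceil(width / cell)


def cell_center(index: int, width: int, height: int, cell: int = 120) -> Tuple[int, int]:
    """Map a 1-based, row-major grid label to the pixel at the center of that cell.

    Cells in the last row or column may be partial; they are clipped to the screen.
    """
    rows, cols = grid_shape(width, height, cell)
    if not 1 <= index <= rows * cols:
        raise ValueError(f"grid label {index} outside 1..{rows * cols}")
    row, col = divmod(index - 1, cols)
    x0, y0 = col * cell, row * cell
    x1, y1 = min(x0 + cell, width), min(y0 + cell, height)
    return (x0 + x1) // 2, (y0 + y1) // 2
```

On a 1080x2400 screen this gives a 20-row by 9-column grid, so label 10 is the first cell of the second row and maps to (60, 180).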

How to Use

Installation and Configuration

- Download and installation: Download the project files from the GitHub page and install the required dependencies.

- Configuration file: Edit `config.yaml` in the root directory to set the API key for the GPT-4V or Qwen-VL-Max model.

- Connect the device: Connect your Android device over USB and enable USB debugging in Developer Options.
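Before running `learn.py`, it is worth confirming that the phone is actually visible over USB. Assuming the device is reached through ADB (the standard channel for USB debugging), the sketch below parses the output of the `adb devices` command and keeps only entries in the `device` (ready) state; an `unauthorized` entry usually means the USB-debugging prompt on the phone has not been accepted yet. `parse_adb_devices` and `connected_devices` are hypothetical helpers, not part of AppAgent.

```python
import subprocess
from typing import List


def parse_adb_devices(output: str) -> List[str]:
    """Return serials listed by `adb devices` whose state is 'device' (ready)."""
    serials = []
    for line in output.splitlines():
        line = line.strip()
        # Skip the header, blank lines, and daemon start-up messages ("* daemon ...").
        if not line or line.startswith("List of devices") or line.startswith("*"):
            continue
        parts = line.split()
        # Other states such as 'unauthorized' or 'offline' are not usable yet.
        if len(parts) >= 2 and parts[1] == "device":
            serials.append(parts[0])
    return serials


def connected_devices() -> List[str]:
    """Run `adb devices` (adb must be on PATH) and return the ready serials."""
    result = subprocess.run(["adb", "devices"], capture_output=True, text=True, check=True)
    return parse_adb_devices(result.stdout)
```

If `connected_devices()` returns an empty list, recheck the cable, the Developer Options toggle, and the authorization prompt before starting exploration.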

Autonomous Exploration Mode

- Start exploring: Run `learn.py`, select autonomous exploration mode, and enter the app name and task description.

- Record interactions: The agent explores the app automatically, records the interactive elements, and generates documentation.
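One way to picture how exploration turns into documentation: after each action, the model is shown the screen before and after and asked to describe (or refine its earlier description of) what that UI element does. The sketch below assumes a knowledge base that maps each element id to per-action descriptions, and uses a `describe` callback as a stand-in for the GPT-4V or Qwen-VL-Max call; the names and the data layout are illustrative, and AppAgent's own document format may differ.

```python
from typing import Callable, Dict, Optional

# Hypothetical layout: element id -> action name -> natural-language description.
KnowledgeBase = Dict[str, Dict[str, str]]

# Stand-in for the multimodal model: given the screenshots before and after an
# action, the action name, and any existing description, return a description.
Describer = Callable[[str, str, str, Optional[str]], str]


def record_interaction(kb: KnowledgeBase, element_id: str, action: str,
                       before: str, after: str, describe: Describer) -> str:
    """Document what `action` on `element_id` did, refining any earlier entry."""
    previous = kb.get(element_id, {}).get(action)
    description = describe(before, after, action, previous)
    kb.setdefault(element_id, {})[action] = description
    return description
```

Passing the previous description back to the model is what lets repeated visits to the same element refine its entry rather than overwrite it blindly.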

Human Demonstration Mode

- Start the demonstration: Run `learn.py`, select human demonstration mode, and enter the app name and task description.

- Perform the demonstration: Follow the prompts to carry out the task yourself; the agent records every interaction and generates documentation.
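A demonstration is essentially a trace of the human's actions. The sketch below records each step with the element that was touched and the screenshot taken just before it, then serializes the trace to JSON so it can be turned into documentation afterwards. The `DemoStep` and `DemoRecorder` classes and the JSON layout are hypothetical, not AppAgent's file format.

```python
import json
from dataclasses import asdict, dataclass, field
from typing import List


@dataclass
class DemoStep:
    step: int          # 1-based position in the demonstration
    action: str        # e.g. "tap", "swipe", "text"
    element_id: str    # label of the UI element the human touched
    screenshot: str    # screenshot captured just before the action


@dataclass
class DemoRecorder:
    task: str
    steps: List[DemoStep] = field(default_factory=list)

    def record(self, action: str, element_id: str, screenshot: str) -> DemoStep:
        """Append one human action to the trace."""
        step = DemoStep(len(self.steps) + 1, action, element_id, screenshot)
        self.steps.append(step)
        return step

    def to_json(self) -> str:
        """Serialize the whole demonstration for later documentation."""
        return json.dumps({"task": self.task,
                           "steps": [asdict(s) for s in self.steps]}, indent=2)
```

Storing the screenshot alongside each step keeps enough context for the model to explain, after the fact, what each touched element was for.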

Task Execution

- Start a task: Run `run.py`, enter the app name and task description, and select the appropriate documentation library.

- Execution: The agent carries out the task, drawing on the documentation to complete complex operations.
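At execution time the generated documentation has to reach the model somehow. A minimal sketch, assuming the knowledge base maps each element id to per-action descriptions and that the elements visible on screen are numbered on the screenshot: look up the documentation for every visible element and fold it into the prompt text. `build_prompt`, the prompt wording, and the `FINISH` reply convention are illustrative assumptions, not AppAgent's actual prompt.

```python
from typing import Dict, List

KnowledgeBase = Dict[str, Dict[str, str]]  # element id -> action -> description


def build_prompt(task: str, visible_elements: List[str], kb: KnowledgeBase) -> str:
    """Compose a prompt listing each on-screen element with any known documentation."""
    lines = [f"Task: {task}", "UI elements on this screen:"]
    # Labels start at 1 to match the numbers drawn on the screenshot.
    for label, element in enumerate(visible_elements, start=1):
        docs = kb.get(element)
        if docs:
            desc = "; ".join(f"{action}: {text}" for action, text in sorted(docs.items()))
        else:
            desc = "no documentation yet"
        lines.append(f"[{label}] {element} - {desc}")
    lines.append("Reply with the next action, e.g. tap(<label>), or FINISH if the task is done.")
    return "\n".join(lines)
```

Elements with no documentation are still listed, so the model can act on them even when exploration or the demonstration never reached them.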

Detailed Operation Procedure

- Download the project: Visit the GitHub page, download the project files, and unzip them.

- Install dependencies: Run `pip install -r requirements.txt` in a terminal to install all dependencies.

- Configure the model: Edit `config.yaml` as needed to set the API key for the GPT-4V or Qwen-VL-Max model.

- Connect the device: Connect your Android device over USB and enable USB debugging on it.

- Start exploration or demonstration: Run `learn.py`, select autonomous exploration or human demonstration mode, and enter the app name and task description.

- Generate documentation: The agent records all interactions and generates documentation for later task execution.

- Execute: Run `run.py`, enter the app name and task description, and select the appropriate documentation library; the agent then performs the task based on that documentation.
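Because the documentation library is chosen per app, a small helper can list which libraries exist before launching `run.py`. This sketch assumes documentation is stored in non-empty folders named `*_docs` (for example `auto_docs` and `demo_docs`) under `apps/<app name>/` in the project root; that directory layout and the `list_doc_libraries` name are assumptions for illustration, so check where your copy of `learn.py` actually writes its output.

```python
from pathlib import Path
from typing import List


def list_doc_libraries(root: str, app: str) -> List[str]:
    """Names of non-empty *_docs folders under <root>/apps/<app> (assumed layout)."""
    app_dir = Path(root) / "apps" / app
    if not app_dir.is_dir():
        return []
    return sorted(
        p.name
        for p in app_dir.iterdir()
        # Only folders that look like doc libraries and actually contain files.
        if p.is_dir() and p.name.endswith("_docs") and any(p.iterdir())
    )
```

An empty result for an app means neither exploration nor a demonstration has produced documentation for it yet, so `learn.py` should be run first.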

© Copyright notice

Article copyright belongs to AI Sharing Circle; please do not reproduce without permission.
