Install Dify and Integrate Ollama and Xinference

This article describes how to install Dify with Docker, integrate Ollama and Xinference, and use Dify to quickly build a knowledge-base Q&A application.

- I. Introduction to Dify

- II. Dify Installation

- III. Adding an Ollama Model to Dify for Q&A

- IV. Knowledge-Base Q&A in Dify

- V. Documentation links

I. Introduction to Dify

Dify is an open-source Large Language Model (LLM) application development platform designed to help developers rapidly build and deploy generative AI applications. Its key features are [1]:

- Fusion of Backend-as-a-Service and LLMOps: Dify combines the concepts of Backend as a Service and LLMOps so that developers can quickly build production-grade generative AI applications.

- Support for multiple models: Dify supports hundreds of proprietary and open-source LLMs, including GPT, Mistral, and Llama3, and integrates models from many inference providers and self-hosted solutions.

- Intuitive prompt orchestration interface: Dify provides a Prompt IDE for writing prompts, comparing model performance, and adding features such as text-to-speech to chat-based applications.

- High-quality RAG engine: Dify's RAG capabilities cover everything from document ingestion to retrieval, with support for extracting text from common document formats such as PDF and PPT.

- Integrated agent framework: Users can define agents based on LLM function calling or ReAct and add pre-built or custom tools to them. Dify offers more than 50 built-in tools, such as Google Search, DALL-E, Stable Diffusion, and WolframAlpha.

- Flexible workflow orchestration: Dify provides a visual canvas for building and testing robust AI workflows, so developers can design and optimize their AI processes intuitively.

- Comprehensive monitoring and analysis tools: Dify provides tools to monitor and analyze application logs and performance, and developers can continually improve prompts, datasets, and models based on production data and annotations.

- Backend as a Service: Every Dify feature has a corresponding API, so Dify is easy to integrate into your own business logic.

II. Dify Installation

Clone the Dify GitHub repository locally [2].

git clone https://github.com/langgenius/dify.git

Go to the docker directory of the Dify source code and copy the example environment file to .env.

cd dify/docker

cp .env.example .env

Start the application with Docker Compose.

docker compose up -d
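Before opening the web UI, it can help to confirm that the stack actually came up. The shell sketch below is an illustration, not part of Dify: wait_for_http is a hypothetical helper, and the http://localhost/install path (the first-run setup page on a default install) is an assumption to adjust to your host.

```shell
#!/usr/bin/env bash
# Hypothetical helper: poll a URL until it returns an HTTP 1xx-4xx status code.
# The URL you pass (e.g. http://localhost/install) is an assumption; adjust it to your host.
wait_for_http() {
  local url="$1" attempts="${2:-30}" delay="${3:-2}" code i
  for ((i = 1; i <= attempts; i++)); do
    # curl prints 000 as the status code when it cannot connect at all
    code=$(curl -s -o /dev/null -w '%{http_code}' --max-time 5 "$url")
    if [[ "$code" =~ ^[1-4][0-9][0-9]$ ]]; then
      echo "$url is up (HTTP $code)"
      return 0
    fi
    sleep "$delay"
  done
  echo "$url did not respond after $attempts attempts" >&2
  return 1
}

# Usage, from the dify/docker directory after "docker compose up -d":
#   docker compose ps                            # list the service containers and their state
#   wait_for_http http://localhost/install 60 2  # wait up to about two minutes for the web UI
```

Checking only for a non-5xx status keeps the helper generic: a redirect or a 404 still proves the web server is answering.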

Pull the Ollama image, start the Ollama container, then enter the container and run the qwen2:7b model.

docker pull ollama/ollama

docker run -d --gpus=all -v ollama:/root/.ollama -p 11434:11434 --name ollama --restart always -e OLLAMA_KEEP_ALIVE=-1 ollama/ollama

In this command, --gpus=all gives the container access to the host's NVIDIA GPUs (this requires the NVIDIA Container Toolkit), and OLLAMA_KEEP_ALIVE=-1 keeps loaded models in memory indefinitely.

docker exec -it ollama bash

Inside the container, start the model:

ollama run qwen2:7b
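To check that Ollama is serving qwen2:7b before wiring it into Dify, you can call its REST API on port 11434 from the host. This is a minimal sketch, assuming the container started above and Ollama's /api/generate endpoint with "stream": false; ollama_generate_body and ollama_ask are hypothetical helper names.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build a non-streaming /api/generate request body.
# The prompt is inserted verbatim, so it must not contain double quotes or backslashes.
ollama_generate_body() {
  printf '{"model": "%s", "prompt": "%s", "stream": false}' "$1" "$2"
}

# Hypothetical helper: send one prompt and print Ollama's JSON reply
# (the generated text is in its "response" field).
ollama_ask() {
  local base_url="$1" model="$2" prompt="$3"
  curl -s "$base_url/api/generate" \
    -H 'Content-Type: application/json' \
    -d "$(ollama_generate_body "$model" "$prompt")"
}

# Usage on the host:
#   curl -s http://localhost:11434            # prints "Ollama is running"
#   curl -s http://localhost:11434/api/tags   # lists pulled models; qwen2:7b should appear
#   ollama_ask http://localhost:11434 qwen2:7b "Introduce yourself in one sentence."
```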

III. Adding an Ollama Model to Dify for Q&A

Open the Dify home page at the EC2 instance's public IP address on port 80 and create an administrator account.

Log in with the administrator account.

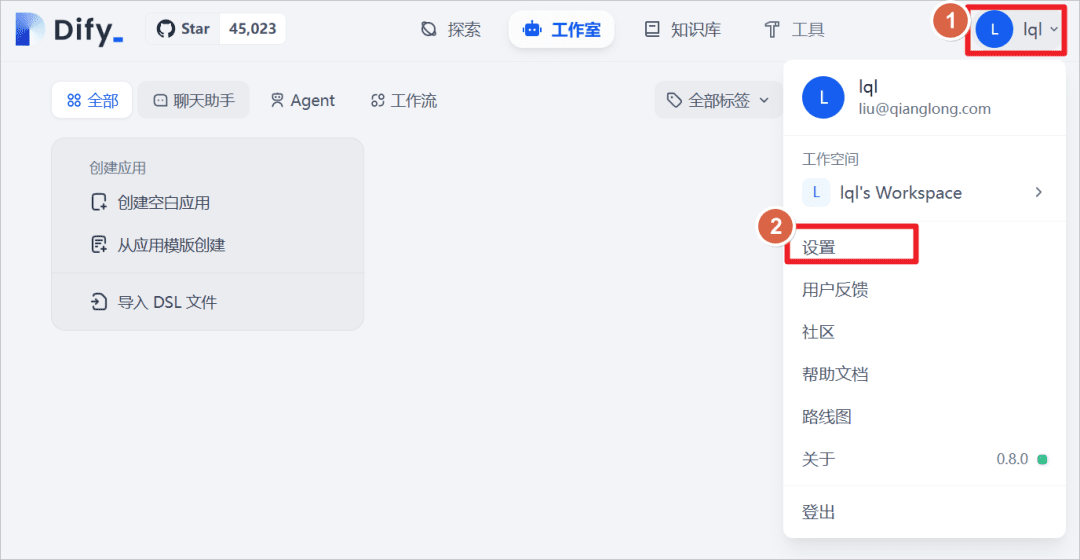

Click User - Settings.

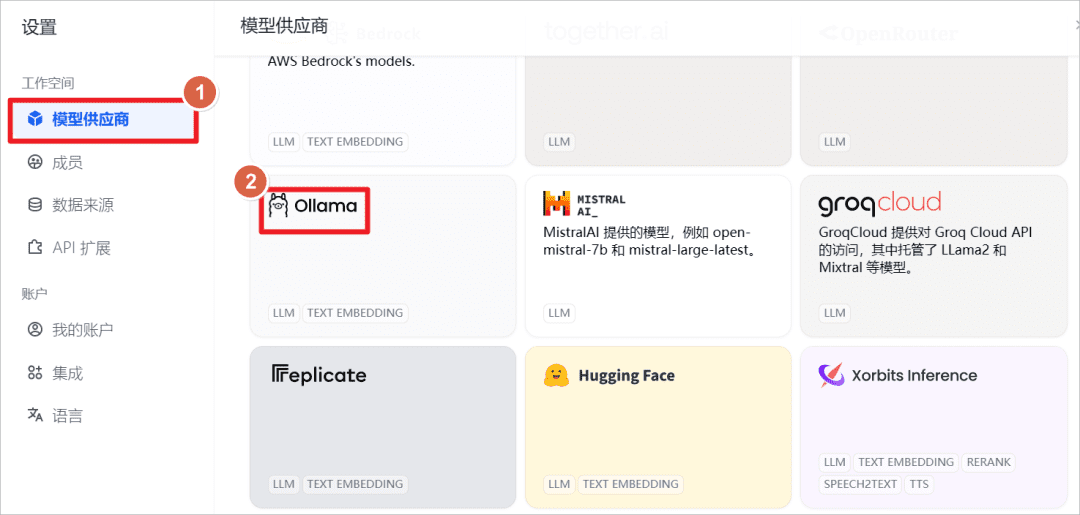

Add the Ollama model.

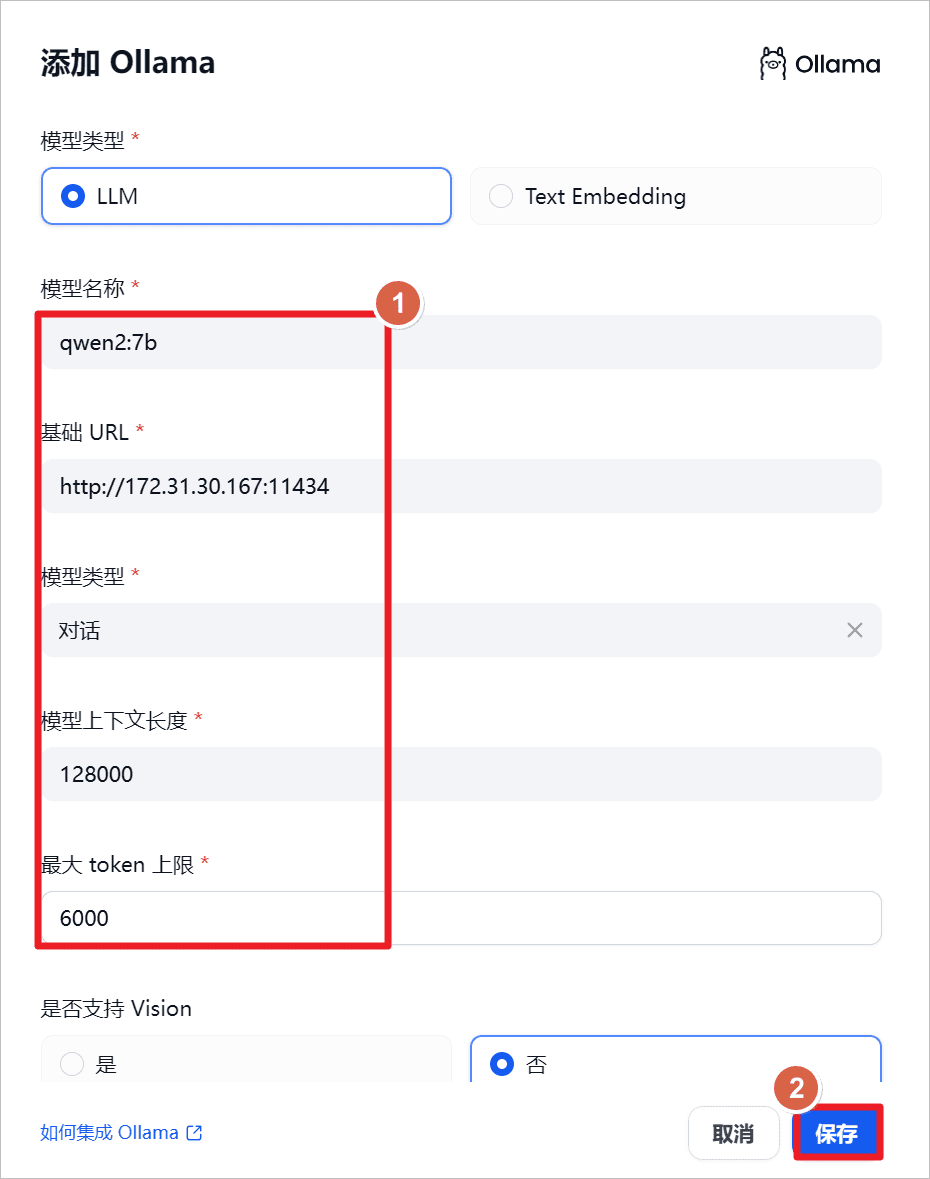

Add the qwen2:7b model. Because Ollama runs on the local machine, set the base URL to the machine's IP address and the port to 11434. Use the IP address rather than localhost: Dify runs inside Docker containers, where localhost points to the container itself.

"qwen2-7b-instruct supports a context length of 131,072 tokens by using YaRN, a technique for extrapolating model context length. To ensure normal use and normal output, it is recommended that the API limit user input to 128,000 tokens and output to at most 6,144 tokens." [3]

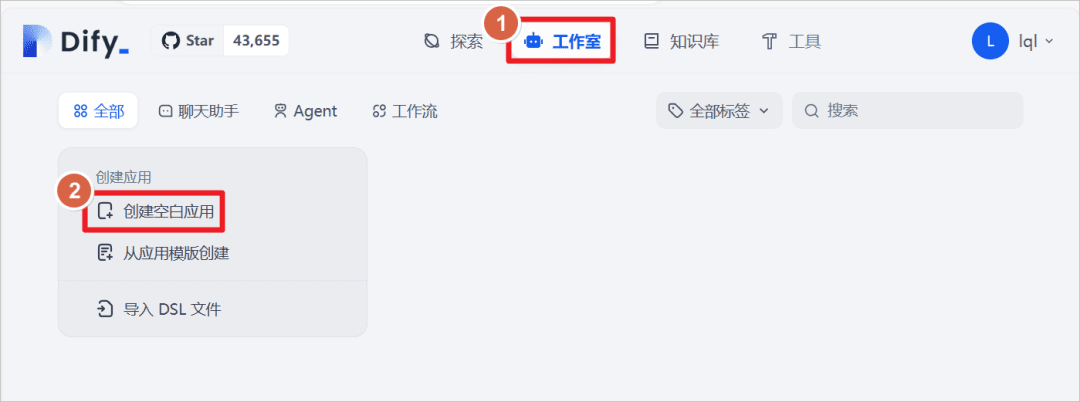
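The base URL can be assembled and checked on the host before it goes into Dify's model settings. This is a minimal sketch, assuming a Linux host where hostname -I prints the primary address first; ollama_base_url is a hypothetical helper, not part of Dify or Ollama.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the Ollama base URL to paste into Dify.
# Inside Dify's containers, localhost is the container itself, so use the host's IP.
# Assumption: on Linux, "hostname -I" lists the host's primary IP address first.
ollama_base_url() {
  local ip="${1:-$(hostname -I | awk '{print $1}')}"
  local port="${2:-11434}"
  echo "http://${ip}:${port}"
}

# Usage on the host:
#   ollama_base_url                 # e.g. http://172.31.30.167:11434
#   curl -s "$(ollama_base_url)"    # should print "Ollama is running"
```

Passing the IP explicitly (ollama_base_url 172.31.30.167) skips the autodetection, which is safer on hosts with several network interfaces.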

Click Studio and create a blank application.

Create an application of type "Chat Assistant", set the application name to Qwen2-7B, and click Create.

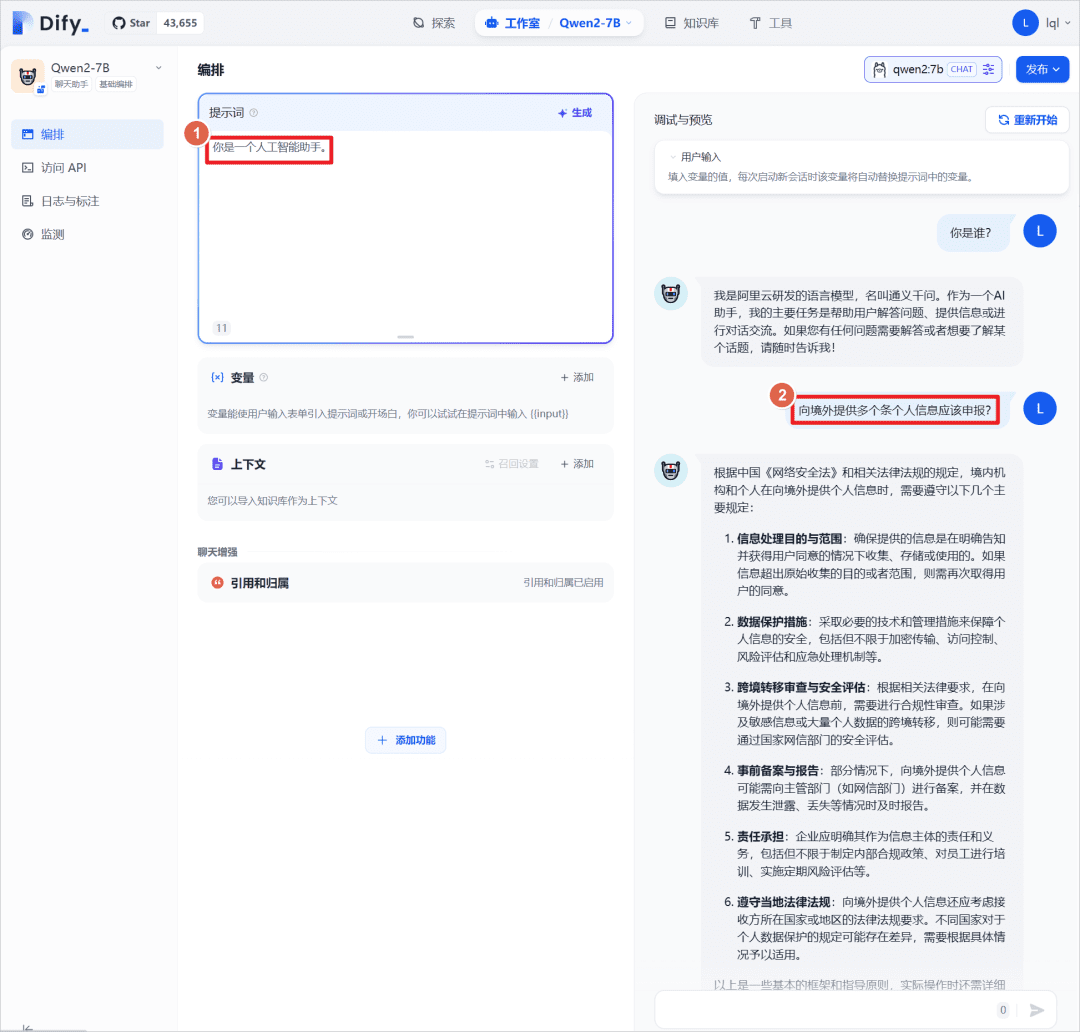

Set the app's prompt to "You are an AI assistant" and run a conversation test with Qwen2:7B. This test uses the model on its own, without an external knowledge base; the knowledge base is introduced later so the answers can be compared.

IV. Knowledge-Base Q&A in Dify

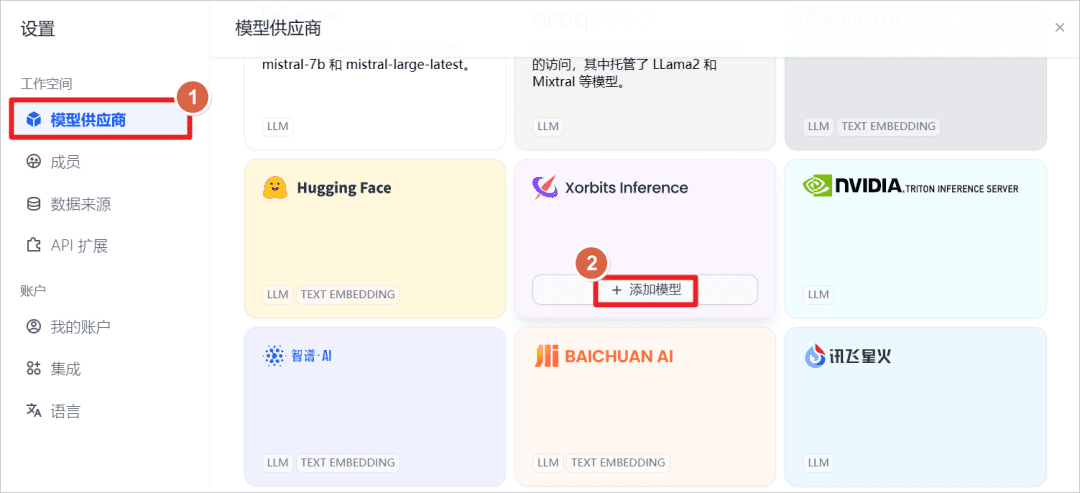

Add the models provided by Xorbits Inference.

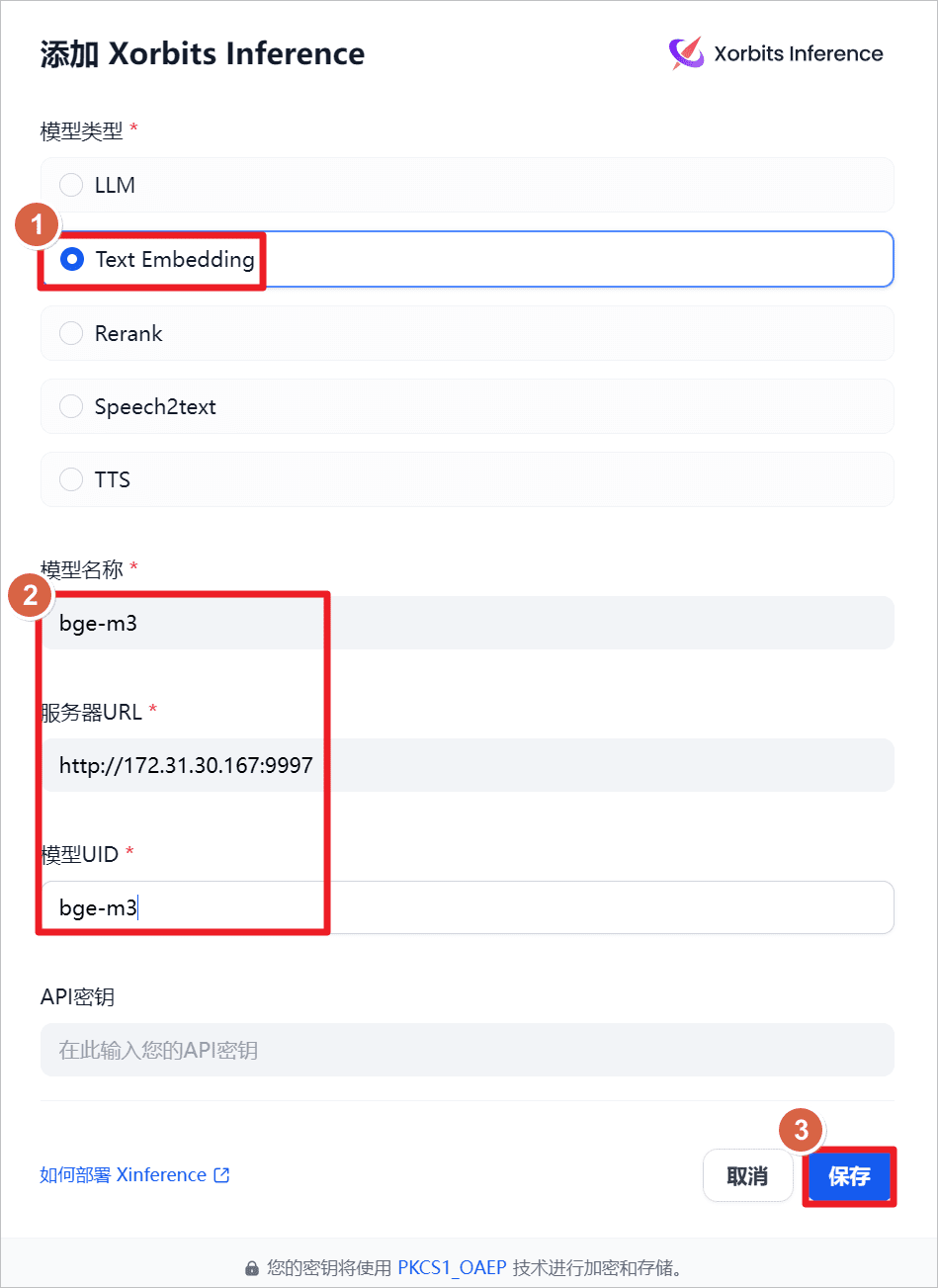

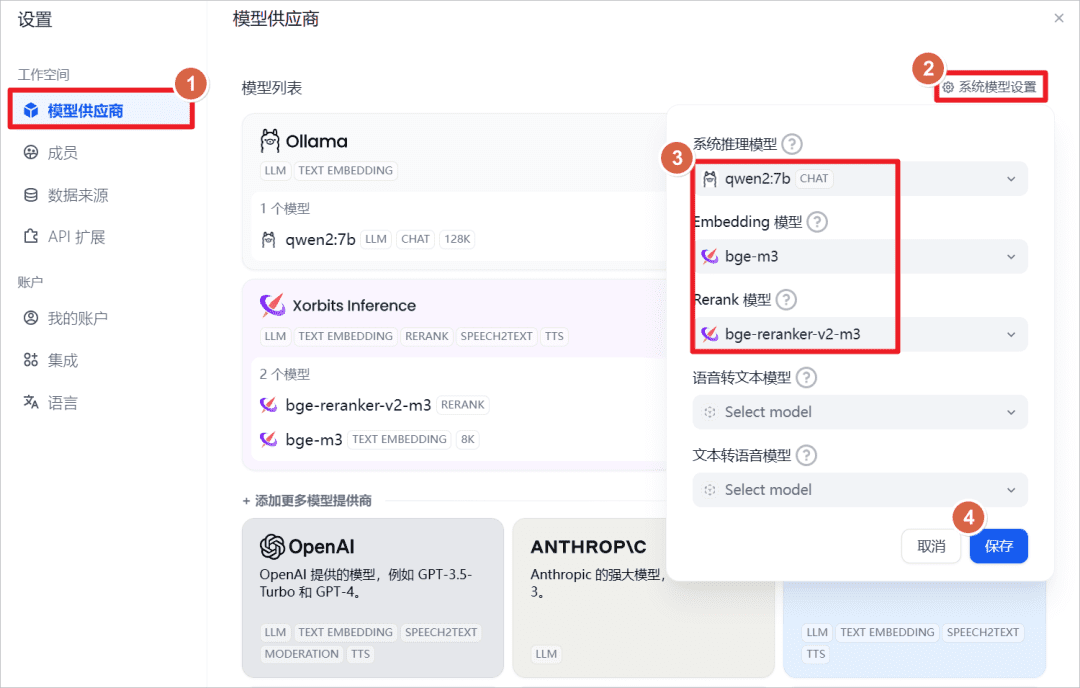

Add a Text Embedding model. Set the model name to bge-m3 and the server URL to http://172.31.30.167:9997. This is the IP of the local machine; Xinference can also be installed on another machine, as long as its network and port are reachable. Xinference was started on the local machine beforehand, with the bge-m3 model launched on it (see the previous post).
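Before adding bge-m3 in Dify, a direct request to Xinference can confirm that the model answers. This is a sketch, assuming Xinference's OpenAI-compatible /v1/embeddings endpoint and that the model UID is bge-m3 (the UID listed by /v1/models is what counts); the helper names are hypothetical. In the OpenAI response format, the vector sits under data[0].embedding.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build an OpenAI-style embeddings request body.
# The text is inserted verbatim, so it must not contain double quotes or backslashes.
xinference_embed_body() {
  printf '{"model": "%s", "input": "%s"}' "$1" "$2"
}

# Hypothetical helper: embed one text and print the raw JSON reply.
xinference_embed() {
  local base_url="$1" model="$2" text="$3"
  curl -s "$base_url/v1/embeddings" \
    -H 'Content-Type: application/json' \
    -d "$(xinference_embed_body "$model" "$text")"
}

# Usage (assumes the model UID is bge-m3):
#   curl -s http://172.31.30.167:9997/v1/models   # running models and their UIDs
#   xinference_embed http://172.31.30.167:9997 bge-m3 "cross-border data flows"
```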

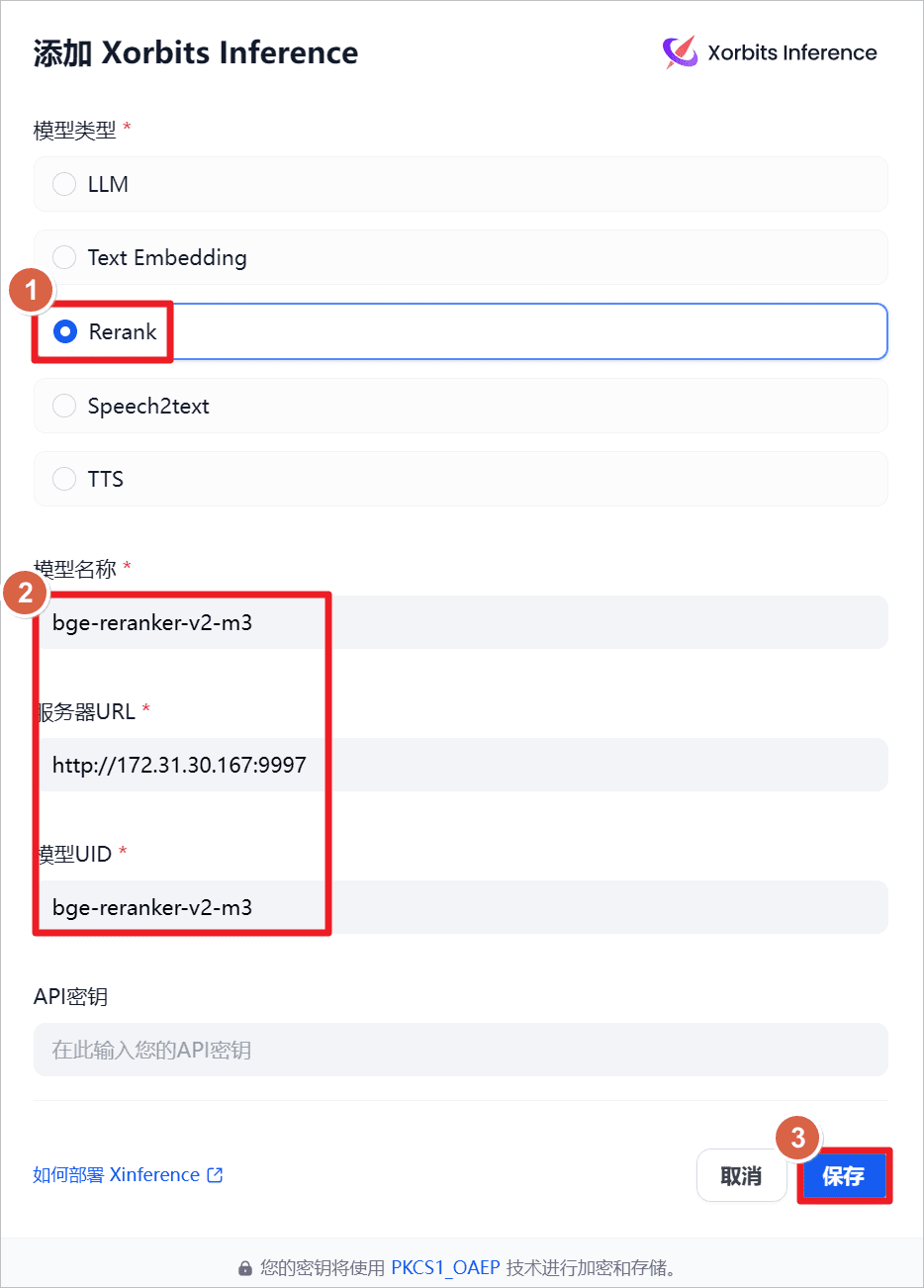

Add a Rerank model. Set the model name to bge-reranker-v2-m3 and the server URL to http://172.31.30.167:9997. This is the IP of the local machine; Xinference can also be installed on another machine, as long as its network and port are reachable. Xinference was started on the local machine beforehand, with the bge-reranker-v2-m3 model launched on it (see the previous post).
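A rerank model takes one query plus a list of candidate passages and scores each pair. This is a sketch, assuming Xinference's /v1/rerank endpoint and the model UID bge-reranker-v2-m3; the helper names are hypothetical. The reply should contain a results list whose entries carry an index into the submitted documents and a relevance_score.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build a rerank request body from a model, a query, and passages.
# Texts are inserted verbatim, so they must not contain double quotes or backslashes.
xinference_rerank_body() {
  local model="$1" query="$2" docs="" d
  shift 2
  # Join the remaining arguments into a JSON string array: "a", "b", ...
  for d in "$@"; do
    docs+="${docs:+, }\"$d\""
  done
  printf '{"model": "%s", "query": "%s", "documents": [%s]}' "$model" "$query" "$docs"
}

# Hypothetical helper: send the request and print the raw JSON reply.
xinference_rerank() {
  local base_url="$1"
  shift
  curl -s "$base_url/v1/rerank" \
    -H 'Content-Type: application/json' \
    -d "$(xinference_rerank_body "$@")"
}

# Usage (assumes the model UID is bge-reranker-v2-m3):
#   xinference_rerank http://172.31.30.167:9997 bge-reranker-v2-m3 \
#     "What does a rerank model do?" "A reranker scores query-passage pairs." "Paris is in France."
```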

View the system default settings.

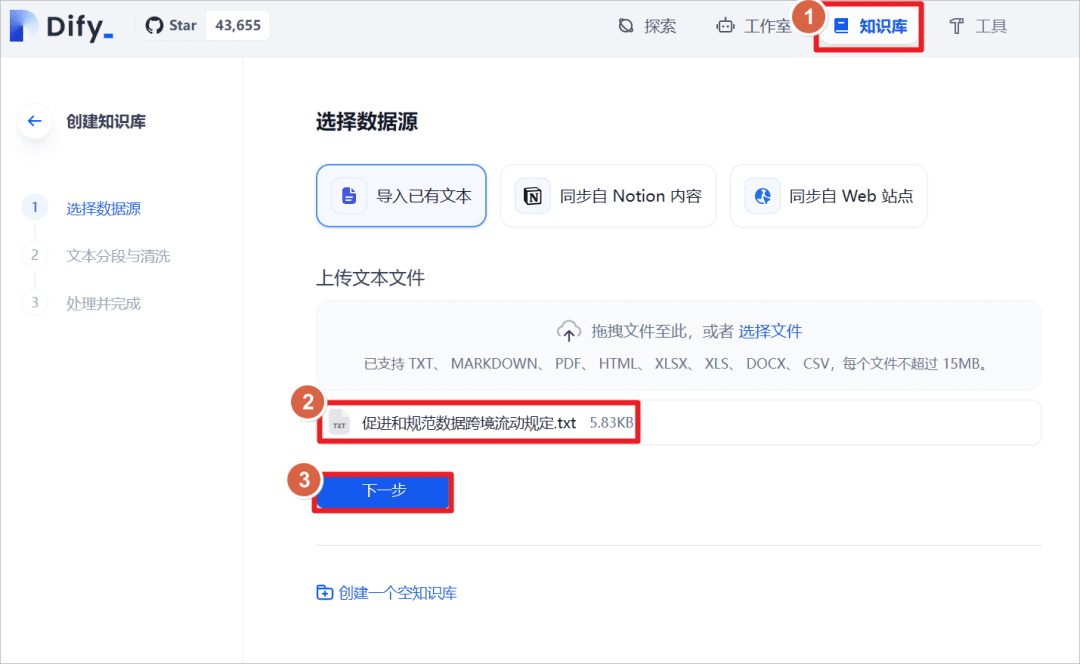

Click "Knowledge Base" - "Import Existing Text" - "Upload Text File" - Select the document "Provisions on the Facilitation and Regulation of Transborder Flow of Data".

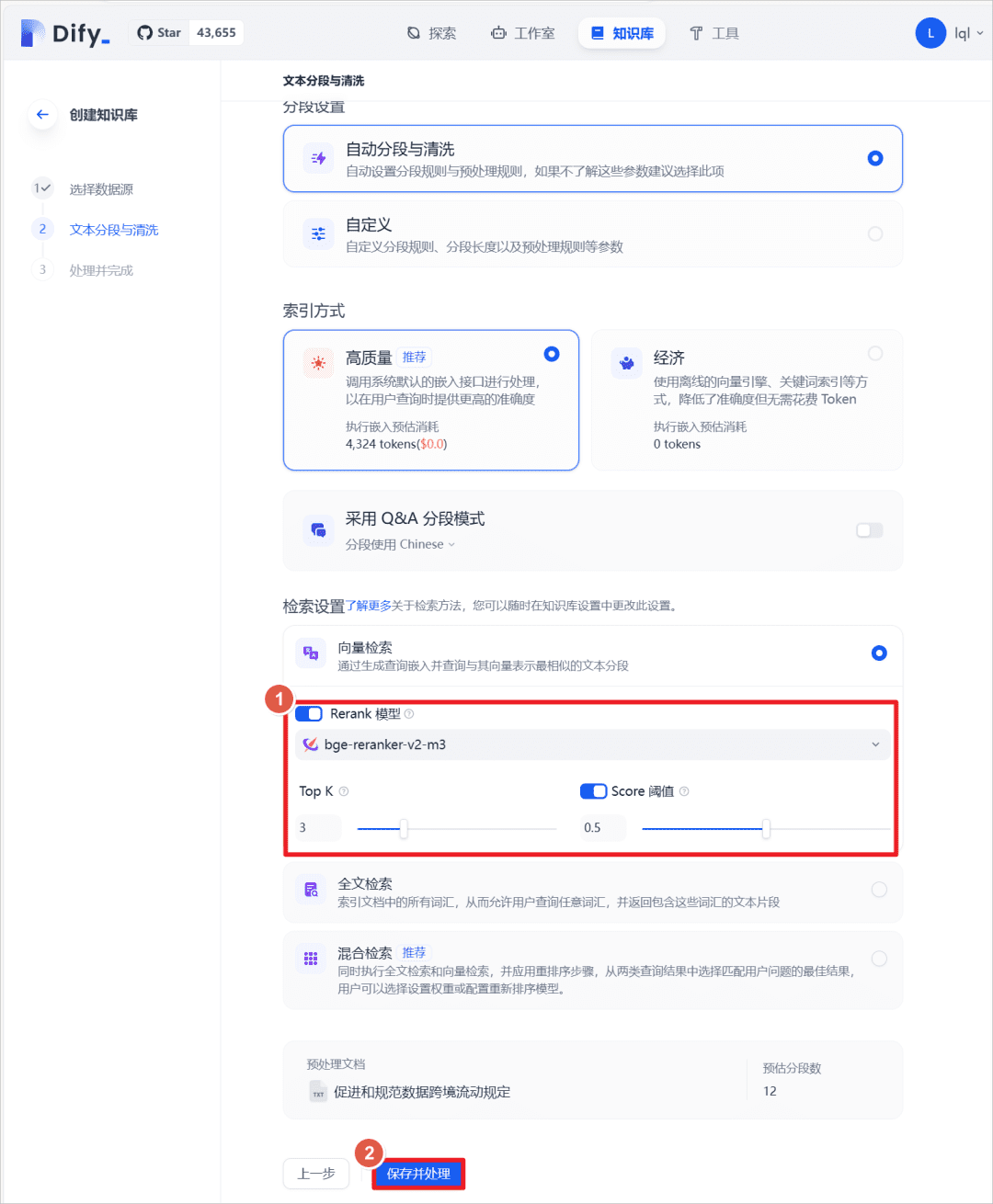

After the import succeeds, configure the retrieval settings: enable the Rerank model, select bge-reranker-v2-m3, and enable the Score threshold, which defaults to 0.5 (text chunks scoring below 0.5 are not recalled and are not added to the model's context).
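The Score threshold acts as a filter on the reranked chunks. The sketch below illustrates that idea only; it is not Dify's code. It assumes one chunk per line in the form "score<TAB>text", and it assumes a score exactly equal to the threshold is kept; filter_by_score is a hypothetical helper and the example scores are made up.

```shell
#!/usr/bin/env bash
# Hypothetical helper: keep chunks whose score is at least the threshold,
# sorted from highest to lowest score. Input lines: "<score><TAB><chunk text>".
filter_by_score() {
  local threshold="$1"
  awk -F '\t' -v t="$threshold" '($1 + 0) >= (t + 0)' | sort -t $'\t' -k1,1gr
}

# Usage with made-up scores:
#   printf '0.82\tExport rules\n0.31\tUnrelated text\n0.50\tSecurity assessment\n' | filter_by_score 0.5
```

With the 0.5 threshold, the 0.31 chunk is dropped and the remaining two are printed in descending order of score.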

In the chat application created earlier, add the knowledge base created above and ask the model the same question again; the answer now draws on the knowledge base.

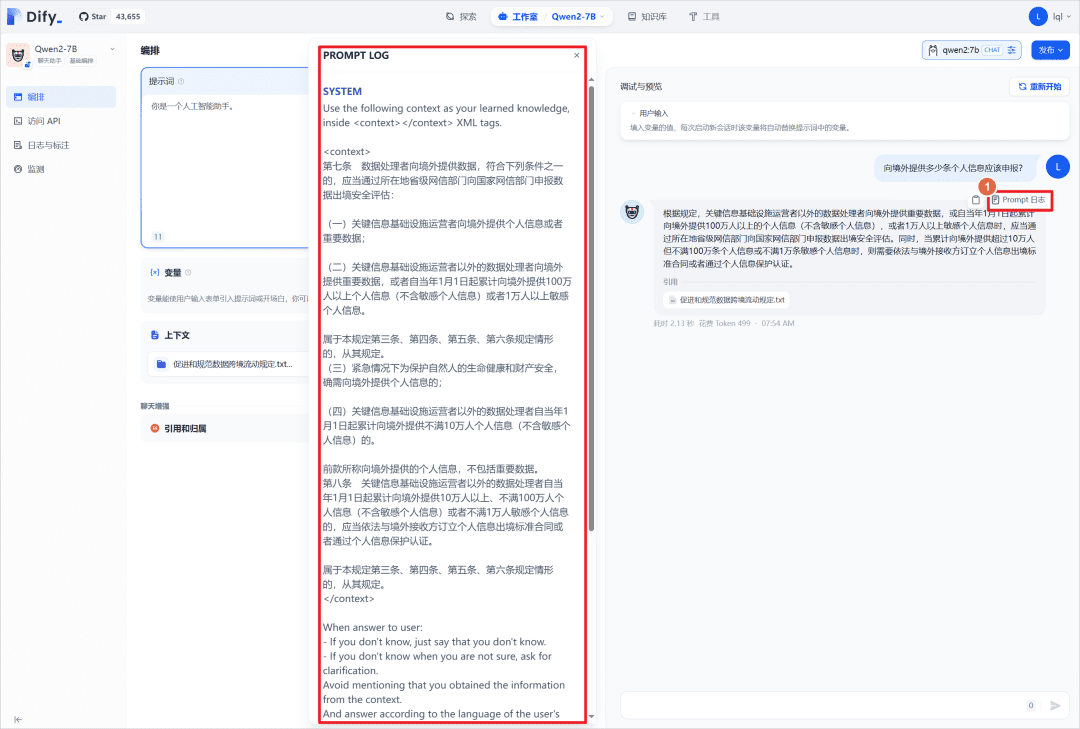

Click "Prompt Log" to view the log. The system prompt shows the matched knowledge-base content placed between the <context></context> tags.
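The tagging seen in the Prompt Log can be imitated in a few lines to show the shape of the injected context. This is a sketch only: build_context_block is a hypothetical helper, it assumes one chunk per argument, and Dify's real system prompt wraps additional instructions around this block.

```shell
#!/usr/bin/env bash
# Hypothetical helper: wrap retrieved chunks, one per line, in <context></context> tags.
build_context_block() {
  printf '<context>\n'
  printf '%s\n' "$@"
  printf '</context>\n'
}

# Usage:
#   build_context_block "First matched chunk." "Second matched chunk."
```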

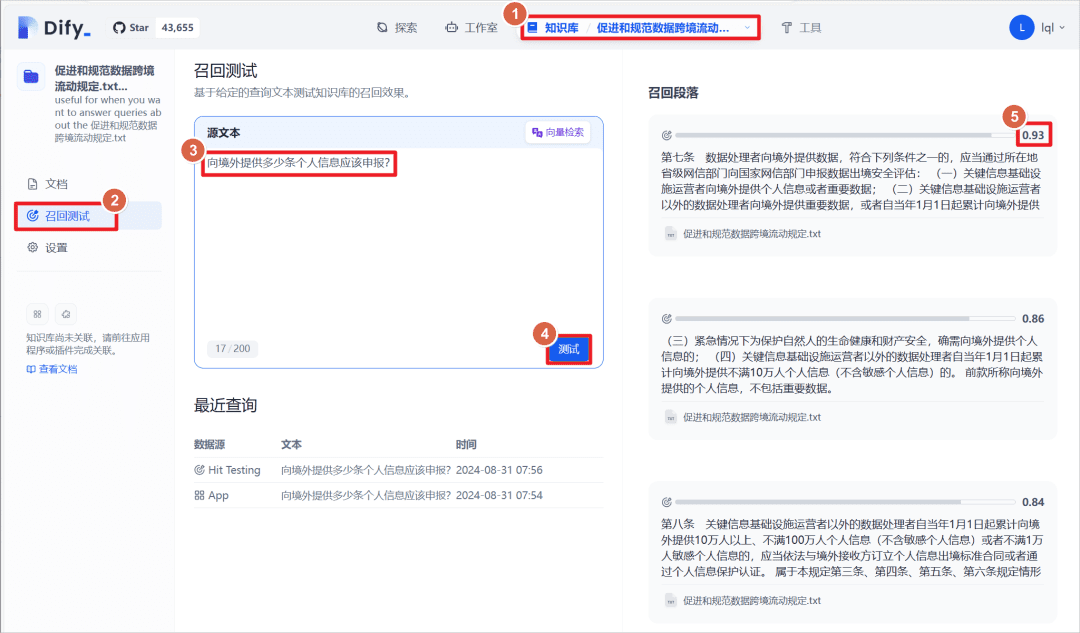

Open the knowledge base you created and click "Recall Test". Enter a passage of text to match against the knowledge base; each matching chunk is shown with a weight score. With the 0.5 threshold set above, chunks scoring above it are displayed as "Recall paragraph".

© Copyright notice

The copyright of this article belongs to AI Sharing Circle. Please do not reproduce it without permission.
