Amphion MaskGCT: Zero-shot text-to-speech voice cloning model (local one-click deployment package)

General Introduction

MaskGCT (Masked Generative Codec Transformer) is a fully non-autoregressive text-to-speech (TTS) model jointly introduced by Quwan Technology (趣丸科技) and The Chinese University of Hong Kong. The model needs no explicit alignment information between text and speech and generates in two stages: it first predicts semantic codes from the text, then generates acoustic codes from the semantic codes. MaskGCT performs well on the zero-shot TTS task, producing speech that is high in quality, close to the reference speaker, and easy to understand.
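The two-stage, non-autoregressive flow described above can be sketched with a toy mask-and-predict loop. This is only an illustration, not the project's code: `toy_predict` is a random stand-in for a trained transformer and ignores the text and the semantic tokens it should be conditioned on, and the vocabulary sizes, step count, cosine schedule, and length rule are all assumptions made for the example.

```python
import math
import random

MASK = -1              # placeholder id for a position that is still masked
SEMANTIC_VOCAB = 100   # hypothetical vocabulary sizes, for illustration only
ACOUSTIC_VOCAB = 100


def toy_predict(tokens, vocab_size, rng):
    """Stand-in for a trained model: one (token, confidence) guess per position."""
    return [(rng.randrange(vocab_size), rng.random()) for _ in tokens]


def mask_predict(length, vocab_size, steps, rng):
    """Fill a fully masked sequence in parallel over a fixed number of steps."""
    tokens = [MASK] * length
    for step in range(1, steps + 1):
        preds = toy_predict(tokens, vocab_size, rng)
        masked = [i for i, t in enumerate(tokens) if t == MASK]
        # Fill every masked position at once (no left-to-right decoding).
        for i in masked:
            tokens[i] = preds[i][0]
        # Cosine schedule: how many positions stay masked after this step.
        n_mask = int(length * math.cos(math.pi / 2 * step / steps))
        # Re-mask the least confident of the positions just filled.
        for i in sorted(masked, key=lambda i: preds[i][1])[:n_mask]:
            tokens[i] = MASK
    return tokens


def two_stage_tts(text, rng):
    """Stage 1: text -> semantic tokens. Stage 2: semantic -> acoustic tokens."""
    n_frames = 4 * len(text)  # hypothetical length rule
    semantic = mask_predict(n_frames, SEMANTIC_VOCAB, steps=8, rng=rng)
    acoustic = mask_predict(len(semantic), ACOUSTIC_VOCAB, steps=8, rng=rng)
    return semantic, acoustic
```

Because the schedule reaches zero at the last step, every position is filled when the loop ends, and the number of model calls depends on `steps` rather than on the sequence length.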

Public Beta Product: Funmaru Chiyo, Voice Cloning and Video Multilingual Translation Tool

Paper: https://arxiv.org/abs/2409.00750

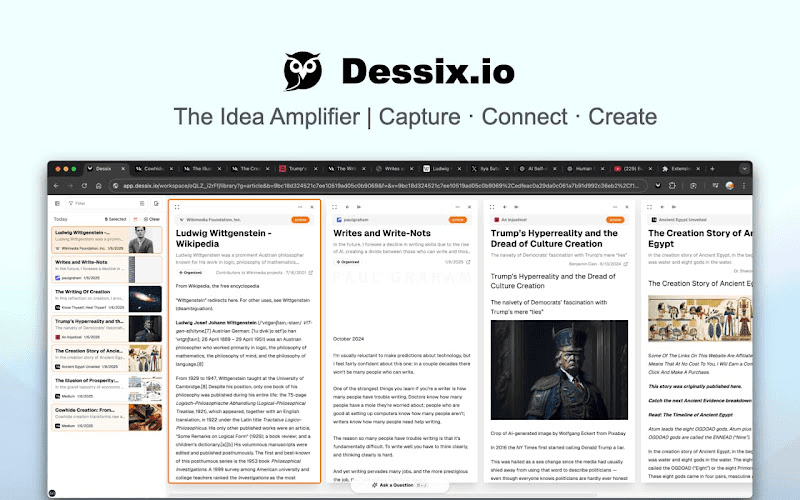

Online demo: https://huggingface.co/spaces/amphion/maskgct

Function List

- Text-to-speech (TTS): Converts input text into speech output.

- Semantic encoding: Converts speech into semantic codes for subsequent processing.

- Acoustic encoding: Converts semantic codes into acoustic codes and reconstructs the audio waveform.

- Zero-shot learning: High-quality speech synthesis without explicit alignment information.

- Pre-trained models: A range of pre-trained models is available to support rapid deployment and use.

Usage Guide

Installation process

- Clone the project:

git clone https://github.com/open-mmlab/Amphion.git

- Create an environment and install dependencies:

bash ./models/tts/maskgct/env.sh

Usage Process

- Download pre-trained models: The required pre-trained models can be downloaded from HuggingFace:

from huggingface_hub import hf_hub_download

# Download the semantic codec checkpoint
semantic_code_ckpt = hf_hub_download("amphion/MaskGCT", filename="semantic_codec/model.safetensors")
# Download the acoustic codec checkpoints
codec_encoder_ckpt = hf_hub_download("amphion/MaskGCT", filename="acoustic_codec/model.safetensors")
codec_decoder_ckpt = hf_hub_download("amphion/MaskGCT", filename="acoustic_codec/model_1.safetensors")
# Download the TTS (text-to-semantic) model checkpoint
t2s_model_ckpt = hf_hub_download("amphion/MaskGCT", filename="t2s_model/model.safetensors")

- Generate speech: The following code outlines generating speech from text. It is a simplified illustration: the MaskGCT class and its text_to_speech method are not confirmed against the repository, and the complete inference script used in this article is local_test.py in the local deployment tutorial below.

# Import the required library
from amphion.models.tts.maskgct import MaskGCT

# Initialize the model
model = MaskGCT()
# Input text
text = "你好,欢迎使用MaskGCT模型。"
# Generate speech
audio = model.text_to_speech(text)
# Save the generated speech
with open("output.wav", "wb") as f:
    f.write(audio)

- Model training: If you need to train your own model, refer to the training scripts and configuration files in the project for data preparation and model training.
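The checkpoint downloads can also be written as one table plus a loop, which makes it easier to see which files are fetched. The sketch below is a minimal example that uses only the repository id and file names that appear in this article (including the two s2a checkpoints from the local inference script); the names `CHECKPOINTS` and `download_all` are made up for illustration.

```python
# Checkpoint files named in this article, all in the "amphion/MaskGCT" repo.
CHECKPOINTS = {
    "semantic_codec": "semantic_codec/model.safetensors",
    "codec_encoder": "acoustic_codec/model.safetensors",
    "codec_decoder": "acoustic_codec/model_1.safetensors",
    "t2s_model": "t2s_model/model.safetensors",
    "s2a_1layer": "s2a_model/s2a_model_1layer/model.safetensors",
    "s2a_full": "s2a_model/s2a_model_full/model.safetensors",
}


def download_all(repo_id="amphion/MaskGCT", files=CHECKPOINTS):
    """Download every checkpoint and return a {name: local_path} mapping."""
    # Imported here so the table above can be inspected without the package.
    from huggingface_hub import hf_hub_download

    return {
        name: hf_hub_download(repo_id, filename=path)
        for name, path in files.items()
    }
```

Calling `paths = download_all()` fetches the files on the first run and returns their cached local paths afterwards, for example `paths["t2s_model"]`.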

Notes

- Environment configuration: Ensure that all required dependencies are installed and environment variables are configured correctly.

- Data preparation: Train with high-quality speech data for better synthesis results.

- Model optimization: Adjust model parameters and training strategies to suit the specific application scenario.

Local deployment tutorial (with local one-click installer)

A few days ago, another non-autoregressive text-to-speech AI model, MaskGCT, was open-sourced. Like F5-TTS, which is also non-autoregressive, MaskGCT is trained on Emilia, a 100,000-hour dataset, and handles cross-lingual synthesis in six languages: Chinese, English, Japanese, Korean, French, and German. Emilia is one of the largest and most diverse high-quality multilingual speech datasets in the world.

This time, we share how to deploy the MaskGCT project locally and put your graphics card back to work.

Installation of basic dependencies

First, make sure Python 3.11 is installed locally. You can download the installer from the official Python website:

python.org

Next, clone the official repository:

git clone https://github.com/open-mmlab/Amphion.git

The project provides an official installation shell script for Linux:

pip install setuptools ruamel.yaml tqdm

pip install tensorboard tensorboardX torch==2.0.1

pip install transformers===4.41.1

pip install -U encodec

pip install black==24.1.1

pip install oss2

sudo apt-get install espeak-ng

pip install phonemizer

pip install g2p_en

pip install accelerate==0.31.0

pip install funasr zhconv zhon modelscope

# pip install git+https://github.com/lhotse-speech/lhotse

pip install timm

pip install jieba cn2an

pip install unidecode

pip install -U cos-python-sdk-v5

pip install pypinyin

pip install jiwer

pip install omegaconf

pip install pyworld

pip install py3langid==0.2.2 LangSegment

pip install onnxruntime

pip install pyopenjtalk

pip install pykakasi

pip install -U openai-whisper

For Windows, the author converted it into a requirements.txt dependency file:

setuptools

ruamel.yaml

tqdm

transformers===4.41.1

encodec

black==24.1.1

oss2

phonemizer

g2p_en

accelerate==0.31.0

funasr

zhconv

zhon

modelscope

timm

jieba

cn2an

unidecode

cos-python-sdk-v5

pypinyin

jiwer

omegaconf

pyworld

py3langid==0.2.2

LangSegment

onnxruntime

pyopenjtalk

pykakasi

openai-whisper

json5

Run the following command to install the dependencies:

pip3 install -r requirements.txt
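After installation, a quick check can confirm that the packages are importable. The sketch below uses only the standard library; the list holds import names rather than pip names (for example, `openai-whisper` is imported as `whisper`), and it covers only a subset of the requirements as an illustration.

```python
from importlib.util import find_spec

# Import names for a subset of the requirements listed above.
REQUIRED_MODULES = [
    "transformers", "encodec", "phonemizer", "g2p_en", "accelerate",
    "funasr", "modelscope", "timm", "jieba", "cn2an", "unidecode",
    "pypinyin", "jiwer", "omegaconf", "pyworld", "onnxruntime",
    "pyopenjtalk", "pykakasi", "whisper", "json5",
]


def missing_modules(names):
    """Return the top-level module names that cannot be found."""
    return [name for name in names if find_spec(name) is None]


def report(names=REQUIRED_MODULES):
    """Print a short summary of which modules are still missing."""
    missing = missing_modules(names)
    if missing:
        print("Missing modules:", ", ".join(missing))
    else:
        print("All listed modules were found.")
    return missing
```

Running `report()` inside the environment used for MaskGCT prints any module that still needs to be installed.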

Then install onnxruntime-gpu:

pip3 install onnxruntime-gpu

Next, install the three PyTorch packages (torch, torchvision, and torchaudio):

pip3 install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu118
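To confirm that the CUDA build of PyTorch was installed rather than a CPU-only build, a short check like the following may help. It is a minimal sketch; the function name `cuda_report` is made up for illustration, and the calls used (`torch.cuda.is_available`, `torch.version.cuda`, `torch.cuda.get_device_name`) are standard PyTorch APIs.

```python
def cuda_report():
    """Describe the installed torch build and whether it can see a GPU."""
    try:
        import torch
    except ImportError:
        return "torch is not installed"
    if not torch.cuda.is_available():
        return f"torch {torch.__version__} is installed, but CUDA is not available"
    return (
        f"torch {torch.__version__}, CUDA {torch.version.cuda}, "
        f"GPU: {torch.cuda.get_device_name(0)}"
    )


if __name__ == "__main__":
    print(cuda_report())
```

With the cu118 index URL used above, the reported CUDA version would be expected to read 11.8.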

Configuring espeak-ng on Windows

Since the MaskGCT backend relies on espeak, it must be configured locally. eSpeak is a compact, open-source text-to-speech (TTS) synthesizer that supports multiple languages and accents. It uses formant synthesis, which lets it provide many languages in a small footprint. The speech is clear and can be used at high speeds, but it is not as natural and smooth as larger synthesizers based on recordings of human speech. MaskGCT relies on espeak for text processing (the dependency list above installs phonemizer, which uses espeak as a backend) and then runs its own inference on top.

First run the command to install espeak:

winget install espeak

If you can't install it, you can also download the installer and install it manually:

https://sourceforge.net/projects/espeak/files/espeak/espeak-1.48/setup_espeak-1.48.04.exe/download

Then download the espeak-ng installer:

https://github.com/espeak-ng/espeak-ng/releases

After downloading, double-click the installer to install it.

Then copy C:\Program Files\eSpeak NG\libespeak-ng.dll to the C:\Program Files (x86)\eSpeak\command_line directory.

Then rename the copied libespeak-ng.dll to espeak-ng.dll.

Finally, add the C:\Program Files (x86)\eSpeak\command_line directory to the PATH environment variable.
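The result of these steps can be checked from Python. The sketch below assumes the goal is for both the espeak executable and the renamed espeak-ng.dll to be reachable through the directories on PATH; the helper names are made up for illustration, and only the standard library is used.

```python
import os
import shutil


def find_in_dirs(filename, dirs):
    """Return the first existing path to filename in dirs, or None."""
    for directory in dirs:
        candidate = os.path.join(directory, filename)
        if os.path.isfile(candidate):
            return candidate
    return None


def check_espeak(path_value=None):
    """Look for the espeak executable and espeak-ng.dll on the given PATH."""
    if path_value is None:
        path_value = os.environ.get("PATH", "")
    dirs = [d for d in path_value.split(os.pathsep) if d]
    return {
        "espeak executable": shutil.which("espeak", path=path_value),
        "espeak-ng.dll": find_in_dirs("espeak-ng.dll", dirs),
    }


if __name__ == "__main__":
    for item, location in check_espeak().items():
        print(f"{item}: {location or 'NOT FOUND'}")
```

Open a new terminal before running it, since a terminal that was already open does not pick up changes to the environment variables.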

MaskGCT Local Inference

With all that configured, write the inference script local_test.py:

import os

# Store Hugging Face downloads in an hf_download folder next to this script.
# Set this before importing huggingface_hub, which reads HF_HOME at import time.
os.environ['HF_HOME'] = os.path.join(os.path.dirname(os.path.abspath(__file__)), 'hf_download')
print(os.environ['HF_HOME'])

from models.tts.maskgct.maskgct_utils import *
from huggingface_hub import hf_hub_download
import safetensors.torch
import soundfile as sf
import torch
import argparse

parser = argparse.ArgumentParser(description="MaskGCT local inference")
# Transcript of the reference (prompt) audio
parser.add_argument("-p", "--prompt_text", type=str, default="说得好像您带我以来我考好过几次一样")
# Path to the reference (prompt) audio
parser.add_argument("-a", "--audio", type=str, default="./说得好像您带我以来我考好过几次一样.wav")
# Text to synthesize
parser.add_argument("-t", "--text", type=str, default="你好")
# Language of the reference audio
parser.add_argument("-l", "--language", type=str, default="zh")
# Language of the text to synthesize
parser.add_argument("-lt", "--target_language", type=str, default="zh")
args = parser.parse_args()

if __name__ == "__main__":
    # download semantic codec ckpt
    semantic_code_ckpt = hf_hub_download("amphion/MaskGCT", filename="semantic_codec/model.safetensors")

    # download acoustic codec ckpt
    codec_encoder_ckpt = hf_hub_download("amphion/MaskGCT", filename="acoustic_codec/model.safetensors")
    codec_decoder_ckpt = hf_hub_download("amphion/MaskGCT", filename="acoustic_codec/model_1.safetensors")

    # download t2s model ckpt
    t2s_model_ckpt = hf_hub_download("amphion/MaskGCT", filename="t2s_model/model.safetensors")

    # download s2a model ckpt
    s2a_1layer_ckpt = hf_hub_download("amphion/MaskGCT", filename="s2a_model/s2a_model_1layer/model.safetensors")
    s2a_full_ckpt = hf_hub_download("amphion/MaskGCT", filename="s2a_model/s2a_model_full/model.safetensors")

    # build model
    device = torch.device("cuda")
    cfg_path = "./models/tts/maskgct/config/maskgct.json"
    cfg = load_config(cfg_path)

    # 1. build semantic model (w2v-bert-2.0)
    semantic_model, semantic_mean, semantic_std = build_semantic_model(device)
    # 2. build semantic codec
    semantic_codec = build_semantic_codec(cfg.model.semantic_codec, device)
    # 3. build acoustic codec
    codec_encoder, codec_decoder = build_acoustic_codec(cfg.model.acoustic_codec, device)
    # 4. build t2s model
    t2s_model = build_t2s_model(cfg.model.t2s_model, device)
    # 5. build s2a model
    s2a_model_1layer = build_s2a_model(cfg.model.s2a_model.s2a_1layer, device)
    s2a_model_full = build_s2a_model(cfg.model.s2a_model.s2a_full, device)

    # load semantic codec
    safetensors.torch.load_model(semantic_codec, semantic_code_ckpt)
    # load acoustic codec
    safetensors.torch.load_model(codec_encoder, codec_encoder_ckpt)
    safetensors.torch.load_model(codec_decoder, codec_decoder_ckpt)
    # load t2s model
    safetensors.torch.load_model(t2s_model, t2s_model_ckpt)
    # load s2a model
    safetensors.torch.load_model(s2a_model_1layer, s2a_1layer_ckpt)
    safetensors.torch.load_model(s2a_model_full, s2a_full_ckpt)

    # inference
    prompt_wav_path = args.audio
    save_path = "output.wav"
    prompt_text = args.prompt_text
    target_text = args.text

    # Specify the target duration (in seconds). If target_len = None, we use a simple rule to predict the target duration.
    target_len = None

    maskgct_inference_pipeline = MaskGCT_Inference_Pipeline(
        semantic_model,
        semantic_codec,
        codec_encoder,
        codec_decoder,
        t2s_model,
        s2a_model_1layer,
        s2a_model_full,
        semantic_mean,
        semantic_std,
        device,
    )

    recovered_audio = maskgct_inference_pipeline.maskgct_inference(
        prompt_wav_path, prompt_text, target_text, args.language, args.target_language, target_len=target_len
    )

    # Save the result at a 24 kHz sample rate
    sf.write(save_path, recovered_audio, 24000)
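Once the script has run, the generated file can be inspected with the standard library. The sketch below assumes output.wav is a standard PCM WAV file, which the built-in `wave` module can read; the helper name `wav_info` is made up for illustration.

```python
import wave


def wav_info(path):
    """Return the sample rate, channel count, and duration of a PCM WAV file."""
    with wave.open(path, "rb") as wav_file:
        rate = wav_file.getframerate()
        frames = wav_file.getnframes()
        return {
            "sample_rate": rate,
            "channels": wav_file.getnchannels(),
            "seconds": frames / rate,
        }
```

For a file written by the script above, `wav_info("output.wav")["sample_rate"]` should match the 24000 passed to `sf.write`.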

The first inference run downloads about 10 GB of models into the hf_download directory.

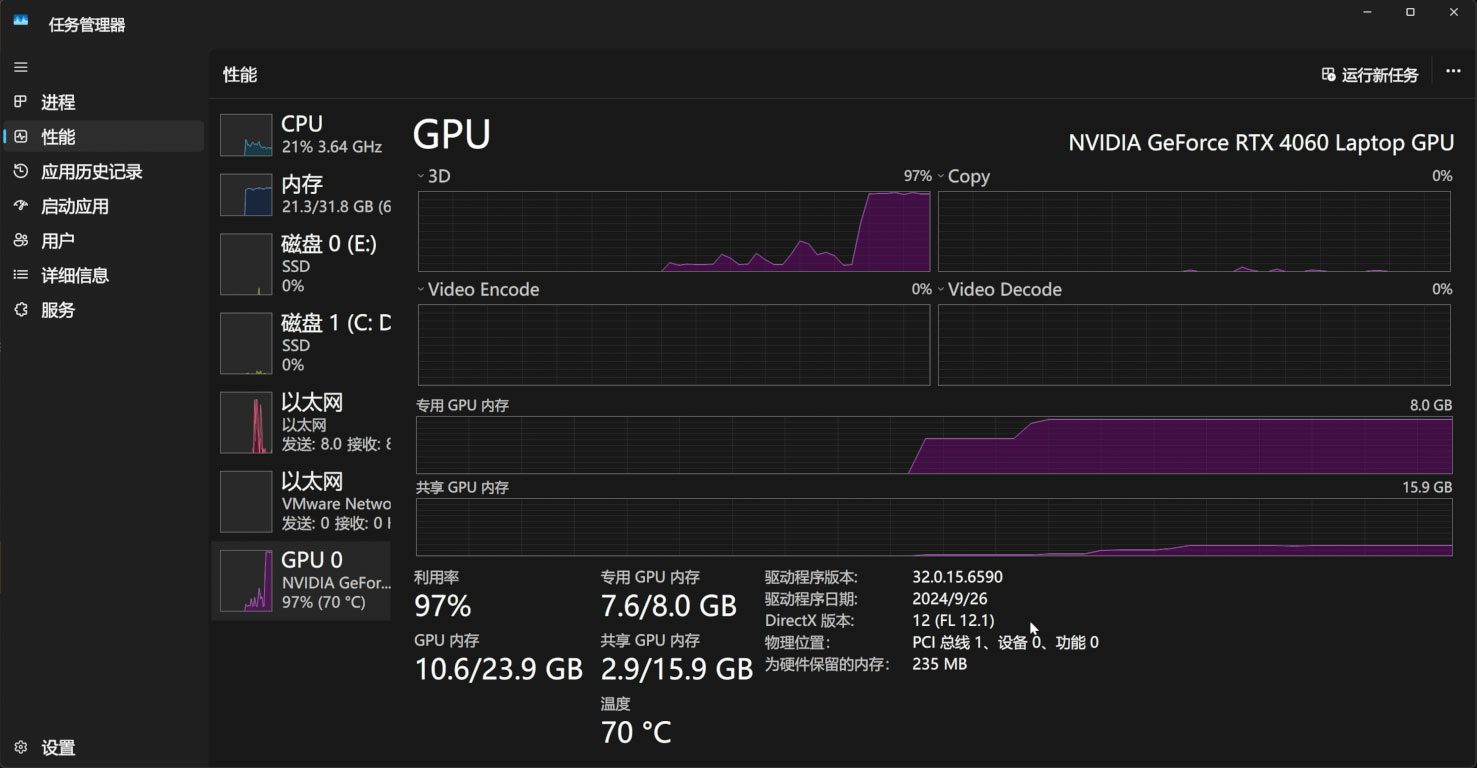

Inference takes up about 11 GB of video memory:

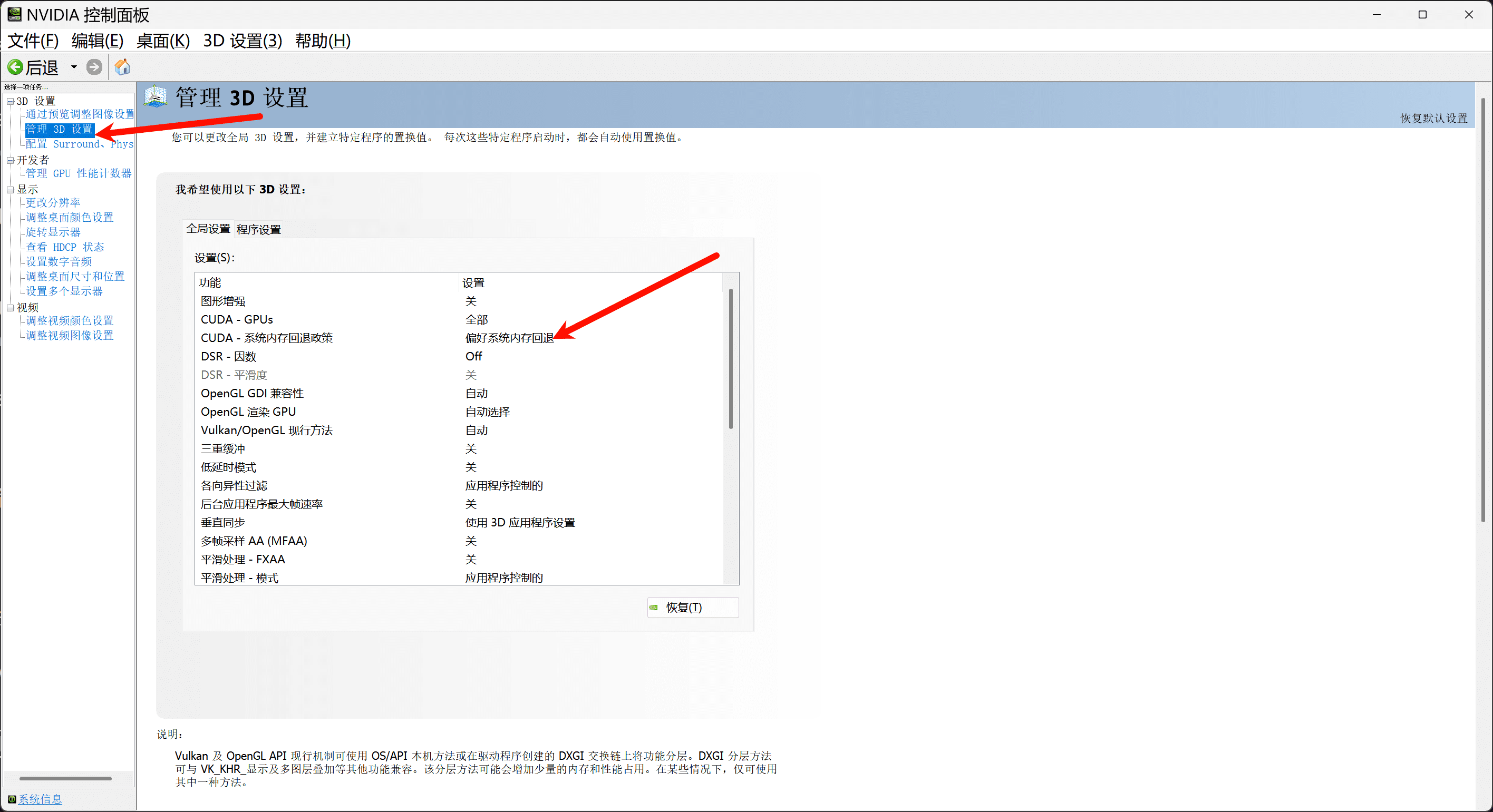

If you have less than 11 GB of video memory, be sure to turn on the system memory fallback policy in the Nvidia control panel so that system memory can make up the shortfall:

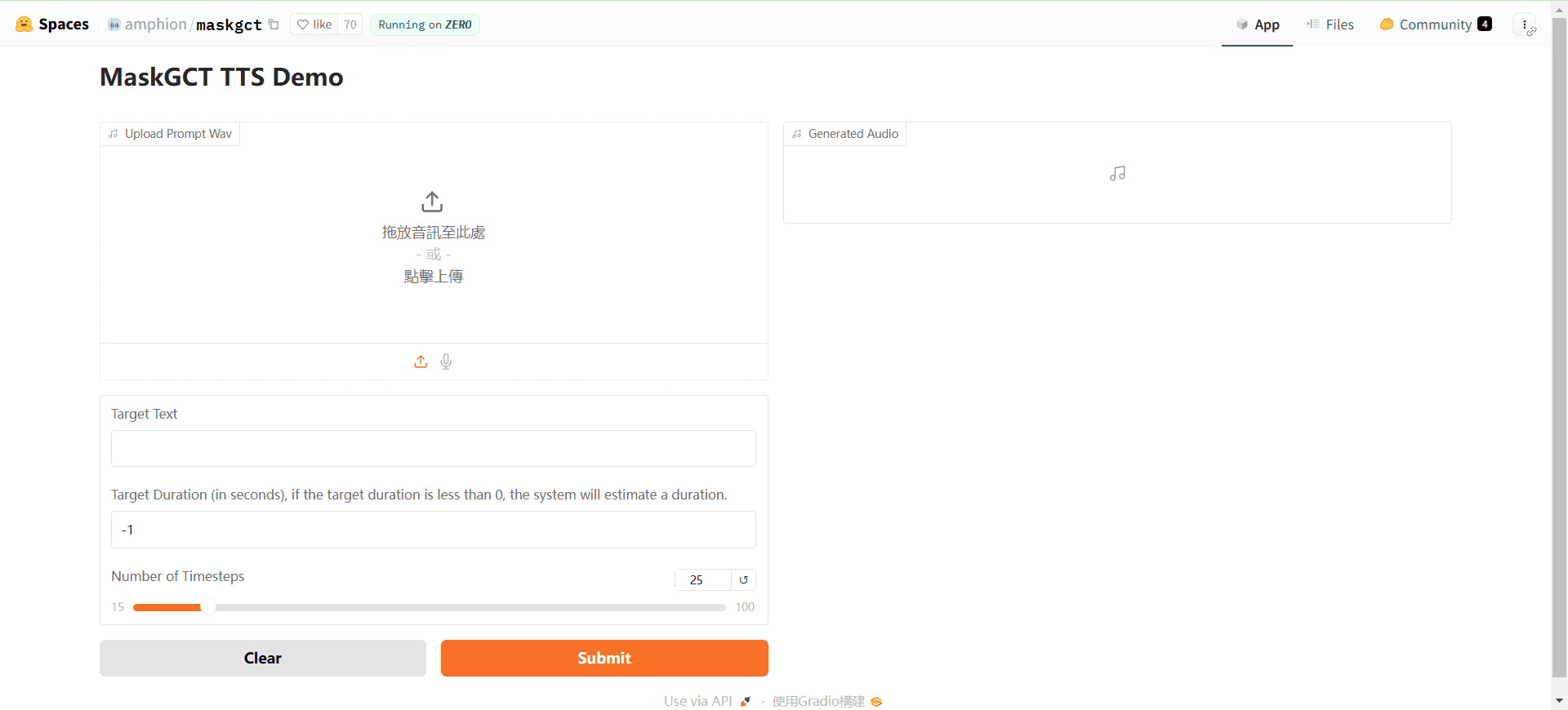
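Whether the GPU has enough free memory can be checked before starting inference. The sketch below treats the 11 GB figure reported above as the threshold; the helper names are made up for illustration, and `torch.cuda.mem_get_info` is the PyTorch call that returns free and total device memory in bytes.

```python
REQUIRED_GB = 11  # video memory usage reported in this article


def has_enough_vram(free_bytes, required_gb=REQUIRED_GB):
    """Return True if free_bytes covers required_gb gibibytes."""
    return free_bytes >= required_gb * 1024 ** 3


def free_vram_bytes():
    """Return free memory on the current CUDA device, or 0 without CUDA."""
    import torch  # imported here so the pure check above works without torch

    if not torch.cuda.is_available():
        return 0
    free, _total = torch.cuda.mem_get_info()
    return free
```

If `has_enough_vram(free_vram_bytes())` returns False, the system memory fallback setting described above becomes relevant.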

If you wish, you can also write a simple web UI based on gradio, app.py:

import os
import subprocess

import gradio as gr

# Keep Hugging Face downloads next to this script; local_test.py inherits these variables
os.environ['HF_HOME'] = os.path.join(os.path.dirname(os.path.abspath(__file__)), 'hf_download')
# Set the HF_ENDPOINT environment variable (Hugging Face mirror)
os.environ["HF_ENDPOINT"] = "https://hf-mirror.com"

REFERENCE_DIR = "./参考音频/"
PLACEHOLDER = "Select a reference audio or upload your own"


def list_reference_wavs():
    # The placeholder comes first, followed by every file in the reference audio folder
    return [PLACEHOLDER] + os.listdir(REFERENCE_DIR)


reference_wavs = list_reference_wavs()


def change_choices():
    # Re-scan the reference audio folder and refresh the dropdown
    return {"choices": list_reference_wavs(), "__type__": "update"}


def change_wav(audio_path):
    if audio_path == PLACEHOLDER:
        return None, ""
    # The file name without its extension doubles as the reference text
    text = audio_path.replace(".wav", "").replace(".mp3", "").replace(".WAV", "")
    return f"{REFERENCE_DIR}{audio_path}", text


def do_cloth(gen_text_input, ref_audio_input, model_choice_text, model_choice_re, ref_text_input):
    # Run local_test.py with the bundled Python; an argument list avoids quoting problems
    cmd = [
        r".\py311_cu118\python.exe", "local_test.py",
        "-t", gen_text_input,
        "-p", ref_text_input,
        "-a", ref_audio_input,
        "-l", model_choice_re,
        "-lt", model_choice_text,
    ]
    print(cmd)
    subprocess.run(cmd)
    return "output.wav"


with gr.Blocks() as app_demo:
    gr.Markdown(
        """
        Project: https://github.com/open-mmlab/Amphion/tree/main/models/tts/maskgct

        Integration package by: 刘悦的技术博客 https://space.bilibili.com/3031494
        """
    )
    gen_text_input = gr.Textbox(label="Text to Synthesize", lines=4)
    model_choice_text = gr.Radio(
        choices=["zh", "en"], label="Language of Text to Synthesize", value="zh", interactive=True
    )
    wavs_dropdown = gr.Dropdown(label="Reference Audio List", choices=reference_wavs, value=PLACEHOLDER, interactive=True)
    refresh_button = gr.Button("Refresh Reference Audio")
    refresh_button.click(fn=change_choices, inputs=[], outputs=[wavs_dropdown])
    ref_audio_input = gr.Audio(label="Reference Audio", type="filepath")
    ref_text_input = gr.Textbox(
        label="Reference Text",
        info="Transcript of the reference audio. Selecting a file from the list fills this in from the file name.",
        lines=2,
    )
    model_choice_re = gr.Radio(
        choices=["zh", "en"], label="Language of Reference Audio", value="zh", interactive=True
    )
    wavs_dropdown.change(change_wav, [wavs_dropdown], [ref_audio_input, ref_text_input])
    generate_btn = gr.Button("Synthesize", variant="primary")
    audio_output = gr.Audio(label="Synthesized Audio")
    generate_btn.click(do_cloth, [gen_text_input, ref_audio_input, model_choice_text, model_choice_re, ref_text_input], [audio_output])


def main():
    print("Starting app...")
    app_demo.launch(inbrowser=True)


if __name__ == "__main__":
    main()
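In app.py the reference text is taken from the reference audio's file name, so each file is expected to be named after its transcript. A slightly more general way to derive that text, sketched here as an optional variant rather than as part of the original script, is to strip whatever extension the file has with `os.path.splitext`; the helper name is made up for illustration.

```python
import os


def reference_text_from_filename(filename):
    """Return the file name without its directory or extension."""
    stem, _extension = os.path.splitext(os.path.basename(filename))
    return stem
```

Unlike a fixed list of `.replace()` calls, this also covers extensions such as `.flac` or `.MP3` and leaves a transcript that happens to contain ".wav" in the middle untouched.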

And of course, don't forget to install the gradio dependency:

pip3 install -U gradio

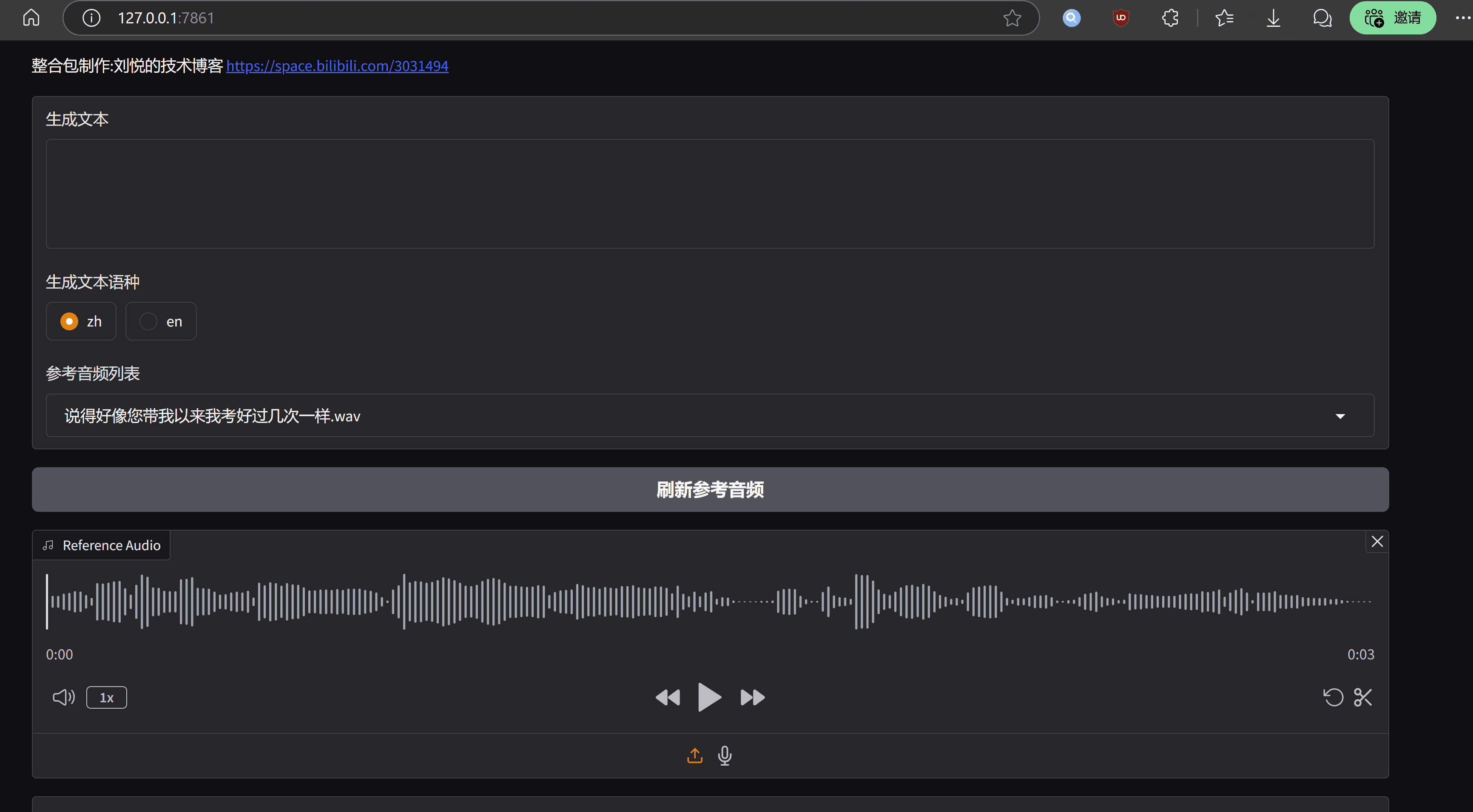

The run effect looks like this:

Concluding Remarks

MaskGCT's strength is its outstanding tone and rhythm, which are comparable to a real human voice. Its weaknesses are just as obvious: it is expensive to run, and its engineering-level optimization is insufficient. The MaskGCT project home page links to a commercial version of the model, which suggests that the official team will not put much more effort into the open-source version. Finally, here is the one-click integration package, shared with everyone:

MaskGCT One-Click Deployment Kit

https://pan.quark.cn/s/e74726b84c78

Courtesy of Ten Horsemen: https://pan.quark.cn/s/1a8428b6ff73 Extract code: kind

https://drive.google.com/drive/folders/11JHi5FnusZA34Q6zS3b3Xj5MiLpYKsLu

© Copyright notes

Article copyright belongs to AI Sharing Circle. Please do not reproduce without permission.
