A2A: Google releases open protocol for communication between AI agents

General Introduction

A2A (Agent2Agent) is an open-source protocol developed by Google that lets AI agents built on different frameworks or by different vendors communicate and collaborate. It gives agents a standardized way to discover each other's capabilities, share tasks, and get work done. The core problem A2A solves is the lack of interoperability between agents in enterprise AI. The project is hosted on GitHub, and the code is free for anyone to download, use, or contribute to. More than 50 companies, including Salesforce and SAP, already support the protocol. Google hopes A2A will establish a common language for agents and help bring multi-agent collaboration into real-world use.

Feature List

- Supports creating, assigning, and tracking the status of tasks between agents, including long-running tasks.

- Provides an AgentCard that records an agent's capabilities, address, and authentication requirements in JSON format.

- Supports multiple forms of interaction, including text, forms, and bidirectional audio and video.

- Supports asynchronous task processing, so agents can run in the background.

- Provides streaming and real-time task status updates via SSE (Server-Sent Events).

- Supports push notifications, so agents can proactively send task progress to the client.

- Secures communication and protects the data exchanged between agents.

Usage Guide

A2A is an open-source protocol, and using it requires some programming knowledge. The following steps will help you get started quickly.

Acquisition and Installation

- Visit the GitHub repository

Open https://github.com/google/A2A, the official A2A page. The README on the front page describes the project's background and basic guidelines.

- Download the code

Run the following at the command line:

```
git clone https://github.com/google/A2A.git
```

Then change into the directory:

```
cd A2A
```

- Install dependencies

A2A supports both Python and JavaScript. For Python, you need Python 3.8+; then run:

```
pip install -r requirements.txt
```

If there is no `requirements.txt`, look in `samples/python/common` for the specific dependencies.

- Run an example

The repository provides several examples. Take the Python "Expense Reimbursement" agent as an example:

- Go to `samples/python/agents/google_adk`.

- Create a `.env` file and fill in the required configuration (see the README for details).

- Run:

```
python main.py
```

This starts a basic A2A server.

Main Functions

1. Setting up the A2A server

At the core of A2A, each agent runs a server. The steps are as follows:

- Write the agent code

In Python, inherit from the `A2AServer` class. For example, a simple echo agent:

```python
# Simplified illustration; the actual server API in samples/python/common may differ.
from common.server import A2AServer


class EchoAgent(A2AServer):
    def handle_message(self, message):
        # Reply with the incoming text prefixed by "Echo:".
        return {"content": {"text": f"Echo: {message.content.text}"}}
```

- Start the server

Run:

```python
agent = EchoAgent()
agent.run(host="0.0.0.0", port=8080)
```

Other agents can then reach it at http://localhost:8080/a2a.
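To show what such a server does on the wire without depending on the repository's `common` package, here is a minimal standard-library sketch: it accepts a JSON-RPC 2.0 POST and answers a `tasks/send` call with a task object that echoes the input. The method name and the task, artifact, and part field names follow the draft specification as we understand it; `handle_rpc`, `A2AHandler`, and `serve` are hypothetical names. A real server would also publish an Agent Card, support streaming, and authenticate callers.

```python
import json
import uuid
from http.server import BaseHTTPRequestHandler, HTTPServer


def _error(req_id, code: int, message: str) -> dict:
    """Build a JSON-RPC 2.0 error response."""
    return {"jsonrpc": "2.0", "id": req_id, "error": {"code": code, "message": message}}


def handle_rpc(request) -> dict:
    """Dispatch one decoded JSON-RPC request; only an echo-style tasks/send is handled."""
    if not isinstance(request, dict) or request.get("jsonrpc") != "2.0" or "method" not in request:
        req_id = request.get("id") if isinstance(request, dict) else None
        return _error(req_id, -32600, "Invalid Request")
    if request["method"] != "tasks/send":
        return _error(request.get("id"), -32601, "Method not found")
    params = request.get("params") or {}
    parts = params.get("message", {}).get("parts", [])
    # Join the text parts of the incoming message; other part types are ignored.
    text = " ".join(p.get("text", "") for p in parts if p.get("type") == "text")
    task = {
        "id": params.get("id") or str(uuid.uuid4()),
        "status": {"state": "completed"},
        "artifacts": [{"parts": [{"type": "text", "text": f"Echo: {text}"}]}],
    }
    return {"jsonrpc": "2.0", "id": request.get("id"), "result": task}


class A2AHandler(BaseHTTPRequestHandler):
    """Answer every POST with the JSON-RPC response produced by handle_rpc."""

    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        try:
            response = handle_rpc(json.loads(self.rfile.read(length)))
        except ValueError:  # covers malformed JSON and undecodable bytes
            response = _error(None, -32700, "Parse error")
        body = json.dumps(response).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def serve(host: str = "0.0.0.0", port: int = 8080) -> None:
    """Block forever, serving JSON-RPC requests on host:port."""
    HTTPServer((host, port), A2AHandler).serve_forever()
```

Calling `serve(port=8080)` starts the sketch server. Keeping the dispatch logic in `handle_rpc`, separate from the HTTP plumbing, makes it easy to test without opening a socket.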

2. Connecting to other agents

A2A supports client-server communication:

- Create a client

Connect using `A2AClient`:

```python
from common.client import A2AClient

client = A2AClient("http://localhost:8080/a2a")
```

- Send a message

```python
message = {"content": {"text": "Test message"}, "role": "user"}
response = client.send_message(message)
print(response["content"]["text"])
```
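The `A2AClient` helper hides the wire format. For reference, here is a minimal standard-library sketch of roughly what a client sends: a JSON-RPC 2.0 `tasks/send` request whose message carries one text part. The request shape follows our reading of the draft specification; `build_send_request` and `post_json` are hypothetical helpers, not part of the repository.

```python
import json
import urllib.request
import uuid
from typing import Optional


def build_send_request(text: str, task_id: Optional[str] = None) -> dict:
    """Build a JSON-RPC 2.0 tasks/send request carrying one user text part."""
    return {
        "jsonrpc": "2.0",
        "id": str(uuid.uuid4()),                  # JSON-RPC request id
        "method": "tasks/send",
        "params": {
            "id": task_id or str(uuid.uuid4()),   # task id, chosen by the client
            "message": {"role": "user", "parts": [{"type": "text", "text": text}]},
        },
    }


def post_json(url: str, payload: dict, timeout: float = 30.0) -> dict:
    """POST a JSON payload and return the decoded JSON response body."""
    req = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read())
```

Against a running server, `post_json("http://localhost:8080/a2a", build_send_request("Test message"))` would return the JSON-RPC response. Letting the client pick the task id means it can later query or resubscribe to the same task.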

3. Using the AgentCard to discover capabilities

Every agent has an AgentCard documenting what it can do:

- Retrieve it with:

```
curl http://localhost:8080/a2a/card
```

- The returned JSON includes the agent's name, a description of its capabilities, and the operations it supports.
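As an illustration of how a client might use a card once it has fetched one, the sketch below parses a hypothetical Agent Card and checks it for a skill tag and a capability flag. The field names (`capabilities`, `skills`, `tags`, `defaultInputModes`, and so on) follow our reading of the draft specification, which also suggests publishing the card at `/.well-known/agent.json`, so the exact path may differ from the `curl` example above. The card contents and the helpers `find_skills` and `supports` are made up for illustration.

```python
import json
from typing import List

# Hypothetical Agent Card; field names follow the draft A2A specification.
CARD_JSON = """
{
  "name": "Expense Reimbursement Agent",
  "description": "Collects and submits reimbursement claims.",
  "url": "http://localhost:8080/a2a",
  "version": "1.0.0",
  "capabilities": {"streaming": true, "pushNotifications": false},
  "defaultInputModes": ["text"],
  "defaultOutputModes": ["text"],
  "skills": [
    {"id": "process_reimbursement", "name": "Process reimbursement",
     "description": "Creates a reimbursement request form.",
     "tags": ["finance", "reimbursement"]}
  ]
}
"""


def find_skills(card: dict, tag: str) -> List[str]:
    """Return the ids of the card's skills that carry the given tag."""
    return [s["id"] for s in card.get("skills", []) if tag in s.get("tags", [])]


def supports(card: dict, capability: str) -> bool:
    """True if the card declares the capability (e.g. 'streaming'); absent means False."""
    return bool(card.get("capabilities", {}).get(capability, False))


card = json.loads(CARD_JSON)
```

Treating a missing capability as unsupported is the conservative choice: a client falls back to plain request/response unless the card explicitly advertises streaming or push notifications.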

4. Task handling

A2A supports task management:

- Submit a task

Send a task request:

```python
task = {"task_type": "analyze", "data": "Sample data"}
task_id = client.submit_task(task)
```

- Track its status

Query the task's progress:

```python
status = client.get_task_status(task_id)
print(status)  # e.g. submitted, working, completed
```
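A client that polls for status needs to know when to stop. The draft specification lists more states than the three in the comment above, including `input-required`, `canceled`, `failed`, and `unknown`. Here is a minimal polling sketch under that assumption; `wait_for_task` is a hypothetical helper, and `get_state` stands in for whatever call returns the current state, such as the article's `client.get_task_status(task_id)` or a `tasks/get` request.

```python
import time
from typing import Callable

# Task states as listed in the draft A2A specification.
TASK_STATES = {"submitted", "working", "input-required",
               "completed", "canceled", "failed", "unknown"}
TERMINAL_STATES = {"completed", "canceled", "failed"}


def wait_for_task(get_state: Callable[[], str],
                  interval: float = 1.0, max_polls: int = 60) -> str:
    """Poll get_state() until the task is terminal or waits for user input.

    Returns the last observed state; raises TimeoutError after max_polls polls.
    """
    for _ in range(max_polls):
        state = get_state()
        if state not in TASK_STATES:
            raise ValueError(f"unexpected task state: {state!r}")
        # input-required is not terminal, but the client must act before the task can continue.
        if state in TERMINAL_STATES or state == "input-required":
            return state
        time.sleep(interval)
    raise TimeoutError("task did not finish in time")
```

For long-running tasks, the streaming and push-notification mechanisms described below avoid this polling loop entirely.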

5. Streaming

For servers that support `streaming`, the client can receive updates in real time:

- Submit the task with `tasks/sendSubscribe`.

- The server returns status updates or results via SSE, for example:

```
event: TaskStatusUpdateEvent
data: {"task_id": "123", "state": "working"}
```
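On the client side, an SSE stream is plain text: `field: value` lines, with a blank line ending each event. The sketch below parses such lines into `(event, data)` pairs, assuming each `data:` payload is JSON, as in the example above. Depending on the implementation, the payload may be a full JSON-RPC response rather than the simplified object shown. `parse_sse` is a hypothetical helper that covers only the basic SSE rules, not reconnection or `id:`/`retry:` fields.

```python
import json
from typing import Iterable, Iterator, Tuple


def parse_sse(lines: Iterable[str]) -> Iterator[Tuple[str, dict]]:
    """Yield (event_name, data) pairs from Server-Sent Events text lines.

    Multi-line data is joined with newlines, lines starting with ':' are
    comments, and an unterminated trailing event is discarded, as SSE requires.
    """
    event, data = "message", []           # "message" is the SSE default event type
    for raw in lines:
        line = raw.rstrip("\r\n")
        if not line:                      # a blank line dispatches the pending event
            if data:
                yield event, json.loads("\n".join(data))
            event, data = "message", []
            continue
        if line.startswith(":"):          # comment or keep-alive
            continue
        field, _, value = line.partition(":")
        value = value[1:] if value.startswith(" ") else value
        if field == "event":
            event = value
        elif field == "data":
            data.append(value)
```

Because it consumes any iterable of lines, the same function works on a list in tests or on the line iterator of a streaming HTTP response.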

6. Push notifications

Servers that support `pushNotifications` can proactively notify the client:

- Set up a webhook:

```python
client.set_push_notification_webhook("https://your-webhook-url")
```

- The server then pushes a message whenever the task is updated.
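Under the hood, registering a webhook amounts to sending the server a push-notification config for a task. The sketch below builds such a request using the method name `tasks/pushNotification/set` and the `url`/`token` config fields as we read them in the draft specification; treat both as assumptions to check against the spec. It also shows a constant-time check of the shared token on the receiving side. How the server presents the token to your webhook (for example, in a request header) is likewise an assumption. Both helper names are hypothetical.

```python
import secrets
from typing import Optional


def build_push_config_request(task_id: str, webhook_url: str,
                              token: Optional[str] = None) -> dict:
    """Build a JSON-RPC request registering a webhook for one task's updates."""
    return {
        "jsonrpc": "2.0",
        "id": secrets.token_hex(8),
        "method": "tasks/pushNotification/set",
        "params": {
            "id": task_id,
            "pushNotificationConfig": {
                "url": webhook_url,
                # Shared secret the webhook can check on each incoming notification.
                "token": token or secrets.token_urlsafe(16),
            },
        },
    }


def is_trusted_notification(received_token: Optional[str], expected_token: str) -> bool:
    """Compare the token on an incoming notification in constant time."""
    return received_token is not None and secrets.compare_digest(received_token, expected_token)
```

A per-task random token lets the webhook reject forged notifications without needing a full authentication scheme, although production setups would typically add one.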

Notes

- Running in a virtual environment is recommended to avoid dependency conflicts.

- The sample code lives in the `samples` directory and covers a variety of frameworks, such as CrewAI and LangGraph.

- For help, ask questions in GitHub Discussions.

With these steps, you can build and run A2A agents and try out their communication capabilities.

Protocol Specification (JSON Format)

https://github.com/google/A2A/blob/main/specification/json/a2a.json

Application Scenarios

- Enterprise task collaboration

One finance agent collects reimbursement claims and an approval agent reviews them; A2A lets them work together seamlessly to automate the process.

- Cross-platform customer service

One agent handles text inquiries and another handles voice requests; A2A lets them share information, improving efficiency.

- Development and testing

Developers use A2A to simulate multi-agent environments and test protocol or framework compatibility.

Q&A

- What languages does A2A support?

The official examples are in Python and JavaScript, but the protocol is language-independent and can be implemented in other languages.

- Is it free?

Yes. A2A is fully open source and the code is free, although running it may incur server costs.

- How can I help improve the protocol?

Submit issues on GitHub or send private feedback via Google Forms.

A2A Protocol: Is Google Betting on the Future of AI Agent Interoperability?

Artificial Intelligence (AI) Agents are moving from concept to reality in enterprise applications, where they are expected to autonomously handle repetitive or complex tasks to boost productivity. From automating IT support to optimizing supply chain planning, there is a growing case for deploying AI agents across the enterprise. However, a key bottleneck has emerged: how can these agents from different vendors, built on different frameworks, collaborate effectively?

Current AI Agents tend to act like silos of information that are difficult to interact with across system and application boundaries. This greatly limits their potential to deliver greater value. To address this situation, Google Cloud recently launched an open protocol called Agent2Agent (A2A) with more than 50 technology partners and service providers, including Atlassian, Salesforce, SAP, ServiceNow, and others.

Breaking Down Barriers: The Core Objective of the A2A Protocol

The A2A protocol aims to provide a standardized way for AI Agents to communicate securely, exchange information, and coordinate actions regardless of the underlying technology or developer. In effect, it offers a common "language" and set of "rules" for the increasingly fragmented AI Agent ecosystem.

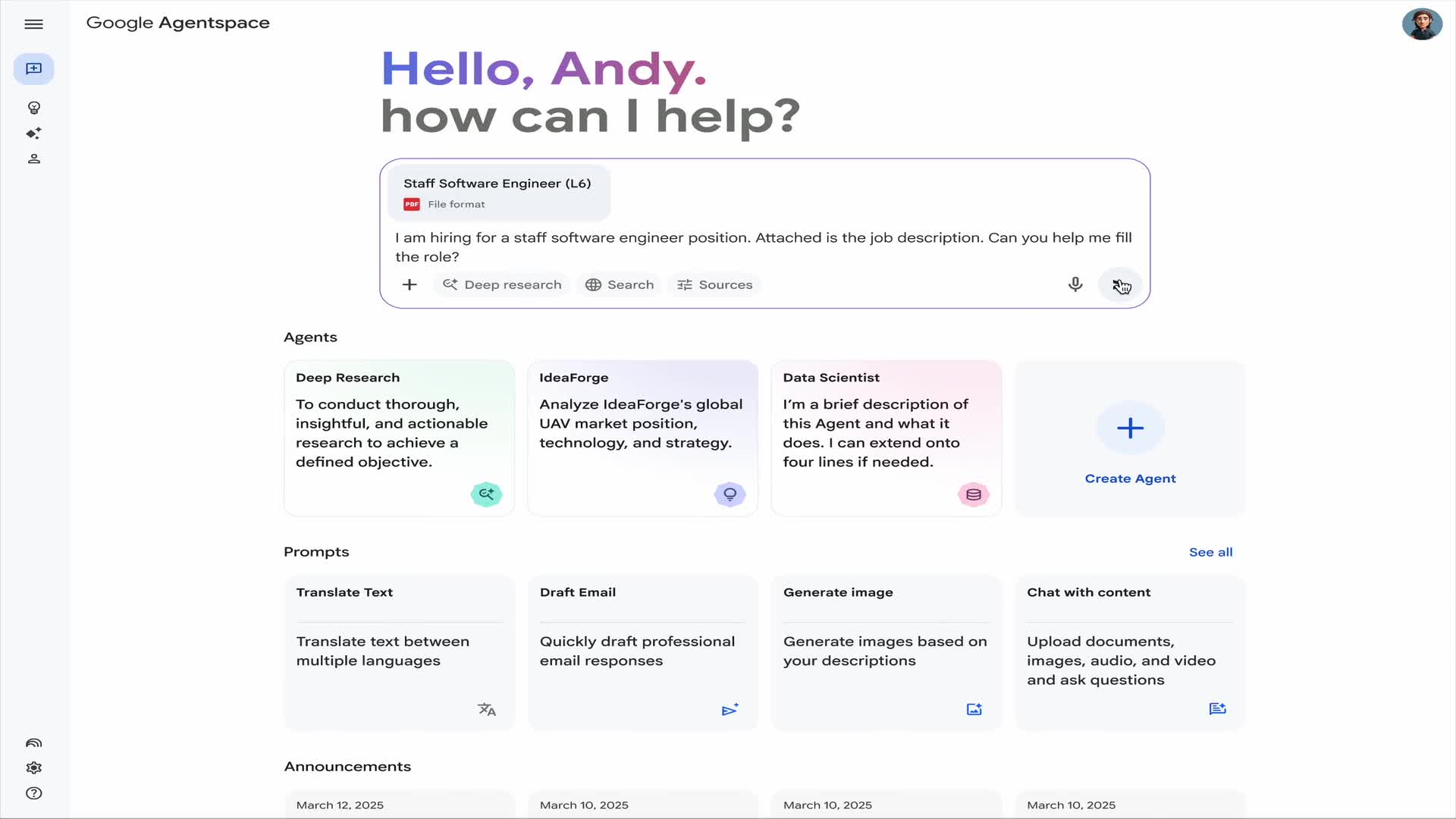

Rather than treating an Agent as just another tool to invoke, A2A attempts to enable more natural, unstructured collaboration between Agents. This is critical for complex tasks that require multiple Agents to work in tandem over hours or even days. Imagine a hiring manager simply giving instructions to a personal Agent, which then automatically collaborates with other Agents that specialize in screening resumes, scheduling interviews, and even conducting background checks, all without the manager having to work manually in each system.

It's important to note that A2A was not created from scratch; it is built on mature existing standards such as HTTP, SSE, and JSON-RPC. This lowers the barrier for organizations to integrate it into their existing IT architecture. The protocol also emphasizes security and supports enterprise-grade authentication and authorization schemes. In addition, its support for text, audio, video, and other modalities bodes well for future applications.
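One practical consequence of building on JSON-RPC is that every client has to separate results from error objects in responses. Here is a minimal sketch using standard JSON-RPC 2.0 conventions; `unwrap_response` and `A2ARpcError` are hypothetical names. A2A-specific error codes (for example, for an unknown task) are defined in the specification and not reproduced here.

```python
import json


class A2ARpcError(Exception):
    """Raised when a JSON-RPC response carries an error object."""

    def __init__(self, code: int, message: str, data=None):
        super().__init__(f"{code}: {message}")
        self.code, self.message, self.data = code, message, data


def unwrap_response(body: str, expected_id) -> dict:
    """Decode a JSON-RPC 2.0 response body and return its result, or raise."""
    resp = json.loads(body)
    if resp.get("jsonrpc") != "2.0":
        raise ValueError("not a JSON-RPC 2.0 response")
    if "error" in resp:
        # Error responses may carry a null id (e.g. parse errors), so check them first.
        err = resp["error"]
        raise A2ARpcError(err.get("code", 0), err.get("message", ""), err.get("data"))
    if resp.get("id") != expected_id:
        raise ValueError(f"response id {resp.get('id')!r} does not match {expected_id!r}")
    return resp["result"]
```

Checking the error object before the id matters because a server that cannot parse a request replies with `"id": null`, and an id mismatch would otherwise hide the real error.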

For its part, Google says the design of A2A draws on its experience deploying Agent systems internally at scale, and that A2A is intended to complement the Model Context Protocol (MCP) introduced by Anthropic. While MCP focuses on providing Agents with the tools and contextual information they need, A2A focuses on direct communication and collaboration between Agents.

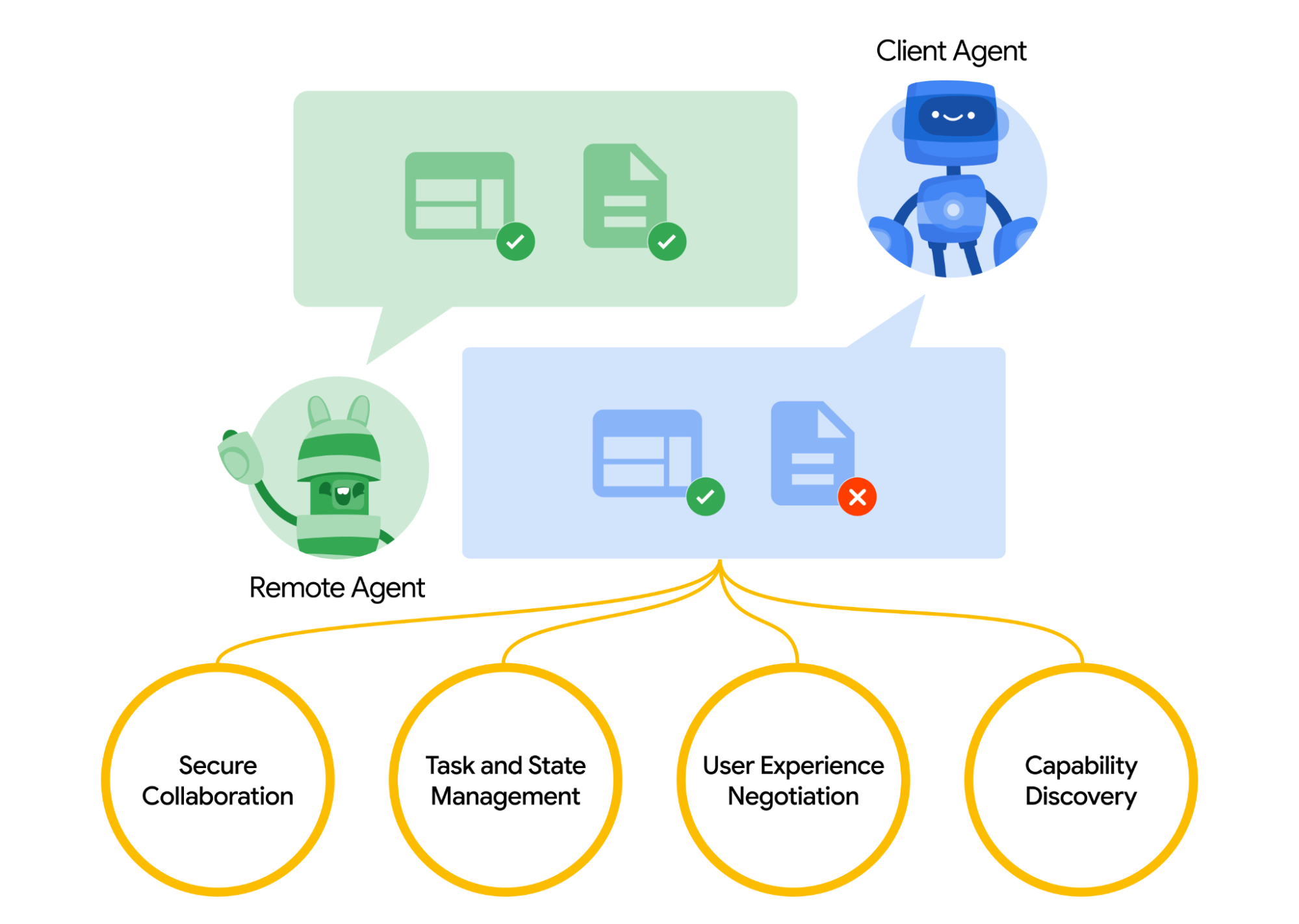

How does A2A work?

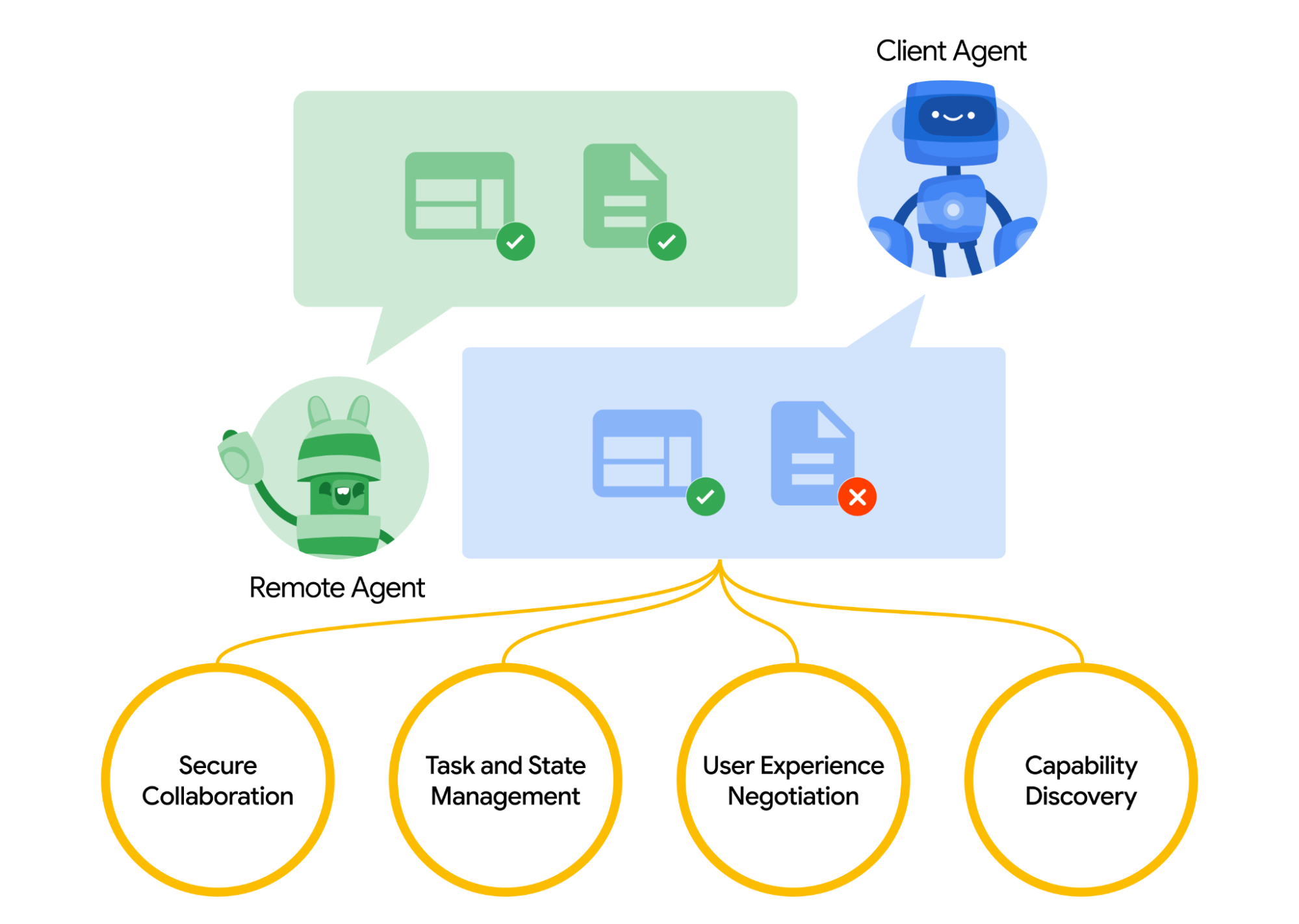

According to the published draft specification, A2A interactions are centered around a "Client Agent" (initiating tasks) and a "Remote Agent" (executing tasks).

Its core mechanisms include:

- Capability discovery. The remote Agent declares its capabilities in a JSON "Agent Card", which helps the client Agent find suitable collaborators. This is similar to a kind of "yellow pages" for Agents.

- Task management. Interactions are oriented towards the completion of "tasks". The protocol defines the task object and its lifecycle, supporting both immediate completion and long-duration tasks that require state synchronization. The output of a task is called an "artifact".

- Collaboration. Messages are sent between Agents to convey context, replies, artifacts, or user commands.

- User experience negotiation. Messages contain different types of content "parts" that allow the Agent to negotiate the desired content format (e.g. iframe, video, web form, etc.) to suit the end user's interface capabilities.
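One way a client might apply the negotiation idea is to list the output modes it can render in order of preference and pick the first one the remote Agent offers. A minimal sketch: the `text` and `data` part types follow our reading of the draft specification, while `negotiate_mode`, `text_of`, the sample message, and the mode strings are illustrative assumptions.

```python
from typing import List, Optional

# Hypothetical Agent message with two parts, shaped after the draft spec's Message/Part objects.
message = {
    "role": "agent",
    "parts": [
        {"type": "text", "text": "Please fill in the form below."},
        {"type": "data", "data": {"amount": None, "purpose": None}},
    ],
}


def negotiate_mode(client_accepts: List[str], agent_outputs: List[str]) -> Optional[str]:
    """Pick the first mode in the client's preference order that the Agent offers."""
    for mode in client_accepts:
        if mode in agent_outputs:
            return mode
    return None


def text_of(msg: dict) -> str:
    """Concatenate a message's text parts, ignoring other part types."""
    return "\n".join(p["text"] for p in msg["parts"] if p.get("type") == "text")
```

Letting the client's preference order win keeps the decision with the side that knows what the end user's interface can actually display.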

A concrete example is the software engineer recruitment scenario mentioned earlier. The user's Agent (Client) can discover and connect to the Agent (Remote) that handles the data of the hiring platform, the Agent (Remote) that is responsible for scheduling the interview calendar, and the Agent (Remote) that subsequently handles the background checks, and coordinates them to complete the hiring process together through the A2A protocol.
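The coordination pattern in this hiring example can be sketched in-process. In the sketch below, each remote Agent is a stub with skill tags and a handler, and the client Agent routes each step to the first Agent whose tags match, passing each result to the next step. Everything here is hypothetical: `RemoteAgent`, `ClientAgent`, and the tags are illustrative, and in a real deployment each `handle` call would be a `tasks/send` request to a remote A2A server discovered through its Agent Card.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class RemoteAgent:
    """Stand-in for a remote A2A Agent: a name, skill tags, and a task handler."""
    name: str
    tags: List[str]
    handle: Callable[[dict], dict]   # in practice: a tasks/send call over HTTP


@dataclass
class ClientAgent:
    """Hypothetical client Agent that routes each step to an Agent with a matching tag."""
    directory: List[RemoteAgent] = field(default_factory=list)

    def find(self, tag: str) -> RemoteAgent:
        for agent in self.directory:
            if tag in agent.tags:
                return agent
        raise LookupError(f"no Agent offers {tag!r}")

    def run_pipeline(self, steps: List[str], payload: dict) -> dict:
        # Each step's output becomes the next step's input.
        for tag in steps:
            payload = self.find(tag).handle(payload)
        return payload
```

A strictly sequential pipeline is the simplest case; A2A's task model also allows the client to run independent steps concurrently or pause when an Agent reports `input-required`.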

Ecosystem and Challenges: Strategic Considerations Behind an Open Protocol

Google Cloud's choice to launch A2A as an open protocol, backed by so many industry partners, is a strategic one.

First, it reflects Google's ambition to set the standard in the AI Agent field and take a leading position. Attracting broad participation through an open protocol can accelerate the formation of an Agent ecosystem around Google Cloud and counter competitors that may build closed systems.

Second, a unified, open interoperability standard is welcome news for enterprise customers. It gives them more flexibility to mix and match best-of-breed Agents from different vendors, avoid lock-in to a single platform, and, ideally, lower long-term integration costs. Early support from numerous partners, from Box and Cohere to SAP and Workday, as well as consulting giants such as Accenture and Deloitte, confirms the market's expectations. The active participation of these partners is key to A2A's success: beyond providing technical validation, they can drive real-world adoption of the protocol on their own platforms and in their customers' projects.

However, the protocol's success is not a foregone conclusion. Challenges remain:

- Speed and Breadth of Adoption. The value of the protocol lies in its widespread adoption. It remains to be seen whether enough developers and organizations will be convinced to actually build and integrate Agents using the A2A standard.

- Standards Evolution and Maintenance. Open standards require sustained community input and effective governance mechanisms to accommodate rapidly evolving AI technologies.

- Proven results. The protocol's robustness, efficiency, and security in handling highly complex real-world collaboration tasks still need to be fully tested in production environments.

The release of A2A marks a significant step in the industry's efforts to solve the AI Agent interoperability challenge. Although it is still a draft, with a production-ready version planned for later this year, it paints a picture of a future in which AI Agents work together across boundaries. Whether Google and its partners can turn this vision into reality will have far-reaching implications for the future of enterprise automation and AI adoption.

© Copyright notice

Article copyright: AI Sharing Circle. Please do not reproduce without permission.
