The 70% Completion Trap: The Final 30% Challenge of AI-Assisted Coding

After being deeply involved in AI-assisted development for the past few years, I've noticed an interesting phenomenon. While engineers report significant productivity gains from using AI, the actual software we use on a daily basis doesn't seem to be significantly better. What's going on here?

I think I know why, and the answer reveals some basic facts about software development that we need to face. Let me share my findings.

How developers actually use AI

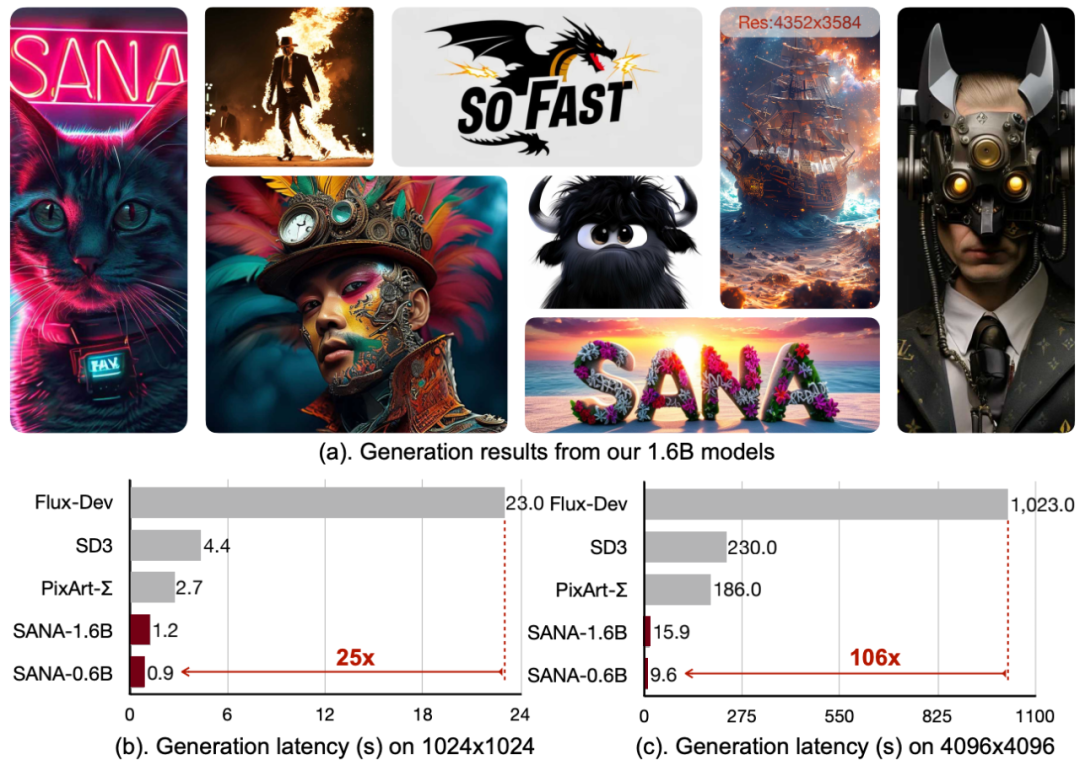

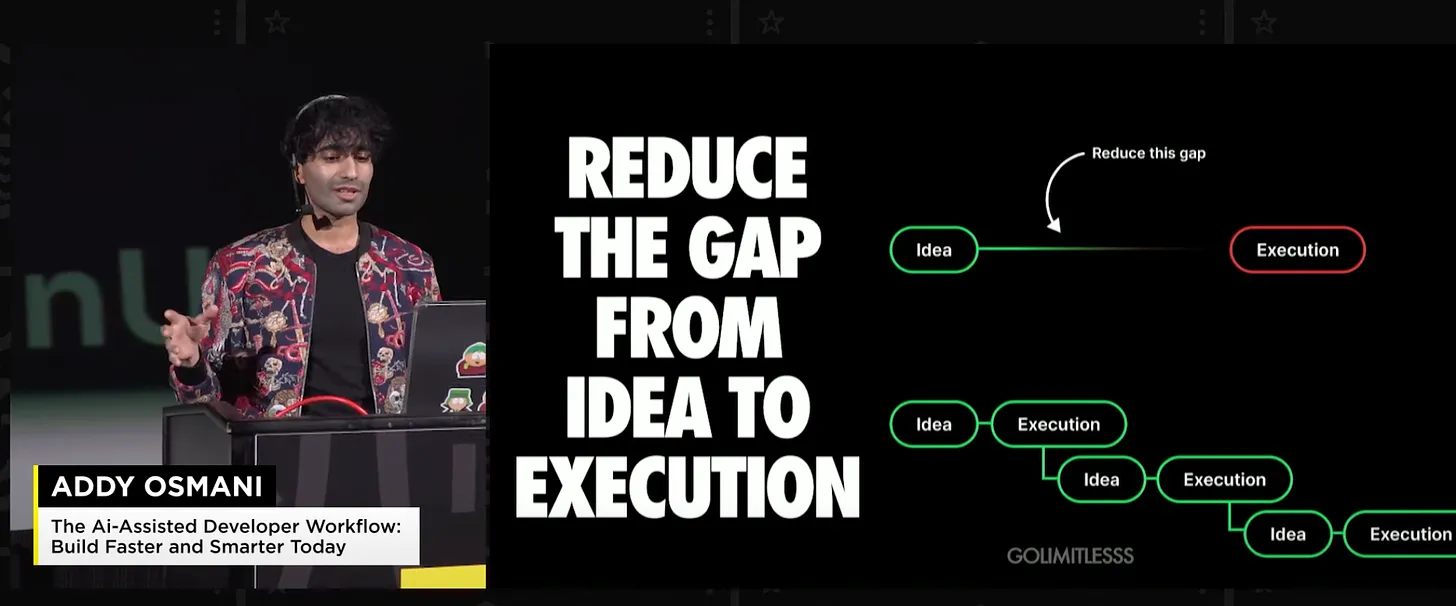

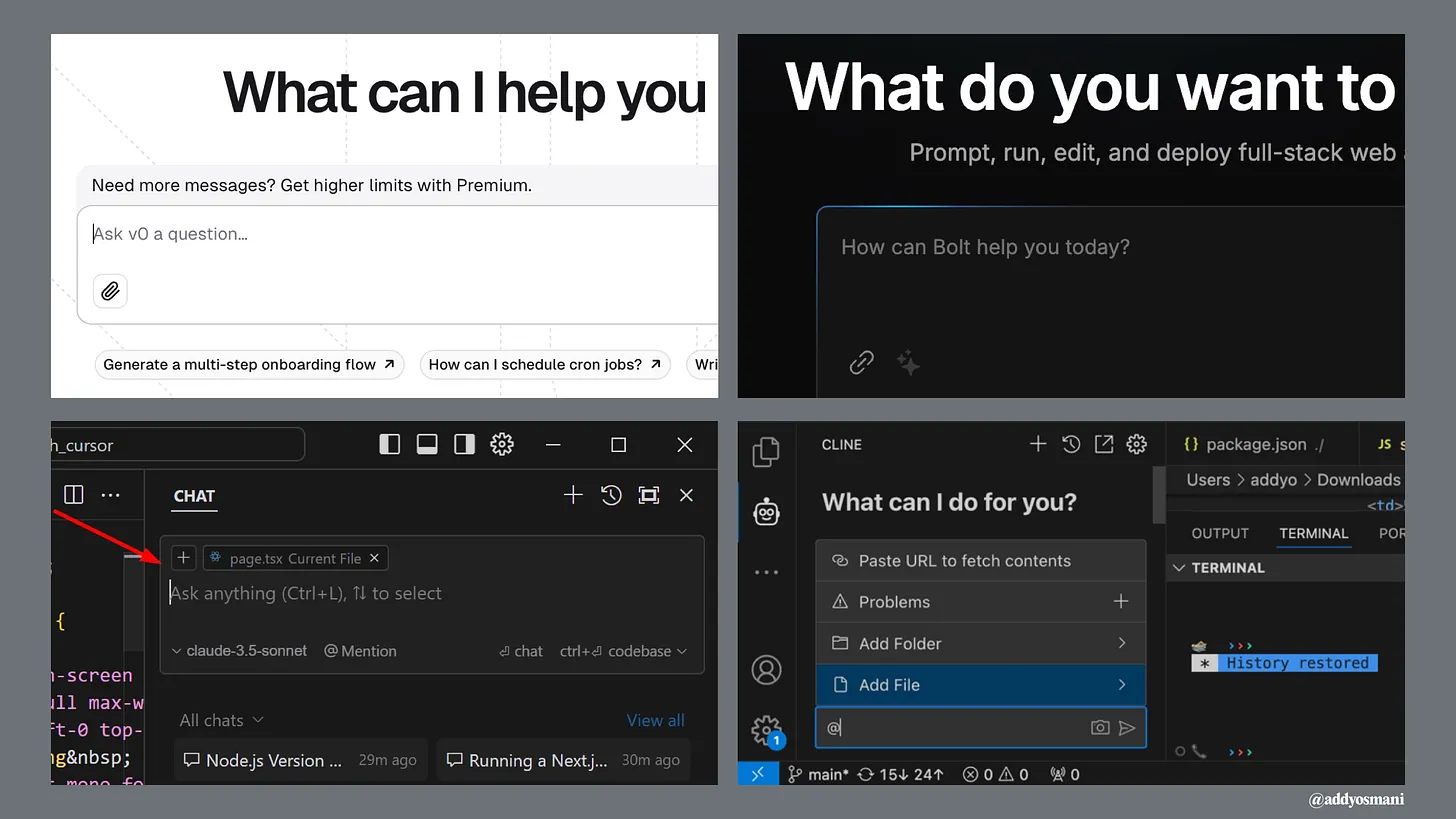

I've observed two different patterns in how teams develop with AI. Let's call them "bootstrappers" and "iterators". Both help engineers (and even non-technical users) bridge the gap from idea to execution (or MVP).

Bootstrappers: from zero to MVP

Tools like Bolt, v0, and screenshot-to-code AI are revolutionizing the way we bootstrap new projects. These teams usually:

- Start with a design or rough concept

- Use AI to generate a complete initial codebase

- Get a working prototype in hours or days, not weeks!

- Focus on rapid validation and iteration

The results can be impressive. I recently saw an indie developer use Bolt to turn a Figma design into a working web application in a very short period of time. It wasn't ready for production, but it was good enough to get initial user feedback.

Iterators: daily development

The second camp uses tools like Cursor, Cline, Copilot, and WindSurf for day-to-day development workflows. It's less dramatic, but potentially more transformative. These developers are:

- Using AI for code completion and suggestions

- Leveraging AI for complex refactoring tasks

- Generating tests and documentation

- Using AI as a "pair programmer" to solve problems

But here's the rub: while both approaches can significantly accelerate development, they come with hidden costs that aren't immediately visible.

The Hidden Cost of "AI Speed"

When you watch a senior engineer work with an AI tool like Cursor or Copilot, it looks like magic. They can build an entire feature in minutes, including tests and documentation. But look closely and you'll notice something critical: they don't just accept what the AI suggests. They're constantly:

- Refactoring the generated code into smaller, more focused modules

- Adding handling for edge cases the AI missed

- Strengthening type definitions and interfaces

- Questioning architectural decisions

- Adding comprehensive error handling

In other words, they are applying years of hard-won engineering wisdom to shape and constrain the output of the AI. The AI is accelerating their implementation, but it's their expertise that keeps the code maintainable.
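To make that concrete, here is a minimal sketch of what such a review pass might look like. The `Order` type and both functions are hypothetical names invented for illustration. The first function stands in for a plausible AI first draft; the second shows the kind of typing, edge-case handling, and error handling an experienced reviewer might add.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class Order:
    """Hypothetical order record, used only for this illustration."""
    order_id: str
    total_cents: int


def average_order_value_draft(orders):
    # A typical first draft: correct on the happy path only.
    return sum(o.total_cents for o in orders) / len(orders)


def average_order_value(orders: Sequence[Order]) -> float:
    """Return the mean order total in cents, or 0.0 when there are no orders."""
    if not orders:
        # Edge case the draft misses: an empty list raises ZeroDivisionError.
        return 0.0
    for order in orders:
        if order.total_cents < 0:
            # Fail loudly with context instead of silently skewing the result.
            raise ValueError(f"order {order.order_id} has a negative total")
    return sum(order.total_cents for order in orders) / len(orders)
```

Whether an empty list should return `0.0` or raise is a judgment call that depends on the product. The point is that someone has to make that call deliberately.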

Junior engineers often miss these critical steps. They are more likely to accept the output of AI, leading to what I call "house of cards code" - it looks complete, but breaks down under real-world stress.

The knowledge paradox

This is the most counter-intuitive thing I've found: AI tools help experienced developers more than beginners. This seems backwards - shouldn't AI democratize coding?

The reality is that AI is like having a very eager junior developer on your team. They can write code quickly, but they need constant supervision and correction. The more you know, the better you can mentor them.

This creates what I call the "knowledge paradox":

- Seniors use AI to accelerate what they already know how to do

- Juniors try to use AI to learn what to do

- The results differ dramatically

I see senior engineers using AI to:

- Rapidly prototype ideas they already understand

- Generate basic implementations that they can subsequently improve

- Explore alternatives to known problems

- Automate routine coding tasks

Meanwhile, juniors often:

- Accept incorrect or outdated solutions

- Miss key security and performance considerations

- Struggle to debug AI-generated code

- Build fragile systems they don't fully understand

The 70% problem: AI's learning curve paradox

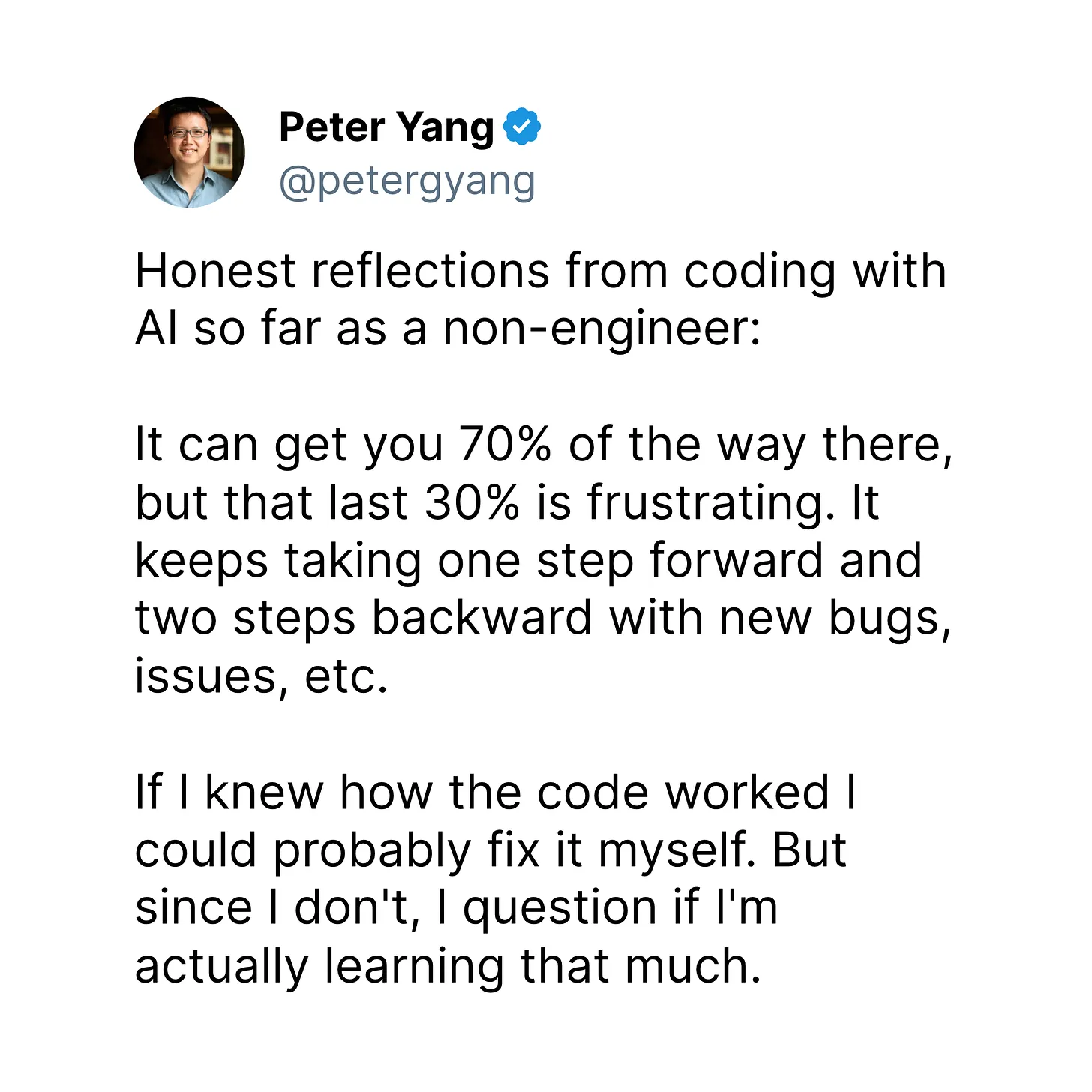

A recent tweet that caught my attention perfectly captures what I've observed in the field: non-engineers coding with AI find themselves running into frustrating obstacles. They can get 70% of the way there surprisingly fast, but the final 30% becomes an exercise in diminishing returns.

This "70% problem" reveals something important about the current state of AI-assisted development. Initially the progress feels amazing - you can describe what you want and an AI tool like v0 or Bolt will generate a working prototype that looks impressive. But then reality sets in.

The two-steps-back pattern

A predictable pattern usually occurs next:

- You try to fix a small bug

- The AI suggests a change that seems reasonable

- The fix breaks something else

- You ask the AI to fix the new problem

- That in turn creates two more problems

- Rinse and repeat

This cycle is especially painful for non-engineers because they lack the mental models to understand what actually went wrong. When an experienced developer encounters an error, they can infer potential causes and solutions based on years of pattern recognition. Without this background, you are essentially playing a game of whack-a-mole with code you don't fully understand.

The learning paradox, continued

There's a deeper problem here: the very thing that makes AI coding tools easy to use for non-engineers - their ability to handle complexity on your behalf - can actually hinder learning. When code just "shows up" and you don't understand the underlying principles:

- You don't develop debugging skills

- You miss out on learning the fundamental patterns

- You can't reason about architectural decisions

- You struggle to maintain and evolve the code

This creates a dependency where you need to keep going back to the AI to solve problems, rather than developing your own expertise in dealing with them.

Knowledge gap

The most successful non-engineers I've seen using AI coding tools have taken a hybrid approach:

- Use AI for rapid prototyping

- Take the time to understand how the generated code works

- Learn basic programming concepts while using AI

- Build up a knowledge base step by step

- Use AI as a learning tool, not just a code generator

But it takes patience and dedication - the opposite of what many hope to achieve by using AI tools.

Implications for the future

The "70% problem" suggests that current AI coding tools are best viewed as:

- Prototyping accelerators for experienced developers

- Learning aids for those committed to understanding development

- MVP generator for quick validation of ideas

But they're not yet the solution to democratizing coding that many hope for. The final 30% - the part that makes software production-ready, maintainable and robust - still requires real engineering knowledge.

The good news? As tools improve, the gap may close. But for now, the most pragmatic approach is to use AI to accelerate learning, not replace it altogether.

What actually works: a practical model

After observing dozens of teams, here's what I've seen consistently work:

1. "AI first draft" model

- Let the AI generate a basic implementation

- Manually review and refactor for modularity

- Add comprehensive error handling

- Write comprehensive tests

- Document key decisions

2. The "continuous dialogue" model

- Start a new AI chat for each distinct task

- Keep context focused and minimal

- Review and commit changes frequently

- Maintain a tight feedback loop

3. "Trust but verify" model

- Use AI for initial code generation

- Manually review all critical paths

- Automate testing of edge cases

- Conduct regular security audits
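As a rough sketch of the "automate testing of edge cases" step, the snippet below treats a small function as if it had been generated by an AI tool and checks it against a table of deliberately awkward inputs. The `slugify` helper, the `EDGE_CASES` table, and `failing_cases` are all hypothetical names made up for this example. In a real project these checks would normally live in whatever test runner the team already uses.

```python
import re


def slugify(title: str) -> str:
    """Stand-in for AI-generated code under review (hypothetical helper)."""
    slug = re.sub(r"[^a-z0-9]+", "-", title.lower())
    return slug.strip("-")


# Each pair is (input, expected output); the inputs are deliberately awkward.
EDGE_CASES = [
    ("Hello World", "hello-world"),
    ("", ""),
    ("   ", ""),
    ("--Already--Slugged--", "already-slugged"),
    ("Price: $5 (50% off!)", "price-5-50-off"),
]


def failing_cases() -> list[tuple[str, str, str]]:
    """Return (input, expected, actual) for every edge case that fails."""
    failures = []
    for raw, expected in EDGE_CASES:
        actual = slugify(raw)
        if actual != expected:
            failures.append((raw, expected, actual))
    return failures
```

An empty result from `failing_cases()` means the generated code survived the cases you thought of. The value is in writing the table yourself rather than asking the AI which cases matter.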

Looking ahead: the real future of AI?

Despite these challenges, I'm optimistic about the role of AI in software development. The key is to understand what it's really good at:

- Accelerating the known

AI is good at helping us implement patterns we already understand. It's like having an infinitely patient pair programmer who can type very fast.

- Exploring the possible

AI is great for quickly prototyping ideas and exploring different approaches. It's like having a sandbox in which we can quickly test concepts.

- Automating the routine

AI greatly reduces the time spent on boilerplate and routine coding tasks, allowing us to focus on the interesting problems.

What does this mean for you?

If you're just getting started with AI-assisted development, here are my recommendations:

- Start small

  - Use AI for isolated, well-defined tasks

  - Review every line of generated code

  - Build up to larger features gradually

- Stay modular

  - Break everything down into small, focused files

  - Maintain clear interfaces between components

  - Document your module boundaries

- Trust your experience

  - Use AI to accelerate your judgment, not replace it

  - Question generated code that doesn't feel right

  - Maintain your engineering standards
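The modularity advice above is easier to see in code. Here is a minimal sketch, with every name (`RateSource`, `FixedRates`, `convert`) invented for illustration. The interface is the documented module boundary. Generated code can then be swapped in behind it without touching the callers.

```python
from typing import Protocol


class RateSource(Protocol):
    """Module boundary: anything that can report an exchange rate."""

    def rate(self, from_currency: str, to_currency: str) -> float: ...


class FixedRates:
    """Simple in-memory implementation, handy for tests and prototypes."""

    def __init__(self, rates: dict[tuple[str, str], float]) -> None:
        self._rates = rates

    def rate(self, from_currency: str, to_currency: str) -> float:
        if from_currency == to_currency:
            return 1.0
        try:
            return self._rates[(from_currency, to_currency)]
        except KeyError:
            raise LookupError(f"no rate for {from_currency}->{to_currency}") from None


def convert(amount: float, from_currency: str, to_currency: str, source: RateSource) -> float:
    """Depends only on the RateSource interface, not on any concrete class."""
    return round(amount * source.rate(from_currency, to_currency), 2)
```

For example, `convert(10, "USD", "EUR", FixedRates({("USD", "EUR"): 0.5}))` uses the in-memory rates. A network-backed source would only need to provide the same `rate` method.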

The rise of agentic software engineering

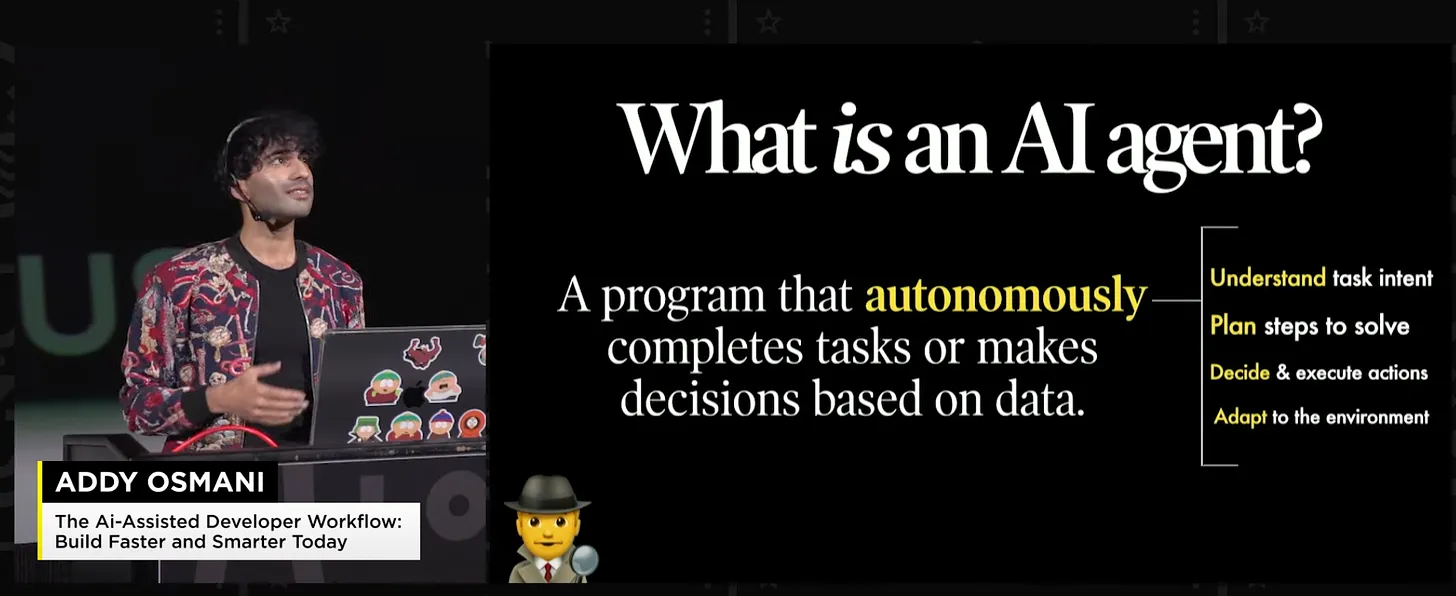

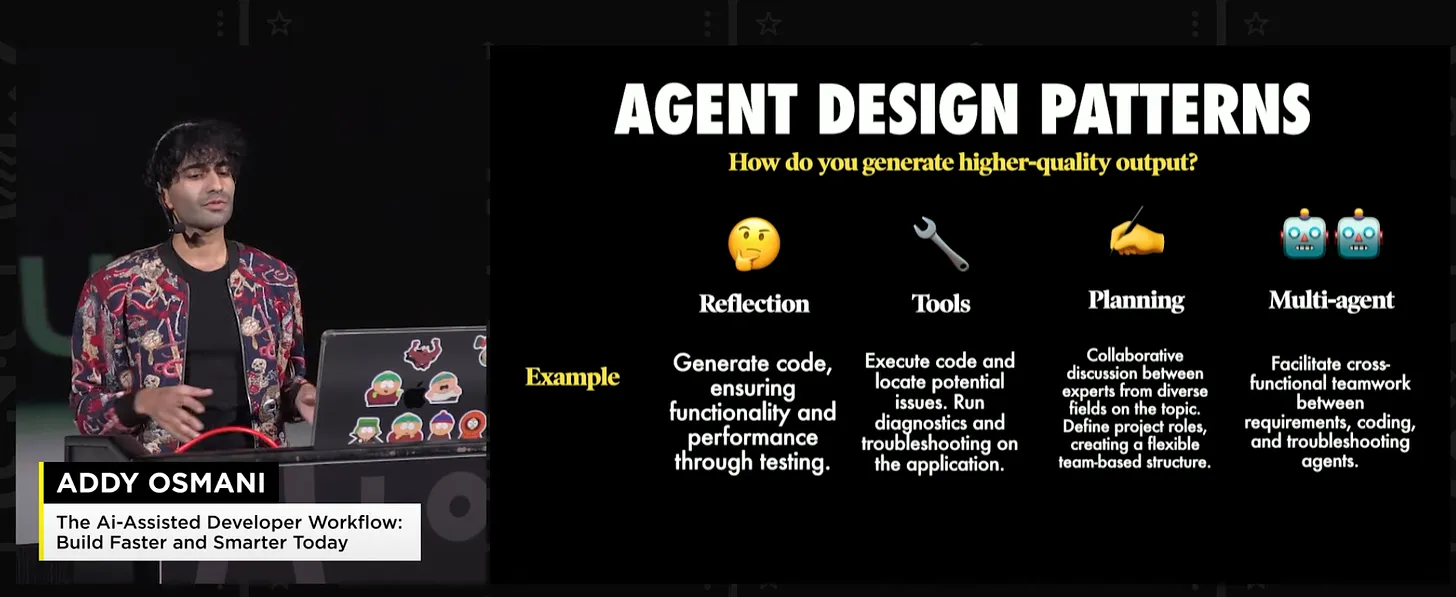

As we enter 2025, the landscape of AI-assisted development is changing dramatically. While current tools have changed the way we prototype and iterate, I believe we're on the cusp of an even more significant shift: the rise of agentic software engineering.

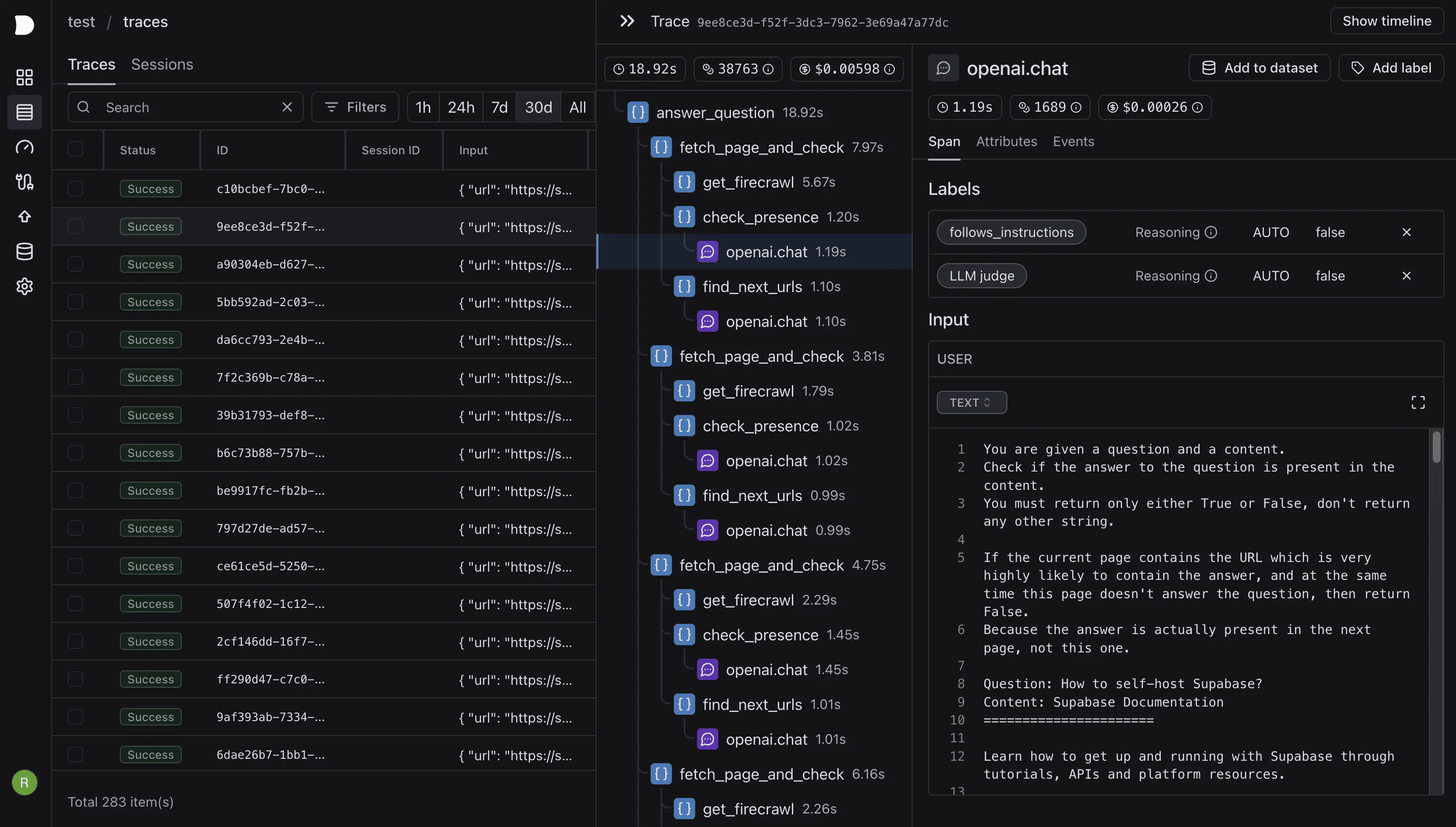

What do I mean by "agentic"? Instead of just responding to prompts, these systems can plan, execute, and iterate on solutions with increasing autonomy.

We are already seeing early signs of this evolution:

From responders to collaborators

Current tools mostly wait for our commands. But look at newer features like Anthropic's computer use in Claude, or Cline's ability to automatically launch browsers and run tests. These aren't just glorified autocomplete - they actually understand the task and take the initiative to solve problems.

Think about debugging. Instead of just suggesting fixes, these agents can:

- Proactively identify potential issues

- Launch and run test suites

- Inspect UI elements and capture screenshots

- Propose and implement fixes

- Verify that the solutions work (this could be a big deal)

The multimodal future

Next-generation tools may do more than just handle code - they could seamlessly integrate:

- Visual understanding (UI screenshots, mockups, diagrams)

- Verbal language conversations

- Environment interaction (browsers, terminals, APIs)

This multimodal capability means that they can understand and work with software the way humans do - holistically, not just at the code level.

Autonomous but guided

The key insight I've gained from using these tools is that the future isn't about AI replacing developers - it's about AI becoming an increasingly capable collaborator that can take the initiative while still respecting human guidance and expertise.

The most effective teams in 2025 may be those that learn to:

- Set clear boundaries and guidelines for their AI agents

- Build strong architectural patterns that agents can work within

- Create effective feedback loops between human and AI capabilities

- Maintain human oversight while leveraging AI autonomy

English-first development environment

As Andrej Karpathy points out:

"English is becoming the hottest new programming language."

This is a fundamental shift in how we will interact with development tools. The ability to think clearly and communicate precisely in natural language is becoming as important as traditional coding skills.

This shift toward agentic development will require us to evolve our skills:

- Stronger systems design and architectural thinking

- Better requirements specification and communication

- Increased focus on quality assurance and validation

- Enhanced collaboration between human and AI capabilities

The return of software as craft?

While AI makes it easier than ever to build software quickly, we risk losing something critical - the art of creating truly polished, consumer-quality experiences.

The demo-quality trap

This is becoming a pattern: teams use AI to quickly build impressive demos. The happy path works beautifully. Investors and social networks love it. But when real users start clicking around? That's when things fall apart.

I've seen this with my own eyes:

- Error messages that mean nothing to the average user

- Edge cases that lead to application crashes

- Messy UI states that have never been cleaned up

- Accessibility that is completely overlooked

- Performance issues on slower devices

These aren't just P2 bugs - they're the difference between software that people tolerate and software that people love.
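To illustrate the first item, here is a small sketch of one way to keep raw exceptions away from users. The message table, the `FALLBACK` text, and the `user_message` function are hypothetical and would need to be adapted to a real application. The idea is simply to log the technical detail for developers and show the user something they can act on.

```python
import logging

logger = logging.getLogger("app")

# Hypothetical mapping from internal failures to plain-language messages.
USER_MESSAGES: dict[type[Exception], str] = {
    FileNotFoundError: "We couldn't find that file. It may have been moved or deleted.",
    PermissionError: "You don't have access to this item. Ask the owner to share it with you.",
    TimeoutError: "This is taking longer than expected. Please check your connection and try again.",
}
FALLBACK = "Something went wrong on our side. Please try again in a moment."


def user_message(error: Exception) -> str:
    """Translate an exception into text a non-technical user can act on."""
    # Keep the technical detail for developers, out of the user's view.
    logger.error("operation failed: %r", error)
    for error_type, message in USER_MESSAGES.items():
        if isinstance(error, error_type):
            return message
    return FALLBACK
```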

The lost art of polish

Creating true self-service software - the kind where users don't have to contact support - requires a different mindset:

- Obsessing over error messages

- Testing on slow connections

- Handling every edge case gracefully

- Making features discoverable

- Testing with real, often non-technical users

This attention to detail (perhaps) cannot be generated by AI. It comes from empathy, experience, and a deep concern for craftsmanship.

The renaissance of personal software

I believe we will see a renaissance in personal software development. As the market is flooded with AI-generated MVPs, the products that stand out will be those built by developers who:

- Take pride in their craft

- Care about the small details

- Focus on the complete user experience

- Build for edge cases

- Create a true self-service experience

Ironically, AI tools may actually be contributing to this renaissance. By handling routine coding tasks, they enable developers to focus on what matters most - creating software that actually serves and delights users.

The bottom line

AI isn't making our software better, because software quality has (perhaps) never been primarily limited by coding speed. The hard parts of software development - understanding requirements, designing maintainable systems, dealing with edge cases, ensuring security and performance - still require human judgment.

What AI does is allow us to iterate and experiment faster, and exploring more quickly may lead to better solutions. But only if we maintain our engineering discipline and use AI as a tool, not a substitute for good software practices. Remember: the goal is not to write more code faster. The goal is to build better software. Used wisely, AI can help us do that. But understanding what "better" means and how to achieve it is still up to us.

What's your experience with AI-assisted development? I'd love to hear your stories and insights in the comments.

© Copyright notice

Article copyright belongs to AI Sharing Circle. Please do not reproduce without permission.