2025 AI Agent Deployment Outlook: Breaking Down the Three Core Elements of Planning, Interaction, and Memory

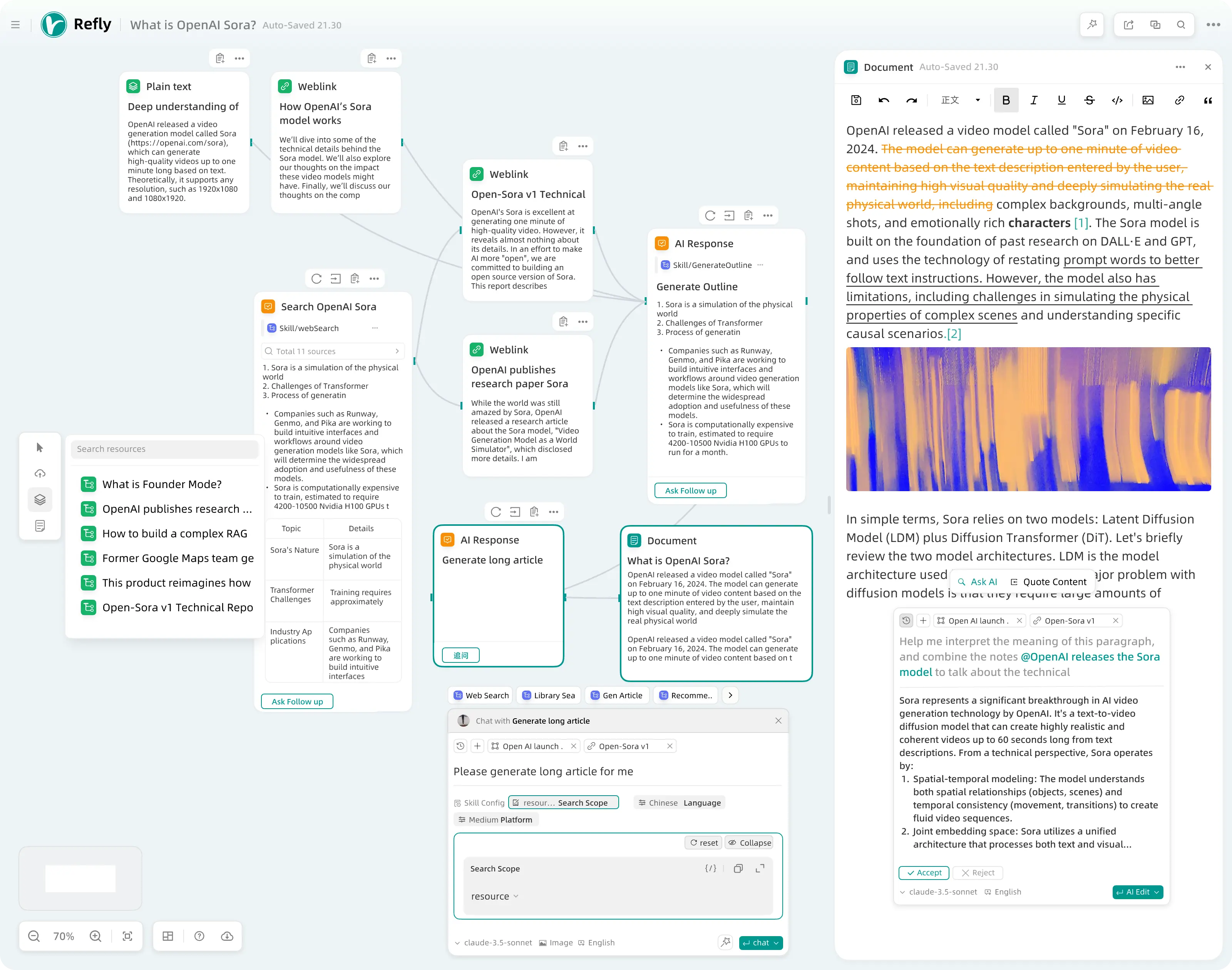

AI Agents are emerging as a much-anticipated paradigm shift in the rapidly evolving AI landscape. AI Share, a leading AI technology review organization, recently took a deep dive into AI Agent trends, drawing on a series of in-depth articles published by the LangChain team to help readers better understand where Agents are headed.

This article integrates the core findings of LangChain's State of AI Agent report, which surveyed more than 1,300 industry practitioners, including developers, product managers, and corporate executives. The findings reveal the state of AI Agent development, and its bottlenecks, in 2024: although 90% of companies are actively planning for and applying AI Agents, the limits of current Agent capabilities mean users can deploy them only in specific processes and scenarios. Respondents care more about improving Agent capabilities, and about the observability and controllability of Agent behavior, than about cost and latency.

In addition, this article compiles in-depth analyses of the key elements of AI Agents from the In the Loop series on LangChain's website, focusing on three core elements: planning, UI/UX interaction innovation, and memory. It analyzes five LLM-native product interaction patterns and draws analogies to three complex human memory mechanisms, aiming to help readers understand the nature and key elements of AI Agents. To stay close to industry practice, the analysis of each element also draws on interviews with AI founders, such as the founder of Reflection AI, and other first-hand examples, which are used to look ahead to the key breakthroughs AI Agents may achieve in 2025.

Based on this analytical framework, AI Share believes that AI Agent applications are poised for explosive growth in 2025 and will gradually move toward a new paradigm of human-computer collaboration. On planning, emerging models such as o3 show strong reflection and reasoning abilities, signaling that model technology is rapidly evolving from the Reasoner stage to the Agent stage. As reasoning keeps improving, whether AI Agents achieve large-scale deployment will depend on innovation in product interaction and memory mechanisms, which is also an important opportunity for startups to differentiate themselves and break through. At the interaction level, the industry has long anticipated an AI-era human-computer interaction revolution comparable to the "GUI moment"; at the memory level, context will become the core keyword for Agent deployment, whether it is personalized context at the individual level or unified context at the enterprise level.

01 State of AI Agent: Current State of AI Agent Development

Agent Adoption Trends: Every Company Is Actively Planning for Agent Deployment

The Agent space is becoming increasingly competitive, and many popular Agent frameworks have emerged over the past year: for example, the ReAct framework, which combines LLM reasoning and acting; multi-agent frameworks for task orchestration; and more controllable frameworks such as LangGraph.
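To make the ReAct idea concrete, here is a minimal sketch of a Reason + Act loop. The `Thought / Action / Observation` transcript mirrors the style of the ReAct paper, but `scripted_llm`, the `calculator` tool, and the `Action: tool[input]` parsing are hypothetical stand-ins, not the API of LangGraph or any other library.

```python
import re
from typing import Callable

# Hypothetical tool registry: tool name -> function taking a string argument.
TOOLS: dict[str, Callable[[str], str]] = {
    "calculator": lambda expr: str(sum(int(x) for x in expr.split("+"))),
}

def scripted_llm(transcript: str) -> str:
    """Stand-in for an LLM: acts once, then answers from the observation."""
    if "Observation:" not in transcript:
        return "Thought: I should add the numbers.\nAction: calculator[2+3]"
    observation = transcript.rsplit("Observation:", 1)[1].strip()
    return f"Thought: I have the result.\nFinal Answer: {observation}"

def react_loop(question: str, llm=scripted_llm, max_steps: int = 5) -> str:
    transcript = f"Question: {question}"
    for _ in range(max_steps):
        reply = llm(transcript)            # Reason: model emits a thought and an action
        transcript += "\n" + reply
        if "Final Answer:" in reply:
            return reply.split("Final Answer:", 1)[1].strip()
        match = re.search(r"Action: (\w+)\[(.*)\]", reply)
        if match is None:
            break                          # model produced neither an action nor an answer
        tool, arg = match.groups()
        observation = TOOLS[tool](arg)     # Act: run the chosen tool
        transcript += f"\nObservation: {observation}"
    return "No answer within step budget"

print(react_loop("What is 2 + 3?"))  # prints 5
```

The `max_steps` cap matters in practice: because the LLM decides when to stop, a loop without a budget could run indefinitely.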

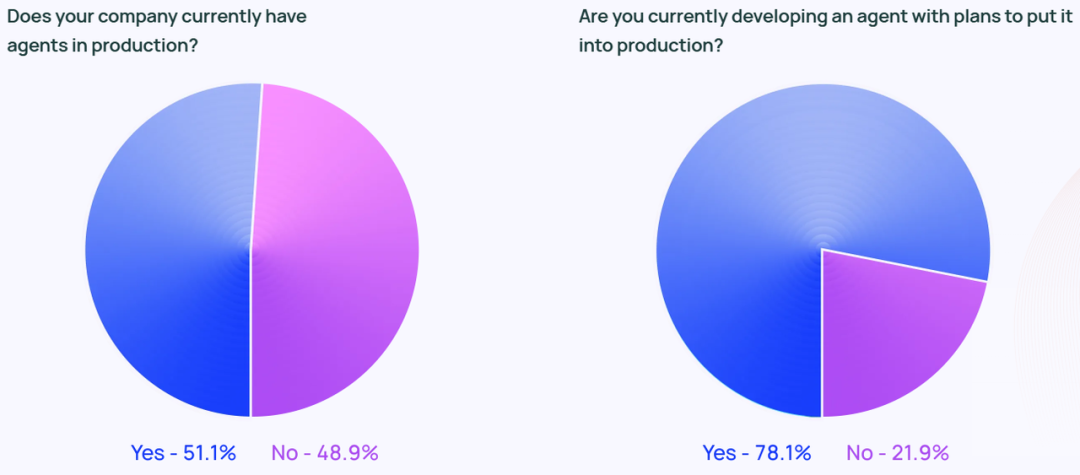

The enthusiasm for Agents goes beyond social media buzz. According to the survey, about 51% of the organizations surveyed already use Agents in production. LangChain also breaks the data down by company size: mid-sized companies with 100 to 2,000 employees are the most active in deploying Agents to production, at 63%.

In addition, 78% of respondents plan to deploy Agents into production in the near future. There is clearly strong interest in AI Agents across industries, but building an Agent that is truly production-ready remains a challenge for many businesses.

While the technology industry is often considered the forerunner of Agent technology, interest in Agents is growing rapidly across all industries. Among respondents at non-technology companies, 90% of organizations have already put Agents into production or plan to, nearly the same share as at technology companies (89%).

Agent Use Cases

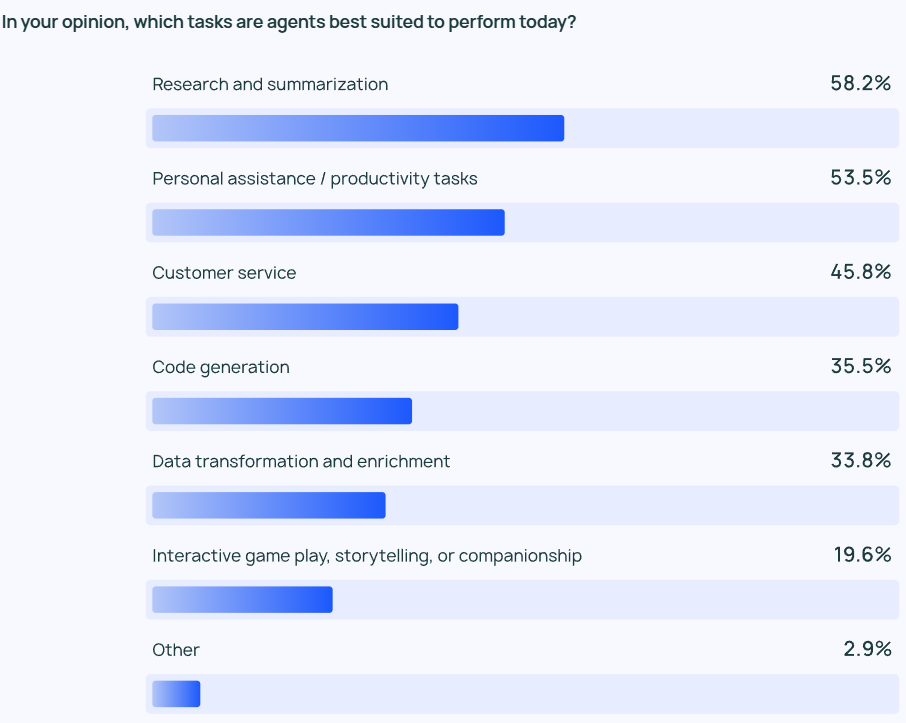

The survey shows that the most common Agent use cases are information research and summarization (58%), followed by streamlining workflows with customized Agents (53.5%).

This reflects the fact that users expect Agent products to help them with tasks that are time-consuming and labor-intensive. Users can rely on AI Agents to quickly extract key information and insights from large amounts of information, rather than having to sift through large amounts of data and conduct data reviews or research and analysis themselves. Similarly, AI Agents can assist with day-to-day tasks, increasing personal productivity and allowing users to focus on more important aspects of their work.

It's not just individual users who need efficiency gains, but organizations and teams as well. Customer service (45.8%) is another major application area: Agents help organizations handle customer inquiries, resolve issues, and shorten response times across teams. The fourth and fifth most popular use cases are lower-level tasks: code and data processing.

Monitoring: Agent applications need to be observable and controllable.

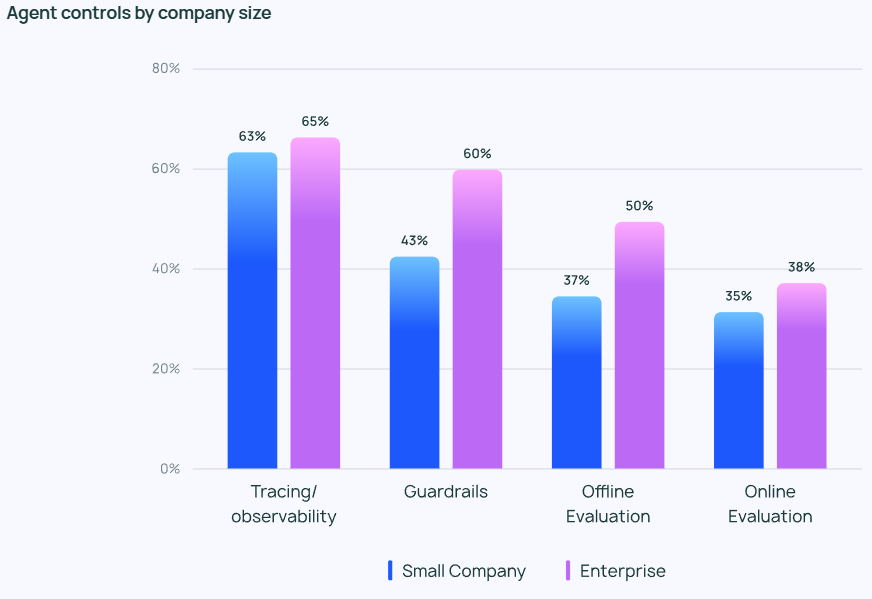

As Agents become more powerful, managing and monitoring their behavior effectively becomes critical. Tracing and observability tools are becoming a must-have in enterprise Agent stacks, helping developers gain insight into Agent behavior and performance. Many companies also use guardrails to keep Agents from deviating from predefined paths.
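Both ideas can be sketched in a few lines, assuming a plain Python agent whose steps are ordinary functions: a decorator records every step into a trace (observability), and a guardrail rejects actions outside a predefined allowlist. `traced`, `TRACE`, and `ALLOWED_ACTIONS` are hypothetical names; this is not the API of LangSmith or any other tracing product.

```python
import functools
import time

TRACE: list[dict] = []                      # in-memory trace; a real system would export spans
ALLOWED_ACTIONS = {"search", "summarize"}   # guardrail: the predefined path

def traced(step_fn):
    """Record the name, inputs, output, and duration of each agent step."""
    @functools.wraps(step_fn)
    def wrapper(*args, **kwargs):
        start = time.perf_counter()
        result = step_fn(*args, **kwargs)
        TRACE.append({
            "step": step_fn.__name__,
            "args": args,
            "result": result,
            "seconds": time.perf_counter() - start,
        })
        return result
    return wrapper

@traced
def choose_action(query: str) -> str:
    # Stand-in for an LLM decision; a real model might propose anything.
    return "delete_records" if "clean up" in query else "search"

def guarded_step(query: str) -> str:
    action = choose_action(query)
    if action not in ALLOWED_ACTIONS:
        return f"blocked: {action}"          # deviation from the allowed path
    return f"ran: {action}"

print(guarded_step("find papers"))       # ran: search
print(guarded_step("clean up the db"))   # blocked: delete_records
```

Note that the blocked decision still appears in `TRACE`, which is exactly what a developer needs when investigating why an Agent tried to leave its intended path.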

When testing LLM applications, offline evaluation (39.8%) was used more often than online evaluation (32.5%), reflecting the challenges that remain in monitoring LLMs in real time. In open-ended responses to LangChain's survey, many companies said they also have human experts manually check or evaluate Agent responses as an additional safety layer.
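Offline evaluation generally means running the Agent over a fixed dataset before release and scoring its outputs. A minimal sketch, assuming a hypothetical `agent` callable and a simple case-insensitive exact-match metric; real evaluations often use richer scorers such as LLM-as-judge or human review.

```python
from typing import Callable

def offline_eval(agent: Callable[[str], str], dataset: list[tuple[str, str]]) -> dict:
    """Score an agent against (input, expected) pairs with exact match."""
    if not dataset:
        raise ValueError("dataset must not be empty")
    failures = []
    for question, expected in dataset:
        answer = agent(question)
        if answer.strip().lower() != expected.strip().lower():
            failures.append((question, answer, expected))
    passed = len(dataset) - len(failures)
    return {"accuracy": passed / len(dataset), "failures": failures}

# Toy agent and dataset, for illustration only.
answers = {"capital of France?": "Paris", "2 + 2?": "5"}
dataset = [("capital of France?", "paris"), ("2 + 2?", "4")]
report = offline_eval(lambda q: answers.get(q, ""), dataset)
print(report["accuracy"])   # 0.5
```

The `failures` list is a natural hand-off point for the manual expert review that respondents described.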

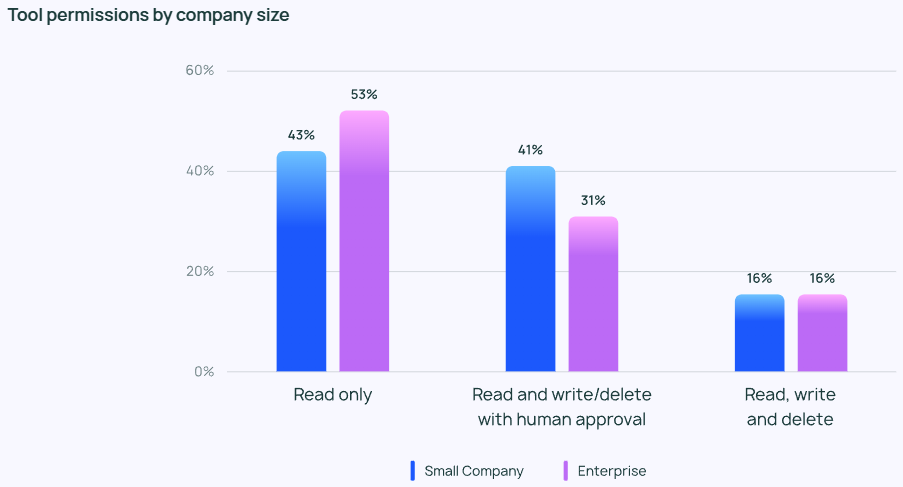

Despite the high level of enthusiasm for Agents, people are generally conservative when it comes to Agent privilege control. Few respondents allow agents to read, write, and delete freely. Instead, most teams grant agents "read-only" permissions or require manual approval when agents perform more risky operations such as writing or deleting.
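The two common policies described here, read-only access and manual approval for risky operations, can be sketched as a small permission gate. The `Op` enum, `make_executor`, and the `approve` callback are hypothetical; in a real system `approve` would wait on a human reviewer rather than evaluate a lambda.

```python
from enum import Enum
from typing import Callable

class Op(Enum):
    READ = "read"
    WRITE = "write"
    DELETE = "delete"

RISKY = {Op.WRITE, Op.DELETE}

def make_executor(approve: Callable[[Op, str], bool], read_only: bool = False):
    """Return an executor that enforces the permission policy before acting."""
    def execute(op: Op, target: str) -> str:
        if op in RISKY:
            if read_only:
                return f"denied: {op.value} {target} (read-only agent)"
            if not approve(op, target):          # human-in-the-loop approval
                return f"rejected by reviewer: {op.value} {target}"
        return f"done: {op.value} {target}"
    return execute

# A reviewer who approves writes but never deletes (illustrative policy).
reviewer = lambda op, target: op is Op.WRITE
execute = make_executor(approve=reviewer)
print(execute(Op.READ, "orders.csv"))    # done: read orders.csv
print(execute(Op.DELETE, "orders.csv"))  # rejected by reviewer: delete orders.csv
```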

Attention to Agent controls varies with company size. Larger organizations (more than 2,000 employees) tend to be more cautious, relying heavily on read-only permissions to minimize unnecessary risk. They also tend to combine guardrails with offline evaluations to avoid any issue that could negatively affect customers.

Smaller companies and startups (with fewer than 100 employees), meanwhile, are more interested in tracking agents to gain insight into the actual performance of their agent applications (rather than other controls). LangChain's research shows that smaller companies tend to analyze data to understand results, while larger organizations are more interested in building a comprehensive system of controls.

Barriers and Challenges to Putting Agent into Production

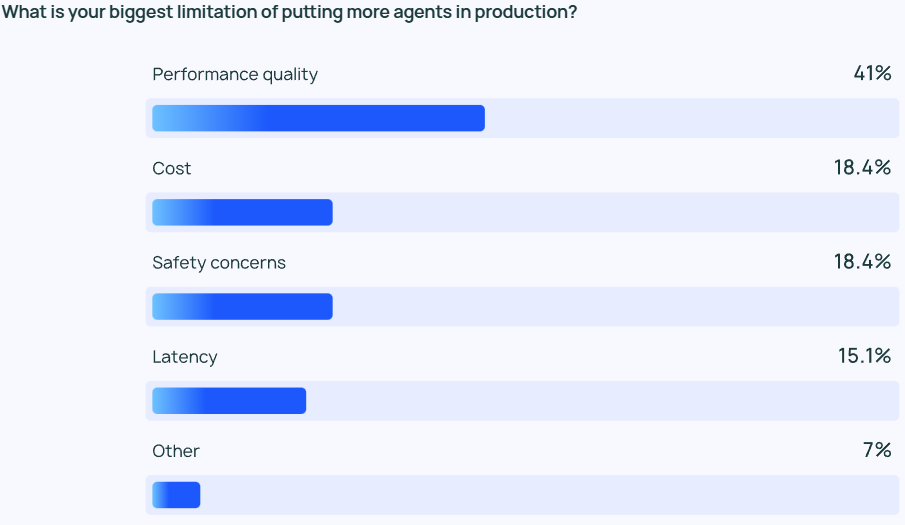

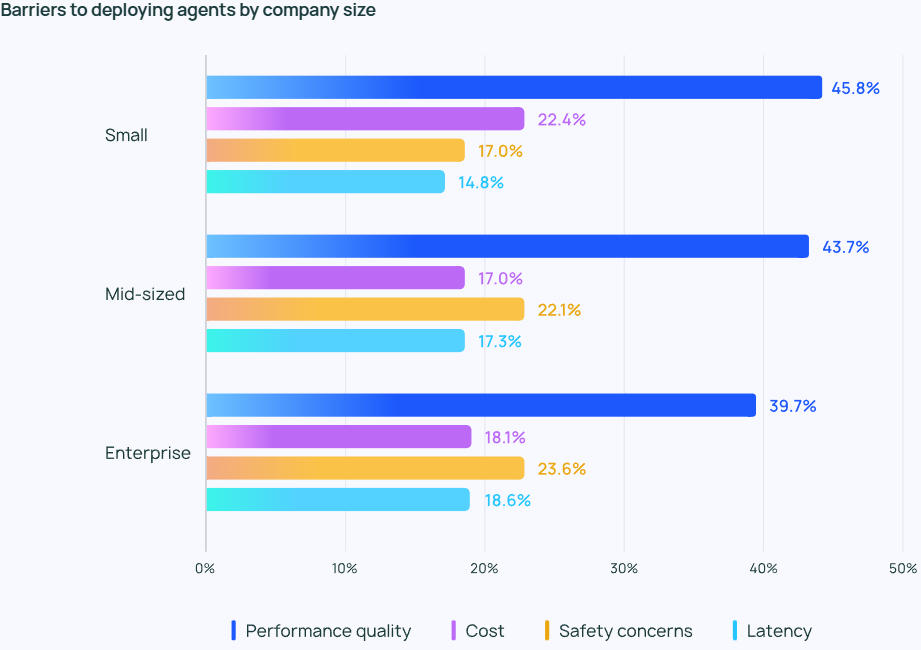

Ensuring high-quality LLM output is still a very challenging task. Agent responses must be not only highly accurate but also stylistically appropriate. This is the top concern for Agent developers, more than twice as important as other factors such as cost and security.

LLM Agents are built on probabilistic models, so their output is somewhat unpredictable. This unpredictability raises the chance of errors and makes it hard for development teams to ensure that an Agent consistently delivers accurate, context-appropriate responses.

For smaller companies, performance quality matters especially: 45.8% of small companies rank it as their top concern, compared with 22.4% for cost (the second-most-cited concern). This gap shows how much reliable, high-quality performance matters when organizations move Agents from development into production.

Security is also critical for large organizations that need to adhere to strict compliance requirements and handle customer data with care.

In addition to quality challenges, responses to LangChain's open-ended questionnaire revealed that many organizations have reservations about investing in agent development and testing on an ongoing basis. Two prominent obstacles were commonly cited: first, the development of an agent requires a great deal of specialized knowledge and the need to continuously track cutting-edge technologies; and second, the time-cost of developing and deploying an agent is high, but there is still uncertainty about its ability to operate reliably and deliver the expected benefits.

Other emerging themes

In their open-ended responses, respondents praised AI Agents for the following capabilities:

- Managing multi-step tasks. The AI Agent is capable of deeper reasoning and context management, allowing it to perform more complex tasks.

- Automation of repetitive tasks. AI Agents are still seen as a key tool for automation, freeing users to focus on more creative work.

- Task planning and collaboration. Better task planning ensures that the right Agent works on the right problem at the right time, which is especially important in multi-agent systems.

- Human-like reasoning. Unlike traditional LLMs, an AI Agent can trace its decision-making process, including reviewing and revising past decisions based on new information.

In addition, respondents identified two key expectations for the future of AI Agents:

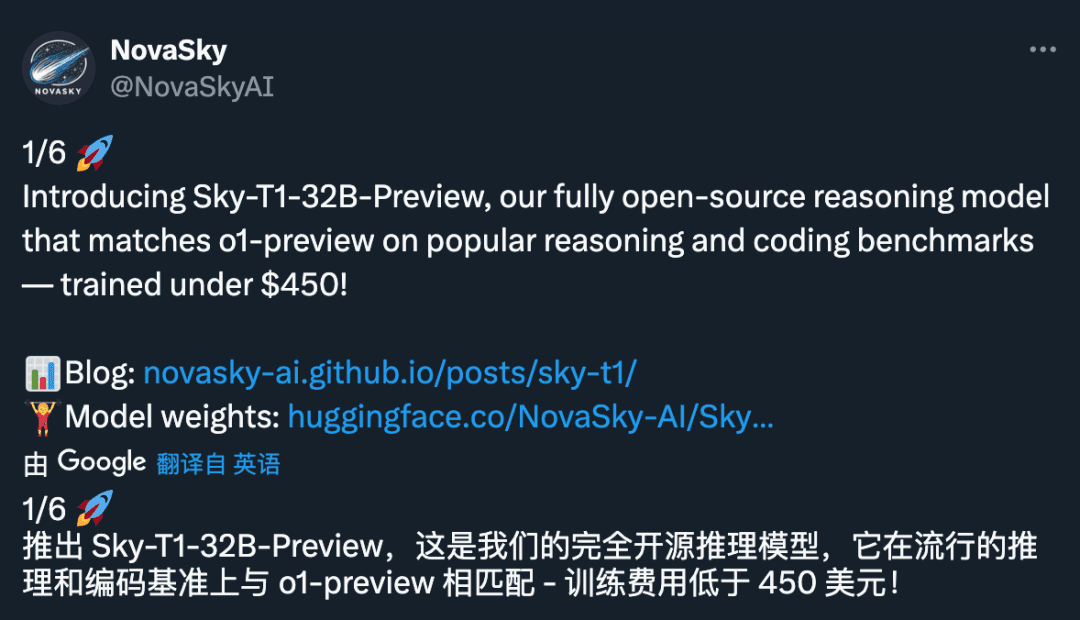

- Expectations for open-source AI Agents. Open-source AI Agents have drawn a lot of interest, and many believe collective intelligence can accelerate innovation in Agent technology.

- Expectations for more powerful models. Many expect the next leap for AI Agents to come from larger, more powerful models, which will let Agents handle more complex tasks with greater efficiency and autonomy.

In the open-ended responses, many respondents also named the biggest challenge in Agent development: understanding Agent behavior. Some engineers said they struggle to explain an AI Agent's capabilities and behavior when presenting it to stakeholders in their company. Visualization plug-ins can help explain Agent behavior to some extent, but in most cases the LLM remains a "black box", and the extra burden of interpretability still falls on the engineering team.

02 Analysis of Core Elements of AI Agent

Before releasing the State of AI Agent report, the LangChain team had already explored the Agent space with LangGraph, the framework it developed, and published a number of articles analyzing the key components of AI Agents on its In the Loop blog. This article compiles the core content of the In the Loop series and analyzes the key elements of Agents in depth.

To understand the core elements of an Agent, we first need a clear definition of an agentic system. LangChain founder Harrison Chase defines an AI Agent as follows:

An AI agent is a system that uses an LLM to decide the control flow of an application.

On how Agents are implemented, the article introduces the concept of cognitive architecture: how an Agent thinks, that is, how the system orchestrates the code and prompts that drive the LLM. Understanding cognitive architecture gives insight into how an Agent operates:

- Cognitive. The Agent uses the LLM for semantic reasoning to decide how to write code or how to prompt the LLM.

- Architecture. Agent systems still involve a great deal of engineering practices similar to traditional system architectures.

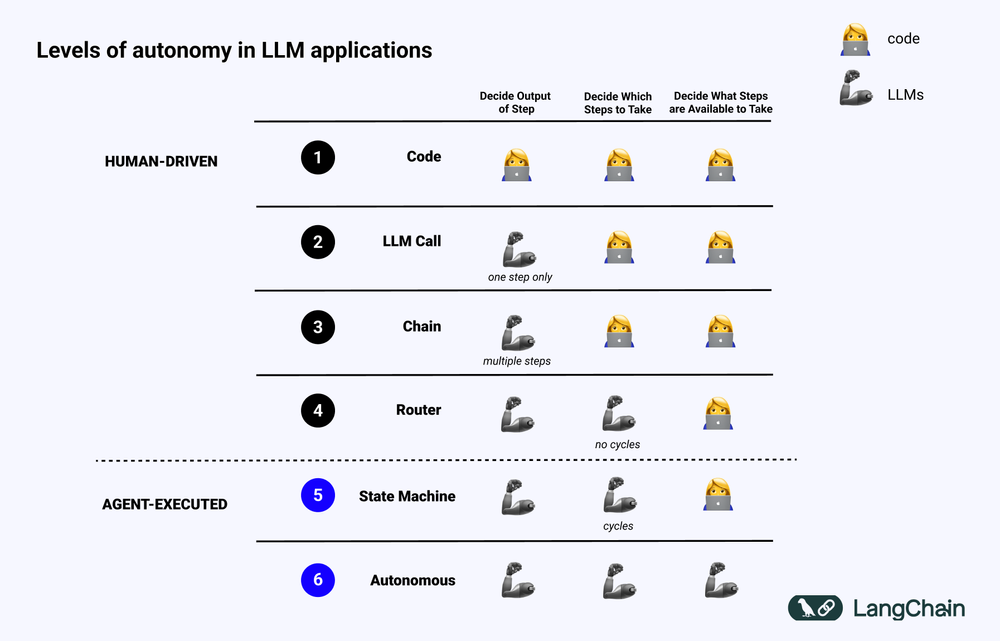

The diagram above shows examples of cognitive architectures at different levels:

- Standard software code (Code). All logic is hard-coded, and inputs and outputs are fixed directly in the source code. This does not constitute a cognitive architecture, because it has no cognitive component.

- LLM Call. With the exception of a small amount of data preprocessing, most of an application's functionality relies on a single LLM call. A simple Chatbot usually falls into this category.

- Chain. A chain is a series of LLM calls that breaks a complex problem into steps and solves them one by one with different LLM calls. Complex RAG (Retrieval-Augmented Generation) systems fall into this category: for example, the first LLM call produces a search query, and the second generates the answer.

- Router. In the three systems above, all the steps the program will perform are known in advance. In a Router architecture, however, the LLM can decide on its own which LLMs to invoke and which steps to take, which increases the randomness and unpredictability of the system.

- State Machine. Combining LLM routing with a loop further increases the system's unpredictability: because the combination creates a loop, the system can in theory invoke the LLM an unlimited number of times.

- Agentic system. Also often called an "Autonomous Agent". A State Machine still places some restrictions on which operations the system can perform and what flow follows each operation. An Autonomous Agent lifts these restrictions: the LLM has full autonomy to decide which steps to take and how to orchestrate different LLM calls, which can be achieved through different prompts, tools, or code.
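The progression from chain to router to state machine comes down to who decides the control flow. A minimal sketch: `fake_llm` is a deterministic stand-in for a model, and all function names are illustrative. In the chain, the code fixes every call; in the router, one LLM choice picks a branch; in the state machine, repeated LLM choices inside a loop decide when to stop. A fully autonomous Agent would additionally let the LLM choose tools and prompts, which is not shown.

```python
from typing import Callable

LLM = Callable[[str], str]   # hypothetical: prompt in, text out

def chain(llm: LLM, question: str) -> str:
    """Chain: fixed sequence of calls, e.g. query rewrite then answer (RAG-style)."""
    query = llm(f"rewrite as search query: {question}")
    return llm(f"answer using results for: {query}")

def router(llm: LLM, question: str, routes: dict[str, Callable[[str], str]]) -> str:
    """Router: the LLM picks which branch runs, but each branch runs once."""
    choice = llm(f"pick one of {sorted(routes)} for: {question}")
    return routes[choice](question)

def state_machine(llm: LLM, question: str, max_turns: int = 10) -> list[str]:
    """State machine: LLM routing inside a loop, so the number of calls is not fixed."""
    history = []
    state = "plan"
    while state != "done" and len(history) < max_turns:
        history.append(state)
        state = llm(f"current state {state}; next state for: {question}")
    return history

def fake_llm(prompt: str) -> str:
    # Deterministic stand-in so the control flow is visible without a model.
    if prompt.startswith("pick one of"):
        return "math" if "2+2" in prompt else "chat"
    if prompt.startswith("current state plan"):
        return "act"
    if prompt.startswith("current state act"):
        return "done"
    return prompt.upper()

routes = {"math": lambda q: "4", "chat": lambda q: "hello"}
print(router(fake_llm, "what is 2+2", routes))   # 4
print(state_machine(fake_llm, "book a trip"))    # ['plan', 'act']
```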

In short, the more agentic a system is, the larger the role the LLM plays in determining how the system behaves.

Key element of the Agent: planning capability

Agent reliability is a pain point in current practice. Many enterprises have built LLM-based Agents but report that their planning and reasoning capabilities are insufficient. So what do planning and reasoning mean for an Agent?

An Agent's planning and reasoning capability refers to the LLM's ability to think about and decide which actions to take. This involves both short-term and long-term reasoning: the LLM needs to evaluate all available information and then decide what steps it must take to reach its ultimate goal, and what the most important first step is right now.

In practice, developers typically use function calling to let the LLM choose which action to perform. OpenAI first added function calling to its API in June 2023. With function calling, users provide JSON structures describing different functions, and the LLM matches one (or more) of them.
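A minimal sketch of the application side of function calling. The tool definition follows the JSON Schema style used by OpenAI-style function-calling APIs; the model's reply is hardcoded here (shaped like a tool-call entry with a `name` and JSON-encoded `arguments`) rather than obtained from a real API call, and `get_weather` is hypothetical.

```python
import json

# Tool definitions in the JSON Schema style used by function-calling APIs.
TOOLS = [
    {
        "type": "function",
        "function": {
            "name": "get_weather",
            "description": "Get current weather for a city",
            "parameters": {
                "type": "object",
                "properties": {"city": {"type": "string"}},
                "required": ["city"],
            },
        },
    },
]

def get_weather(city: str) -> str:
    return f"Sunny in {city}"  # hypothetical implementation

REGISTRY = {"get_weather": get_weather}

def dispatch(tool_call: dict) -> str:
    """Run the function the model selected, with its JSON-encoded arguments."""
    name = tool_call["function"]["name"]
    if name not in REGISTRY:
        raise ValueError(f"model requested unknown tool: {name}")
    args = json.loads(tool_call["function"]["arguments"])
    return REGISTRY[name](**args)

# Hardcoded stand-in for what a model might return after seeing TOOLS.
model_tool_call = {"function": {"name": "get_weather", "arguments": '{"city": "Paris"}'}}
print(dispatch(model_tool_call))  # Sunny in Paris
```

The model only *selects* an action and fills in arguments; executing it, and rejecting unknown tools, remains the application's job.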

To accomplish a complex task, an Agent system usually has to perform a series of operations in sequence. Long-term planning and reasoning is a very complex challenge for LLMs: first, the LLM must consider a long-term plan and then break it down into the short-term actions needed right now; second, as the Agent performs more operations, their results are fed back to the LLM, so the context window keeps growing, which can "distract" the LLM and reduce performance.
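One common mitigation for a growing context is to keep the original goal plus only the most recent observations that fit a budget. A minimal sketch; the message format and the word-count approximation of tokens are assumptions for illustration (real systems use the model's tokenizer), and summarizing older steps is an alternative not shown here.

```python
def trim_history(messages: list[dict], budget: int) -> list[dict]:
    """Keep the first (goal) message plus the newest messages that fit the budget.

    Token counts are approximated by whitespace-split word counts.
    """
    def cost(msg: dict) -> int:
        return len(msg["content"].split())

    head, tail = messages[0], messages[1:]
    remaining = budget - cost(head)
    kept = []
    for msg in reversed(tail):          # walk from newest to oldest
        if cost(msg) > remaining:
            break
        kept.append(msg)
        remaining -= cost(msg)
    return [head] + list(reversed(kept))

history = [{"role": "system", "content": "Goal: book a flight"}]
history += [{"role": "tool", "content": f"step {i} output"} for i in range(1, 6)]
trimmed = trim_history(history, budget=10)
print([m["content"] for m in trimmed])
# ['Goal: book a flight', 'step 4 output', 'step 5 output']
```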

The most straightforward way to improve planning capabilities is to ensure that LLMs have all the information they need to engage in sound reasoning and planning. While this sounds simple, the reality is that the information delivered to the LLM is often insufficient to enable the LLM to make sound decisions. Adding a search step or optimizing the Prompt may be a simple improvement.
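Adding a search step can be as simple as retrieving relevant notes and placing them in the prompt before asking for a plan. A minimal sketch with a toy word-overlap retriever; `retrieve`, `build_planning_prompt`, and the prompt wording are hypothetical, and a production system would more likely use embeddings or a search API.

```python
def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Rank documents by how many query words they share (a toy retriever)."""
    q = set(query.lower().split())
    scored = sorted(docs, key=lambda d: len(q & set(d.lower().split())), reverse=True)
    return scored[:k]

def build_planning_prompt(goal: str, docs: list[str]) -> str:
    """Put retrieved context in front of the planning question."""
    context = "\n".join(f"- {d}" for d in retrieve(goal, docs))
    return f"Relevant information:\n{context}\n\nGoal: {goal}\nWhat is the first step?"

docs = [
    "refunds require an order id",
    "shipping takes 3 days",
    "refunds are processed within 5 days",
]
print(build_planning_prompt("refunds for order", docs))
```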

Going a step further, one can adjust the application's cognitive architecture. There are two main classes of cognitive architecture for improving an Agent's reasoning: generic cognitive architectures and domain-specific cognitive architectures.

1. Generic cognitive architecture

Generic cognitive architectures can be applied to many different task scenarios. Academia has proposed two representative generic architectures: the "Plan and Solve" architecture and the Reflexion architecture.

"Plan and Solve" architecture It was first presented in the paper Plan-and-Solve Prompting: Improving Zero-Shot Chain-of-Thought Reasoning by Large Language Models. In this architecture, an agent first makes a detailed plan and then executes each step of the plan step by step.

The Reflexion architecture was presented in the paper Reflexion: Language Agents with Verbal Reinforcement Learning. In this architecture, after performing a task the Agent adds an explicit "reflection" step to assess whether it performed the task correctly. We will not go into the details of these two architectures here; interested readers can refer to the two original papers.
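The core Reflexion loop (try, evaluate, write a verbal reflection, retry with that reflection in memory) can be sketched as follows. In the paper, the actor and self-reflection are LLMs and the evaluator is task-specific; the lambdas here are hypothetical stand-ins.

```python
from typing import Callable, Optional

def reflexion(task: str,
              actor: Callable[[str, list[str]], str],
              evaluator: Callable[[str], Optional[str]],
              reflector: Callable[[str, str], str],
              max_trials: int = 3) -> tuple[str, list[str]]:
    """Try, evaluate, and on failure store a verbal reflection for the next attempt."""
    reflections: list[str] = []          # episodic memory of lessons learned
    attempt = ""
    for _ in range(max_trials):
        attempt = actor(task, reflections)
        error = evaluator(attempt)       # None means the attempt passed
        if error is None:
            break
        reflections.append(reflector(attempt, error))
    return attempt, reflections

# Hypothetical stand-ins: the actor improves once it has a reflection to read.
actor = lambda task, notes: "return x * 2" if notes else "return x + 1"
evaluator = lambda code: None if code == "return x * 2" else "f(3) should be 6"
reflector = lambda code, err: f"'{code}' failed: {err}; double x instead"
final, notes = reflexion("double x", actor, evaluator, reflector)
print(final)        # return x * 2
print(len(notes))   # 1
```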

Although the "Plan and Solve" and Reflexion architectures show some theoretical promise, they are often too general to be useful for Agents in production. (Translator's note: when the original article was written, the o1 family of models had not yet been released.)

2. Domain-specific cognitive architecture

In contrast to generic cognitive architectures, many Agent systems use domain-specific cognitive architectures. These usually show up as a domain-specific classification or planning step and a domain-specific validation step. The planning and reflection ideas from generic architectures can be borrowed here, but they usually need to be adapted and optimized for the specific domain.

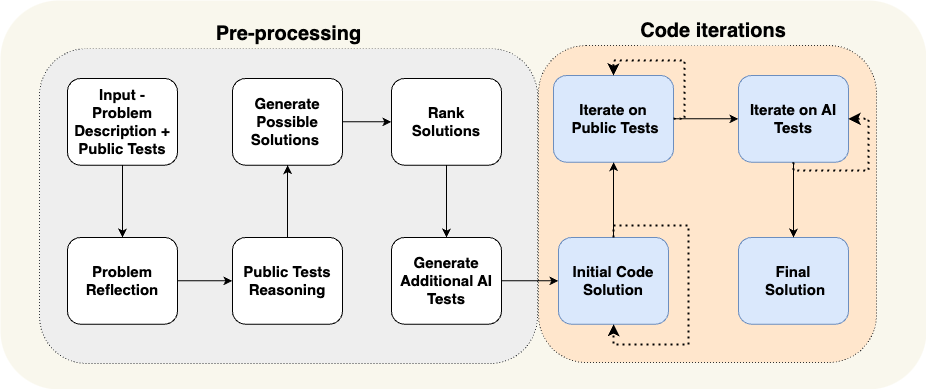

The AlphaCodium paper is a prime example of a domain-specific cognitive architecture. Using what they call "Flow Engineering" (essentially another way of describing cognitive architecture), the AlphaCodium team achieved state-of-the-art performance at the time.

As shown in the figure above, the AlphaCodium Agent's flow is tailored to the programming problems it is trying to solve. It tells the Agent in detail what to do at each step: first propose test cases, then propose a solution, then iterate on more test cases, and so on. This cognitive architecture is highly domain-specific and hard to carry over directly to other domains.
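A heavily simplified, test-driven loop in the spirit of this flow: generate extra tests, propose a solution, and iterate against test feedback. This is not AlphaCodium's actual pipeline (which has more stages, such as problem reflection and solution ranking); `propose_tests` and `propose_solution` are hypothetical stand-ins for LLM calls, and problems are reduced to integer functions for brevity.

```python
from typing import Callable, Optional

Test = tuple[int, int]  # (input, expected output)

def run_tests(fn: Callable[[int], int], tests: list[Test]) -> list[Test]:
    """Return the tests that fail."""
    return [(x, y) for x, y in tests if fn(x) != y]

def flow(public_tests: list[Test],
         propose_tests: Callable[[], list[Test]],
         propose_solution: Callable[[list[Test]], Callable[[int], int]],
         max_iters: int = 3) -> Optional[Callable[[int], int]]:
    """Add generated tests, then iterate solutions against all tests."""
    tests = public_tests + propose_tests()    # step 1: extra test cases
    failing: list[Test] = []
    for _ in range(max_iters):
        solution = propose_solution(failing)  # step 2: propose or repair a solution
        failing = run_tests(solution, tests)  # step 3: iterate on test feedback
        if not failing:
            return solution
    return None

# Toy problem: square a number. The first guess doubles instead and gets repaired.
propose_tests = lambda: [(0, 0), (-2, 4)]
propose_solution = lambda failing: (lambda x: x * x) if failing else (lambda x: x * 2)
solution = flow([(2, 4), (3, 9)], propose_tests, propose_solution)
print(solution(5))  # 25
```

The domain specificity is visible in the code: the loop assumes solutions are executable and tests are cheap to run, which holds for programming but not for most other domains.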

Case Study: Reflection AI Founder Laskin's Vision for the Future of Agents

In Sequoia Capital's interview with Misha Laskin, founder of Reflection AI, Laskin shared his vision for the future of Agents: Reflection AI aims to build high-performance Agent models by combining the search capabilities of reinforcement learning (RL) with LLMs. Laskin and co-founder Ioannis Antonoglou (who led work on AlphaGo, AlphaZero, and Gemini RLHF) are focused on training models designed specifically for agentic workflows. The core ideas from the interview are summarized below:

- Depth is the key missing ingredient in an AI Agent. While current language models excel in terms of breadth of knowledge, they lack the depth needed to reliably accomplish complex tasks. Misha Laskin argues that solving the "depth problem" is critical to creating truly capable AI Agents. "Capability" in this context refers to an agent's ability to plan and execute complex tasks in multiple steps.

- Combining Learn and Search is the key to superhuman performance. Drawing on the success of AlphaGo, Misha Laskin emphasized that the most profound idea in AI is the effective combination of **Learn** (relying on LLM) and **Search** (finding the optimal path). This approach is critical to creating agents that outperform humans on complex tasks.
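As a rough illustration of "learning plus search", here is a generic best-first search in which a value function (the learned component; in AlphaGo, a trained network) decides which state to expand next. The toy value function is hand-written, and this simple best-first search stands in for the Monte Carlo tree search AlphaGo actually used; it is not Reflection AI's method.

```python
import heapq
from typing import Callable, Iterable, Optional

def best_first_search(start: str,
                      expand: Callable[[str], Iterable[str]],
                      value: Callable[[str], float],
                      is_goal: Callable[[str], bool],
                      budget: int = 100) -> Optional[str]:
    """Expand the most promising state first, as judged by the value function."""
    frontier = [(-value(start), start)]   # max-heap via negated scores
    seen = {start}
    while frontier and budget > 0:
        budget -= 1
        _, state = heapq.heappop(frontier)
        if is_goal(state):
            return state
        for nxt in expand(state):
            if nxt not in seen:
                seen.add(nxt)
                heapq.heappush(frontier, (-value(nxt), nxt))
    return None

# Toy task: build the string "abc" one character at a time.
expand = lambda s: [s + ch for ch in "abc"] if len(s) < 3 else []
value = lambda s: sum(1 for a, b in zip(s, "abc") if a == b)   # stand-in for a learned scorer
print(best_first_search("", expand, value, lambda s: s == "abc"))  # abc
```

A better value function means fewer expansions before reaching the goal, which is the sense in which learning makes search tractable.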

- Post-training and reward modeling pose great challenges. Unlike games, which have explicit rewards, real-world tasks usually lack explicit reward signals. Developing reliable reward models is the key challenge in building reliable AI Agents.

- Universal Agents may be closer than we think. Laskin predicts we may be just three years away from "digital AGI" (digital artificial general intelligence). This accelerated timeline underscores the urgency of rapidly developing Agent capabilities while also addressing safety and reliability issues.

- The path to Universal Agents requires a methodical approach. Reflection AI focuses on expanding what Agents can do, starting with specific environments such as browsers, code editors, and computer operating systems. Their ultimate goal is to develop universal Agents that can perform tasks across many different domains rather than being limited to specific tasks.

UI/UX Interaction Innovation

Human-computer interaction (HCI) will be a key research direction in AI in the coming years. Agent systems differ greatly from traditional computer systems, and their new characteristics, such as latency, unreliability, and natural language interfaces, pose new challenges. New UI/UX (user interface/user experience) paradigms will therefore emerge. Although Agent systems are still early in their development, several UX paradigms are already emerging. Each is explored below.

1. Conversational interaction (Chat UI)

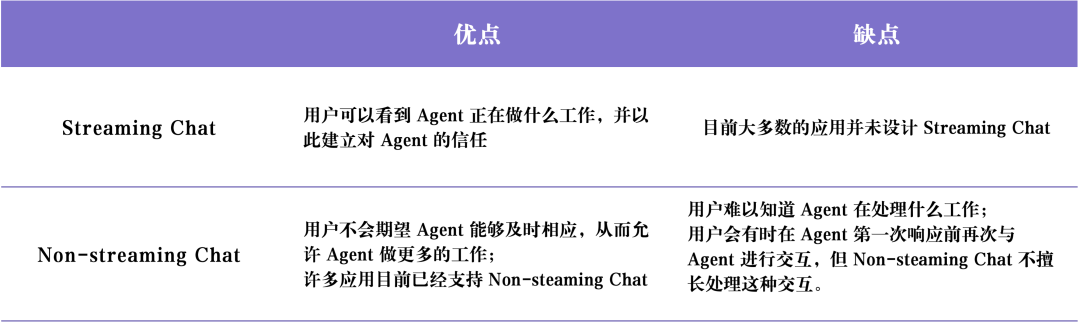

Conversational interaction (Chat UI) usually comes in two main types: streaming chat and non-streaming chat.

Streaming chat is the most common UX paradigm today. It is essentially a chatbot that streams the Agent's thoughts and actions back to the user step by step, in a format similar to a human conversation. ChatGPT is a typical example. This mode looks simple but is very effective, for three reasons: first, users can talk to the LLM directly in natural language, with almost no communication barrier; second, LLMs usually take time to complete tasks, and streaming gives users real-time information about the progress of background work; third, LLMs sometimes make mistakes, and the chat interface gives users a friendly, natural way to correct and guide them. Users have become very accustomed to asking follow-up questions and iterating in chat, gradually clarifying requirements and solving problems.
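The mechanism behind streaming chat is simply emitting output incrementally instead of all at once. A minimal sketch using a Python generator; `stream_agent` and its status messages are hypothetical, and a real app would forward each chunk to the client over a transport such as server-sent events or a WebSocket.

```python
import time
from typing import Iterator

def stream_agent(task: str, delay: float = 0.0) -> Iterator[str]:
    """Yield progress updates, then answer chunks, as soon as each is available."""
    for step in ("Searching sources...", "Reading 3 documents...", "Drafting answer..."):
        time.sleep(delay)            # stands in for real work
        yield f"[status] {step}"
    for token in f"Here is a summary of {task}.".split():
        yield token

for chunk in stream_agent("the Q3 report"):
    print(chunk)  # a chat UI would append each chunk as it arrives
```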

However, streaming chat also has limitations. First, it is still a relatively new user experience and has not been widely adopted on the chat platforms we commonly use (e.g., iMessage, Facebook Messenger, Slack); second, for long-running tasks the experience falls short, because users may have to stay in the chat interface for a long time waiting for the Agent to finish; third, streaming chat usually has to be triggered by a human user, which means a lot of human involvement (human-in-the-loop) is still needed while the Agent performs the task.

The main difference between non-streaming chat and streaming chat is that the Agent's responses are returned in batches. The LLM works silently in the background, so users don't have to wait impatiently for an immediate response. This means non-streaming chat may be easier to integrate into existing workflows: people are used to texting their friends, so why not get used to "texting" with AI? Non-streaming chat makes interacting with more complex Agent systems more natural. Because such systems often run for a long time, users may become frustrated if they expect an immediate response; non-streaming chat removes some of that expectation, making it easier to carry out more complex tasks.
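A minimal sketch of the non-streaming pattern using `asyncio`: tasks run in the background and each finished result is delivered whole, like a text message, rather than token by token. `background_agent` and the in-memory `inbox` are hypothetical; a real product would push results through a messaging channel.

```python
import asyncio

async def background_agent(task: str, seconds: float) -> str:
    await asyncio.sleep(seconds)          # long-running work happens silently
    return f"Done: {task}"

async def main() -> list[str]:
    inbox: list[str] = []
    # Kick off several tasks; the user is free to do other things meanwhile.
    jobs = [asyncio.create_task(background_agent(t, 0.01 * i))
            for i, t in enumerate(["triage inbox", "draft report"], start=1)]
    for finished in asyncio.as_completed(jobs):
        inbox.append(await finished)      # deliver each complete result as one message
    return inbox

print(asyncio.run(main()))
```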

The following table summarizes the advantages and disadvantages of streaming and non-streaming chat.

2. Ambient UX (background agents)

With a Chat UI, as discussed above, users actively send messages to the AI. But if the Agent is running silently in the background, how do we interact with it?

To realize the full potential of Agent systems, the HCI paradigm needs to shift toward letting AI run in the background (Ambient UX). When tasks are processed in the background, users usually tolerate longer completion times because their expectations around latency are lower. This gives the Agent more time to complete more work and, typically, to reason more carefully and thoroughly than in a Chat UX.

Running Agents in the background (Ambient UX) also helps extend the capabilities of human users themselves. Chat interfaces typically limit users to one task at a time, whereas in a background environment multiple Agents can work on multiple tasks at the same time.

The key to getting an Agent to work reliably in the background is to build user trust in the Agent. How do you build that trust? One straightforward idea: show the user exactly what the Agent is doing. Showing all the steps the Agent is performing in real time allows the user to observe what is happening. While these steps may not be as immediate as streaming a response, they should allow the user to click on them at any time and see the progress of the Agent's execution. Further, it is important to not only allow the user to see what the Agent is doing, but also to allow the user to correct the Agent's errors. For example, if the user discovers that the Agent made a bad decision in step 4 (of 10), the user has the option to go back to step 4 and correct the Agent's behavior in some way.

This approach shifts the user's interaction with the Agent from "human-in-the-loop" to "human-on-the-loop". The on-the-loop model requires the system to show the user every intermediate step the Agent performs, let the user pause the workflow mid-task and provide feedback, and then let the Agent continue with subsequent steps based on that feedback.
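Going back to step 4 and correcting it requires a snapshot of the Agent's state after every step. A minimal sketch with a hypothetical `Run` class; frameworks such as LangGraph provide persistence features for this purpose, but the class below is not their API.

```python
from dataclasses import dataclass, field

@dataclass
class Run:
    """Keeps a checkpoint of agent state after every step so a user can rewind."""
    checkpoints: list[dict] = field(default_factory=lambda: [{}])

    def step(self, key: str, value: str) -> None:
        state = dict(self.checkpoints[-1])   # copy the latest state, never mutate history
        state[key] = value
        self.checkpoints.append(state)

    def rewind(self, step_index: int) -> None:
        """Drop every checkpoint after the given step (1-based)."""
        self.checkpoints = self.checkpoints[: step_index + 1]

    @property
    def state(self) -> dict:
        return self.checkpoints[-1]

run = Run()
run.step("lang", "python")
run.step("db", "mongo")        # the user spots a bad decision here...
run.step("tests", "none")
run.rewind(1)                  # ...rewinds to just after step 1...
run.step("db", "postgres")     # ...and the Agent continues with the correction
print(run.state)               # {'lang': 'python', 'db': 'postgres'}
```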

The AI software engineer Devin is a representative example of this kind of UX. Devin often runs for a long time, but users can clearly see every step the Agent has executed, roll back to the development state at a specific point in time, and issue corrections from there. Running in the background does not mean an Agent must perform tasks fully autonomously. Sometimes an Agent may not know what to do next or how to answer a user's question; in that case, it needs to proactively get the human user's attention and ask for help.

An email assistant Agent is another Ambient UX use case. LangChain founder Harrison Chase is building one. While the Agent can reply to simple emails automatically, some tasks that don't lend themselves to automation require Harrison's involvement, such as reviewing complex LangChain bug reports or deciding whether to attend a meeting. In these cases, the Agent needs an efficient way to tell Harrison that it needs human input to continue. Note that the Agent does not ask Harrison for the final answer directly; instead it asks for his input on specific points, then uses that feedback to write a high-quality email or send a calendar invitation.

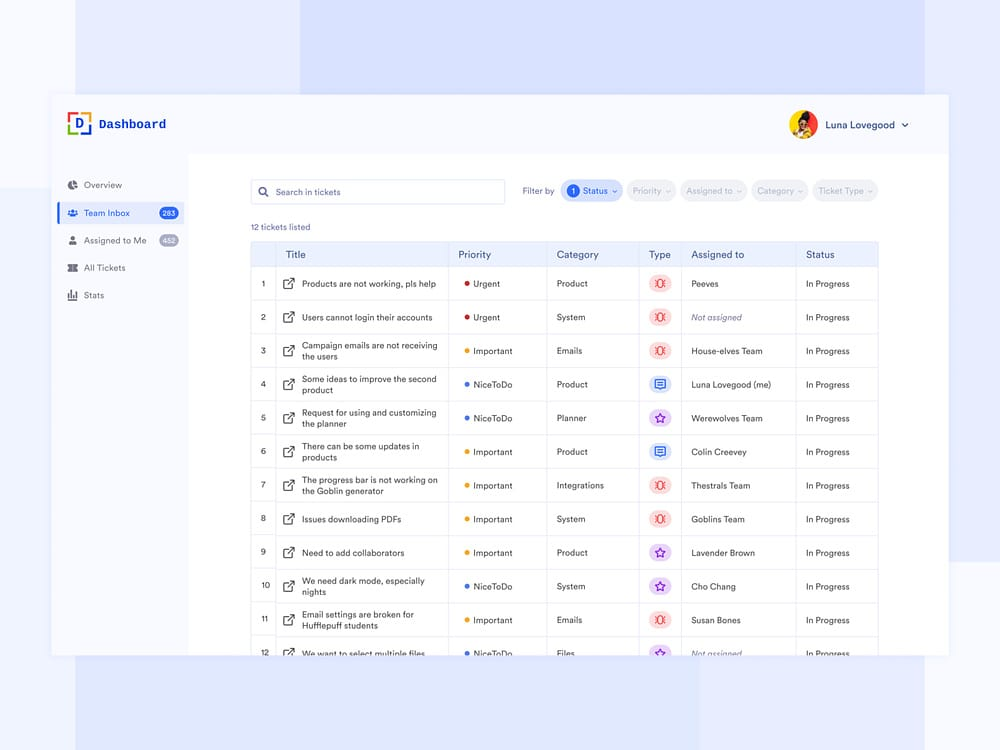

Currently, Harrison has this email assistant set up in his Slack workspace. When the Agent needs help, it sends a question to Harrison's Slack, and Harrison can answer it in a dashboard; this interaction integrates seamlessly into his daily workflow. The UX resembles a customer support dashboard: the interface clearly shows every task the assistant needs help with, the priority of each request, and other relevant data.
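The dashboard side of this pattern can be sketched as a priority queue of help requests that a background Agent fills and a human drains. `HelpRequest` and `HumanInbox` are hypothetical names, and delivery through Slack is not shown.

```python
import heapq
import itertools
from dataclasses import dataclass, field

@dataclass(order=True)
class HelpRequest:
    priority: int                           # lower number = more urgent
    seq: int                                # tie-breaker keeps FIFO order within a priority
    question: str = field(compare=False)
    context: str = field(compare=False)

class HumanInbox:
    """Queue where a background agent parks questions it cannot resolve alone."""
    def __init__(self) -> None:
        self._heap: list[HelpRequest] = []
        self._counter = itertools.count()

    def ask(self, question: str, context: str, priority: int = 2) -> None:
        heapq.heappush(self._heap, HelpRequest(priority, next(self._counter), question, context))

    def pending(self) -> list[HelpRequest]:
        """What a dashboard would list, most urgent first."""
        return sorted(self._heap)

    def next_request(self) -> HelpRequest:
        return heapq.heappop(self._heap)

inbox = HumanInbox()
inbox.ask("How should I reply to this bug report?", "issue text", priority=2)
inbox.ask("Attend Tuesday's meeting?", "calendar invite", priority=1)
print([r.question for r in inbox.pending()])
```

As in the text, the Agent asks for input on a specific decision rather than for the finished email; it would then draft the reply itself using the answer.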

3. Spreadsheet UX

Spreadsheet UX is a very intuitive and user-friendly form of interaction, especially well suited to batch work. In a spreadsheet interface, each row, or even each cell, can act as a separate Agent that researches and processes a specific task. This batch capability lets users easily scale their interaction to many Agents at once.

Spreadsheet UX has other advantages as well. Spreadsheets are a format most users already know, so they fit easily into existing workflows. This kind of UX is ideal for data enrichment, where each column can represent a different data attribute to be filled in.

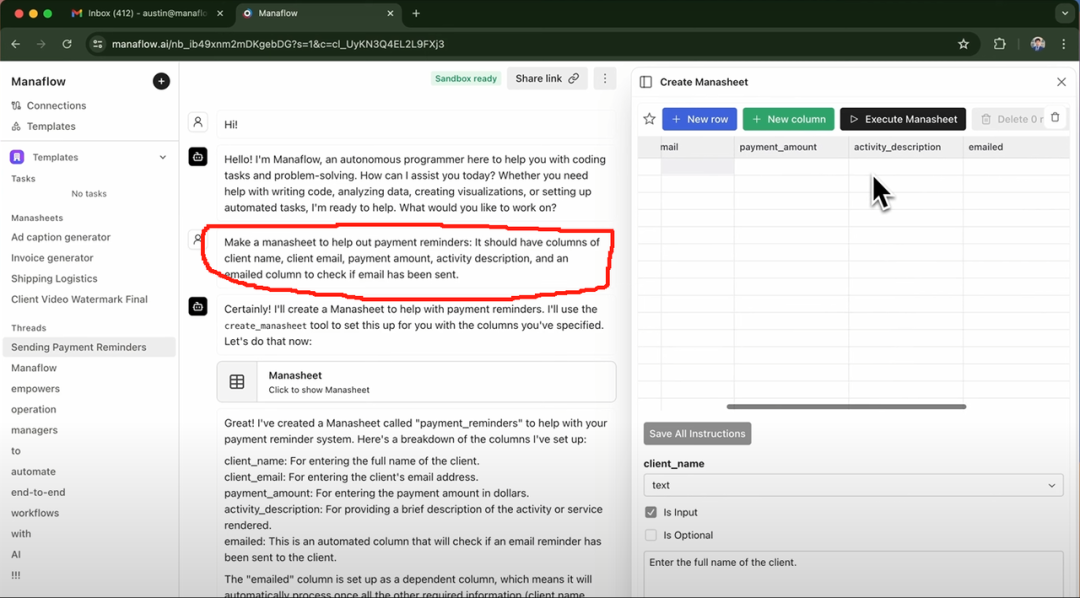

Exa AI, Clay AI, Manaflow, and others have adopted Spreadsheet UX. The following uses Manaflow as an example of how Spreadsheet UX applies to Agent interaction workflows.

Case Study: How Manaflow Uses Spreadsheets for Agent Interactions

Manaflow was inspired by Minion AI, the company where its founder, Lawrence, previously worked. Minion AI's core product is a Web Agent that can control the local Google Chrome browser, letting users interact with a variety of web applications through it, for example booking flights, sending emails, or booking car washes. Building on this experience, Manaflow chose to let Agents operate tools such as spreadsheets directly. The Manaflow team believes that Agents are not good at working with human-oriented UIs and that what they are really good at is coding. Manaflow therefore lets Agents call Python scripts, database interfaces, and APIs directly from the UI, and then operate directly on the database: reading data, scheduling, sending email, and more.

Manaflow's workflow is as follows. Its main interactive interface is a spreadsheet called a Manasheet. Each column in a Manasheet represents a step in the workflow, and each row corresponds to an AI Agent that performs a specific task. Every Manasheet workflow can be programmed in natural language, so non-technical users can describe tasks and steps in plain words. Each Manasheet has an internal dependency graph that determines the order in which its columns execute. That order is handed to the Agent in each row, and the row Agents run in parallel, handling data transformation, API calls, content retrieval, and message delivery.
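The column-dependency idea can be illustrated with a small Python sketch. This is not Manaflow's implementation; it is a minimal illustration under our own assumptions. The column names, the lambda "steps" (stand-ins for LLM calls, API calls, or email sending), and the functions `run_sheet` and `run_row` are all hypothetical. The sketch uses the standard-library `graphlib.TopologicalSorter` (Python 3.9+) to order columns and a thread pool to run each row's Agent in parallel.

```python
from concurrent.futures import ThreadPoolExecutor
from graphlib import TopologicalSorter
from typing import Callable, Dict, List, Set

Row = Dict[str, str]
# A column step reads the row's existing cells and returns one new cell value.
Step = Callable[[Row], str]


def column_order(deps: Dict[str, Set[str]]) -> List[str]:
    """Order columns so each one runs after the columns it depends on."""
    return list(TopologicalSorter(deps).static_order())


def run_row(row: Row, steps: Dict[str, Step], order: List[str]) -> Row:
    """One hypothetical 'row Agent': fill this row's columns in dependency order."""
    filled = dict(row)
    for col in order:
        # Input columns (e.g. "email") have no step and are already filled.
        if col in steps and col not in filled:
            filled[col] = steps[col](filled)
    return filled


def run_sheet(rows: List[Row], steps: Dict[str, Step],
              deps: Dict[str, Set[str]]) -> List[Row]:
    order = column_order(deps)
    # Rows are independent of each other, so their Agents run in parallel.
    with ThreadPoolExecutor() as pool:
        return list(pool.map(lambda r: run_row(r, steps, order), rows))


# Hypothetical bulk-pricing-email sheet; each lambda stands in for real work.
steps: Dict[str, Step] = {
    "industry": lambda r: "retail" if r["email"].endswith(".shop") else "software",
    "draft": lambda r: f"Hi {r['name']}, here is our {r['industry']} pricing.",
    "sent": lambda r: "yes",  # stand-in for an email-sending API call
}
deps = {"industry": {"email"}, "draft": {"name", "industry"}, "sent": {"draft"}}
rows = [{"name": "Ada", "email": "ada@example.shop"},
        {"name": "Bo", "email": "bo@example.com"}]
result = run_sheet(rows, steps, deps)
for r in result:
    print(r)
```

Separating "which order" (the dependency graph) from "who runs it" (one worker per row) is what lets a sheet like this scale to many rows without changing the column logic.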

Users can generate a Manasheet in several ways. The most common is to enter a natural-language command like the one in the red box above. For example, to send bulk emails with pricing information to your customers, you can enter a prompt in the chat interface, and the Agent automatically generates a Manasheet showing each customer's name, email address, industry, and whether the email has been sent. The user then simply clicks the "Execute Manasheet" button to run the bulk email task.

4. Generative UI

There are two main ways to implement Generative UI.

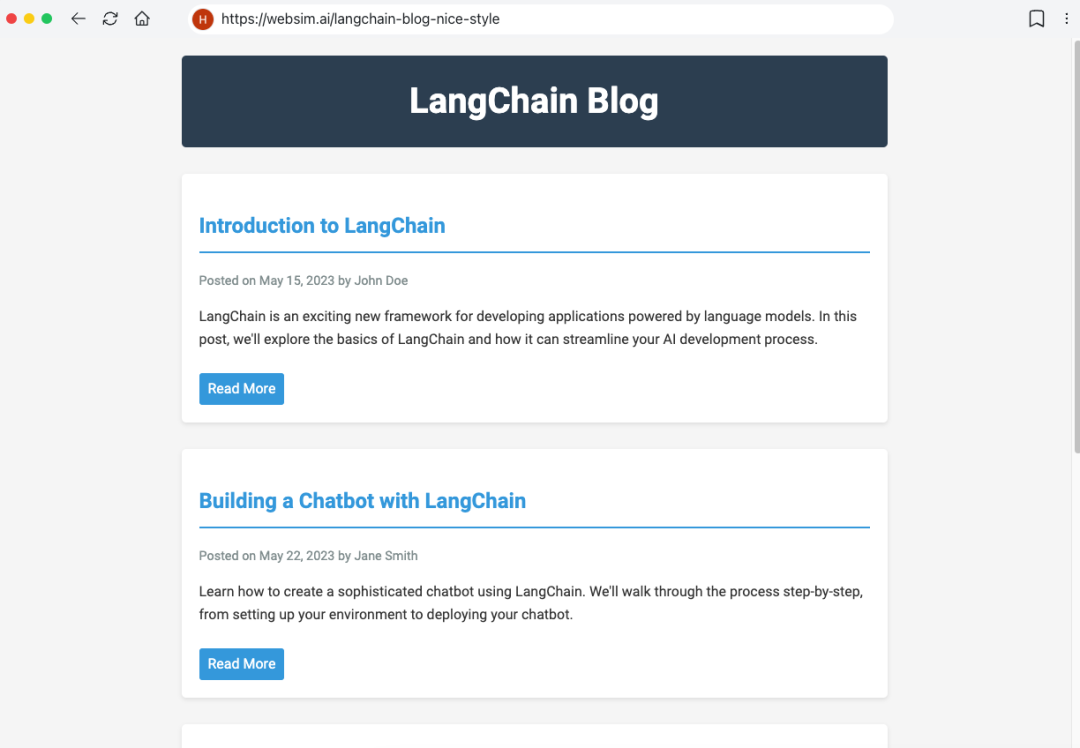

The first approach lets the model generate the UI components it needs on its own, as Websim and similar products do. Behind the scenes, the Agent mostly writes raw HTML, which gives it full control over what the interface displays. The downside is that the quality of the resulting web app is highly unpredictable, and the user experience can vary widely.

The second, more constrained approach predefines a set of commonly used UI components and renders them dynamically through tool calls. For example, when the LLM calls a weather API, this triggers rendering of a weather-map UI component. Because the rendered components are predefined rather than truly generated (though there are more options to choose from), the resulting UI is more polished, but its flexibility is somewhat limited.
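A minimal sketch of the second approach, assuming the model returns tool calls as a name plus a JSON-encoded arguments string (a shape common to function-calling APIs). The component names (`WeatherCard`, `Text`), the `COMPONENTS` registry, and `render_tool_call` are hypothetical. The point is that the model only chooses *which* predefined component to show and with what arguments; the markup itself is fixed.

```python
import json
from typing import Any, Callable, Dict


# Hypothetical predefined component library: each renderer returns fixed markup.
def weather_card(args: Dict[str, Any]) -> str:
    return f'<WeatherCard city="{args["city"]}" unit="{args.get("unit", "C")}" />'


def fallback_text(args: Dict[str, Any]) -> str:
    return f"<Text>{args.get('text', '')}</Text>"


# Tool name -> component renderer.
COMPONENTS: Dict[str, Callable[[Dict[str, Any]], str]] = {
    "get_weather": weather_card,
}


def render_tool_call(tool_call: Dict[str, Any]) -> str:
    """Map an LLM tool call to a predefined UI component (plain text if unknown)."""
    renderer = COMPONENTS.get(tool_call["name"], fallback_text)
    args = json.loads(tool_call["arguments"])
    return renderer(args)


# Example tool call in the assumed name/arguments shape.
call = {"name": "get_weather", "arguments": '{"city": "Paris"}'}
print(render_tool_call(call))  # <WeatherCard city="Paris" unit="C" />
```

The fallback to a plain text component is one way to keep the UI predictable when the model requests something outside the library.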

Case Study: Personal AI product dot

The personal AI product dot is one of the best examples of Generative UI. dot was hailed as the "best Personal AI product" of 2024.

dot is the flagship product of New Computer. Its goal is to be a long-term digital companion for users, not just an efficient task-management tool. As New Computer co-founder Jason Yuan puts it, dot is what you turn to "when you don't know where to go, what to do, or what to say." Here are a few typical dot use cases:

- New Computer co-founder Jason Yuan often asked dot for late-night bar recommendations, hoping to get drunk. After months of such late-night conversations, one day Jason asked dot a similar question, and dot began telling him that he "couldn't go on like this."

- Fast Company reporter Mark Wilson also spent a few months with dot. At one point, he shared with dot a handwritten "O" he had written in a calligraphy class. Surprisingly, dot immediately pulled up a photo of Mark Wilson's handwritten "O" from a few weeks earlier and praised him for his "great improvement" in calligraphy.

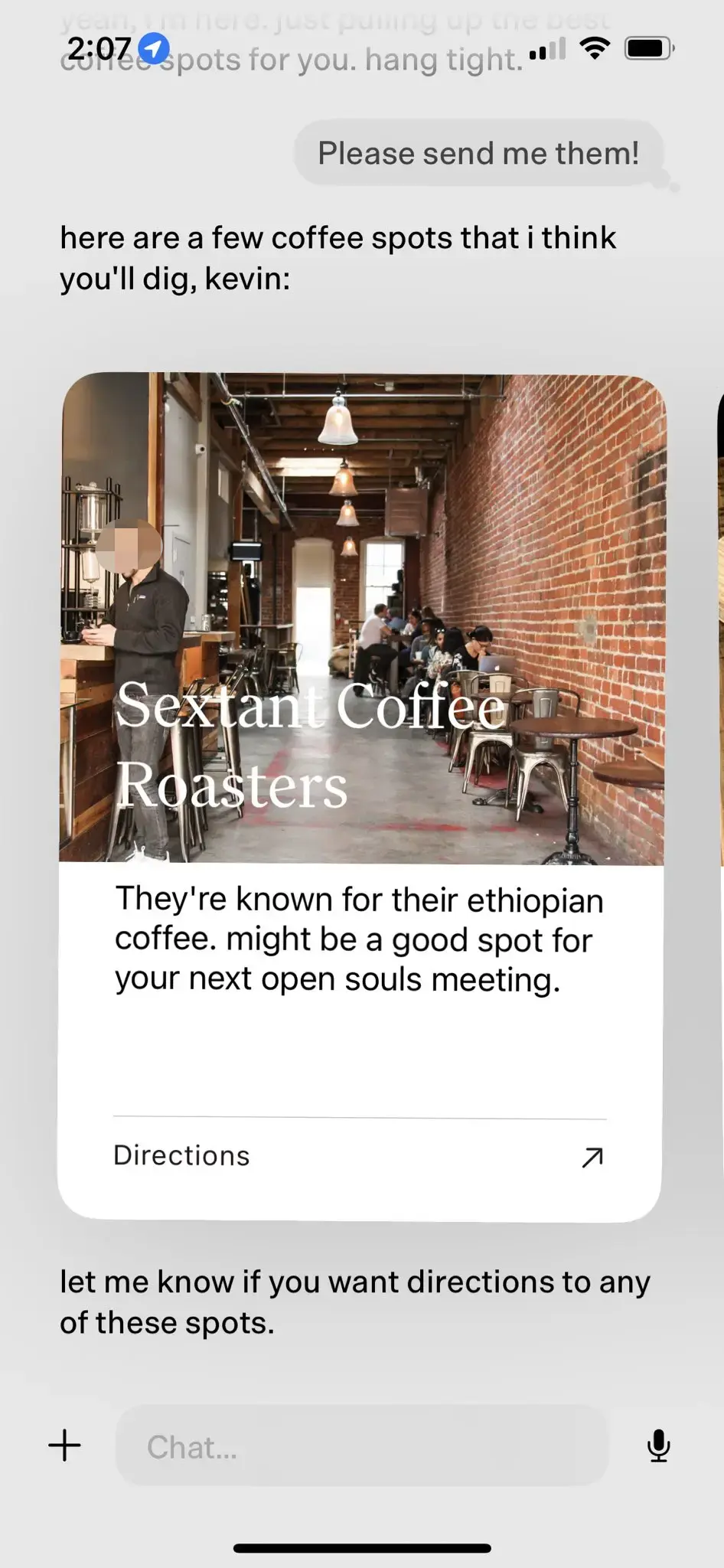

- The longer people use dot, the better it understands their interests and preferences. For example, once dot learns that a user likes visiting cafes, it proactively recommends good cafes nearby, explains why it recommends each one, and finally asks whether the user wants directions.

In the cafe recommendation above, dot uses predefined UI components to deliver a natural, LLM-native interaction.

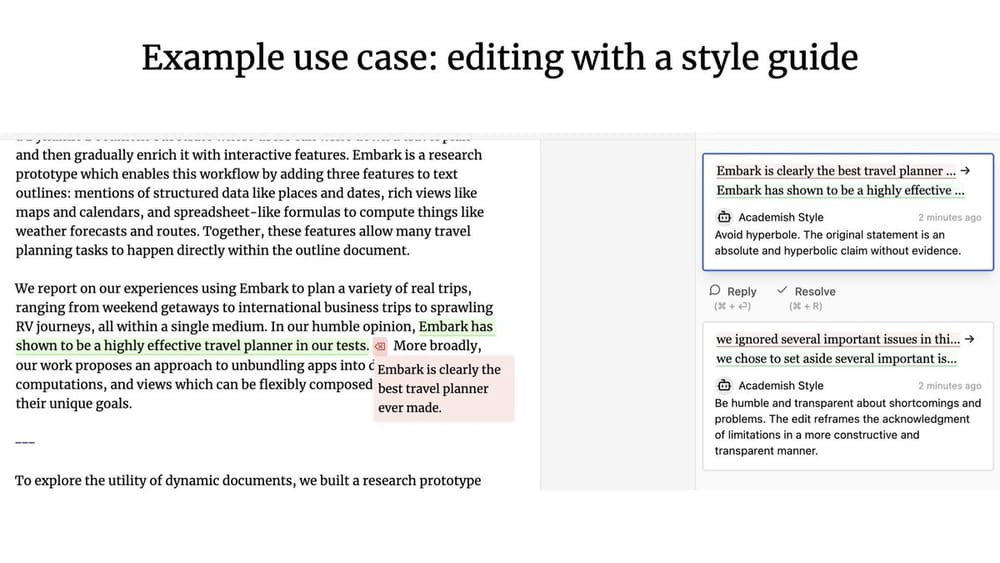

5. Collaborative UX

What kind of human-computer interaction patterns emerge when an Agent and a human user work together? In Google Docs, multiple users can write or edit the same document together in real time. What if one of the collaborators is an Agent?

The Patchwork project by Geoffrey Litt and Ink & Switch is an excellent example of human-AI Collaborative UX. (Translator's note: OpenAI's recently released Canvas product update may have been inspired by the Patchwork project.)

How does Collaborative UX differ from the previously discussed Ambient UX (background environment)? Nuno, a founding engineer at LangChain, emphasizes that the main difference between the two is whether there is concurrency:

- In Collaborative UX, the human user and the LLM usually work simultaneously, each using the other's work as input.

- In Ambient UX (background environment), the LLM runs continuously in the background while the user focuses on other tasks, with no need to watch the Agent's status in real time.

Memory

Memory is crucial to the Agent user experience. Imagine a coworker who never remembers anything you tell them and keeps asking you to repeat the same things: that would be a terrible collaboration. People often expect LLM systems to have memory built in, probably because LLMs already seem close to human-level cognition in some ways. However, LLMs do not inherently have memory.

An Agent's memory must be designed around the specific needs of the product. Different UX paradigms also offer different ways to gather information and incorporate feedback. Across the memory mechanisms of Agent products, we can observe several high-level memory types that, to some extent, mimic the types of human memory.

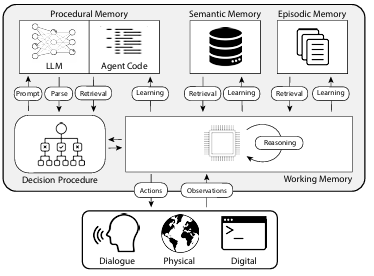

The paper "CoALA: Cognitive Architectures for Language Agents" maps types of human memory onto Agent memory mechanisms, classifying them as follows:

1. Procedural Memory

Procedural memory is long-term memory about how to perform tasks, similar to a core instruction set in the human brain.

- Procedural memory in humans: for example, remembering how to ride a bicycle.

- Procedural memory in Agents: the CoALA paper describes procedural memory as the combination of LLM weights and Agent code, which fundamentally determines how the Agent works.

In practice, the LangChain team has not seen any Agent system that automatically updates its LLM weights or rewrites its own code. However, there are cases of Agent systems dynamically updating their own System Prompt.
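A minimal sketch of procedural memory held in the System Prompt, under our own assumptions: the LLM weights and Agent code stay fixed, and only a list of instructions changes. The `ProceduralMemory` class and `update_from_feedback` method are hypothetical, and the "reflection" step that would normally be an LLM call turning feedback into an instruction is stubbed out as a simple string cleanup.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class ProceduralMemory:
    """Hypothetical store for the instruction part of an Agent's System Prompt."""
    base: str
    rules: List[str] = field(default_factory=list)

    def system_prompt(self) -> str:
        # The prompt is rebuilt from the base text plus learned rules each turn.
        return "\n".join([self.base] + [f"- {r}" for r in self.rules])

    def update_from_feedback(self, feedback: str) -> None:
        # Stand-in for an LLM reflection call; here the feedback itself
        # becomes the rule, and duplicates are ignored.
        rule = feedback.strip().rstrip(".")
        if rule and rule not in self.rules:
            self.rules.append(rule)


memory = ProceduralMemory(base="You are an email assistant.")
memory.update_from_feedback("Keep replies under 100 words.")
memory.update_from_feedback("Keep replies under 100 words.")  # duplicate, ignored
print(memory.system_prompt())
```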

2. Semantic Memory

Semantic memory is a long-term store of factual knowledge.

- Semantic memory in humans: made up of pieces of information such as the facts and concepts learned in school, and the relationships between them.

- Semantic memory in Agents: the CoALA paper describes semantic memory as a repository of factual knowledge.

In practice, an Agent's semantic memory is usually implemented by using an LLM to extract information from the Agent's conversations or interactions. How that information is stored depends on the specific application. In later conversations, the system retrieves the stored information and inserts it into the System Prompt to shape the Agent's responses.
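The extract, store, and inject loop can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: a regular expression stands in for the LLM extraction call, an in-memory dictionary stands in for whatever store an application would use, and all function names (`extract_facts`, `remember`, `build_system_prompt`) are hypothetical.

```python
import re
from collections import defaultdict
from typing import Dict, List

# user_id -> extracted facts (a real system might use a database or vector store)
FACTS: Dict[str, List[str]] = defaultdict(list)

# Stand-in for an LLM extraction call: match a few simple first-person statements.
PATTERN = re.compile(r"\bI (?:like|live in|work at) [^.!?]+", re.IGNORECASE)


def extract_facts(message: str) -> List[str]:
    return [m.group(0).strip() for m in PATTERN.finditer(message)]


def remember(user_id: str, message: str) -> None:
    """Store newly extracted facts, skipping ones already known."""
    for fact in extract_facts(message):
        if fact not in FACTS[user_id]:
            FACTS[user_id].append(fact)


def build_system_prompt(user_id: str, base: str = "You are a helpful assistant.") -> str:
    """Retrieve stored facts and insert them into the System Prompt."""
    facts = FACTS.get(user_id, [])
    if not facts:
        return base
    known = "\n".join(f"- User said: {f}" for f in facts)
    return f"{base}\nKnown facts about the user:\n{known}"


remember("u1", "I like quiet cafes. Also, I live in Berlin!")
print(build_system_prompt("u1"))
```

A production system would replace the regex with an LLM prompt that returns structured facts, and would typically retrieve only the facts relevant to the current turn rather than all of them.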

3. Episodic Memory

Episodic memory is used to recall specific past events.

- Episodic memory in humans: used when a person recalls specific events (or "episodes") they experienced in the past.

- Episodic memory in Agents: the CoALA paper defines episodic memory as storing sequences of the Agent's past actions.

Episodic memory is mainly used to make the Agent behave as intended. In practice, it is usually implemented through few-shot prompting. If the system has previously been shown, through few-shot prompts, how to complete an operation correctly, the Agent can reuse that approach when it faces similar problems later. Conversely, if there is no clear correct way to guide the Agent, or the Agent constantly needs to try new approaches, semantic memory becomes more important, and episodic memory plays a relatively limited role.
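A sketch of episodic memory as dynamic few-shot prompting: past episodes (a task plus the action sequence that worked) are stored, and the most similar ones are placed in front of a new task. The `Episode` type, the action names, and `few_shot_prompt` are hypothetical, and word-level Jaccard overlap is used here as a crude stand-in for the embedding similarity a real system would more likely use.

```python
from dataclasses import dataclass
from typing import List, Set


@dataclass
class Episode:
    task: str
    actions: List[str]  # the action sequence that worked last time


def tokens(text: str) -> Set[str]:
    return set(text.lower().split())


def similarity(a: str, b: str) -> float:
    """Jaccard overlap of words; a crude stand-in for embedding similarity."""
    ta, tb = tokens(a), tokens(b)
    union = ta | tb
    return len(ta & tb) / len(union) if union else 0.0


def few_shot_prompt(task: str, episodes: List[Episode], k: int = 2) -> str:
    """Prepend the k most similar past episodes as examples for the new task."""
    best = sorted(episodes, key=lambda e: similarity(task, e.task), reverse=True)[:k]
    shots = [f"Task: {e.task}\nActions: {' -> '.join(e.actions)}" for e in best]
    return "\n\n".join(shots + [f"Task: {task}\nActions:"])


episodes = [
    Episode("book a flight to Tokyo", ["search_flights", "pick_cheapest", "pay"]),
    Episode("send weekly report email", ["draft_email", "attach_report", "send"]),
    Episode("book a flight to Paris", ["search_flights", "pick_direct", "pay"]),
]
print(few_shot_prompt("book a flight to Rome", episodes))
```

Here the two flight-booking episodes are selected and the unrelated email episode is left out, which is the sense in which episodic memory helps when a known correct procedure exists.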

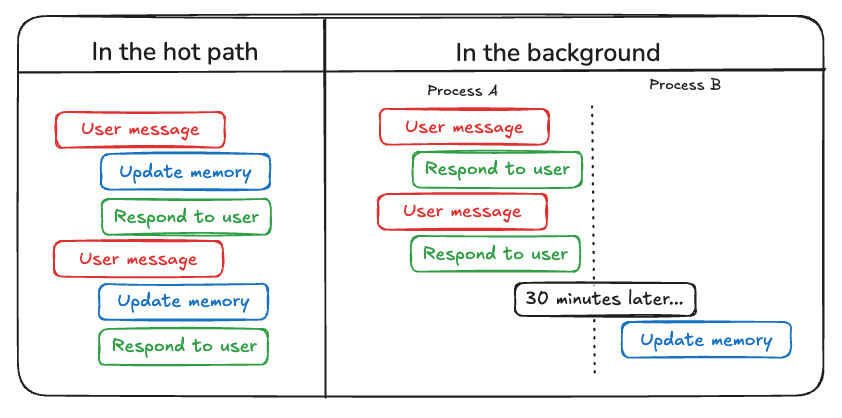

Besides deciding which types of memory an Agent should update, developers also need to decide how to update the Agent's memory. There are currently two main approaches:

The first is updating "in the hot path". Here the Agent system records the relevant facts in real time before generating a response, usually through a tool call. ChatGPT currently updates its memory this way.

The second is updating "in the background". Here a background process runs asynchronously after the session ends and updates the Agent's memory.

The drawback of hot-path updates is that they add some latency before any response is returned. They also require the memory logic to be tightly integrated with the agent logic.

Background updates avoid these problems: they add no response latency, and the memory logic can stay relatively independent. However, they have drawbacks of their own: memory is not updated immediately, and extra logic is needed to decide when to start the background update process.
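The contrast between the two update strategies can be sketched with the Python standard library. This is a toy model under our own assumptions: `save_memory` stands in for the real memory write (which in practice would involve an LLM extracting facts), raw messages are stored as "facts" for simplicity, and "session end" is the hypothetical trigger chosen for the background worker.

```python
import queue
import threading
from typing import List

memory: List[str] = []
memory_lock = threading.Lock()


def save_memory(fact: str) -> None:
    with memory_lock:
        memory.append(fact)


def respond_hot_path(message: str) -> str:
    # Hot path: memory is written before the reply is returned, so the
    # write adds to response latency and sits inside the agent logic.
    save_memory(message)
    return f"Noted: {message}"


# Background path: a worker thread processes finished sessions asynchronously.
pending: "queue.Queue[List[str]]" = queue.Queue()


def memory_worker() -> None:
    while True:
        transcript = pending.get()
        for message in transcript:  # memory logic lives apart from reply logic
            save_memory(message)
        pending.task_done()


threading.Thread(target=memory_worker, daemon=True).start()


def end_session(transcript: List[str]) -> None:
    # The trigger (here: session end) is the extra logic background updates need.
    # This call returns immediately; memory is not yet updated when it does.
    pending.put(transcript)


print(respond_hot_path("prefers morning meetings"))
end_session(["works in Berlin", "likes cafes"])
pending.join()  # block only for this demo, so the background update is visible
print(memory)
```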

Another way to update memory uses user feedback, which is particularly relevant to episodic memory. For example, if a user rates an Agent interaction highly (positive feedback), the Agent can save that feedback and recall it in similar scenarios in the future.
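A minimal sketch of feedback-gated memory, assuming a hypothetical 1 to 5 rating scale and a threshold of 4 (both our choices, not from the source). Only highly rated interactions are kept, so the saved pairs could later serve as examples for similar requests.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class FeedbackMemory:
    """Keeps only interactions the user rated at or above the threshold."""
    threshold: int = 4
    saved: List[Dict[str, str]] = field(default_factory=list)

    def record(self, user_input: str, agent_output: str, rating: int) -> bool:
        if rating >= self.threshold:
            self.saved.append({"input": user_input, "output": agent_output})
            return True
        return False


fm = FeedbackMemory()
fm.record("summarize this thread", "3-bullet summary", rating=5)  # saved
fm.record("draft a reply", "overly long reply", rating=2)        # ignored
print(len(fm.saved))  # 1
```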

Based on the material compiled above, AI Share believes that continued, parallel progress in three key elements (planning capability, interaction innovation, and memory mechanisms) should give rise to more practical AI Agent applications in 2025 and lead us toward a new era of human-machine collaboration.

© Copyright notes

Article copyright belongs to AI Sharing Circle; please do not reproduce without permission.
