2024 Chinese Large Model Benchmark Measurement Report (SuperCLUE)

contexts

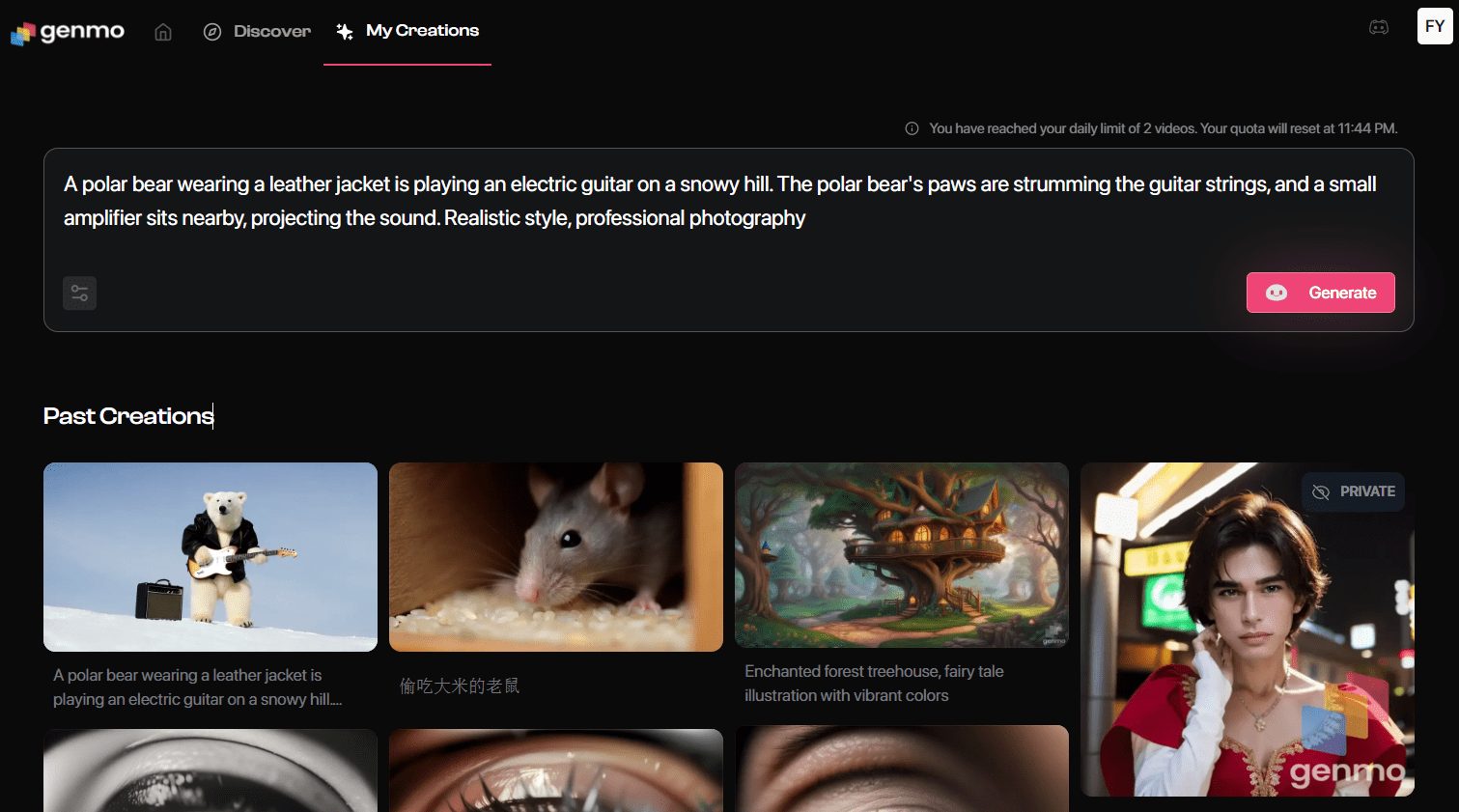

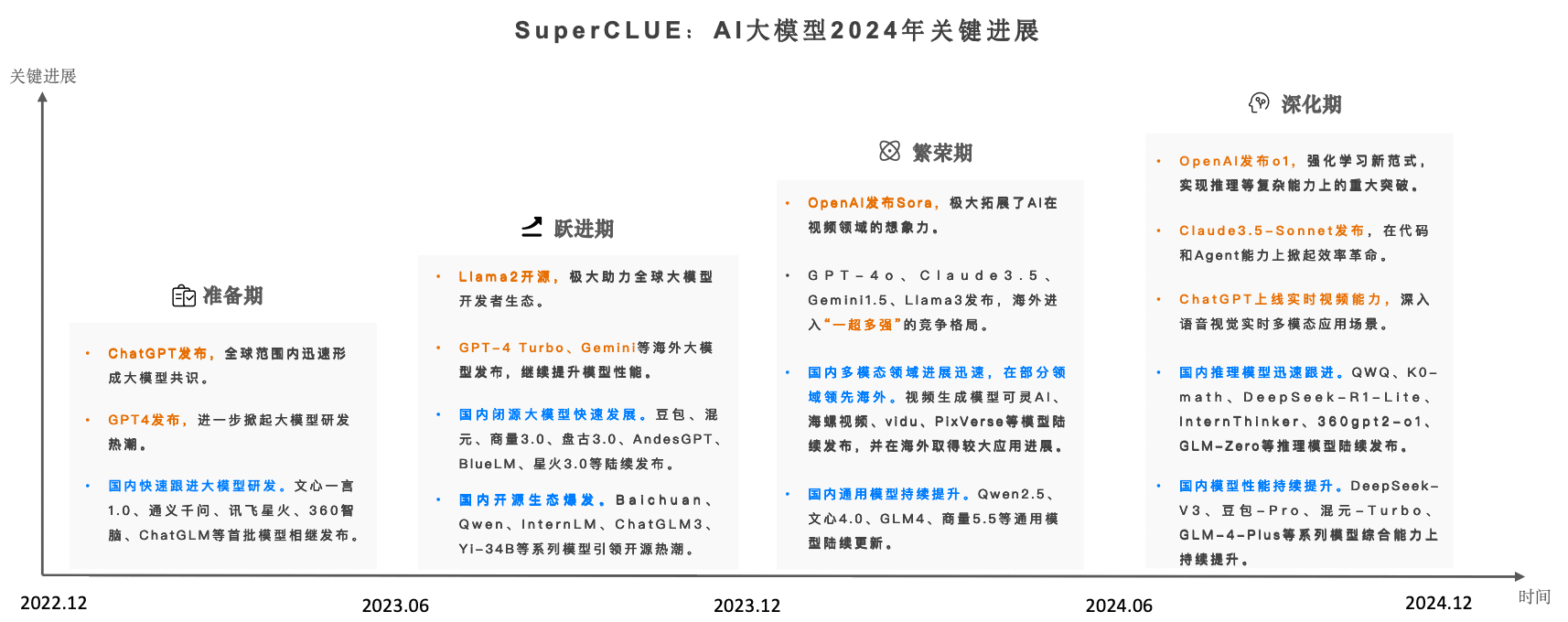

Since 2023, large AI models have set off the largest wave of artificial intelligence to date worldwide. Entering 2024, global competition among large models has intensified, and with the release of Sora, GPT-4o, and o1, domestic large models spent 2024 in a race to catch up.

SuperCLUE, the Chinese large model evaluation benchmark, has continuously tracked the development and overall performance of large models at home and abroad, and has now officially released the Chinese Large Model Benchmark Measurement 2024 Annual Report.

The full report runs to 89 pages. This article shows only its key contents; the full report is available online (downloadable) at:

www.cluebenchmarks.com/superclue_2024

SuperCLUE Leaderboard Address:

www.superclueai.com

Key Components of the Report

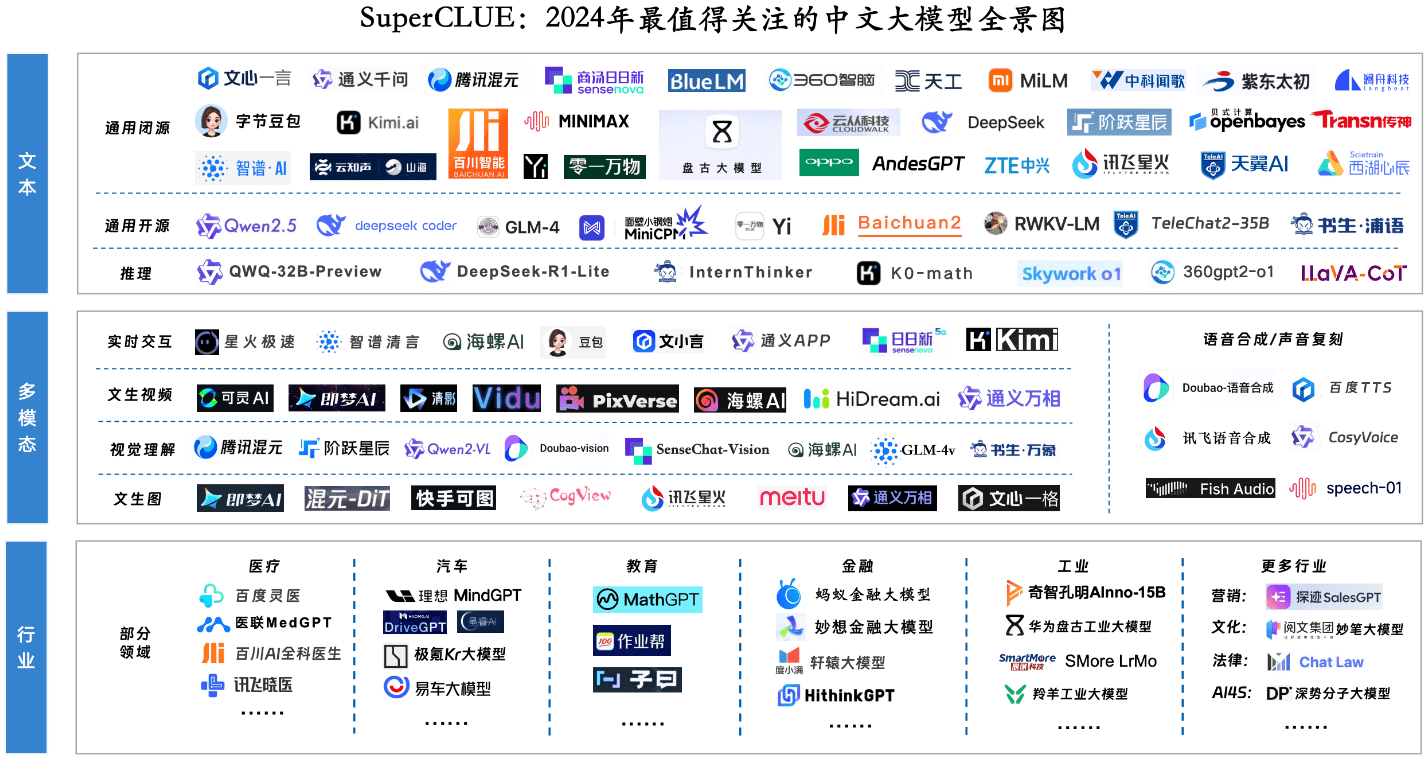

Key Component 1: Panorama of the Most Noteworthy Large Models for 2024

Key Component 2: Annual Overall List and Model Quadrant

Introduction to Evaluation

This annual report focuses on the general capability assessment, which consists of three dimensions: Science, Arts, and Hard. All questions are newly written originals, totaling 1,325 multi-turn short-answer questions.

[Science Tasks] are divided into Computing, Logical Reasoning, and Code test sets; [Arts Tasks] are divided into Language Understanding, Generative Creation, and Security test sets; and [Hard Tasks] are divided into Instruction Following, Deep Reasoning, and Agent test sets.

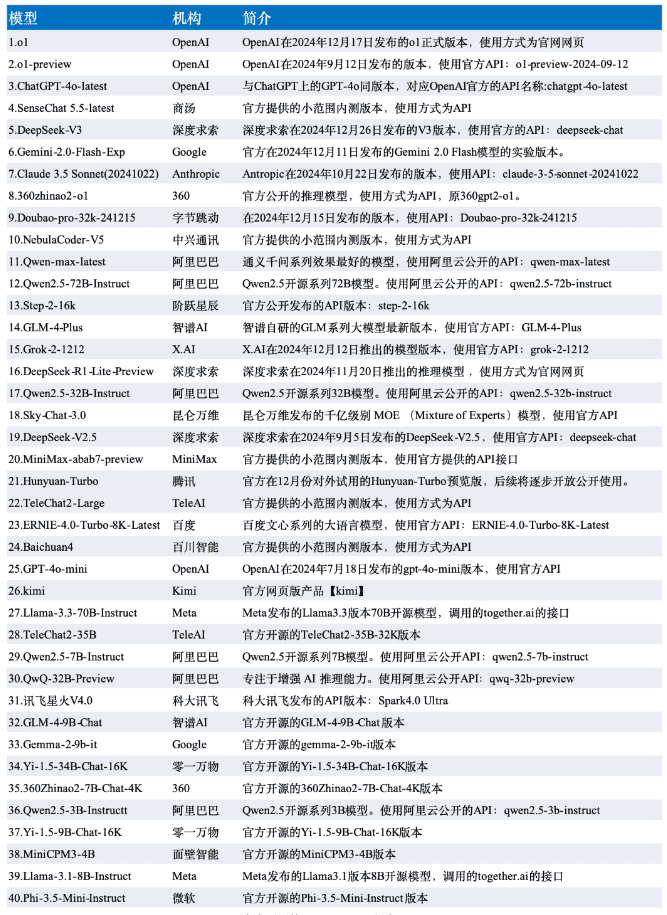

The data for this evaluation comes from the SuperCLUE December evaluation results, which cover 42 representative large models from China and abroad.

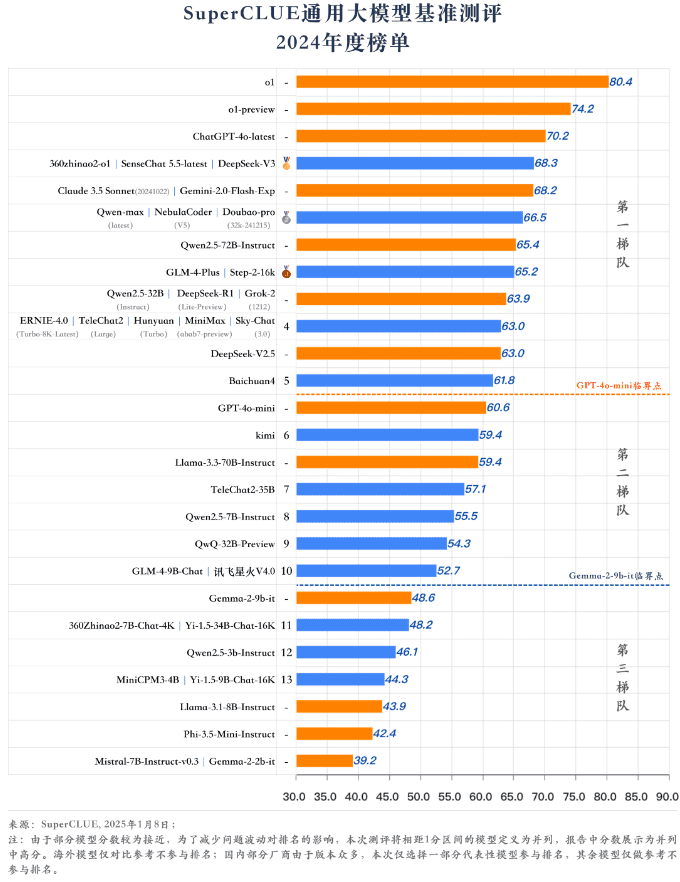

Annual overall list

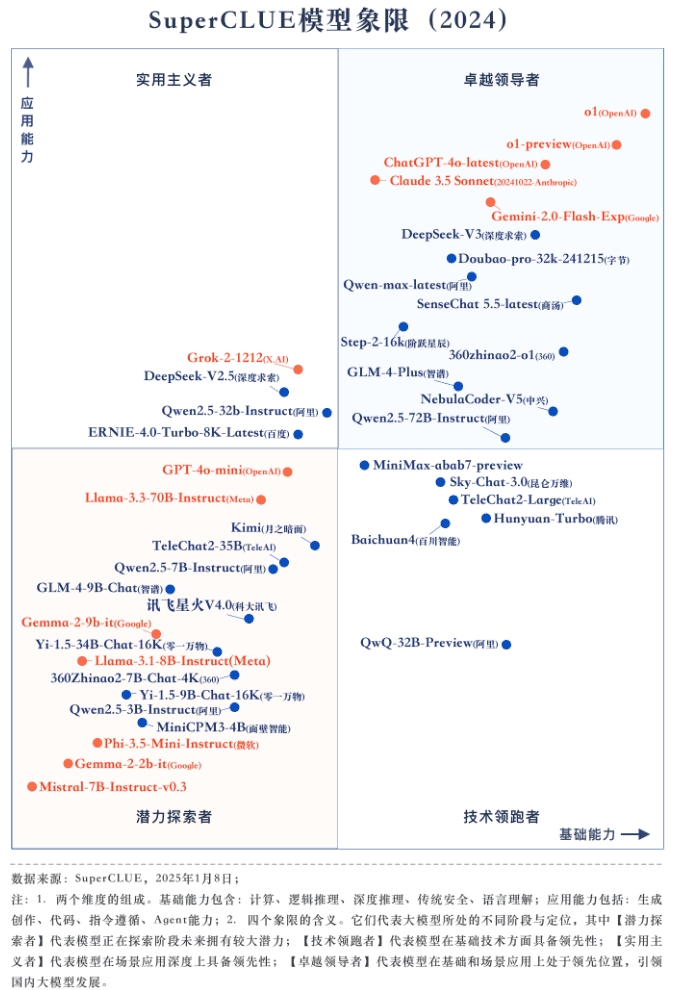

Annual model quadrant

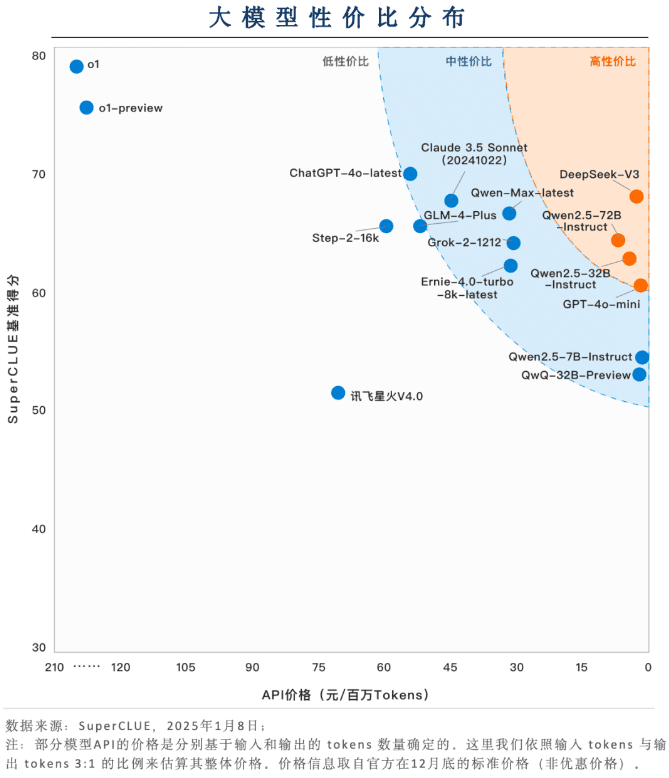

Key Component 3: Distribution of Cost-Effectiveness Zones

Domestic large models have a large advantage in terms of cost-effectiveness (price + effectiveness)

Domestic large models such as DeepSeek-V3, Qwen2.5-72B-Instruct, and Qwen2.5-32B-Instruct are highly competitive on price/performance. They keep application costs very low while delivering a relatively high level of capability, which makes them easy to adopt in real-world deployments.

Most models are in the medium value for money range

Most models remain at a relatively high price point in order to maintain a high level of capability. For example, GLM-4-Plus, Qwen-Max-latest, Claude 3.5 Sonnet, and Grok-2-1212 are all priced above $30 per million tokens.

o1 and other reasoning models have more room to optimize price/performance

Although o1 and o1-preview show a high level of capability, they cost several times as much as other models. Reducing cost may become a prerequisite for the wide adoption of reasoning models.

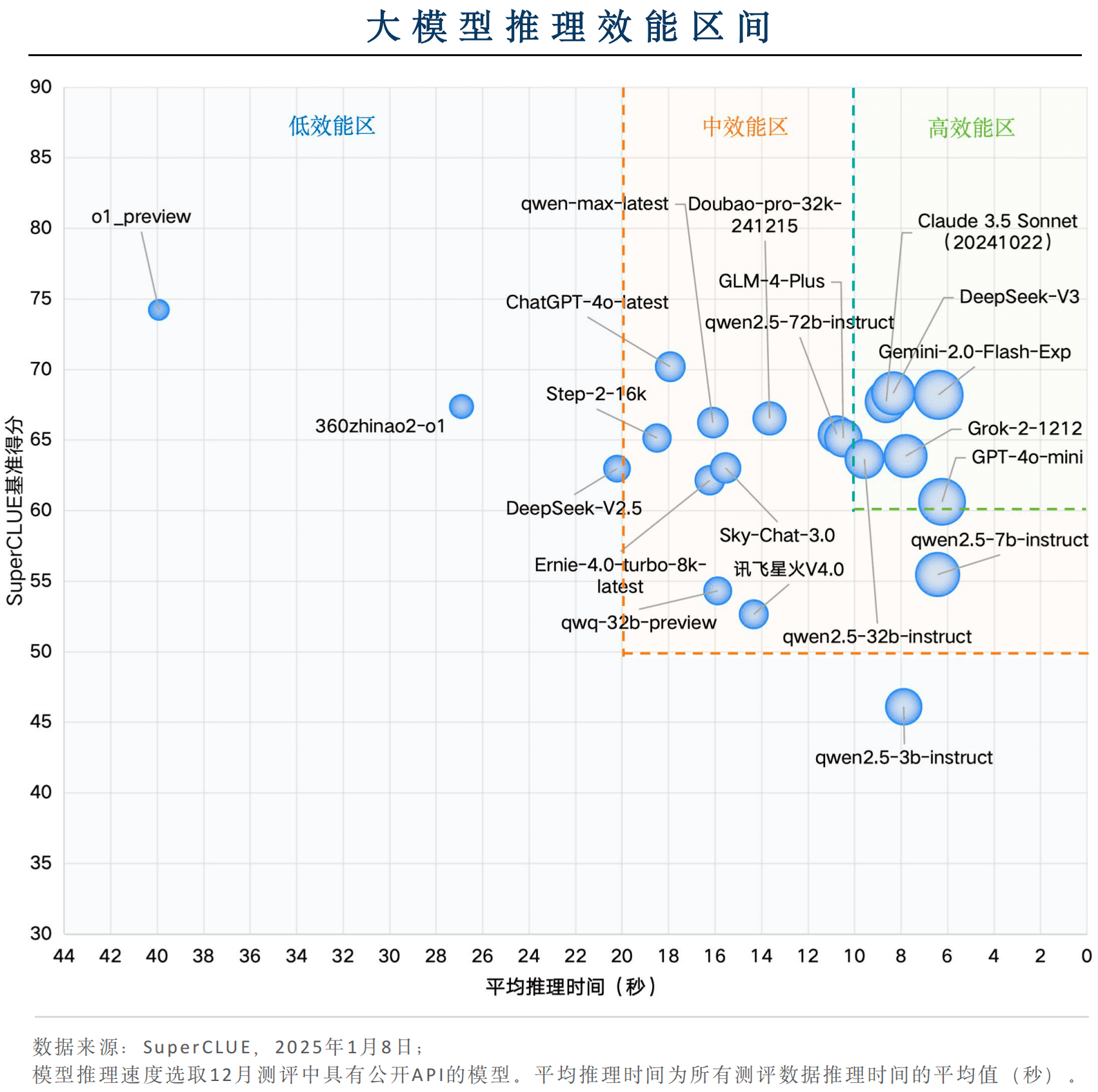

Key Component 4: Distribution of Inference Efficiency Zones

Some domestic models are competitive in terms of overall effectiveness

Among domestic models, DeepSeek-V3 and Qwen2.5-32B-Instruct have excellent inference speed, averaging less than 10s per question while scoring above 60 on the benchmark. This places them in the "high performance zone" and indicates strong practical efficiency.

Gemini-2.0-Flash-Exp Leads the World in Large Model Application Performance

The overseas models Gemini-2.0-Flash-Exp, Claude 3.5 Sonnet (20241022), Grok-2-1212, and GPT-4o-mini fall in the "high performance zone". Gemini-2.0-Flash-Exp performs best when inference time and benchmark score are considered together, while GPT-4o-mini has the fastest inference speed.

Reasoning models have considerable room to improve efficiency

Although reasoning models represented by o1-preview score well on the benchmark, their average inference time is about 40s per question, which places them in the "low performance zone". To be usable across a wide range of application scenarios, reasoning models need to focus on improving inference speed.
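The exact zone boundaries appear only in the report's chart. The text mentions an average inference time under 10s together with a score above 60 for the "high performance zone", and roughly 40s for the "low performance zone". A minimal sketch of this kind of classification follows. It assumes those two figures as the cut-offs for the high zone and uses a hypothetical 30s cut-off for the low zone, which the report does not state. The model entries are placeholders, not results from the report.

```python
from dataclasses import dataclass

# The 10 s and 60-point thresholds come from the text above.
# LOW_ZONE_SECONDS is a hypothetical cut-off chosen only for illustration.
HIGH_ZONE_MAX_SECONDS = 10.0
HIGH_ZONE_MIN_SCORE = 60.0
LOW_ZONE_SECONDS = 30.0


@dataclass
class ModelResult:
    name: str
    avg_seconds: float  # average inference time per question
    score: float        # benchmark score on a 0-100 scale


def performance_zone(result: ModelResult) -> str:
    """Place a model in the high, medium, or low performance zone."""
    is_fast = result.avg_seconds < HIGH_ZONE_MAX_SECONDS
    is_strong = result.score > HIGH_ZONE_MIN_SCORE
    if is_fast and is_strong:
        return "high"
    if result.avg_seconds >= LOW_ZONE_SECONDS:
        return "low"
    return "medium"


# Placeholder entries, not figures from the report.
examples = [
    ModelResult("fast-strong-model", avg_seconds=8.0, score=65.0),
    ModelResult("slow-reasoning-model", avg_seconds=40.0, score=75.0),
    ModelResult("mid-model", avg_seconds=15.0, score=62.0),
]
zones = {m.name: performance_zone(m) for m in examples}
```

Under these assumed thresholds, a model with a high score but a 40s average answer time still lands in the low zone, which mirrors the point made about o1-preview.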

Key Component 5: Gaps and Trends Between Domestic and Overseas Large Models in 2024

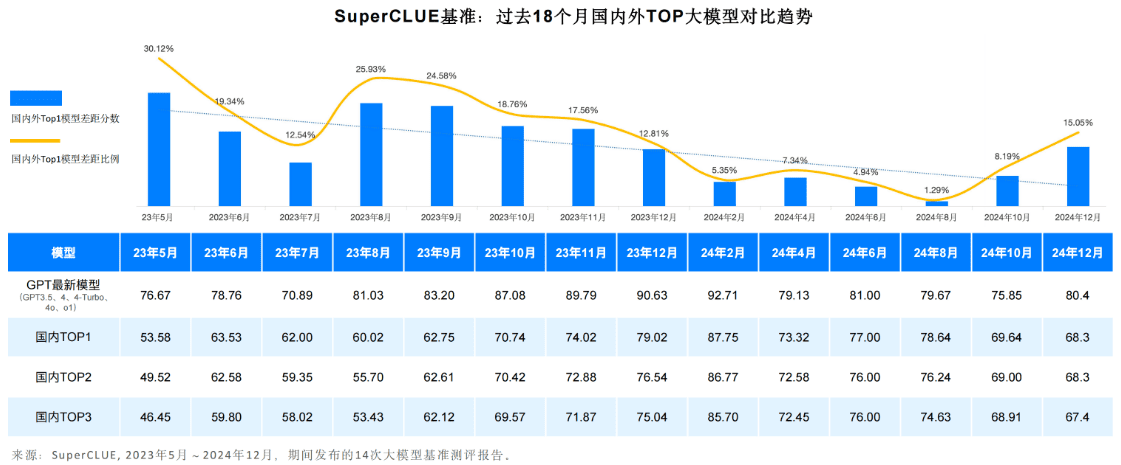

The overall trend is that the gap in general capabilities between first-tier domestic and overseas large models in the Chinese domain is widening.

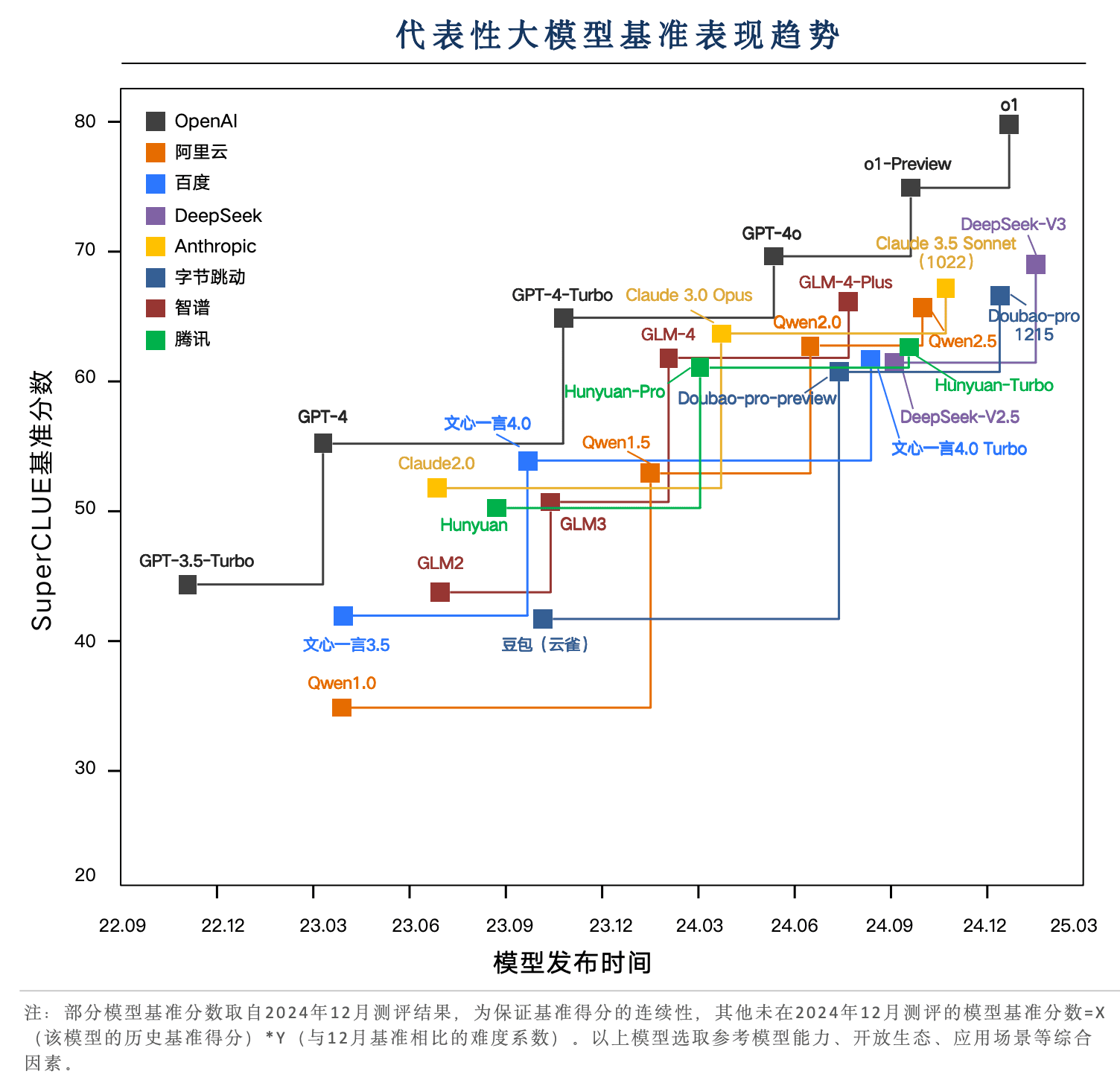

From May 2023 to the present, the capabilities of both domestic and overseas large models have continued to evolve. The best overseas models, represented by the GPT series, have gone through multiple iterations: GPT-3.5, GPT-4, GPT-4-Turbo, GPT-4o, and o1.

Domestic models also went through an uneven 18-month iteration cycle, narrowing the gap from 30.12% in May 2023 to 1.29% in August 2024. With the release of o1, however, the gap widened again to 15.05%.
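The report excerpt does not spell out how the gap percentages are computed. One plausible reading, stated here purely as an assumption, is the difference between the best overseas score and the best domestic score, relative to the overseas score. A minimal sketch under that assumption, using placeholder scores rather than figures from the report:

```python
def relative_gap_percent(best_overseas: float, best_domestic: float) -> float:
    """Gap of the domestic score relative to the overseas score, in percent.

    Assumed definition (not confirmed by the report):
        (overseas - domestic) / overseas * 100
    """
    if best_overseas <= 0:
        raise ValueError("best_overseas must be positive")
    return (best_overseas - best_domestic) * 100.0 / best_overseas


# Placeholder scores, not figures from the report.
gap = relative_gap_percent(best_overseas=80.0, best_domestic=68.0)
print(f"gap: {gap:.2f}%")
```

With this definition, a negative result would mean the domestic model scores higher than the overseas one.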

Domestic models represented by DeepSeek-V3 are getting extremely close to GPT-4o-latest

Over the past two years, representative domestic models have gone through several iterations. DeepSeek-V3, Doubao-pro, GLM-4-Plus, and Qwen2.5 have come close to GPT-4o on Chinese tasks. DeepSeek-V3 stands out, surpassing Claude 3.5 Sonnet in the December evaluation.

o1, a reasoning model based on the new reinforcement learning paradigm, breaks through 80 points and widens the gap between top domestic and overseas models

In the December SuperCLUE evaluation, the leading large models at home and abroad were concentrated in the 60-70 point range. The reasoning models o1 and o1-preview, built on the new reinforcement learning paradigm, are the main examples of breaking through the 70-point bottleneck. The official release of o1 in particular passed the 80-point mark, showing a large lead.

Key Component 6: Other Sub-Dimension Lists

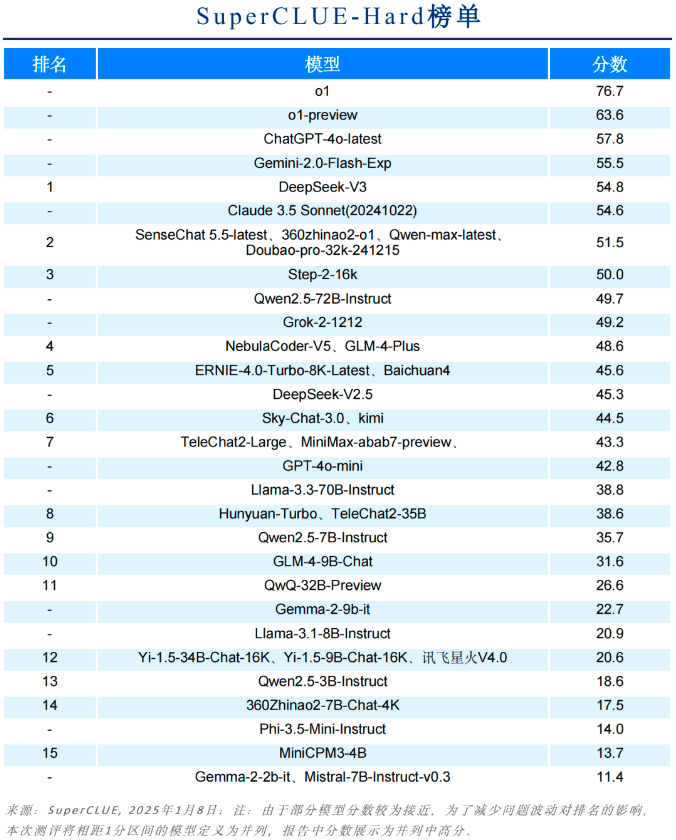

Hard List

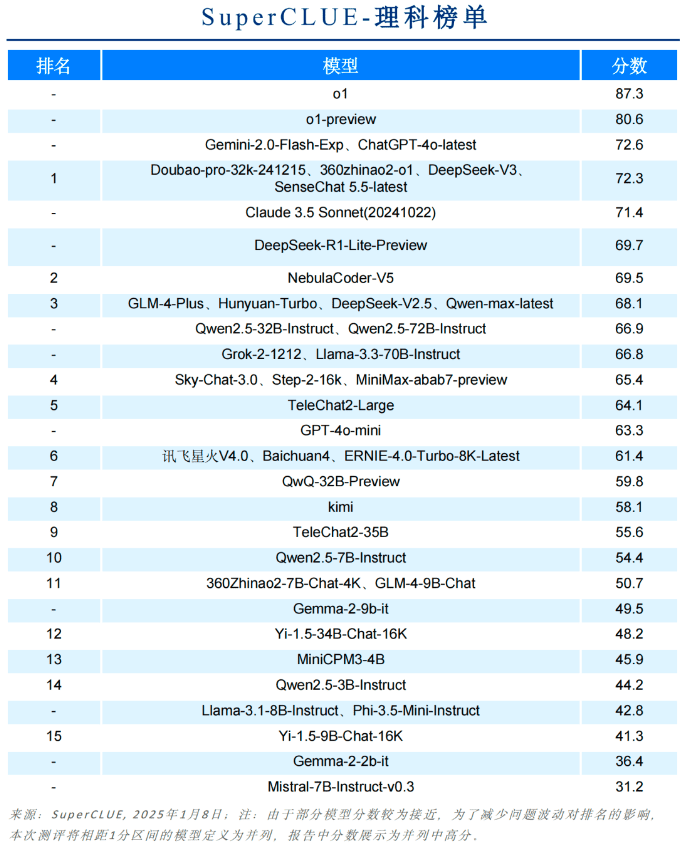

Science List

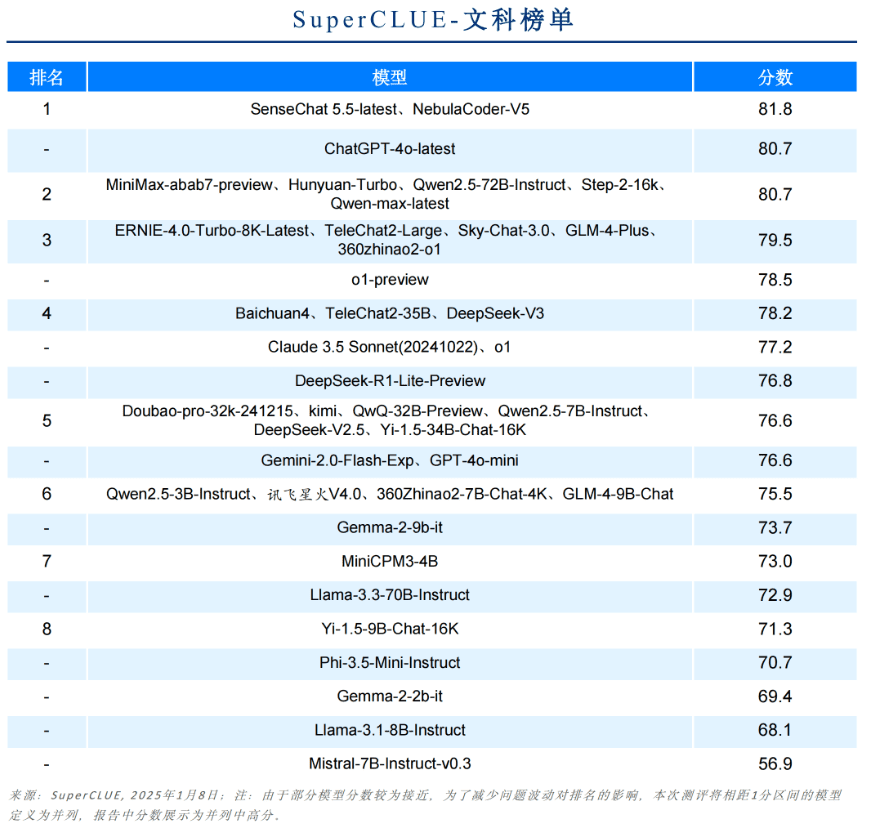

Liberal Arts List

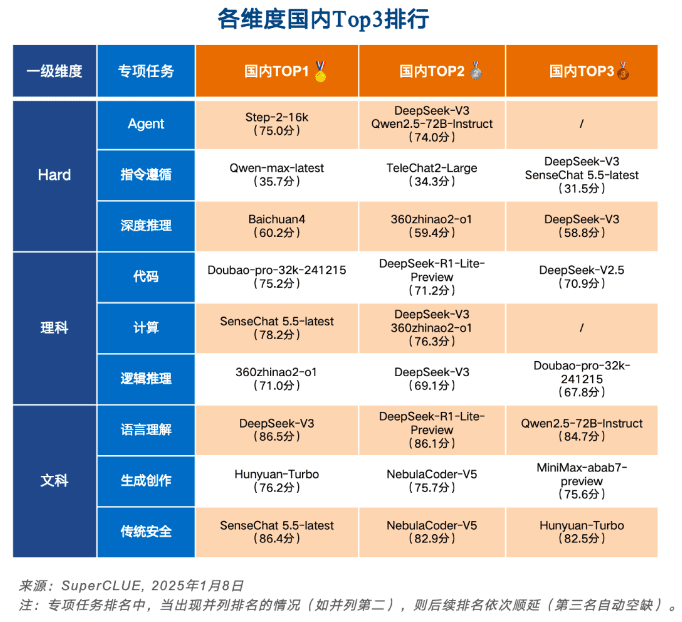

Top 3 in China for each dimension

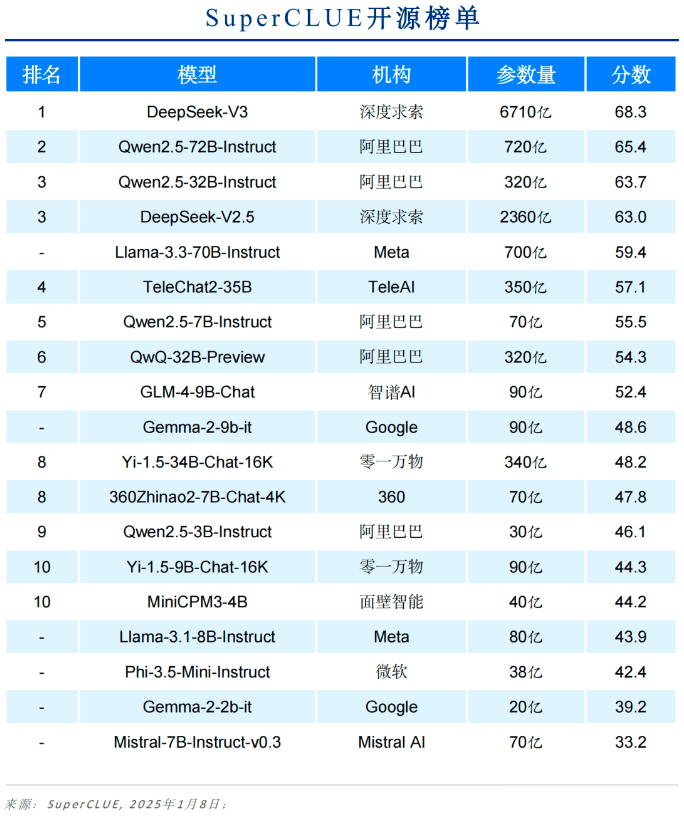

Open Source Model List

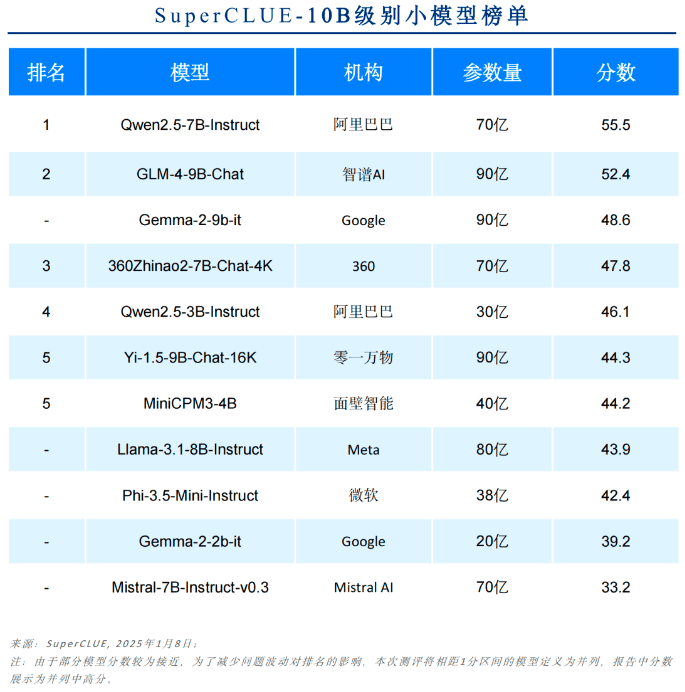

List of models up to 10B

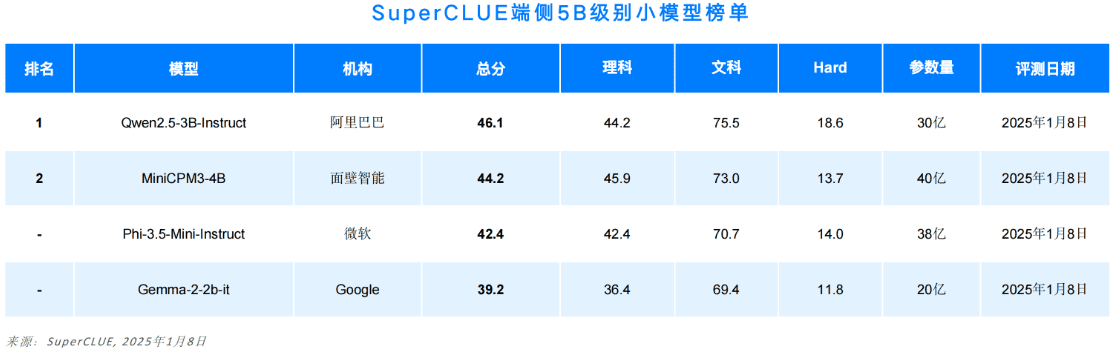

List of on-device models up to 5B

List of secondary fine-grained scores

Due to space limitations, this article shows only part of the report. The complete report includes the assessment methodology, assessment examples, sub-task lists, multimodality, applications, and an introduction to the reasoning benchmarks.

© Copyright notes

Copyright of this article belongs to AI Sharing Circle. Please do not reproduce it without permission.
