Implementação múltipla na nuvem do modelo de desenho de IA Flux.1 do projeto de código aberto para gerar 10.000 imagens gratuitas por dia

FluxoO modelo .1 está disponível há alguns meses e já se pode dizer que ele se mantém no SDXL, com o uso diário substituindo o Midjourney! Abaixo estão três soluções de código aberto que não exigem recursos locais de GPU e permitem a implantação gratuita na nuvem em poucos minutos.

Flux.1 Há muitos benefícios na implantação da nuvem, como: a implantação privada é mais segura, pode ser fornecida como um serviço aberto aos seus usuários e está sempre disponível sem a necessidade de lançamento.

Se sua necessidade for apenas gerar imagens, recomendamos que você implemente seu próprio conjunto de serviços on-line do Flux.1.

A seguir, apresentamos alguns projetos que dependem da Cloudflare para registrar sua própria conta numérica. Se você quiser acessá-la normalmente na China, precisará preparar sua própria contanome de domínio autônomoque se vincula ao cloudflare.

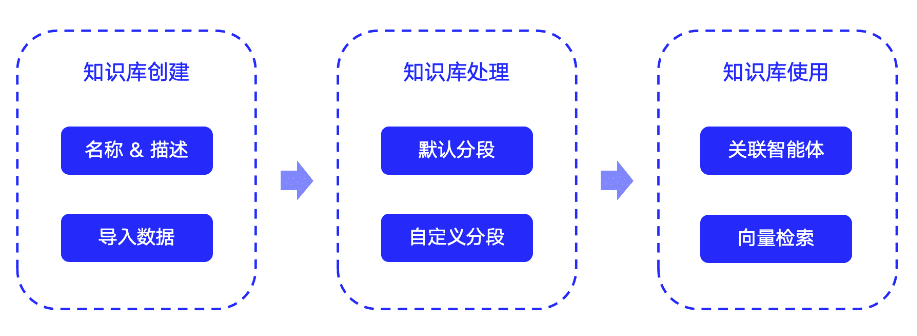

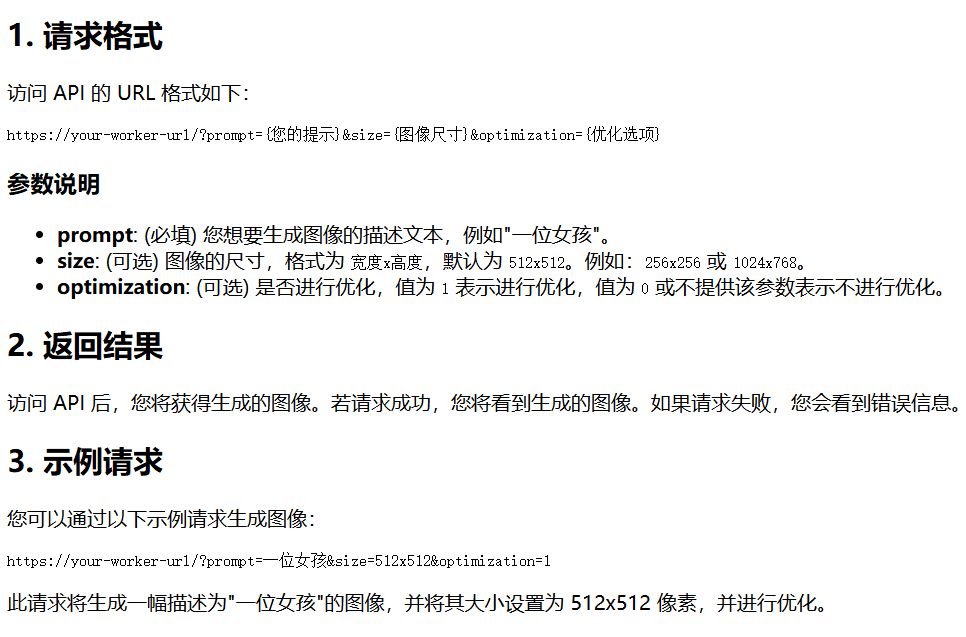

Projeto 1: Serviço gratuito e aberto Flux.1 fácil de usar, emenda de URL de palavras-chave para gerar imagens

O código desse projeto é totalmente escrito por IA, e nem mesmo o próprio autor consegue lê-lo, mas a funcionalidade implementada é muito útil e pode ser considerada extremamente recomendada entre os três projetos. Este é um artigo introdutório:A interface do White John FLUX1 foi fechada, resultando em uma grande perda de tráfego neste site... Bem, estou descobrindo como implementar a API do FLUX1 gratuitamente!.

O projeto conta com a implementação de interfaces gratuitas fornecidas pela Silicon Mobility e pela Wisdom Spectrum Clear Words, que podem ser abertas ao público como um serviço estável.

Características:

Gerar imagens por meio da emenda de prompts em URLs

Fornecer uma GUI fácil, por exemplo: https://img.kdjingpai.com/gui/

Suporte à inserção de palavras de alerta em chinês

Suporta a entrada de imagens de diferentes tamanhos

Suporte a palavras-chave expandidas e inteligentes

Implementação simples de pesquisa de várias chaves e balanceamento de carga de visitantes de front-end para implementações externas

Endereço do projeto:https://github.com/pptt121212/freefluxapi

APIs gratuitas das quais o projeto depende (solicitadas com bastante antecedência)

1. API de geração de imagens

Endereço da API: https://api.siliconflow.cn/v1/image/generations

Endereço do aplicativo: https://cloud.siliconflow.cn/

Usando o modelo:: `black-forest-labs/FLUX.1-schnell' (modelo livre)

Esteja ciente da limitação da taxa, é recomendável solicitar o polling com várias teclas.

2. API de modelo grande para gerar dicas

Endereço da API: https://open.bigmodel.cn/api/paas/v4/chat/completions

Endereço do aplicativo: https://open.bigmodel.cn/

Usando o modelo:: `glm-4-flash` (modelo livre)

Guia de implantação

1. dependências

Verifique se você tem uma conta na Cloudflare e se pode adicionar Workers corretamente.

2) Configurar o projeto

Faça login no painel de controle da Cloudflare e crie um novo trabalhador.

3. cole e salve o código do Worker.

comandante-em-chefe (militar)workers.jsO código é copiado e colado no editor do Worker.

Clique no botão "Save and Deploy" (Salvar e implantar) para salvar e implantar seu trabalhador.

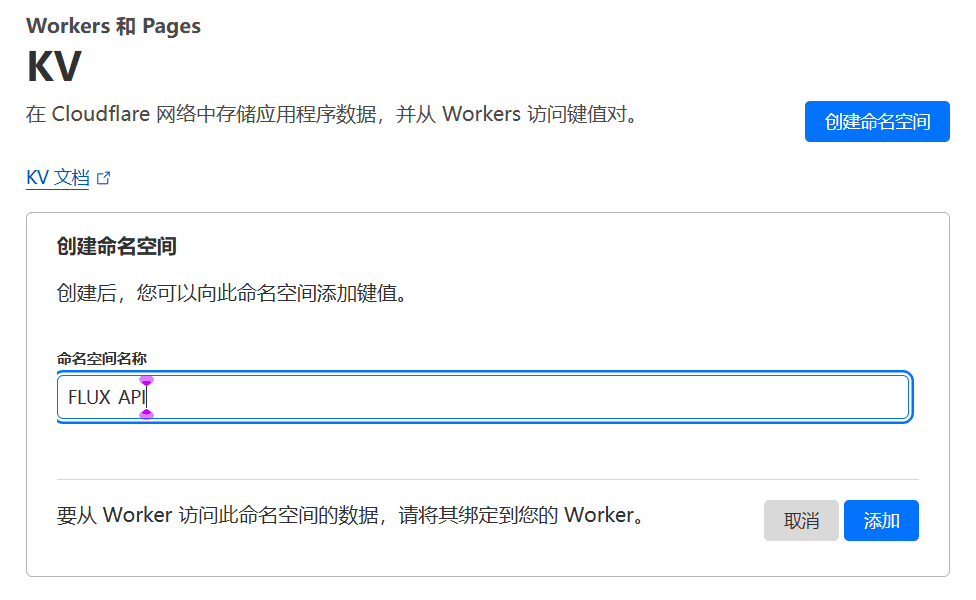

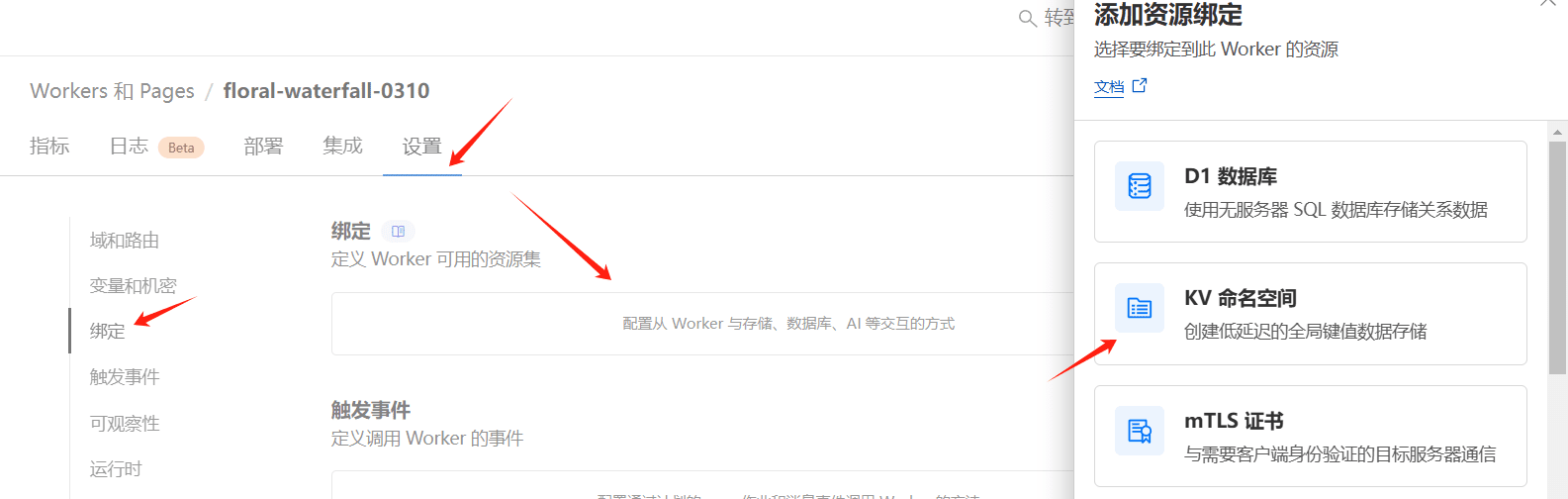

4. configurar o espaço KV(não essencial)

O objetivo dessa configuração de serviço é salvar um endereço de acesso de imagem fixo para a imagem temporária gerada; o usuário gera uma imagem e, em seguida, atualiza a URL e ainda vê a imagem antiga (não há necessidade de um leito de imagem, porque a API do Silicon Mobility retorna a URL da imagem original, portanto, naturalmente, você não quer um leito de imagem).

A desvantagem é que ele ocupa um número limitado de leituras e gravações no espaço KV, portanto, os usuários gratuitos não devem usá-lo ou não devem configurá-lo.

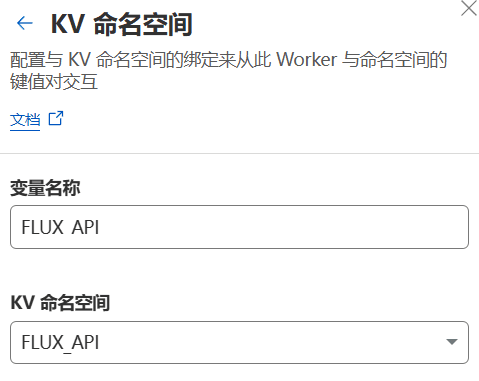

Criação de namespaces KV no Cloudflare Workers: FLUX_API

Também vincule esse nome de variável de espaço KV em woroers para escrever FLUX_API

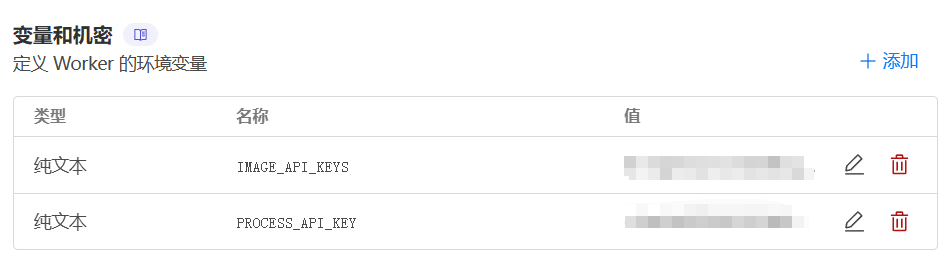

5. adição de variáveis

IMAGE_API_KEYS

Preencha a "API de geração de imagens" pré-solicitada aqui.

Várias KEYs podem ser inseridas, polling suportado, formato key1,key2,key3

PROCESS_API_KEY

Preencha o pré-solicitado "Big Model API for Cue Word Generation" aqui.

Digite uma KEY

As instruções de uso podem ser encontradas em: https://img.kdjingpai.com/

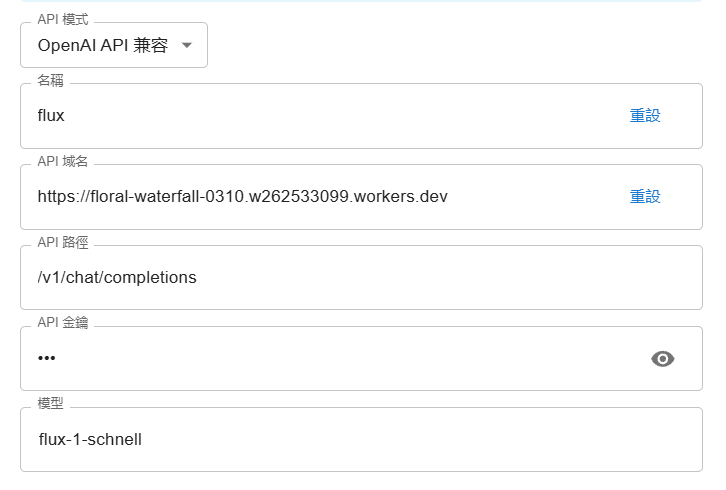

Projeto 2: API de geração de imagens Flux.1 que depende apenas do Cloudflare e é compatível com o formato de interface OPENAI

Características:

Qualquer endereço de API em formato openai pode ser preenchido para usar o modelo personalizado (o acesso à new-api é aberto ao público como uma API de geração de imagens)

Suporte para armazenamento temporário de imagens

Preparativos antes da implantação

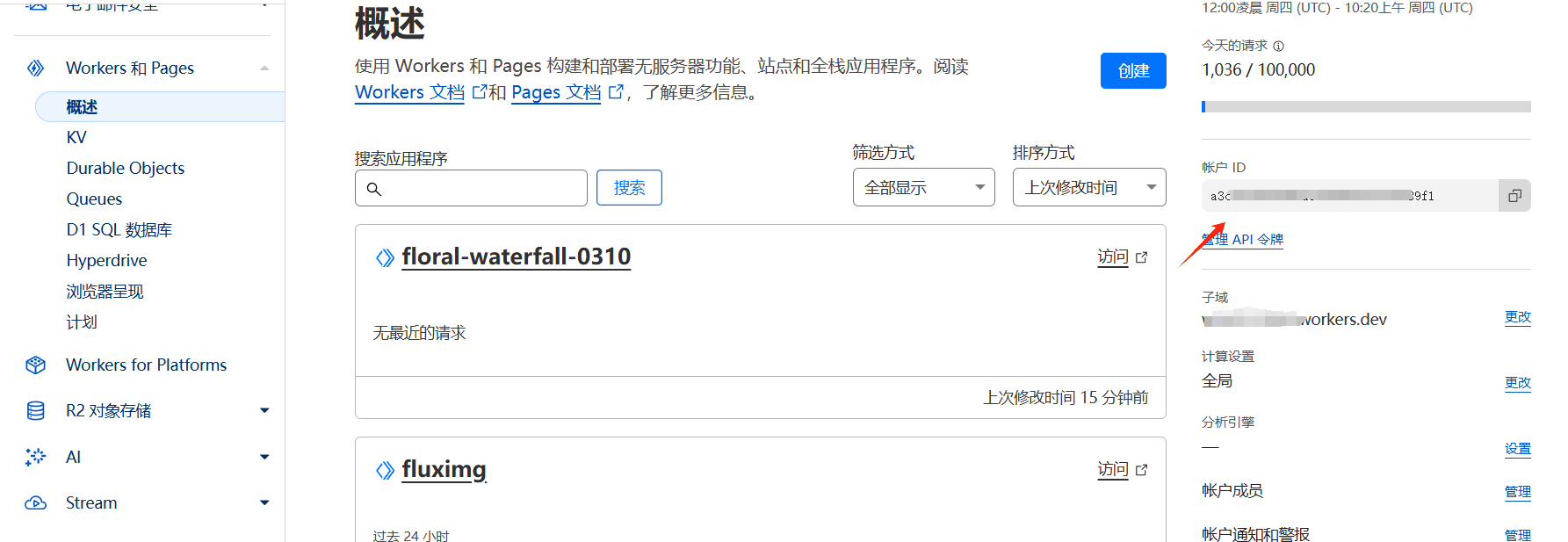

Registre o ID da conta do cloudeflare

Essa cadeia de caracteres no URL após o login no cloudeflare também é o ID da conta, o mesmo que o obtido acima

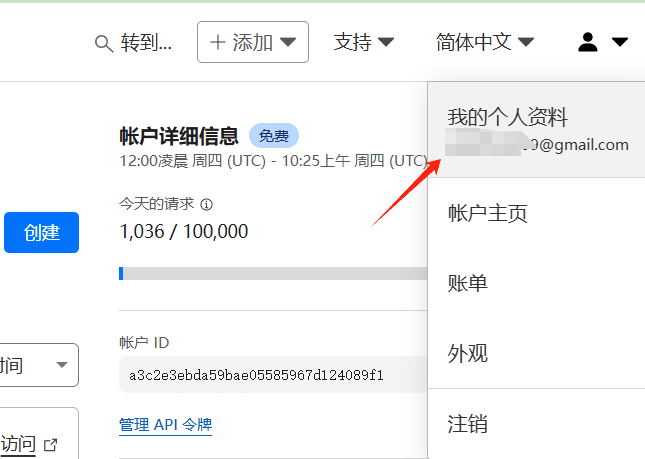

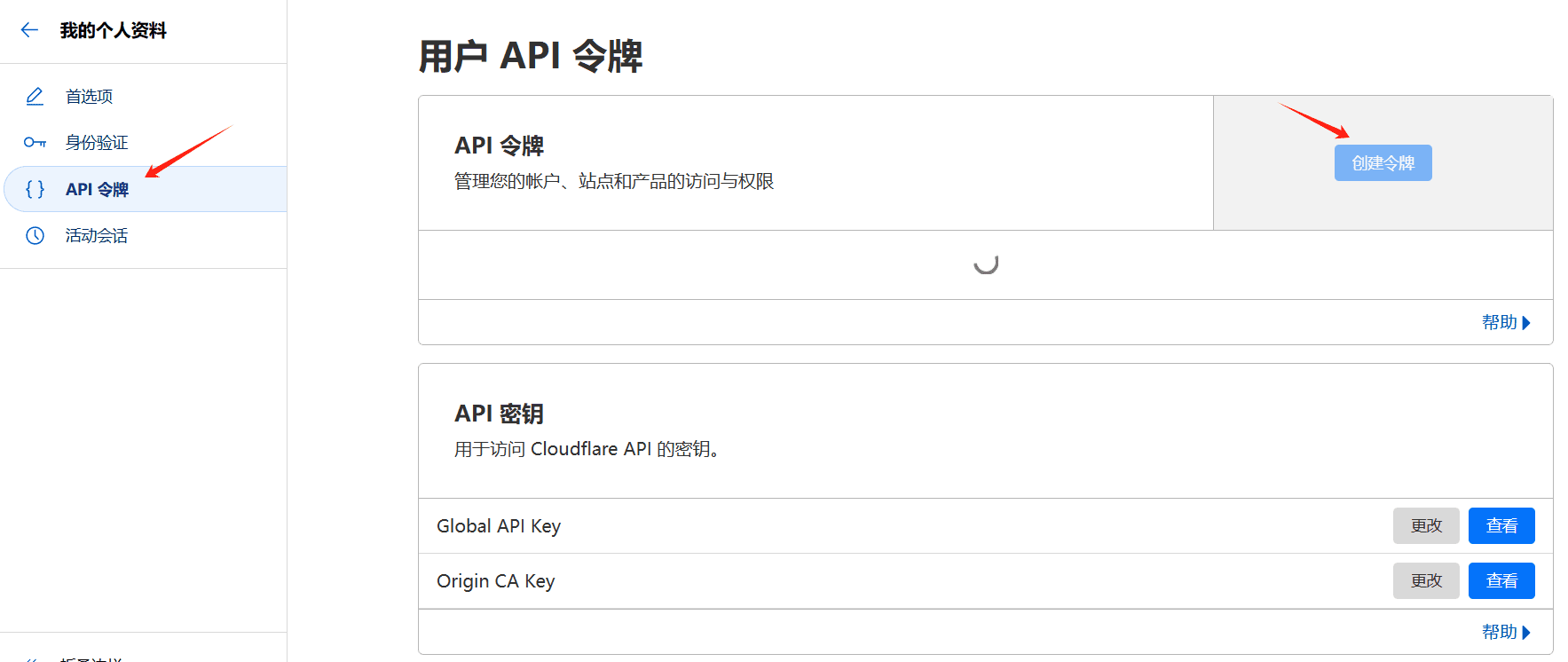

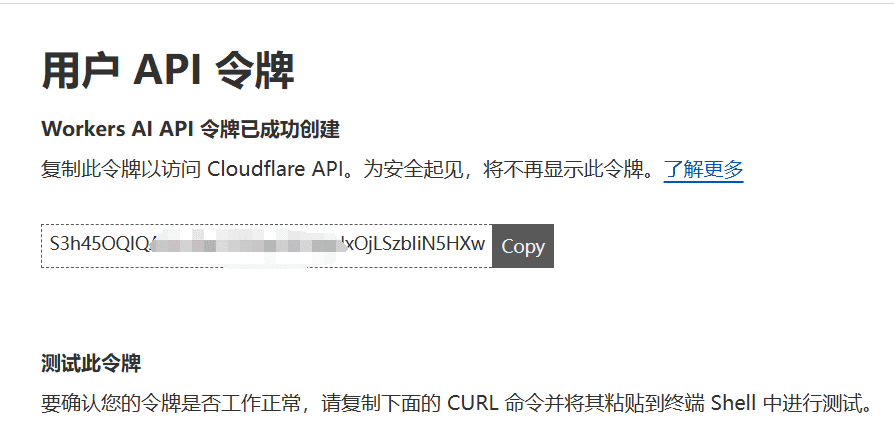

Registro do token da API do cloudeflare

Clique em Profile (Perfil) no canto superior direito

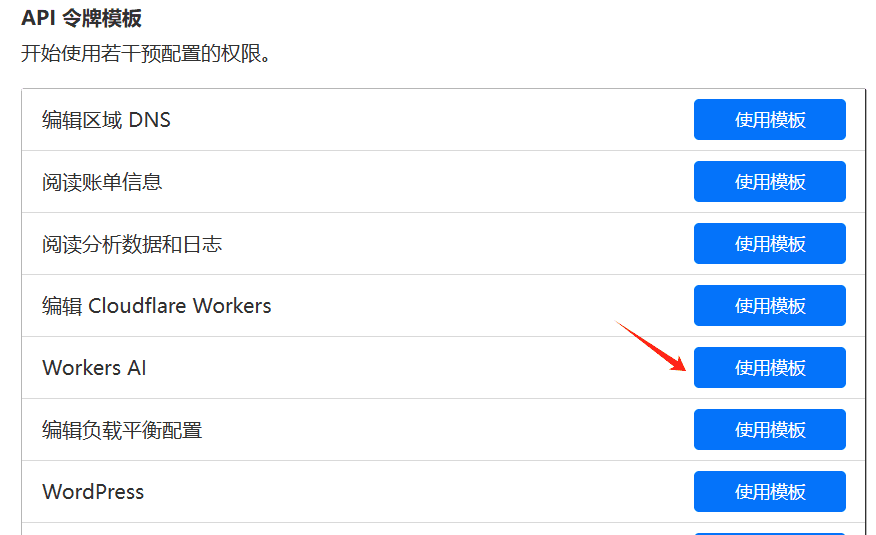

Clique em API Token e, em seguida, clique em Create Token

Selecionar o modelo de IA dos trabalhadores

Trabalhe para obter o token. Talvez você precise de recursos de conta no meio, apenas todas as contas aleatoriamente.

Código dos trabalhadores a ser implantado

// 配置

const CONFIG = {

API_KEY: "xxx", // 对外验证key

CF_ACCOUNT_LIST: [{ account_id: "xxx", token: "xxx" }], // 换成自己的,可以多个号随机调用

CF_IS_TRANSLATE: true, // 是否启用提示词AI翻译及优化,关闭后将会把提示词直接发送给绘图模型

CF_TRANSLATE_MODEL: "@cf/qwen/qwen1.5-14b-chat-awq", // 使用的cf ai模型

USE_EXTERNAL_API: false, // 是否使用自定义API,开启后将使用外部模型生成提示词,需要填写下面三项

EXTERNAL_API: "", //自定义API地址,例如:https://xxx.com/v1/chat/completions

EXTERNAL_MODEL: "", // 模型名称,例如:gpt-4o

EXTERNAL_API_KEY: "", // API密钥

FLUX_NUM_STEPS: 4, // Flux模型的num_steps参数,范围:4-8

CUSTOMER_MODEL_MAP: {

// "SD-1.5-Inpainting-CF": "@cf/runwayml/stable-diffusion-v1-5-inpainting", // 不知道是哪里有问题,先禁用了

"DS-8-CF": "@cf/lykon/dreamshaper-8-lcm",

"SD-XL-Bash-CF": "@cf/stabilityai/stable-diffusion-xl-base-1.0",

"SD-XL-Lightning-CF": "@cf/bytedance/stable-diffusion-xl-lightning",

"FLUX.1-Schnell-CF": "@cf/black-forest-labs/flux-1-schnell"

},

IMAGE_EXPIRATION: 60 * 30 // 图片在 KV 中的过期时间(秒),这里设置为 30 分钟

};

// 主处理函数

async function handleRequest(request) {

if (request.method === "OPTIONS") {

return handleCORS();

}

if (!isAuthorized(request)) {

return new Response("Unauthorized", { status: 401 });

}

const url = new URL(request.url);

if (url.pathname.endsWith("/v1/models")) {

return handleModelsRequest();

}

if (request.method !== "POST" || !url.pathname.endsWith("/v1/chat/completions")) {

return new Response("Not Found", { status: 404 });

}

return handleChatCompletions(request);

}

// 处理CORS预检请求

function handleCORS() {

return new Response(null, {

status: 204,

headers: {

'Access-Control-Allow-Origin': '*',

'Access-Control-Allow-Methods': 'GET, POST, OPTIONS',

'Access-Control-Allow-Headers': 'Content-Type, Authorization'

}

});

}

// 验证授权

function isAuthorized(request) {

const authHeader = request.headers.get("Authorization");

return authHeader && authHeader.startsWith("Bearer ") && authHeader.split(" ")[1] === CONFIG.API_KEY;

}

// 处理模型列表请求

function handleModelsRequest() {

const models = Object.keys(CONFIG.CUSTOMER_MODEL_MAP).map(id => ({ id, object: "model" }));

return new Response(JSON.stringify({ data: models, object: "list" }), {

headers: {

'Content-Type': 'application/json',

'Access-Control-Allow-Origin': '*'

}

});

}

// 处理聊天完成请求

async function handleChatCompletions(request) {

try {

const data = await request.json();

const { messages, model: requestedModel, stream } = data;

const userMessage = messages.find(msg => msg.role === "user")?.content;

if (!userMessage) {

return new Response(JSON.stringify({ error: "未找到用户消息" }), { status: 400, headers: { 'Content-Type': 'application/json' } });

}

const isTranslate = extractTranslate(userMessage);

const originalPrompt = cleanPromptString(userMessage);

const model = CONFIG.CUSTOMER_MODEL_MAP[requestedModel] || CONFIG.CUSTOMER_MODEL_MAP["SD-XL-Lightning-CF"];

// 确定使用哪个模型生成提示词

const promptModel = determinePromptModel();

const translatedPrompt = isTranslate ?

(model === CONFIG.CUSTOMER_MODEL_MAP["FLUX.1-Schnell-CF"] ?

await getFluxPrompt(originalPrompt, promptModel) :

await getPrompt(originalPrompt, promptModel)) :

originalPrompt;

const imageUrl = model === CONFIG.CUSTOMER_MODEL_MAP["FLUX.1-Schnell-CF"] ?

await generateAndStoreFluxImage(model, translatedPrompt, request.url) :

await generateAndStoreImage(model, translatedPrompt, request.url);

return stream ?

handleStreamResponse(originalPrompt, translatedPrompt, "1024x1024", model, imageUrl, promptModel) :

handleNonStreamResponse(originalPrompt, translatedPrompt, "1024x1024", model, imageUrl, promptModel);

} catch (error) {

return new Response(JSON.stringify({ error: "Internal Server Error: " + error.message }), { status: 500, headers: { 'Content-Type': 'application/json' } });

}

}

function determinePromptModel() {

return (CONFIG.USE_EXTERNAL_API && CONFIG.EXTERNAL_API && CONFIG.EXTERNAL_MODEL && CONFIG.EXTERNAL_API_KEY) ?

CONFIG.EXTERNAL_MODEL : CONFIG.CF_TRANSLATE_MODEL;

}

// 获取翻译后的提示词

async function getPrompt(prompt, model) {

const requestBody = {

messages: [

{

role: "system",

content: `作为 Stable Diffusion Prompt 提示词专家,您将从关键词中创建提示,通常来自 Danbooru 等数据库。

提示通常描述图像,使用常见词汇,按重要性排列,并用逗号分隔。避免使用"-"或".",但可以接受空格和自然语言。避免词汇重复。

为了强调关键词,请将其放在括号中以增加其权重。例如,"(flowers)"将'flowers'的权重增加1.1倍,而"(((flowers)))"将其增加1.331倍。使用"(flowers:1.5)"将'flowers'的权重增加1.5倍。只为重要的标签增加权重。

提示包括三个部分:**前缀** (质量标签+风格词+效果器)+ **主题** (图像的主要焦点)+ **场景** (背景、环境)。

* 前缀影响图像质量。像"masterpiece"、"best quality"、"4k"这样的标签可以提高图像的细节。像"illustration"、"lensflare"这样的风格词定义图像的风格。像"bestlighting"、"lensflare"、"depthoffield"这样的效果器会影响光照和深度。

* 主题是图像的主要焦点,如角色或场景。对主题进行详细描述可以确保图像丰富而详细。增加主题的权重以增强其清晰度。对于角色,描述面部、头发、身体、服装、姿势等特征。

* 场景描述环境。没有场景,图像的背景是平淡的,主题显得过大。某些主题本身包含场景(例如建筑物、风景)。像"花草草地"、"阳光"、"河流"这样的环境词可以丰富场景。你的任务是设计图像生成的提示。请按照以下步骤进行操作:

1. 我会发送给您一个图像场景。需要你生成详细的图像描述

2. 图像描述必须是英文,输出为Positive Prompt。

示例:

我发送:二战时期的护士。

您回复只回复:

A WWII-era nurse in a German uniform, holding a wine bottle and stethoscope, sitting at a table in white attire, with a table in the background, masterpiece, best quality, 4k, illustration style, best lighting, depth of field, detailed character, detailed environment.`

},

{ role: "user", content: prompt }

],

model: CONFIG.EXTERNAL_MODEL

};

if (model === CONFIG.EXTERNAL_MODEL) {

return await getExternalPrompt(requestBody);

} else {

return await getCloudflarePrompt(CONFIG.CF_TRANSLATE_MODEL, requestBody);

}

}

// 获取 Flux 模型的翻译后的提示词

async function getFluxPrompt(prompt, model) {

const requestBody = {

messages: [

{

role: "system",

content: `你是一个基于Flux.1模型的提示词生成机器人。根据用户的需求,自动生成符合Flux.1格式的绘画提示词。虽然你可以参考提供的模板来学习提示词结构和规律,但你必须具备灵活性来应对各种不同需求。最终输出应仅限提示词,无需任何其他解释或信息。你的回答必须全部使用英语进行回复我!

### **提示词生成逻辑**:

1. **需求解析**:从用户的描述中提取关键信息,包括:

- 角色:外貌、动作、表情等。

- 场景:环境、光线、天气等。

- 风格:艺术风格、情感氛围、配色等。

- 其他元素:特定物品、背景或特效。

2. **提示词结构规律**:

- **简洁、精确且具象**:提示词需要简单、清晰地描述核心对象,并包含足够细节以引导生成出符合需求的图像。

- **灵活多样**:参考下列模板和已有示例,但需根据具体需求生成多样化的提示词,避免固定化或过于依赖模板。

- **符合Flux.1风格的描述**:提示词必须遵循Flux.1的要求,尽量包含艺术风格、视觉效果、情感氛围的描述,使用与Flux.1模型生成相符的关键词和描述模式。

3. **仅供你参考和学习的几种场景提示词**(你需要学习并灵活调整,"[ ]"中内容视用户问题而定):

- **角色表情集**:

场景说明:适合动画或漫画创作者为角色设计多样的表情。这些提示词可以生成展示同一角色在不同情绪下的表情集,涵盖快乐、悲伤、愤怒等多种情感。

提示词:An anime [SUBJECT], animated expression reference sheet, character design, reference sheet, turnaround, lofi style, soft colors, gentle natural linework, key art, range of emotions, happy sad mad scared nervous embarrassed confused neutral, hand drawn, award winning anime, fully clothed

[SUBJECT] character, animation expression reference sheet with several good animation expressions featuring the same character in each one, showing different faces from the same person in a grid pattern: happy sad mad scared nervous embarrassed confused neutral, super minimalist cartoon style flat muted kawaii pastel color palette, soft dreamy backgrounds, cute round character designs, minimalist facial features, retro-futuristic elements, kawaii style, space themes, gentle line work, slightly muted tones, simple geometric shapes, subtle gradients, oversized clothing on characters, whimsical, soft puffy art, pastels, watercolor

- **全角度角色视图**:

场景说明:当需要从现有角色设计中生成不同角度的全身图时,如正面、侧面和背面,适用于角色设计细化或动画建模。

提示词:A character sheet of [SUBJECT] in different poses and angles, including front view, side view, and back view

- **80 年代复古风格**:

场景说明:适合希望创造 80 年代复古风格照片效果的艺术家或设计师。这些提示词可以生成带有怀旧感的模糊宝丽来风格照片。

提示词:blurry polaroid of [a simple description of the scene], 1980s.

- **智能手机内部展示**:

场景说明:适合需要展示智能手机等产品设计的科技博客作者或产品设计师。这些提示词帮助生成展示手机外观和屏幕内容的图像。

提示词:a iphone product image showing the iphone standing and inside the screen the image is shown

- **双重曝光效果**:

场景说明:适合摄影师或视觉艺术家通过双重曝光技术创造深度和情感表达的艺术作品。

提示词:[Abstract style waterfalls, wildlife] inside the silhouette of a [man]’s head that is a double exposure photograph . Non-representational, colors and shapes, expression of feelings, imaginative, highly detailed

- **高质感电影海报**:

场景说明:适合需要为电影创建引人注目海报的电影宣传或平面设计师。

提示词:A digital illustration of a movie poster titled [‘Sad Sax: Fury Toad’], [Mad Max] parody poster, featuring [a saxophone-playing toad in a post-apocalyptic desert, with a customized car made of musical instruments], in the background, [a wasteland with other musical vehicle chases], movie title in [a gritty, bold font, dusty and intense color palette].

- **镜面自拍效果**:

场景说明:适合想要捕捉日常生活瞬间的摄影师或社交媒体用户。

提示词:Phone photo: A woman stands in front of a mirror, capturing a selfie. The image quality is grainy, with a slight blur softening the details. The lighting is dim, casting shadows that obscure her features. [The room is cluttered, with clothes strewn across the bed and an unmade blanket. Her expression is casual, full of concentration], while the old iPhone struggles to focus, giving the photo an authentic, unpolished feel. The mirror shows smudges and fingerprints, adding to the raw, everyday atmosphere of the scene.

- **像素艺术创作**:

场景说明:适合像素艺术爱好者或复古游戏开发者创造或复刻经典像素风格图像。

提示词:[Anything you want] pixel art style, pixels, pixel art

- **以上部分场景仅供你学习,一定要学会灵活变通,以适应任何绘画需求**:

4. **Flux.1提示词要点总结**:

- **简洁精准的主体描述**:明确图像中核心对象的身份或场景。

- **风格和情感氛围的具体描述**:确保提示词包含艺术风格、光线、配色、以及图像的氛围等信息。

- **动态与细节的补充**:提示词可包括场景中的动作、情绪、或光影效果等重要细节。

- **其他更多规律请自己寻找**

---

**问答案例1**:

**用户输入**:一个80年代复古风格的照片。

**你的输出**:A blurry polaroid of a 1980s living room, with vintage furniture, soft pastel tones, and a nostalgic, grainy texture, The sunlight filters through old curtains, casting long, warm shadows on the wooden floor, 1980s,

**问答案例2**:

**用户输入**:一个赛博朋克风格的夜晚城市背景

**你的输出**:A futuristic cityscape at night, in a cyberpunk style, with neon lights reflecting off wet streets, towering skyscrapers, and a glowing, high-tech atmosphere. Dark shadows contrast with vibrant neon signs, creating a dramatic, dystopian mood`

},

{ role: "user", content: prompt }

],

model: CONFIG.EXTERNAL_MODEL

};

if (model === CONFIG.EXTERNAL_MODEL) {

return await getExternalPrompt(requestBody);

} else {

return await getCloudflarePrompt(CONFIG.CF_TRANSLATE_MODEL, requestBody);

}

}

// 从外部API获取提示词

async function getExternalPrompt(requestBody) {

try {

const response = await fetch(CONFIG.EXTERNAL_API, {

method: 'POST',

headers: {

'Authorization': `Bearer ${CONFIG.EXTERNAL_API_KEY}`,

'Content-Type': 'application/json'

},

body: JSON.stringify(requestBody)

});

if (!response.ok) {

throw new Error(`External API request failed with status ${response.status}`);

}

const jsonResponse = await response.json();

if (!jsonResponse.choices || jsonResponse.choices.length === 0 || !jsonResponse.choices[0].message) {

throw new Error('Invalid response format from external API');

}

return jsonResponse.choices[0].message.content;

} catch (error) {

console.error('Error in getExternalPrompt:', error);

// 如果外部API失败,回退到使用原始提示词

return requestBody.messages[1].content;

}

}

// 从Cloudflare获取提示词

async function getCloudflarePrompt(model, requestBody) {

const response = await postRequest(model, requestBody);

if (!response.ok) return requestBody.messages[1].content;

const jsonResponse = await response.json();

return jsonResponse.result.response;

}

// 生成图像并存储到 KV

async function generateAndStoreImage(model, prompt, requestUrl) {

try {

const jsonBody = { prompt, num_steps: 20, guidance: 7.5, strength: 1, width: 1024, height: 1024 };

const response = await postRequest(model, jsonBody);

const imageBuffer = await response.arrayBuffer();

const key = `image_${Date.now()}_${Math.random().toString(36).substring(7)}`;

await IMAGE_KV.put(key, imageBuffer, {

expirationTtl: CONFIG.IMAGE_EXPIRATION,

metadata: { contentType: 'image/png' }

});

return `${new URL(requestUrl).origin}/image/${key}`;

} catch (error) {

throw new Error("图像生成失败: " + error.message);

}

}

// 使用 Flux 模型生成并存储图像

async function generateAndStoreFluxImage(model, prompt, requestUrl) {

try {

const jsonBody = { prompt, num_steps: CONFIG.FLUX_NUM_STEPS };

const response = await postRequest(model, jsonBody);

const jsonResponse = await response.json();

const base64ImageData = jsonResponse.result.image;

const imageBuffer = base64ToArrayBuffer(base64ImageData);

const key = `image_${Date.now()}_${Math.random().toString(36).substring(7)}`;

await IMAGE_KV.put(key, imageBuffer, {

expirationTtl: CONFIG.IMAGE_EXPIRATION,

metadata: { contentType: 'image/png' }

});

return `${new URL(requestUrl).origin}/image/${key}`;

} catch (error) {

throw new Error("Flux图像生成失败: " + error.message);

}

}

// 处理流式响应

function handleStreamResponse(originalPrompt, translatedPrompt, size, model, imageUrl, promptModel) {

const content = generateResponseContent(originalPrompt, translatedPrompt, size, model, imageUrl, promptModel);

const encoder = new TextEncoder();

const stream = new ReadableStream({

start(controller) {

controller.enqueue(encoder.encode(`data: ${JSON.stringify({

id: `chatcmpl-${Date.now()}`,

object: "chat.completion.chunk",

created: Math.floor(Date.now() / 1000),

model: model,

choices: [{ delta: { content: content }, index: 0, finish_reason: null }]

})}\n\n`));

controller.enqueue(encoder.encode('data: [DONE]\n\n'));

controller.close();

}

});

return new Response(stream, {

headers: {

"Content-Type": "text/event-stream",

'Access-Control-Allow-Origin': '*',

"Cache-Control": "no-cache",

"Connection": "keep-alive"

}

});

}

// 处理非流式响应

function handleNonStreamResponse(originalPrompt, translatedPrompt, size, model, imageUrl, promptModel) {

const content = generateResponseContent(originalPrompt, translatedPrompt, size, model, imageUrl, promptModel);

const response = {

id: `chatcmpl-${Date.now()}`,

object: "chat.completion",

created: Math.floor(Date.now() / 1000),

model: model,

choices: [{

index: 0,

message: { role: "assistant", content },

finish_reason: "stop"

}],

usage: {

prompt_tokens: translatedPrompt.length,

completion_tokens: content.length,

total_tokens: translatedPrompt.length + content.length

}

};

return new Response(JSON.stringify(response), {

headers: {

'Content-Type': 'application/json',

'Access-Control-Allow-Origin': '*'

}

});

}

// 生成响应内容

function generateResponseContent(originalPrompt, translatedPrompt, size, model, imageUrl, promptModel) {

return `🎨 原始提示词:${originalPrompt}\n` +

`💬 提示词生成模型:${promptModel}\n` +

`🌐 翻译后的提示词:${translatedPrompt}\n` +

`📐 图像规格:${size}\n` +

`🖼️ 绘图模型:${model}\n` +

`🌟 图像生成成功!\n` +

`以下是结果:\n\n` +

``;

}

// 发送POST请求

async function postRequest(model, jsonBody) {

const cf_account = CONFIG.CF_ACCOUNT_LIST[Math.floor(Math.random() * CONFIG.CF_ACCOUNT_LIST.length)];

const apiUrl = `https://api.cloudflare.com/client/v4/accounts/${cf_account.account_id}/ai/run/${model}`;

const response = await fetch(apiUrl, {

method: 'POST',

headers: {

'Authorization': `Bearer ${cf_account.token}`,

'Content-Type': 'application/json'

},

body: JSON.stringify(jsonBody)

});

if (!response.ok) {

throw new Error('Cloudflare API request failed: ' + response.status);

}

return response;

}

// 提取翻译标志

function extractTranslate(prompt) {

const match = prompt.match(/---n?tl/);

return match ? match[0] === "---tl" : CONFIG.CF_IS_TRANSLATE;

}

// 清理提示词字符串

function cleanPromptString(prompt) {

return prompt.replace(/---n?tl/, "").trim();

}

// 处理图片请求

async function handleImageRequest(request) {

const url = new URL(request.url);

const key = url.pathname.split('/').pop();

const imageData = await IMAGE_KV.get(key, 'arrayBuffer');

if (!imageData) {

return new Response('Image not found', { status: 404 });

}

return new Response(imageData, {

headers: {

'Content-Type': 'image/png',

'Cache-Control': 'public, max-age=604800',

},

});

}

// base64 字符串转换为 ArrayBuffer

function base64ToArrayBuffer(base64) {

const binaryString = atob(base64);

const bytes = new Uint8Array(binaryString.length);

for (let i = 0; i < binaryString.length; i++) {

bytes[i] = binaryString.charCodeAt(i);

}

return bytes.buffer;

}

addEventListener('fetch', event => {

const url = new URL(event.request.url);

if (url.pathname.startsWith('/image/')) {

event.respondWith(handleImageRequest(event.request));

} else {

event.respondWith(handleRequest(event.request));

}

});

Parâmetro Descrição da configuração

API_KEY: como a KEY da autenticação externa do serviço de API, definida por você mesmo

account_id: "xxx", token: "xxx": xx é a ID da conta coudeflare obtida e o token da API

Consulte as observações do código para obter outros parâmetros e observe aqui que oParâmetros USE_EXTERNAL_APIComo a API de modelo grande usa o cloudeflare por padrão, a quantidade é muito pequena, é recomendável consultar o item um e solicitar o glm-4-flash gratuito separadamente.

Criar espaço KV

O espaço KV livre é gravado 1.000 vezes por dia e lido 100.000 vezes por dia, o que pode não ser suficiente para ficar de olho.

Lembre-se de criar um espaço KV chamado IMAGE_KV para vincular-se ao trabalhador quando usá-lo. Consulte a captura de tela da configuração do projeto um.

Como a API funciona

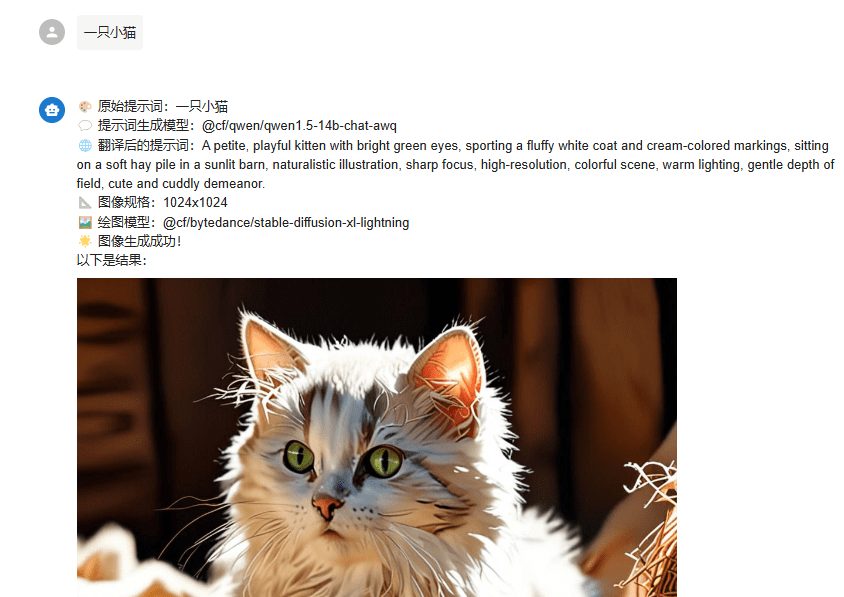

Software de bate-papo compatível e que permite o preenchimento de APIs openai personalizadas. PróximoChat talvez caixa de bate-papo Ambos, usando a versão web do chatbox como exemplo:

Geração de efeitos

Projeto 3: personalizar a interface da API de geração de imagens para camas de imagens externas para uso pessoal

O processo de implantação é mais ou menos o mesmo de antes, portanto, não vou explicá-lo

Características:

Camadas gráficas personalizadas, necessária autoaplicação: https://sm.ms

Código:

//本项目授权api_key,防止被恶意调用

const API_KEY = "sk-1234567890";

//https://sm.ms 图床key,可自行申请,为空则返回base64编码后的图片

const SMMS_API_KEY = '';

//cloudflare账号列表,每次请求都会随机从列表里取一个账号

const CF_ACCOUNT_LIST = [

{ account_id: "xxxx", token: "xxxx" }

];

//在你输入的prompt中添加 ---ntl可强制禁止提示词翻译、优化功能

//在你输入的prompt中添加 ---tl可强制开启提示词翻译、优化功能

//是否开启提示词翻译、优化功能

const CF_IS_TRANSLATE = true;

//示词翻译、优化模型

const CF_TRANSLATE_MODEL = "@cf/qwen/qwen1.5-14b-chat-awq";

//模型映射,设置客户端可用的模型。one-api,new-api在添加渠道时可使用“获取模型列表”功能,一键添加模型

const CUSTOMER_MODEL_MAP = {

"dreamshaper-8": "@cf/lykon/dreamshaper-8-lcm",

"stable-diffusion-xl-base-cf": "@cf/stabilityai/stable-diffusion-xl-base-1.0",

"stable-diffusion-xl-lightning-cf": "@cf/bytedance/stable-diffusion-xl-lightning"

};

async function handleRequest(request) {

try {

if (request.method === "OPTIONS") {

return new Response("", {

status: 204,

headers: {

'Access-Control-Allow-Origin': '*',

"Access-Control-Allow-Headers": '*'

}

});

}

const authHeader = request.headers.get("Authorization");

if (!authHeader || !authHeader.startsWith("Bearer ") || authHeader.split(" ")[1] !== API_KEY) {

return new Response("Unauthorized", { status: 401 });

}

if (request.url.endsWith("/v1/models")) {

const arrs = [];

Object.keys(CUSTOMER_MODEL_MAP).map(element => arrs.push({ id: element, object: "model" }))

const response = {

data: arrs,

success: true

};

return new Response(JSON.stringify(response), {

headers: {

'Content-Type': 'application/json',

'Access-Control-Allow-Origin': '*',

'Access-Control-Allow-Headers': '*'

}

});

}

if (request.method !== "POST") {

return new Response("Only POST requests are allowed", {

status: 405,

headers: {

'Access-Control-Allow-Origin': '*',

"Access-Control-Allow-Headers": '*'

}

});

}

if (!request.url.endsWith("/v1/chat/completions")) {

return new Response("Not Found", {

status: 404,

headers: {

'Access-Control-Allow-Origin': '*',

"Access-Control-Allow-Headers": '*'

}

});

}

const data = await request.json();

const messages = data.messages || [];

const model = CUSTOMER_MODEL_MAP[data.model] || CUSTOMER_MODEL_MAP["stable-diffusion-xl-lightning"];

const stream = data.stream || false;

const userMessage = messages.reverse().find((msg) => msg.role === "user")?.content;

if (!userMessage) {

return new Response(JSON.stringify({ error: "未找到用户消息" }), {

status: 400,

headers: {

'Content-Type': 'application/json',

'Access-Control-Allow-Origin': '*',

'Access-Control-Allow-Headers': '*'

}

});

}

const is_translate = extractTranslate(userMessage);

const originalPrompt = cleanPromptString(userMessage);

const translatedPrompt = is_translate ? await getPrompt(originalPrompt) : originalPrompt;

const imageUrl = await generateImageByText(model, translatedPrompt);

if (stream) {

return handleStreamResponse(originalPrompt, translatedPrompt, "1024x1024", model, imageUrl);

} else {

return handleNonStreamResponse(originalPrompt, translatedPrompt, "1024x1024", model, imageUrl);

}

} catch (error) {

return new Response("Internal Server Error: " + error.message, {

status: 500,

headers: {

'Content-Type': 'application/json',

'Access-Control-Allow-Origin': '*',

'Access-Control-Allow-Headers': '*'

}

});

}

}

async function getPrompt(prompt) {

const requestBodyJson = {

messages: [

{

role: "system",

content: `作为 Stable Diffusion Prompt 提示词专家,您将从关键词中创建提示,通常来自 Danbooru 等数据库。

提示通常描述图像,使用常见词汇,按重要性排列,并用逗号分隔。避免使用"-"或".",但可以接受空格和自然语言。避免词汇重复。

为了强调关键词,请将其放在括号中以增加其权重。例如,"(flowers)"将'flowers'的权重增加1.1倍,而"(((flowers)))"将其增加1.331倍。使用"(flowers:1.5)"将'flowers'的权重增加1.5倍。只为重要的标签增加权重。

提示包括三个部分:**前缀**(质量标签+风格词+效果器)+ **主题**(图像的主要焦点)+ **场景**(背景、环境)。

* 前缀影响图像质量。像"masterpiece"、"best quality"、"4k"这样的标签可以提高图像的细节。像"illustration"、"lensflare"这样的风格词定义图像的风格。像"bestlighting"、"lensflare"、"depthoffield"这样的效果器会影响光照和深度。

* 主题是图像的主要焦点,如角色或场景。对主题进行详细描述可以确保图像丰富而详细。增加主题的权重以增强其清晰度。对于角色,描述面部、头发、身体、服装、姿势等特征。

* 场景描述环境。没有场景,图像的背景是平淡的,主题显得过大。某些主题本身包含场景(例如建筑物、风景)。像"花草草地"、"阳光"、"河流"这样的环境词可以丰富场景。你的任务是设计图像生成的提示。请按照以下步骤进行操作:

1. 我会发送给您一个图像场景。需要你生成详细的图像描述

2. 图像描述必须是英文,输出为Positive Prompt。

示例:

我发送:二战时期的护士。

您回复只回复:

A WWII-era nurse in a German uniform, holding a wine bottle and stethoscope, sitting at a table in white attire, with a table in the background, masterpiece, best quality, 4k, illustration style, best lighting, depth of field, detailed character, detailed environment.`

},

{

role: "user",

content: prompt

}

]

};

const response = await postRequest(CF_TRANSLATE_MODEL, requestBodyJson);

if (!response.ok) {

return prompt;

}

const jsonResponse = await response.json();

const res = jsonResponse.result.response;

return res;

}

async function generateImageByText(model, prompt) {

try {

const jsonBody = { prompt: prompt, num_steps: 20, guidance: 7.5, strength: 1, width: 1024, height: 1024 };

const response = await postRequest(model, jsonBody);

if (SMMS_API_KEY) {

const imageUrl = await uploadImage(response);

return imageUrl;

}

else {

const arrayBuffer = await response.arrayBuffer();

const base64Image = arrayBufferToBase64(arrayBuffer);

return `data:image/webp;base64,${base64Image}`;

}

}

catch (error) {

return "图像生成或转换失败,请检查!" + error.message;

}

}

function arrayBufferToBase64(buffer) {

let binary = '';

const bytes = new Uint8Array(buffer);

const len = bytes.byteLength;

for (let i = 0; i < len; i++) {

binary += String.fromCharCode(bytes[i]);

}

return btoa(binary);

}

async function uploadImage(response) {

const imageBlob = await response.blob();

const formData = new FormData();

formData.append("smfile", imageBlob, "image.jpg");

const uploadResponse = await fetch("https://sm.ms/api/v2/upload", {

method: 'POST',

headers: {

'Authorization': `${SMMS_API_KEY}`,

},

body: formData,

});

if (!uploadResponse.ok) {

throw new Error("Failed to upload image");

}

const uploadResult = await uploadResponse.json();

//const imageUrl = uploadResult[0].src;

const imageUrl = uploadResult.data.url;

return imageUrl;

}

function handleStreamResponse(originalPrompt, translatedPrompt, size, model, imageUrl) {

const uniqueId = `chatcmpl-${Date.now()}`;

const createdTimestamp = Math.floor(Date.now() / 1000);

const systemFingerprint = "fp_" + Math.random().toString(36).substr(2, 9);

const content = `🎨 原始提示词:${originalPrompt}\n` +

`🌐 翻译后的提示词:${translatedPrompt}\n` +

`📐 图像规格:${size}\n` +

`🌟 图像生成成功!\n` +

`以下是结果:\n\n` +

``;

const responsePayload = {

id: uniqueId,

object: "chat.completion.chunk",

created: createdTimestamp,

model: model,

system_fingerprint: systemFingerprint,

choices: [

{

index: 0,

delta: {

content: content,

},

finish_reason: "stop",

},

],

};

const dataString = JSON.stringify(responsePayload);

return new Response(`data: ${dataString}\n\n`, {

status: 200,

headers: {

"Content-Type": "text/event-stream",

'Access-Control-Allow-Origin': '*',

"Access-Control-Allow-Headers": '*',

},

});

}

function handleNonStreamResponse(originalPrompt, translatedPrompt, size, model, imageUrl) {

const uniqueId = `chatcmpl-${Date.now()}`;

const createdTimestamp = Math.floor(Date.now() / 1000);

const systemFingerprint = "fp_" + Math.random().toString(36).substr(2, 9);

const content = `🎨 原始提示词:${originalPrompt}\n` +

`🌐 翻译后的提示词:${translatedPrompt}\n` +

`📐 图像规格:${size}\n` +

`🌟 图像生成成功!\n` +

`以下是结果:\n\n` +

``;

const response = {

id: uniqueId,

object: "chat.completion",

created: createdTimestamp,

model: model,

system_fingerprint: systemFingerprint,

choices: [{

index: 0,

message: {

role: "assistant",

content: content

},

finish_reason: "stop"

}],

usage: {

prompt_tokens: translatedPrompt.length,

completion_tokens: content.length,

total_tokens: translatedPrompt.length + content.length

}

};

const dataString = JSON.stringify(response);

return new Response(dataString, {

status: 200,

headers: {

'Content-Type': 'application/json',

'Access-Control-Allow-Origin': '*',

'Access-Control-Allow-Headers': '*'

}

});

}

async function postRequest(model, jsonBody) {

const cf_account = CF_ACCOUNT_LIST[Math.floor(Math.random() * CF_ACCOUNT_LIST.length)];

const apiUrl = `https://api.cloudflare.com/client/v4/accounts/${cf_account.account_id}/ai/run/${model}`;

const response = await fetch(apiUrl, {

method: 'POST',

headers: {

'Authorization': `Bearer ${cf_account.token}`,

'Content-Type': 'application/json'

},

body: JSON.stringify(jsonBody)

});

if (!response.ok) {

throw new Error('Unexpected response ' + response.status);

}

return response;

}

function extractTranslate(prompt) {

const match = prompt.match(/---n?tl/);

if (match && match[0]) {

if (match[0] == "---ntl") {

return false;

}

else if (match[0] == "---tl") {

return true;

}

}

return CF_IS_TRANSLATE;

}

function cleanPromptString(prompt) {

return prompt.replace(/---n?tl/, "").trim();

}

addEventListener('fetch', event => {

event.respondWith(handleRequest(event.request));

});

Projeto 4: Outra API para geração de imagens com base em fluxos de silício com parâmetros mais personalizáveis

//本项目授权api_key,防止被恶意调用

const API_KEY = "sk-1234567890";

//硅基流动Token列表,每次请求都会随机从列表里取一个Token

const SILICONFLOW_TOKEN_LIST = ["sk-xxxxxxxxx"];

//是否开启提示词翻译、优化功能

const SILICONFLOW_IS_TRANSLATE = true;

//提示词翻译、优化模型

const SILICONFLOW_TRANSLATE_MODEL = "Qwen/Qwen2-7B-Instruct";

//模型映射,设置客户端可用的模型。one-api,new-api在添加渠道时可使用“获取模型列表”功能,一键添加模型

//test为测速模型,方便one-api/new-api测速时使用,避免测速时直接调用画图接口

const CUSTOMER_MODEL_MAP = {

"test": {

body: {

model: "test"

}

},

"FLUX.1-schnell": {

isImage2Image: false,

body: {

model: "black-forest-labs/FLUX.1-schnell",

prompt: "",

image_size: "1024x1024",

seed: 1

},

RATIO_MAP: {

"1:1": "1024x1024",

"1:2": "512x1024",

"3:2": "768x512",

"4:3": "768x1024",

"16:9": "1024x576",

"9:16": "576x1024"

}

},

"stable-diffusion-xl-base-1.0": {

isImage2Image: true,

body: {

model: "stabilityai/stable-diffusion-xl-base-1.0",

prompt: "",

image_size: "1024x1024",

seed: 1,

batch_size: 1,

num_inference_steps: 20,

guidance_scale: 7.5,

image: ""

},

RATIO_MAP: {

"1:1": "1024x1024",

"1:2": "1024x2048",

"3:2": "1536x1024",

"4:3": "1536x2048",

"16:9": "2048x1152",

"9:16": "1152x2048"

}

},

"stable-diffusion-2-1": {

isImage2Image: true,

body: {

model: "stabilityai/stable-diffusion-2-1",

prompt: "",

image_size: "512x512",

seed: 1,

batch_size: 1,

num_inference_steps: 20,

guidance_scale: 7.5,

image: ""

},

RATIO_MAP: {

"1:1": "512x512",

"1:2": "512x1024",

"3:2": "768x512",

"4:3": "768x1024",

"16:9": "1024x576",

"9:16": "576x1024"

}

},

};

async function handleRequest(request) {

try {

if (request.method === "OPTIONS") {

return getResponse("", 204);

}

const authHeader = request.headers.get("Authorization");

if (!authHeader || !authHeader.startsWith("Bearer ") || authHeader.split(" ")[1] !== API_KEY) {

return getResponse("Unauthorized", 401);

}

if (request.url.endsWith("/v1/models")) {

const arrs = [];

Object.keys(CUSTOMER_MODEL_MAP).map(element => arrs.push({

id: element,

object: "model"

}))

const response = {

data: arrs,

success: true

};

return getResponse(JSON.stringify(response), 200);

}

if (request.method !== "POST") {

return getResponse("Only POST requests are allowed", 405);

}

if (!request.url.endsWith("/v1/chat/completions")) {

return getResponse("Not Found", 404);

}

const data = await request.json();

const messages = data.messages || [];

const modelInfo = CUSTOMER_MODEL_MAP[data.model] || CUSTOMER_MODEL_MAP["FLUX.1-schnell"];

const stream = data.stream || false;

const userMessage = messages.reverse().find((msg) => msg.role === "user")?.content;

if (!userMessage) {

return getResponse(JSON.stringify({

error: "未找到用户消息"

}), 400);

}

if (modelInfo.body.model == "test") {

if (stream) {

return handleStreamResponse(userMessage, "", "", data.model, "");

} else {

return handleNonStreamResponse(userMessage, "", "", data.model, "");

}

}

const is_translate = extractTranslate(userMessage);

const size = extractImageSize(userMessage, modelInfo.RATIO_MAP);

const imageUrl = extractImageUrl(userMessage);

const originalPrompt = cleanPromptString(userMessage);

const translatedPrompt = is_translate ? await getPrompt(originalPrompt) : originalPrompt;

let url;

if (!imageUrl) {

url = await generateImage(translatedPrompt, "", modelInfo, size);

} else {

const base64 = await convertImageToBase64(imageUrl);

url = await generateImage(translatedPrompt, base64, modelInfo, size);

}

if (!url) {

url = "https://pic.netbian.com/uploads/allimg/240808/192001-17231160015724.jpg";

}

if (stream) {

return handleStreamResponse(originalPrompt, translatedPrompt, size, data.model, url);

} else {

return handleNonStreamResponse(originalPrompt, translatedPrompt, size, data.model, url);

}

} catch (error) {

return getResponse(JSON.stringify({

error: `处理请求失败: ${error.message}`

}), 500);

}

}

async function generateImage(translatedPrompt, base64Image, modelInfo, imageSize) {

const jsonBody = modelInfo.body;

jsonBody.prompt = translatedPrompt;

jsonBody.imageSize = imageSize;

if (modelInfo.isImage2Image && base64Image) {

jsonBody.image = base64Image;

}

return await getImageUrl("https://api.siliconflow.cn/v1/image/generations", jsonBody);

}

function handleStreamResponse(originalPrompt, translatedPrompt, size, model, imageUrl) {

const uniqueId = `chatcmpl-${Date.now()}`;

const createdTimestamp = Math.floor(Date.now() / 1000);

const systemFingerprint = "fp_" + Math.random().toString(36).substr(2, 9);

const content = `🎨 原始提示词:${originalPrompt}\n` +

`🌐 翻译后的提示词:${translatedPrompt}\n` +

`📐 图像规格:${size}\n` +

`🌟 图像生成成功!\n` +

`以下是结果:\n\n` +

``;

const responsePayload = {

id: uniqueId,

object: "chat.completion.chunk",

created: createdTimestamp,

model: model,

system_fingerprint: systemFingerprint,

choices: [{

index: 0,

delta: {

content: content,

},

finish_reason: "stop",

}, ],

};

const dataString = JSON.stringify(responsePayload);

return new Response(`data: ${dataString}\n\n`, {

status: 200,

headers: {

"Content-Type": "text/event-stream",

'Access-Control-Allow-Origin': '*',

"Access-Control-Allow-Headers": '*',

},

});

}

function handleNonStreamResponse(originalPrompt, translatedPrompt, size, model, imageUrl) {

const uniqueId = `chatcmpl-${Date.now()}`;

const createdTimestamp = Math.floor(Date.now() / 1000);

const systemFingerprint = "fp_" + Math.random().toString(36).substr(2, 9);

const content = `🎨 原始提示词:${originalPrompt}\n` +

`🌐 翻译后的提示词:${translatedPrompt}\n` +

`📐 图像规格:${size}\n` +

`🌟 图像生成成功!\n` +

`以下是结果:\n\n` +

``;

const response = {

id: uniqueId,

object: "chat.completion",

created: createdTimestamp,

model: model,

system_fingerprint: systemFingerprint,

choices: [{

index: 0,

message: {

role: "assistant",

content: content

},

finish_reason: "stop"

}],

usage: {

prompt_tokens: translatedPrompt.length,

completion_tokens: content.length,

total_tokens: translatedPrompt.length + content.length

}

};

return getResponse(JSON.stringify(response), 200);

}

function getResponse(resp, status) {

return new Response(resp, {

status: status,

headers: {

'Content-Type': 'application/json',

'Access-Control-Allow-Origin': '*',

'Access-Control-Allow-Headers': '*'

}

});

}

async function getPrompt(prompt) {

const requestBodyJson = {

model: SILICONFLOW_TRANSLATE_MODEL,

messages: [{

role: "system",

content: `作为 Stable Diffusion Prompt 提示词专家,您将从关键词中创建提示,通常来自 Danbooru 等数据库。

提示通常描述图像,使用常见词汇,按重要性排列,并用逗号分隔。避免使用"-"或".",但可以接受空格和自然语言。避免词汇重复。

为了强调关键词,请将其放在括号中以增加其权重。例如,"(flowers)"将'flowers'的权重增加1.1倍,而"(((flowers)))"将其增加1.331倍。使用"(flowers:1.5)"将'flowers'的权重增加1.5倍。只为重要的标签增加权重。

提示包括三个部分:**前缀**(质量标签+风格词+效果器)+ **主题**(图像的主要焦点)+ **场景**(背景、环境)。

* 前缀影响图像质量。像"masterpiece"、"best quality"、"4k"这样的标签可以提高图像的细节。像"illustration"、"lensflare"这样的风格词定义图像的风格。像"bestlighting"、"lensflare"、"depthoffield"这样的效果器会影响光照和深度。

* 主题是图像的主要焦点,如角色或场景。对主题进行详细描述可以确保图像丰富而详细。增加主题的权重以增强其清晰度。对于角色,描述面部、头发、身体、服装、姿势等特征。

* 场景描述环境。没有场景,图像的背景是平淡的,主题显得过大。某些主题本身包含场景(例如建筑物、风景)。像"花草草地"、"阳光"、"河流"这样的环境词可以丰富场景。你的任务是设计图像生成的提示。请按照以下步骤进行操作:

1. 我会发送给您一个图像场景。需要你生成详细的图像描述

2. 图像描述必须是英文,输出为Positive Prompt。

示例:

我发送:二战时期的护士。

您回复只回复:

A WWII-era nurse in a German uniform, holding a wine bottle and stethoscope, sitting at a table in white attire, with a table in the background, masterpiece, best quality, 4k, illustration style, best lighting, depth of field, detailed character, detailed environment.`

},

{

role: "user",

content: prompt

}

],

stream: false,

max_tokens: 512,

temperature: 0.7,

top_p: 0.7,

top_k: 50,

frequency_penalty: 0.5,

n: 1

};

const apiUrl = "https://api.siliconflow.cn/v1/chat/completions";

const response = await postRequest(apiUrl, requestBodyJson);

if (response.ok) {

const jsonResponse = await response.json();

const res = jsonResponse.choices[0].message.content;

return res;

} else {

return prompt;

}

}

async function getImageUrl(apiUrl, jsonBody) {

const response = await postRequest(apiUrl, jsonBody);

if (!response.ok) {

throw new Error('Unexpected response ' + response.status);

}

const jsonResponse = await response.json();

return jsonResponse.images[0].url;

}

async function postRequest(apiUrl, jsonBody) {

const token = SILICONFLOW_TOKEN_LIST[Math.floor(Math.random() * SILICONFLOW_TOKEN_LIST.length)];

const response = await fetch(apiUrl, {

method: 'POST',

headers: {

'Authorization': `Bearer ${token}`,

'Accept': 'application/json',

'Content-Type': 'application/json'

},

body: JSON.stringify(jsonBody)

});

return response;

}

function extractImageSize(prompt, RATIO_MAP) {

const match = prompt.match(/---(\d+:\d+)/);

return match ? RATIO_MAP[match[1].trim()] || "1024x1024" : "1024x1024";

}

function extractImageUrl(prompt) {

const regex = /(https?:\/\/[^\s]+?\.(?:png|jpe?g|gif|bmp|webp|svg))/i;

const match = prompt.match(regex);

return match ? match[0] : null;

}

function extractTranslate(prompt) {

const match = prompt.match(/---n?tl/);

if (match && match[0]) {

if (match[0] == "---ntl") {

return false;

} else if (match[0] == "---tl") {

return true;

}

}

return SILICONFLOW_IS_TRANSLATE;

}

function cleanPromptString(prompt) {

return prompt.replace(/---\d+:\d+/, "").replace(/---n?tl/, "").replace(/https?:\/\/\S+\.(?:png|jpe?g|gif|bmp|webp|svg)/gi, "").trim();

}

async function convertImageToBase64(imageUrl) {

const response = await fetch(imageUrl);

if (!response.ok) {

throw new Error('Failed to download image');

}

const arrayBuffer = await response.arrayBuffer();

const base64Image = arrayBufferToBase64(arrayBuffer);

return `data:image/webp;base64,${base64Image}`;

}

function arrayBufferToBase64(buffer) {

let binary = '';

const bytes = new Uint8Array(buffer);

const len = bytes.byteLength;

for (let i = 0; i < len; i++) {

binary += String.fromCharCode(bytes[i]);

}

return btoa(binary);

}

addEventListener('fetch', event => {

event.respondWith(handleRequest(event.request));

});

© declaração de direitos autorais

Direitos autorais do artigo Círculo de compartilhamento de IA A todos, favor não reproduzir sem permissão.

Artigos relacionados

Nenhum comentário...