Major Update: Dify v0.10.0 Introduces File Uploads, Making It Easy to Build NotebookLM-Style AI Podcasts

Dify is dedicated to helping developers get their AI ideas off the ground quickly. Whether you are validating a product prototype or building a productivity tool, Workflow is an excellent choice. In the latest v0.10.0 release, we introduced the much-anticipated file upload feature, which enables Workflow to handle documents, audio, and video in multiple formats, further expanding the boundaries of AI application development.

This feature is particularly suitable for the following scenarios:

- Document Q&A: Answer questions based on uploaded documents, with reliable sources.

- Report summarization: Quickly distill the core points of lengthy documents into summaries.

- Table processing: Quickly retrieve and process specific content in various documents or spreadsheets.

What's more, the file upload feature paves the way for multimodal AI applications. Developers can now build complex workflows that understand and process images, audio, and video, greatly improving the functionality and user experience of their applications.

Getting Started with File Upload Functionality

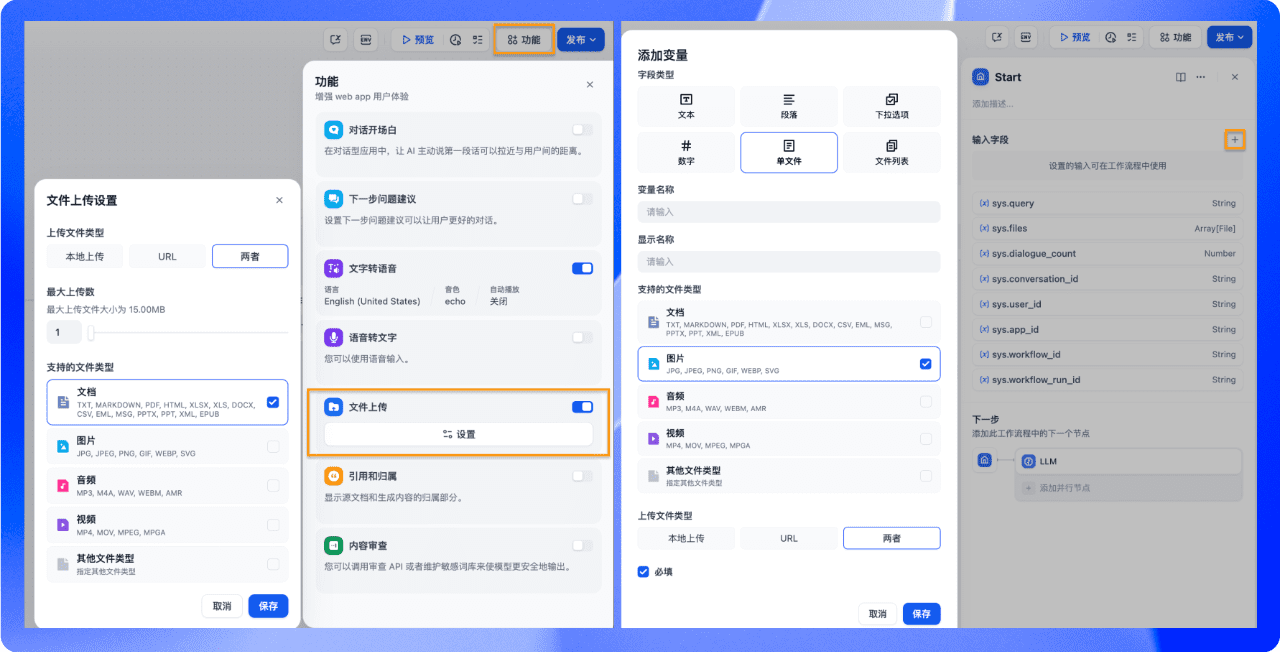

1. Enable file uploading directly

Enabling file uploads is simple: turn on the file upload switch in the feature list. By default, uploaded files are stored in the system variable sys.files. Users can upload files directly through the chat box, and the most recently uploaded file automatically overwrites the previous one. If more flexible context management is needed, developers can turn on the memory feature.

2. Create custom variables

Another way is to create custom variables in the start node to support single or multiple file uploads. Once set up, the user interface will display the file upload form, and subsequent conversations and workflow processing will always center around the uploaded files.

After a file is uploaded, it needs to be pre-processed according to its type so that the LLM can understand and analyze the content. Document-type files (e.g. TXT, PDF, HTML) require text extraction in Workflow using the Document Extractor node, which converts them into string variables the LLM can use. Audio and video files need to be converted with additional tools, such as audio-to-text or video keyframe extraction. (It is worth noting that OpenAI's newly released "gpt-4o-audio-preview" model supports direct processing of audio for inference and dialog, and we will adapt this functionality in a later release.)
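The type-based pre-processing described above can be pictured as a simple dispatch on the file extension. The following is only a minimal sketch: the function name `choose_preprocessing`, the extension sets, and the returned step names are hypothetical and are not part of Dify's API. In a real Workflow, this routing is done by connecting nodes on the canvas.

```python
from pathlib import Path

# Hypothetical extension groups for illustration only;
# the formats Dify actually accepts may differ.
DOCUMENT_EXTS = {".txt", ".pdf", ".html"}
AUDIO_EXTS = {".mp3", ".wav", ".m4a"}
VIDEO_EXTS = {".mp4", ".mov"}


def choose_preprocessing(filename: str) -> str:
    """Return the (hypothetical) name of the pre-processing step a file needs."""
    ext = Path(filename).suffix.lower()
    if ext in DOCUMENT_EXTS:
        # Documents go through text extraction and become a string variable.
        return "document_extractor"
    if ext in AUDIO_EXTS:
        # Audio needs an external speech-to-text tool.
        return "audio_to_text"
    if ext in VIDEO_EXTS:
        # Video needs an external tool such as keyframe extraction.
        return "video_keyframe_extraction"
    return "unsupported"


if __name__ == "__main__":
    for name in ("report.pdf", "interview.mp3", "demo.mp4", "archive.zip"):
        print(name, "->", choose_preprocessing(name))
```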

In this update, we have added two new nodes, Document Extractor and List Manipulation, for extracting and filtering files respectively, and we have also enhanced most of the existing Workflow nodes. See the documentation for details:

https://docs.dify.ai/zh-hans/guides/workflow/file-upload

Quickly Build an AI Podcast Using File Uploads

Google's recently launched AI tool, NotebookLM, has attracted a lot of attention for its new audio feature. It can quickly analyze long-form content, extract key information, and generate conversational, podcast-style audio summaries. This saves users a lot of reading time and makes it easier to grasp the gist of the content.

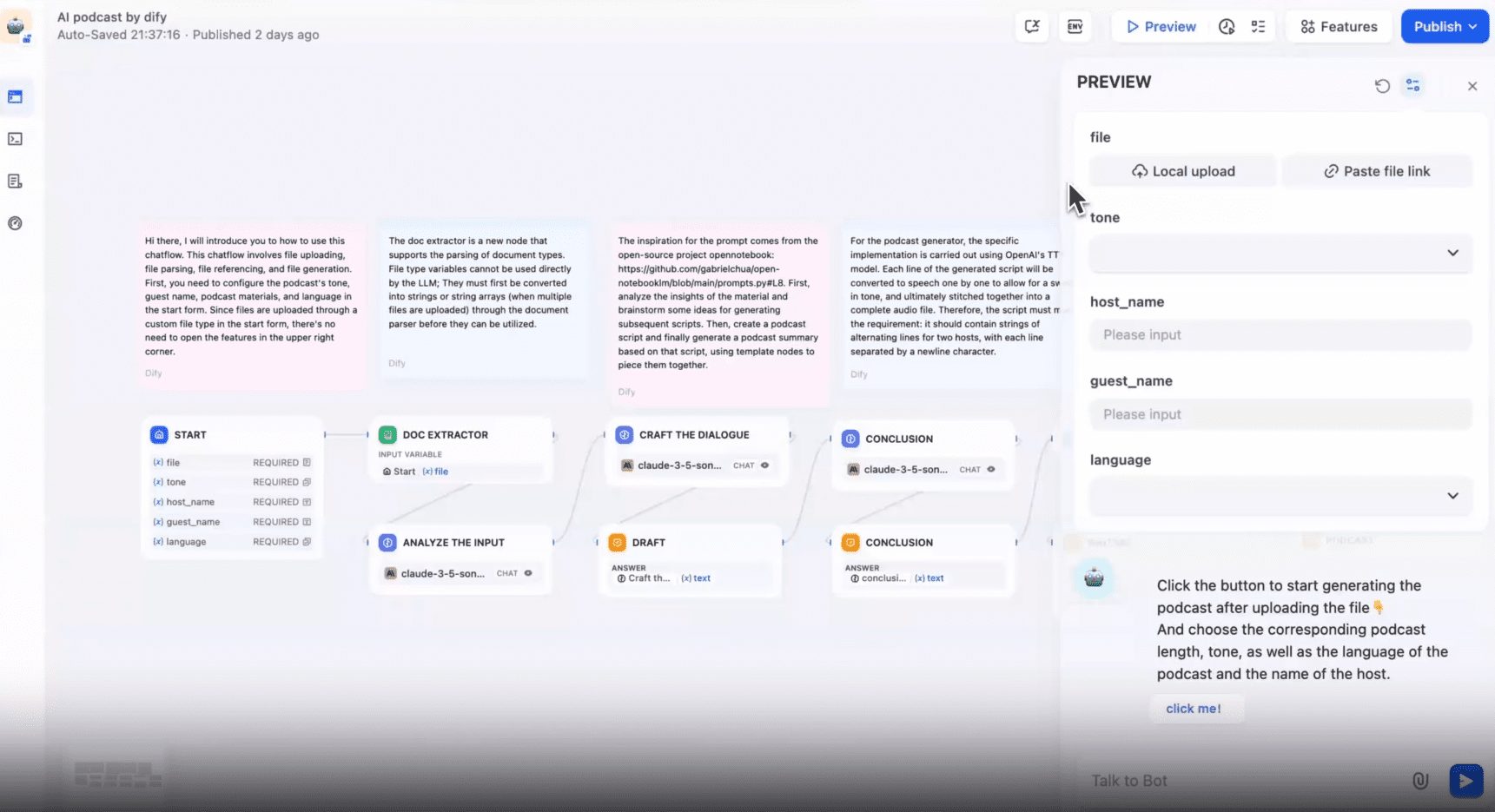

Next, we'll show you how to use the file upload feature and its associated nodes to turn documents into conversational AI podcasts via Workflow, achieving functionality similar to NotebookLM.

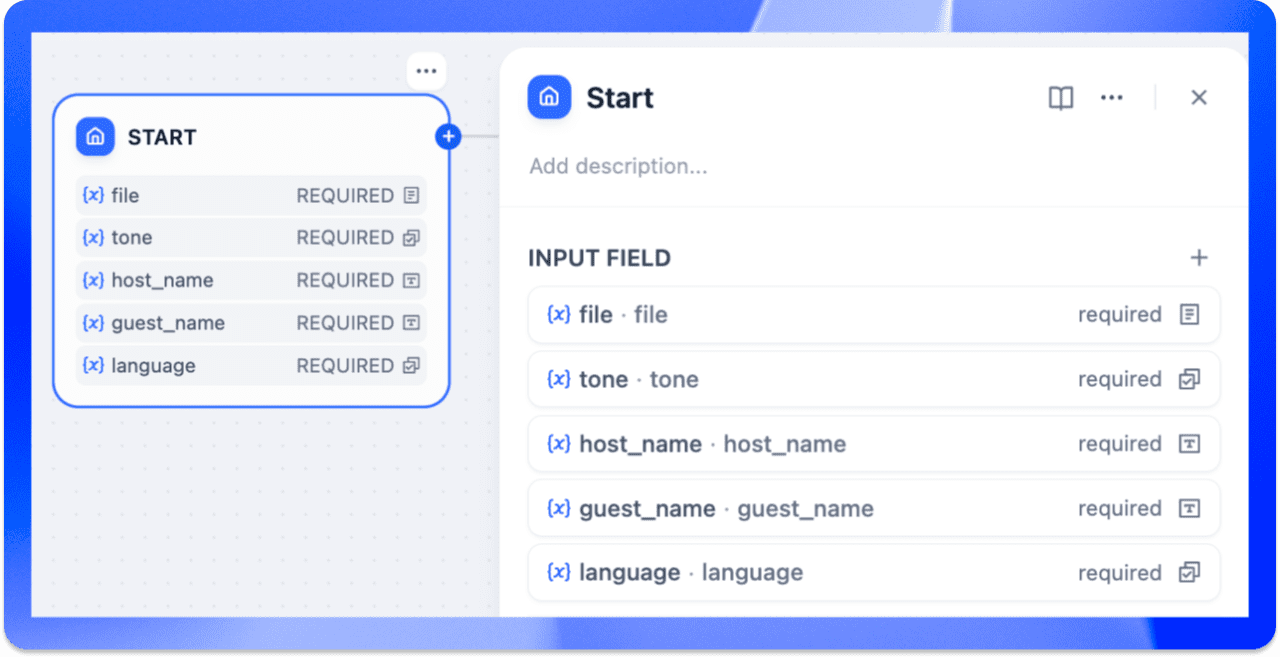

Parameter configuration of the start node

Create a new Chatflow, and in the Start node, set up file uploads and define key variables (such as tone, host, guests, and language).

- **file**: Select the "Single File" field type to allow uploading of document-type files.

- **tone**: Use the "drop-down option" type to let users choose the communication style of the AI podcast, such as Casual, Formal, or Humorous.

- **host_name**: Select the "Text" type for entering the name of the host.

- **guest_name**: Select the "Text" type for entering the name of the guest.

- **language**: Use the "drop-down option" type, with options such as Chinese, English, and Japanese, so users can easily choose the language of the podcast.
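To make the shape of these inputs concrete, the start node variables can be modeled as a small validated record. This is an illustrative sketch only: the class `PodcastInputs` and the option tuples are hypothetical, the option lists mirror just the examples named above, and Dify itself collects these values through the start node form rather than through code.

```python
from dataclasses import dataclass

# Options taken from the examples above; a real app may offer more.
TONE_OPTIONS = ("Casual", "Formal", "Humorous")
LANGUAGE_OPTIONS = ("Chinese", "English", "Japanese")


@dataclass(frozen=True)
class PodcastInputs:
    """Hypothetical container mirroring the start node variables."""

    file: str        # name of the single uploaded document
    tone: str        # drop-down option
    host_name: str   # free text
    guest_name: str  # free text
    language: str    # drop-down option

    def __post_init__(self) -> None:
        # Drop-down fields only accept one of the predefined options.
        if self.tone not in TONE_OPTIONS:
            raise ValueError(f"tone must be one of {TONE_OPTIONS}")
        if self.language not in LANGUAGE_OPTIONS:
            raise ValueError(f"language must be one of {LANGUAGE_OPTIONS}")
        # Text fields must not be blank.
        if not self.host_name.strip() or not self.guest_name.strip():
            raise ValueError("host_name and guest_name must not be empty")


if __name__ == "__main__":
    print(PodcastInputs("report.pdf", "Casual", "Ann", "Bob", "English"))
```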

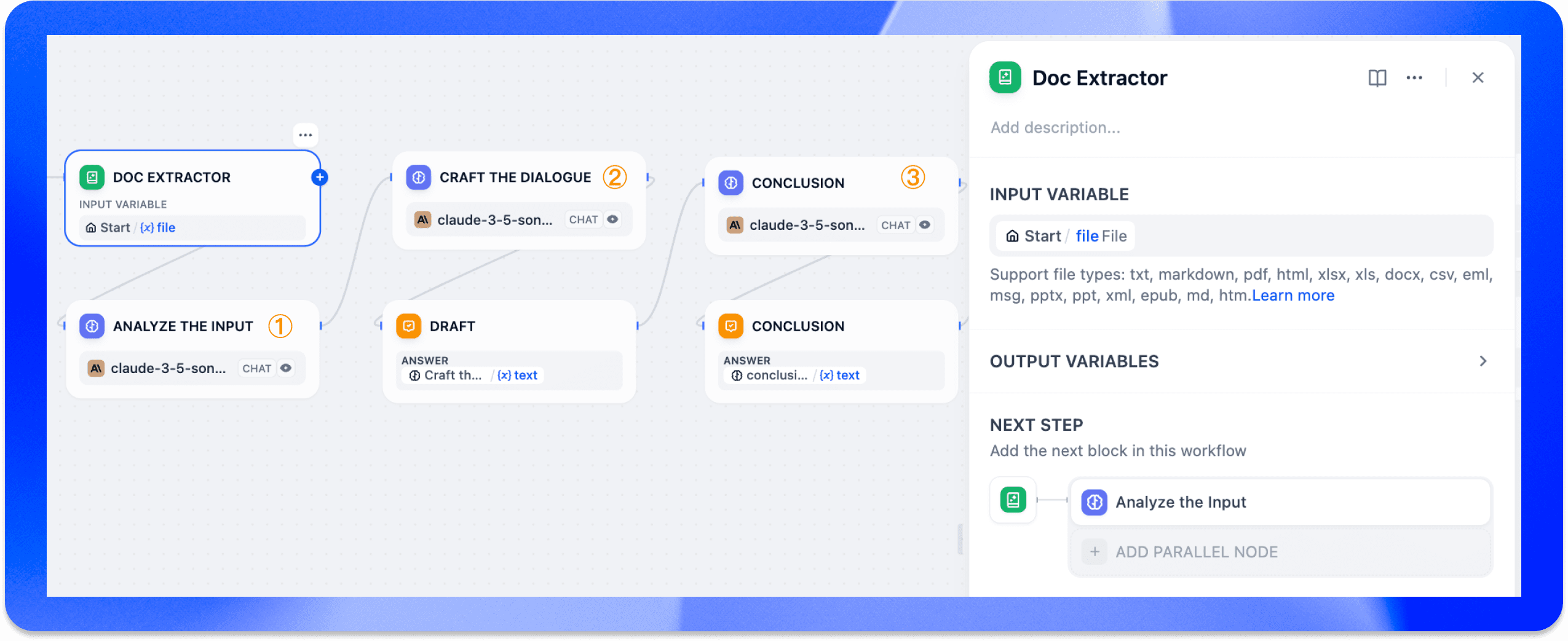

Generating Podcast Scripts Using the Document Extractor and LLM Nodes

After a successful file upload, the Document Extractor extracts the text from the variable ***file***, converting unstructured data into processable text content. Next, the extracted content is processed by three LLM nodes that build up the complete podcast script step by step.

1. LLM Analysis Node (Analyze the Input)

Analyze the extracted text to distill the key information needed for the podcast, including important themes, story points, data, etc., to lay the foundation for subsequent generation of podcast content.

2. LLM Script Generation Node (Craft the Dialogue)

Based on the extracted content and pre-defined variables (e.g., tone, language, host_name, and guest_name), natural-sounding, personalized podcast dialogue scripts are generated to ensure that the host and guest interactions are in line with the set roles and styles.

3. LLM Conclusion Node (Conclusion)

Generate a podcast summary that recapitulates the key points through a conversation between the host and the guest, ensuring that the wrap-up section leaves a lasting impression on the listener and leads to some food for thought or suggestions for action.

Once the LLM nodes have run, we have the podcast dialogue and the summary.
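The three-stage flow above can be sketched as three chained prompt calls. This is a rough illustration under stated assumptions, not the prompts used in the actual template: the function `generate_script`, the prompt wording, and the choice to feed the key points (rather than the dialogue) into the conclusion step are all hypothetical. The `llm` argument stands in for whichever model the LLM nodes are configured with.

```python
from typing import Callable, Tuple

# Any function that maps a prompt string to a completion string.
LLM = Callable[[str], str]


def generate_script(
    text: str,
    tone: str,
    language: str,
    host_name: str,
    guest_name: str,
    llm: LLM,
) -> Tuple[str, str]:
    """Run the three stages and return (dialogue, conclusion)."""
    # Stage 1: Analyze the Input.
    key_points = llm(
        "Analyze the following text and list its key themes, "
        f"story points, and data:\n{text}"
    )
    # Stage 2: Craft the Dialogue, using the start node variables.
    dialogue = llm(
        f"Write a {tone} podcast dialogue in {language} between host "
        f"{host_name} and guest {guest_name} based on these points:\n{key_points}"
    )
    # Stage 3: Conclusion (assumed here to work from the key points).
    conclusion = llm(
        f"Write a closing exchange in {language} between {host_name} and "
        f"{guest_name} that recaps these points and ends with a takeaway:\n{key_points}"
    )
    return dialogue, conclusion


if __name__ == "__main__":
    # A stub model that just echoes the first line of each prompt.
    echo = lambda prompt: prompt.splitlines()[0]
    print(generate_script("Some report text.", "Casual", "English", "Ann", "Bob", echo))
```

Passing the model in as a plain callable keeps the sketch testable with a stub and independent of any particular provider.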

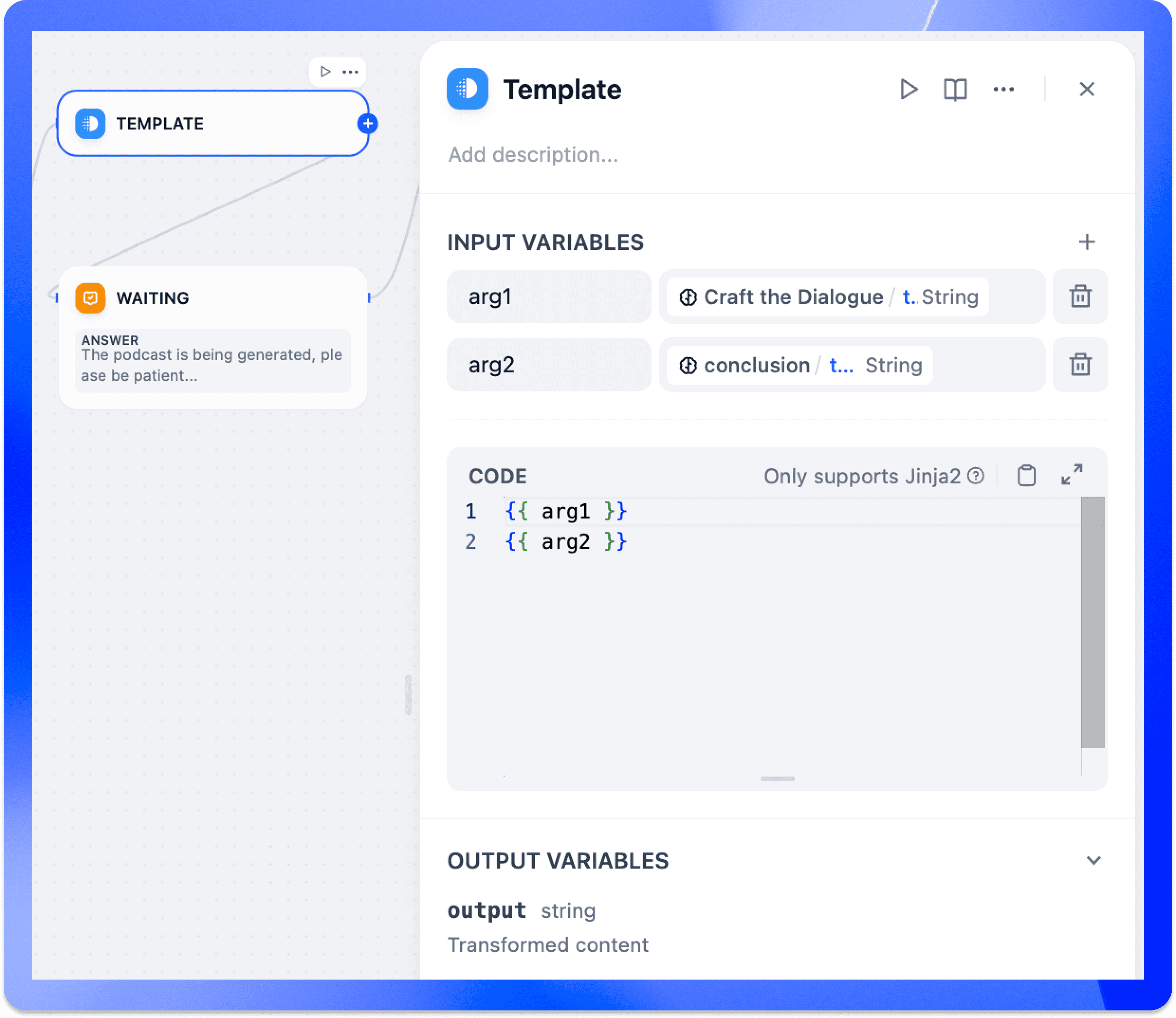

Merge text blocks via template conversion nodes

The Template Conversion node consolidates the partial content generated by individual LLM nodes into a complete output and converts it into a uniform format usable by downstream tools.

- Input: Takes the text output of the LLM nodes Craft the Dialogue and Conclusion, referenced through the variables ***arg1*** and ***arg2***.

- Output: Merges ***arg1*** (the conversation content) and ***arg2*** (the summary section) into a complete and coherent podcast script, output as a string for easy processing by subsequent tools.
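The merge step amounts to substituting two variables into a fixed layout. The sketch below uses Python's standard `string.Template` as a stand-in for illustration; Dify's own template node has its own template syntax, so the `$arg1` / `$arg2` placeholders and the helper `merge_script` here are assumptions, not what you would type into the node.

```python
from string import Template

# Dialogue first, then the summary, separated by a blank line.
SCRIPT_TEMPLATE = Template("$arg1\n\n$arg2")


def merge_script(arg1: str, arg2: str) -> str:
    """Combine the dialogue (arg1) and the conclusion (arg2) into one string."""
    return SCRIPT_TEMPLATE.substitute(arg1=arg1.strip(), arg2=arg2.strip())


if __name__ == "__main__":
    print(merge_script("Ann: Welcome to the show!", "Ann: Thanks for listening."))
```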

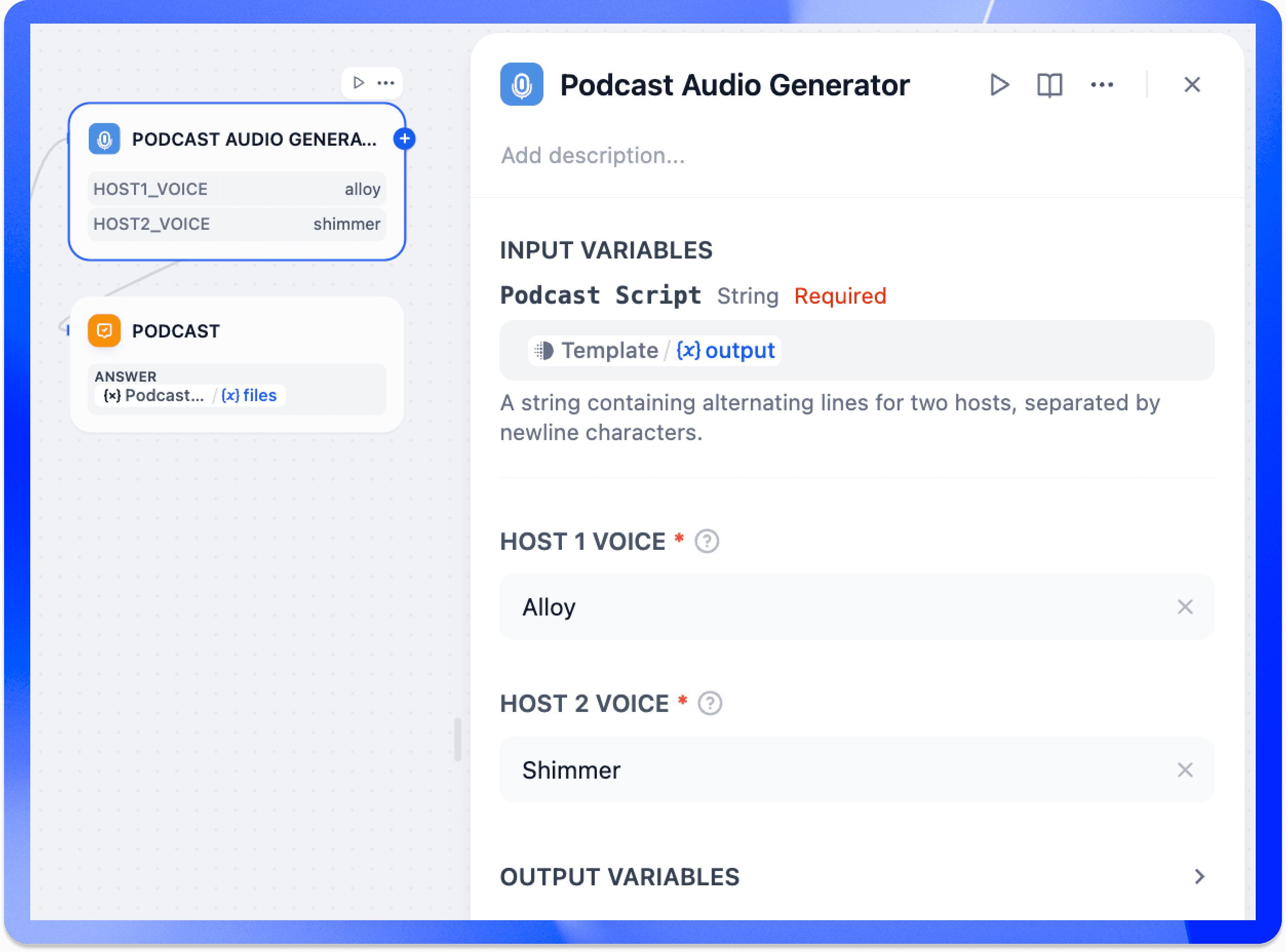

Podcast Audio Generator Configuration and Output

Once the text has been merged, the output variable of the template conversion node passes the script to the podcast audio generator, which begins the final stage: audio generation.

The tool generates podcast audio from a text script, and developers can select host and guest voices (e.g., "Alloy" and "Shimmer") to determine how the characters will sound. The podcast generator converts the received full script into an audio file and makes it available for download.
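One way to picture how a script is mapped onto two voices is to split it into speaker turns and tag each turn with a voice. The sketch below is purely illustrative and does not describe the tool's internals: the function `assign_voices` and the `Name: text` line format are assumptions, and no audio is actually synthesized.

```python
from typing import List, Tuple


def assign_voices(
    script: str,
    host_name: str,
    guest_name: str,
    host_voice: str = "Alloy",
    guest_voice: str = "Shimmer",
) -> List[Tuple[str, str]]:
    """Split a 'Name: text' script into (voice, text) segments."""
    voices = {host_name: host_voice, guest_name: guest_voice}
    segments: List[Tuple[str, str]] = []
    for line in script.splitlines():
        speaker, sep, text = line.partition(":")
        speaker = speaker.strip()
        if sep and speaker in voices and text.strip():
            # A new turn by a known speaker.
            segments.append((voices[speaker], text.strip()))
        elif segments and line.strip():
            # A continuation line: append it to the previous turn.
            voice, previous = segments[-1]
            segments[-1] = (voice, f"{previous} {line.strip()}")
    return segments


if __name__ == "__main__":
    demo = "Ann: Welcome!\nBob: Thanks.\nGlad to be here.\n\nAnn: Bye."
    for voice, text in assign_voices(demo, "Ann", "Bob"):
        print(f"[{voice}] {text}")
```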

With the steps above, you can generate AI podcasts using the file upload feature. We have also turned this app into a template and placed it on the Explore page so you can get started quickly and explore more features.

Other things you need to know

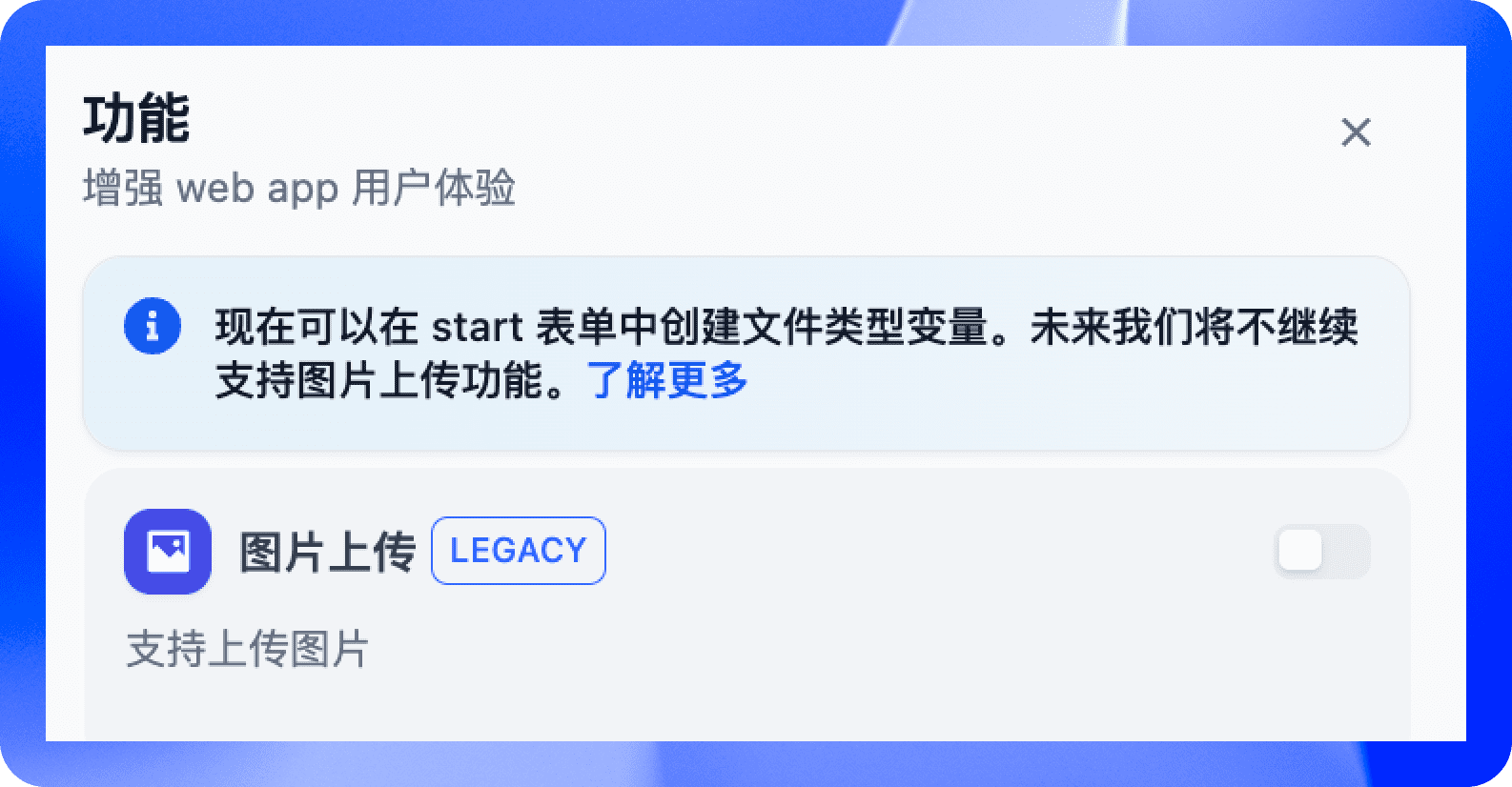

Image Upload Feature Deprecation Notice

1. For Chatflow applications:

In v0.10.0, we extended the original image upload feature to file uploads, allowing the app to handle more formats of documents and audio/video files.

- The image upload feature has been integrated into file upload. With the file upload feature enabled, you can reference files such as images uploaded in the chat window by selecting ***sys.files*** through the visual variable selector in the LLM node.

- We have performed compatibility processing on older versions of the app to ensure that apps that used the image upload feature in previous versions remain stable.

2. For Workflow applications:

- We recommend customizing the file type variable in the start node for file uploads to handle more types of files.

- Please note: We will deprecate the old image upload feature and the system variable sys.files in a future release.

See the documentation for more information:

https://docs.dify.ai/zh-hans/guides/workflow/bulletin

© Copyright notes

This article is copyrighted by AI Sharing Circle. Please do not reproduce it without permission.
