zChunk: a generic semantic chunking strategy based on Llama-70B

General Introduction

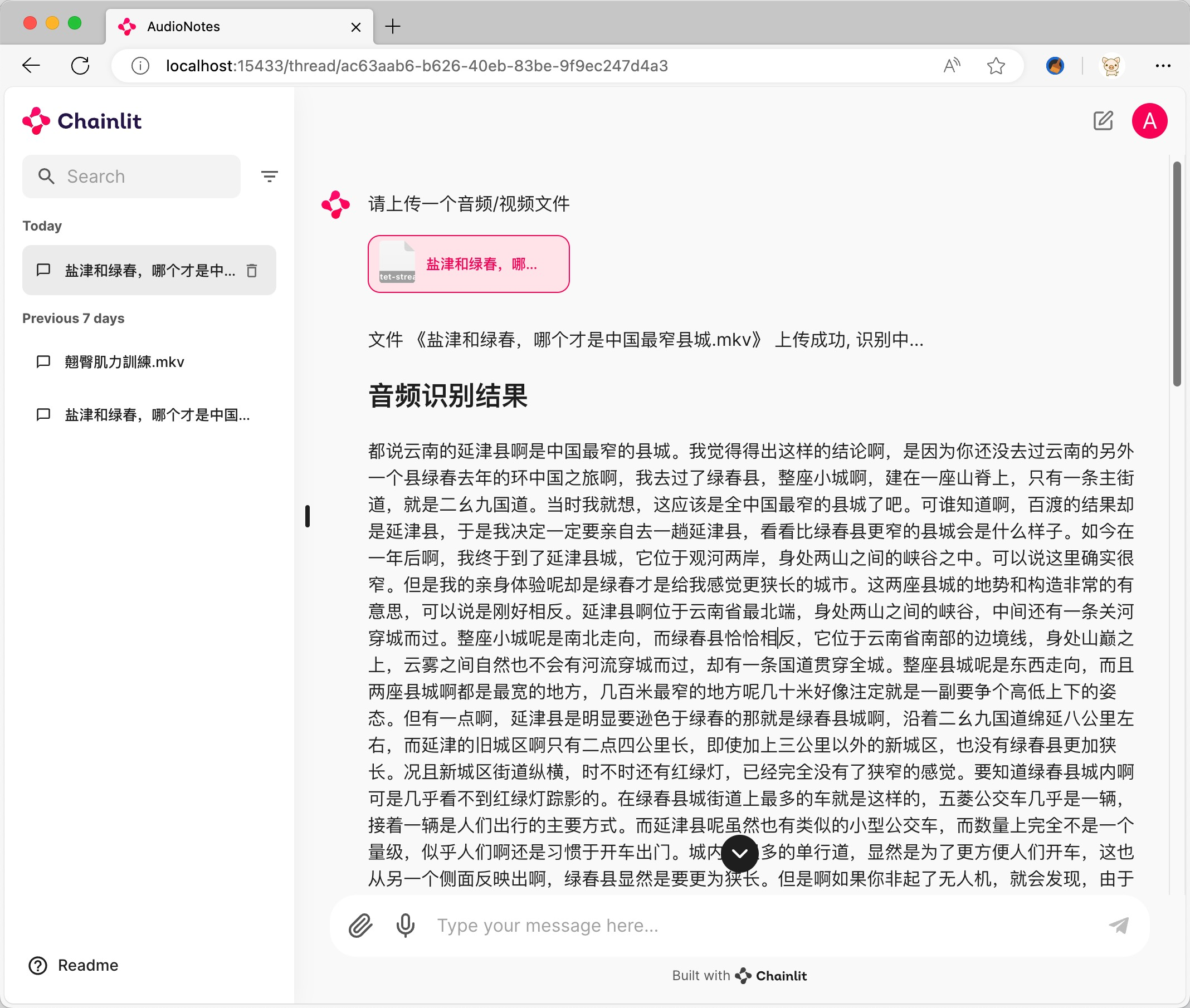

zChunk is a novel chunking strategy developed by ZeroEntropy as a general-purpose solution to semantic chunking. It prompts the Llama-70B model to mark where chunks should be split, so that the chunks returned at retrieval time keep a high signal-to-noise ratio. zChunk is particularly suited to RAG (Retrieval-Augmented Generation) applications that need high-precision retrieval, and it addresses the limitations traditional chunking methods run into with complex documents. With zChunk, users can partition documents into meaningful chunks more effectively, improving both the accuracy and the efficiency of information retrieval.


Features

- Llama-70B-based chunking algorithm: Prompts the Llama-70B model to mark semantic chunk boundaries.

- High signal-to-noise chunking: Optimizes the chunking strategy so that retrieved passages have a high signal-to-noise ratio.

- Multiple chunking strategies: Supports several strategies, such as fixed-size chunking and embedding-similarity-based chunking.

- Hyperparameter tuning: Provides a hyperparameter tuning pipeline that lets users adjust the chunk size and overlap to suit their needs.

- Open source: The full source code is available and can be freely used and modified.
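The fixed-size strategy (NaiveChunk) and the chunk size / overlap hyperparameters can be illustrated with a minimal sketch. The function name naive_chunk, measuring size in characters rather than tokens, and the default values are assumptions for illustration, not the repository's actual code.

```python
def naive_chunk(text: str, chunk_size: int = 500, overlap_ratio: float = 0.1) -> list[str]:
    """Hypothetical fixed-size chunker: character windows of chunk_size, where
    consecutive windows share int(chunk_size * overlap_ratio) characters."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0.0 <= overlap_ratio < 1.0:
        raise ValueError("overlap_ratio must be in [0, 1)")
    overlap = int(chunk_size * overlap_ratio)
    step = chunk_size - overlap  # how far each window advances; always >= 1
    chunks: list[str] = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this window already reaches the end of the text
    return chunks


# Usage: 4-character chunks that overlap by one character.
print(naive_chunk("abcdefghij", chunk_size=4, overlap_ratio=0.25))  # prints ['abcd', 'defg', 'ghij']
```

Raising overlap_ratio repeats more text across neighbouring chunks, which helps keep a sentence that straddles a boundary retrievable at the cost of more redundant storage.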

How to Use

Installation

- Clone the repository:

git clone https://github.com/zeroentropy-ai/zchunk.git

cd zchunk

- Install the dependencies:

pip install -r requirements.txt

Usage

- Prepare the input file: Save the document to be chunked as a text file, e.g. example_input.txt.

- Run the chunking script:

python test.py --input example_input.txt --output example_output.txt

- View the output file: The chunking results are saved in example_output.txt.

Detailed Workflow

- Choose a chunking strategy:

  - NaiveChunk: Fixed-size chunking, suitable for simple documents.

  - SemanticChunk: Chunking based on embedding similarity, for documents whose semantic integrity must be preserved.

  - zChunk algorithm: Uses the Llama-70B model to place chunk boundaries, for complex documents.

- Adjust the hyperparameters:

  - Chunk size: Set the size of each chunk with the chunk_size parameter.

  - Overlap ratio: Set the percentage of overlap between adjacent chunks with the overlap_ratio parameter, preserving continuity of information across chunk boundaries.

- Run hyperparameter tuning:

python hyperparameter_tuning.py --input example_input.txt --output tuned_output.txt

The script automatically tunes the chunk size and overlap ratio for the input document and produces the best chunking result it finds.

- Evaluate the chunking results:

  Use the provided evaluation script to check how effective the chunking strategy is:

python evaluate.py --input example_input.txt --output example_output.txt
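To illustrate how embedding-similarity chunking (the SemanticChunk strategy above) can decide where to cut, here is a minimal sketch that compares each sentence with the previous one and starts a new chunk when their similarity drops below a threshold. It swaps a real embedding model for a toy bag-of-words vector so it runs with the standard library alone; the function names, the 0.2 threshold, and the sentence-level granularity are assumptions, and the repository's SemanticChunk may work differently.

```python
import math
import re
from collections import Counter


def embed(sentence: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z]+", sentence.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors (0.0 if either is empty)."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def semantic_chunk(sentences: list[str], threshold: float = 0.2) -> list[list[str]]:
    """Group consecutive sentences; open a new chunk when the similarity to the
    previous sentence falls below threshold (hypothetical rule)."""
    chunks: list[list[str]] = []
    prev = None
    for sentence in sentences:
        vec = embed(sentence)
        if prev is None or cosine(prev, vec) < threshold:
            chunks.append([sentence])
        else:
            chunks[-1].append(sentence)
        prev = vec
    return chunks


# Usage: the two sentences about the cat share words, the third does not.
print(semantic_chunk(["The cat sat on the mat.", "The cat chased the mouse.", "Stock prices rose sharply today."]))
# prints [['The cat sat on the mat.', 'The cat chased the mouse.'], ['Stock prices rose sharply today.']]
```

The threshold is the main knob in this kind of approach: a higher value cuts more often and yields smaller, more focused chunks.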

Example

Suppose we need to chunk the following excerpt from the U.S. Constitution:

Original text:

Section. 1.

All legislative Powers herein granted shall be vested in a Congress of the United States, which shall consist of a Senate and House of Representatives.

Section. 2.

The House of Representatives shall be composed of Members chosen every second Year by the People of the several States, and the Electors in each State shall have the Qualifications requisite for Electors of the most numerous Branch of the State Legislature.

No Person shall be a Representative who shall not have attained to the Age of twenty five Years, and been seven Years a Citizen of the United States, and who shall not, when elected, be an Inhabitant of that State in which he shall be chosen.

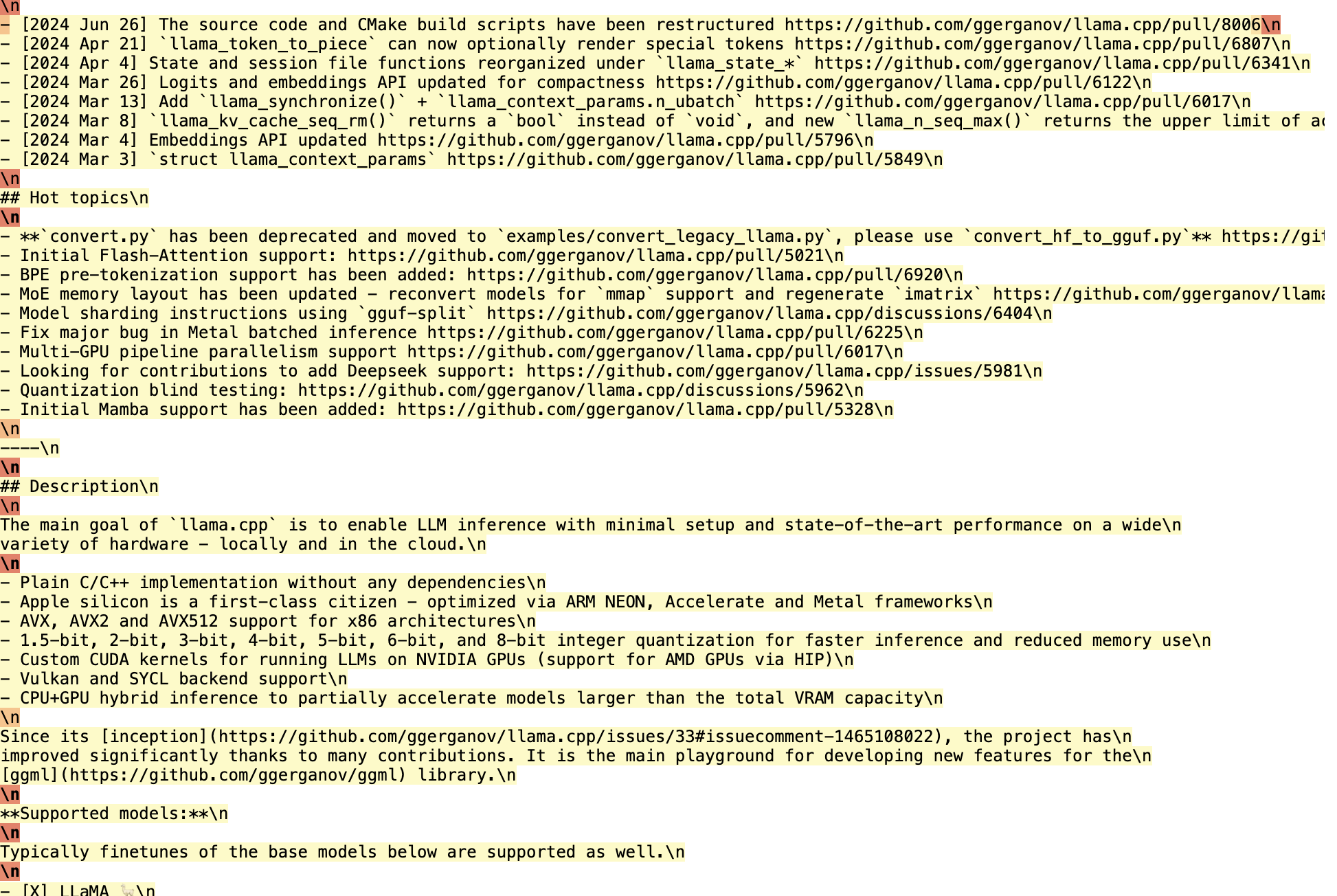

Chunking is performed using the zChunk algorithm:

- Choose a special token: Pick a token that never appears in the corpus (e.g., "段").

- Insert the token: Have Llama insert the token into the user message, which contains the document text.

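SYSTEM_PROMPT (simplified):

Your job is to act as a chunker.

You should insert the "段" token throughout the input.

Your goal is to separate the content into semantically relevant groupings.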

- Generate the chunks: Llama returns the text with the token inserted at each chunk boundary:

Section. 1.

All legislative Powers herein granted shall be vested in a Congress of the United States, which shall consist of a Senate and House of Representatives.段

Section. 2.

The House of Representatives shall be composed of Members chosen every second Year by the People of the several States, and the Electors in each State shall have the Qualifications requisite for Electors of the most numerous Branch of the State Legislature.段

No Person shall be a Representative who shall not have attained to the Age of twenty five Years, and been seven Years a Citizen of the United States, and who shall not, when elected, be an Inhabitant of that State in which he shall be chosen.段

Splitting the output on the token segments the document into semantically related chunks, each of which can be retrieved independently, improving the signal-to-noise ratio and the accuracy of information retrieval.
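The token-insertion steps can be sketched as two helpers: one that builds the system and user messages (checking that the chosen token really is absent from the document) and one that splits the model's annotated output back into chunks. The call to Llama-70B itself is deliberately left out because the inference setup is not described here; the helper names and the system/user message layout are assumptions.

```python
CHUNK_TOKEN = "段"  # special token assumed to be absent from the corpus

SYSTEM_PROMPT = (
    "Your job is to act as a chunker.\n"
    f'You should insert the "{CHUNK_TOKEN}" token throughout the input.\n'
    "Your goal is to separate the content into semantically relevant groupings."
)


def build_messages(document: str) -> list[dict[str, str]]:
    """Build system/user chat messages; the document itself is the user message."""
    if CHUNK_TOKEN in document:
        # A token that already occurs in the text would create spurious splits.
        raise ValueError(f"document already contains {CHUNK_TOKEN!r}; choose another token")
    return [
        {"role": "system", "content": SYSTEM_PROMPT},
        {"role": "user", "content": document},
    ]


def split_chunks(model_output: str) -> list[str]:
    """Split the model's annotated output on the token, dropping empty pieces."""
    return [piece.strip() for piece in model_output.split(CHUNK_TOKEN) if piece.strip()]
```

Applied to the annotated Constitution excerpt above, split_chunks would return three chunks, one per 段-terminated passage; the trailing empty piece after the last token is discarded.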

Optimization

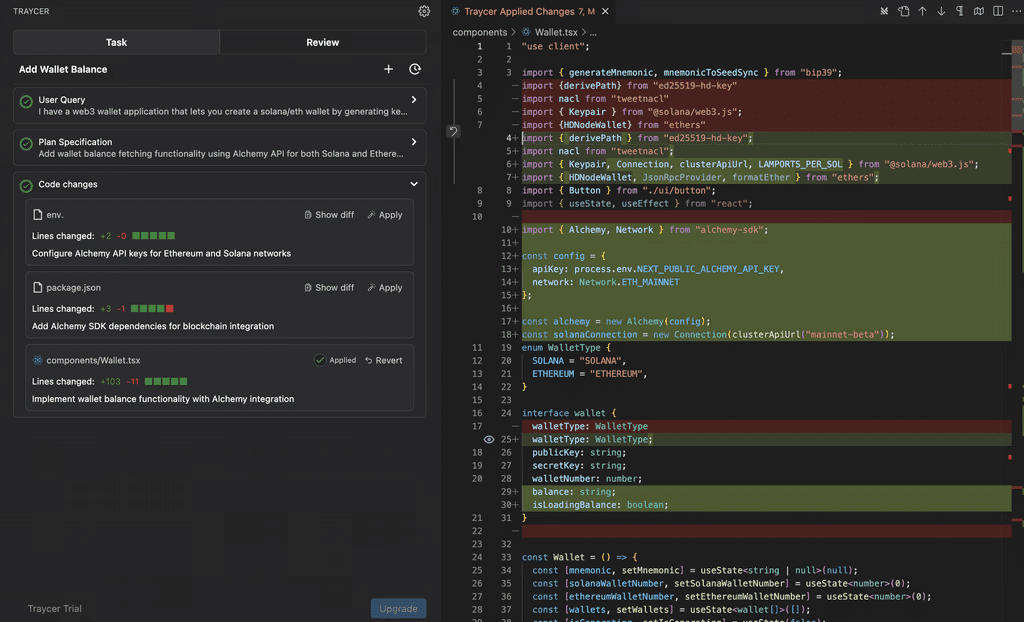

- With Llama running locally, entire passages can be processed efficiently, and the model's logprobs can be inspected to determine where chunks should be split.

- Processing 450,000 characters takes about 15 minutes, though this could be reduced significantly by optimizing the code.
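Inspecting logprobs instead of reading generated text can be sketched as follows: given the original text split into pieces and, for each piece, the log-probability the model assigns to the chunk token appearing right after it, cut wherever that probability reaches a threshold. The input format, the function name, and the 0.5 threshold are hypothetical; how the logprobs are obtained depends on the local inference stack and is not shown.

```python
import math


def boundaries_from_logprobs(
    pieces: list[str], chunk_logprobs: list[float], threshold: float = 0.5
) -> list[str]:
    """Cut after pieces[i] when exp(chunk_logprobs[i]) >= threshold.

    pieces[i] is the i-th token/span of the original text; chunk_logprobs[i] is the
    model's log-probability that the chunk token follows it (hypothetical format).
    """
    if len(pieces) != len(chunk_logprobs):
        raise ValueError("pieces and chunk_logprobs must have the same length")
    chunks: list[str] = []
    current: list[str] = []
    for piece, logprob in zip(pieces, chunk_logprobs):
        current.append(piece)
        if math.exp(logprob) >= threshold:  # convert log-probability to probability
            chunks.append("".join(current))
            current = []
    if current:  # text after the last boundary forms the final chunk
        chunks.append("".join(current))
    return chunks
```

A property of this sketch is that the original text is never rewritten: chunks are built only by joining existing pieces, so the model cannot alter or drop content while chunking.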

Benchmarks

- On the LegalBenchConsumerContractsQA dataset, zChunk achieves higher retrieval ratio and signal ratio scores than both NaiveChunk and semantic chunking.
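The article does not define its two benchmark metrics. Assuming retrieval ratio means character-level recall (the share of relevant text that was retrieved) and signal ratio means character-level precision (the share of retrieved text that is relevant), they could be computed as in this sketch; both definitions and the half-open (start, end) span format are assumptions.

```python
def _to_chars(spans: list[tuple[int, int]]) -> set[int]:
    """Expand half-open [start, end) character spans into a set of offsets."""
    return {i for start, end in spans for i in range(start, end)}


def retrieval_ratio(retrieved: list[tuple[int, int]], relevant: list[tuple[int, int]]) -> float:
    """Assumed definition: fraction of relevant characters that were retrieved (recall)."""
    rel = _to_chars(relevant)
    return len(_to_chars(retrieved) & rel) / len(rel) if rel else 0.0


def signal_ratio(retrieved: list[tuple[int, int]], relevant: list[tuple[int, int]]) -> float:
    """Assumed definition: fraction of retrieved characters that are relevant (precision)."""
    ret = _to_chars(retrieved)
    return len(ret & _to_chars(relevant)) / len(ret) if ret else 0.0
```

For a relevant span (10, 20) and a retrieved span (0, 15), the sketch gives a retrieval ratio of 0.5 and a signal ratio of 1/3: large chunks tend to raise the first metric while diluting the second.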

With the zChunk algorithm, documents of any type can be segmented without relying on regular expressions or hand-crafted rules, improving the efficiency and accuracy of RAG applications.

© Copyright notice

Article copyright AI Sharing Circle. All rights reserved; please do not reproduce without permission.
