Retrieval-Augmented Generation with Knowledge Graphs for Customer Service Question Answering

Paper address: https://arxiv.org/abs/2404.17723

A knowledge graph can only capture entity relationships that are extracted in a targeted manner. Relationships that can be extracted this reliably can be understood as approaching structured data.

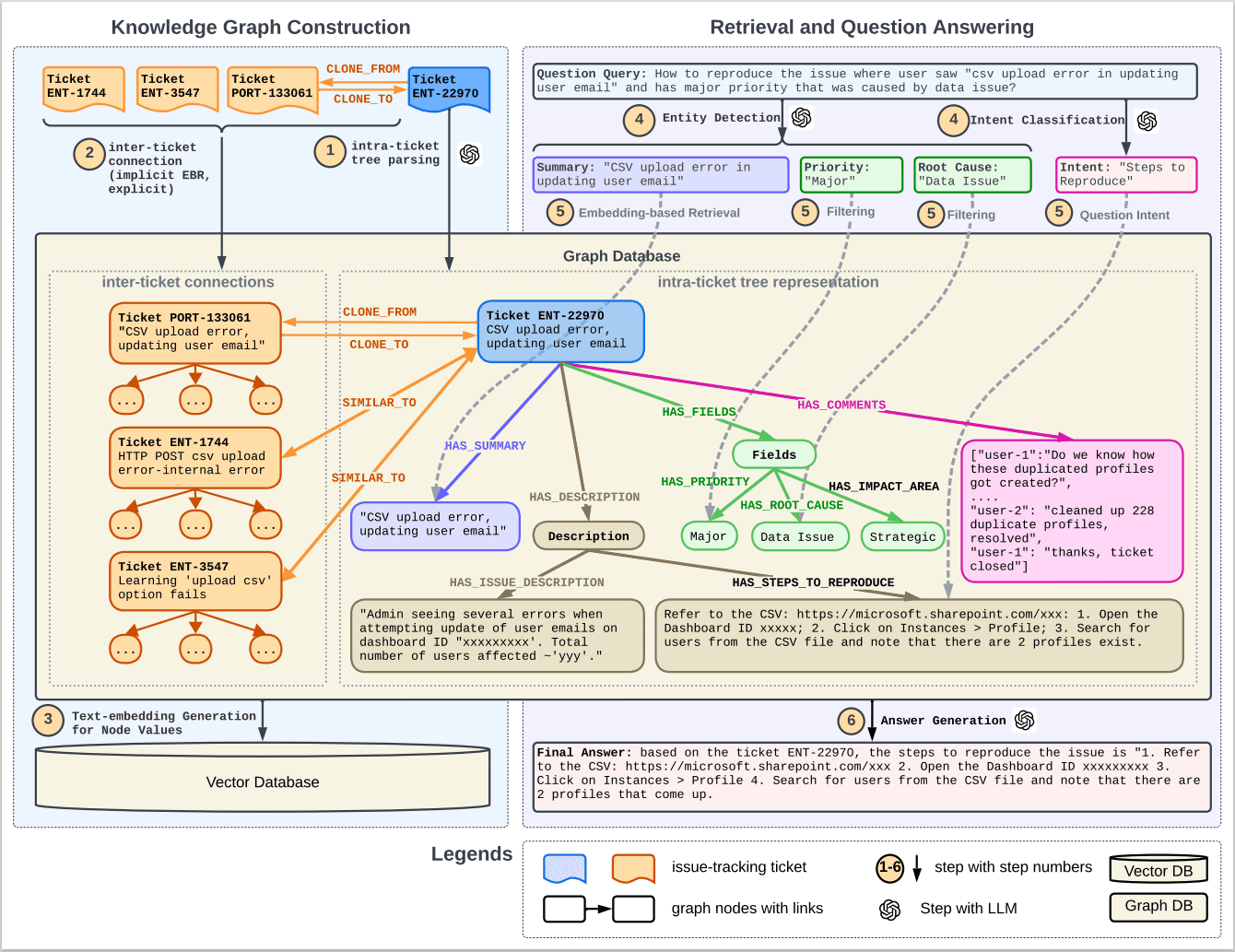

Figure 1 illustrates the workflow of a customer service Q&A system that combines a Knowledge Graph (KG) with Retrieval-Augmented Generation (RAG). The process is summarized below:

1. Knowledge graph construction: The system builds a comprehensive knowledge graph from historical customer service issue tickets, in two main steps:

- Intra-ticket tree representation: each issue ticket is parsed into a tree structure whose nodes represent different parts of the ticket (e.g., summary, description, priority).

- Inter-ticket linking: the individual ticket trees are connected into a complete graph, based on explicit links recorded in the issue tracker and implicit links inferred from semantic similarity.

2. Embedding Generation: Generate embedding vectors for nodes in the graph, using pre-trained text embedding models (e.g., BERT or E5), and store these embeddings in a vector database.

3. Retrieval and question answering:

- Query entity and intent identification: the user query is parsed to recognize named entities and intents.

- Embedding-based retrieval: the extracted entities are used to retrieve the most relevant tickets and select the relevant subgraphs.

- Filtering: the retrieved content is screened further to identify the most relevant information.

4. Retrieved tickets: The system retrieves specific tickets related to the user's query, such as ENT-22970, PORT-133061, ENT-1744, and ENT-3547, and displays the clone (CLONE_FROM/CLONE_TO) and similarity (SIMILAR_TO) relationships between them.

5. Answer generation: Finally, the system combines the retrieved information with the original user query and passes both to a large language model (LLM) to generate an answer.

6. Graph database and vector database: Throughout the process, the graph database stores and manages the nodes and links of the knowledge graph, while the vector database stores and manages the text embedding vectors of the nodes.

7. LLM usage: The LLM is used at several stages of the pipeline: parsing text, generating queries, extracting subgraphs, and generating answers.
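
The graph-construction step (item 1 above) can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the paper's implementation: the `Ticket` class, the `HAS_SECTION` relation name, the `DEMO-*` ticket IDs, the 0.5 threshold, and the choice to compare ticket summaries are all hypothetical, and `toy_embed` is a bag-of-words stand-in for a real embedding model such as BERT or E5.

```python
import math
from collections import Counter
from dataclasses import dataclass
from itertools import combinations
from typing import Dict, List, Optional, Tuple


@dataclass
class Ticket:
    # Hypothetical ticket record; field names are assumptions for this sketch.
    ticket_id: str
    sections: Dict[str, str]           # e.g. {"summary": ..., "description": ...}
    cloned_from: Optional[str] = None  # explicit link recorded in the tracker


def toy_embed(text: str) -> Counter:
    """Stand-in for a real embedding model: bag-of-words term counts."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def build_graph(
    tickets: List[Ticket], threshold: float = 0.5
) -> Tuple[Dict[str, str], List[Tuple[str, str, str]]]:
    nodes: Dict[str, str] = {}              # node id -> text
    edges: List[Tuple[str, str, str]] = []  # (source, relation, target)

    # Intra-ticket tree: one root node per ticket, one child node per section.
    for t in tickets:
        nodes[t.ticket_id] = ""
        for name, text in t.sections.items():
            node_id = f"{t.ticket_id}/{name}"
            nodes[node_id] = text
            edges.append((t.ticket_id, "HAS_SECTION", node_id))
        # Explicit inter-ticket links taken from the tracker metadata.
        if t.cloned_from:
            edges.append((t.cloned_from, "CLONE_TO", t.ticket_id))
            edges.append((t.ticket_id, "CLONE_FROM", t.cloned_from))

    # Implicit inter-ticket links: similarity of summaries above a threshold.
    for a, b in combinations(tickets, 2):
        sim = cosine(
            toy_embed(a.sections.get("summary", "")),
            toy_embed(b.sections.get("summary", "")),
        )
        if sim >= threshold:
            edges.append((a.ticket_id, "SIMILAR_TO", b.ticket_id))
    return nodes, edges


demo_tickets = [
    Ticket("DEMO-1", {"summary": "login page fails to load", "priority": "high"}),
    Ticket("DEMO-2", {"summary": "login page fails to load on mobile"},
           cloned_from="DEMO-1"),
    Ticket("DEMO-3", {"summary": "export csv report timeout"}),
]
nodes, edges = build_graph(demo_tickets)
```

In a real system the `nodes` and `edges` collections would be written to a graph database and the node embeddings to a vector database, as items 2 and 6 describe; plain Python containers are used here only to keep the sketch self-contained.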

This flowchart gives a high-level view of how combining a knowledge graph with retrieval-augmented generation can improve the efficiency and accuracy of an automated customer service Q&A system.

The left side of this figure shows the construction of the knowledge graph; the right side shows the retrieval and Q&A process.
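
The retrieval and Q&A side can be sketched in the same spirit. The example below is a simplified illustration, not the authors' code: the in-memory `NODE_TEXT` store, the `DEMO-*` IDs, the max-over-sections ticket score, and the prompt wording are assumptions, `toy_embed` again stands in for a real embedding model, and the whole query is used in place of LLM-extracted entities. The resulting prompt would then be sent to an LLM of your choice, which is omitted here.

```python
import math
from collections import Counter
from typing import Dict, List

# Hypothetical stand-in for the vector database: node id -> section text.
NODE_TEXT: Dict[str, str] = {
    "DEMO-1/summary": "login page fails to load",
    "DEMO-1/resolution": "clear the browser cache and retry",
    "DEMO-2/summary": "csv export times out",
    "DEMO-2/resolution": "reduce the date range of the report",
}


def toy_embed(text: str) -> Counter:
    """Stand-in for a real embedding model: bag-of-words term counts."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve_tickets(query: str, node_text: Dict[str, str], top_k: int = 1) -> List[str]:
    """Score every node against the query, then rank tickets by their best node."""
    q = toy_embed(query)
    scores: Dict[str, float] = {}
    for node_id, text in node_text.items():
        ticket_id = node_id.split("/")[0]
        score = cosine(q, toy_embed(text))
        scores[ticket_id] = max(scores.get(ticket_id, 0.0), score)
    ranked = sorted(scores, key=lambda tid: scores[tid], reverse=True)
    return ranked[:top_k]


def extract_subgraph(ticket_id: str, node_text: Dict[str, str]) -> Dict[str, str]:
    """Collect all section nodes that belong to one ticket tree."""
    prefix = ticket_id + "/"
    return {
        node_id[len(prefix):]: text
        for node_id, text in node_text.items()
        if node_id.startswith(prefix)
    }


def build_prompt(query: str, subgraphs: Dict[str, Dict[str, str]]) -> str:
    """Combine the retrieved ticket content with the original question."""
    lines = ["Answer the customer question using only the tickets below.", ""]
    for ticket_id, sections in subgraphs.items():
        lines.append(f"Ticket {ticket_id}:")
        for name, text in sections.items():
            lines.append(f"  {name}: {text}")
    lines += ["", f"Question: {query}"]
    return "\n".join(lines)


query = "why does the login page fail to load"
top_tickets = retrieve_tickets(query, NODE_TEXT)
prompt = build_prompt(query, {t: extract_subgraph(t, NODE_TEXT) for t in top_tickets})
```

Retrieving at the ticket level and then pulling in the whole ticket tree keeps related sections (such as the problem summary and its resolution) together in the prompt, which is the main benefit the graph structure adds over retrieving isolated text chunks.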
