Microsoft AI Agent Introductory Course: The Tool Use Design Pattern

Tools are interesting because they give AI agents a broader range of capabilities. Instead of being limited to a fixed set of actions, an agent with tools can perform a wide variety of operations. In this chapter, we look at the Tool Use Design Pattern, which describes how AI agents can use specific tools to achieve their goals.

Introduction

In this lesson, we aim to answer the following questions:

- What is the tool use design pattern?

- What use cases can it be applied to?

- What elements/building blocks are needed to implement the design pattern?

- What are the special considerations for using the tool use design pattern to build trustworthy AI agents?

Learning Objectives

Upon completion of this lesson, you will be able to:

- Define the tool use design pattern and its purpose.

- Identify use cases where the tool use design pattern applies.

- Understand the key elements needed to implement the design pattern.

- Recognize the considerations for ensuring the trustworthiness of AI agents that use this design pattern.

What is the tool use design pattern?

The Tool Use Design Pattern focuses on giving Large Language Models (LLMs) the ability to interact with external tools to achieve specific goals. Tools are pieces of code that an agent can execute to perform actions. A tool can be a simple function, such as a calculator, or an API call to a third-party service, such as a stock price lookup or a weather forecast. In the context of AI agents, tools are designed to be executed by the agent in response to model-generated function calls.

What use cases can it be applied to?

AI agents can use tools to complete complex tasks, retrieve information, or make decisions. The tool use design pattern is typically used in scenarios that require dynamic interaction with external systems, such as databases, web services, or code interpreters. This ability is useful for many different use cases, including:

- Dynamic information retrieval: Agents can query external APIs or databases for up-to-date data (e.g., querying a SQLite database for data analysis, or fetching stock prices or weather information).

- Code execution and interpretation: Agents can execute code or scripts to solve math problems, generate reports, or perform simulations.

- Workflow automation: Agents can automate repetitive or multi-step workflows by integrating tools such as task schedulers, email services, or data pipelines.

- Customer support: Agents can interact with CRM systems, ticketing platforms, or knowledge bases to resolve user queries.

- Content generation and editing: Agents can use tools such as grammar checkers, text summarizers, or content safety evaluators to assist with content creation tasks.

What elements/building blocks are needed to implement the tool use design pattern?

These building blocks allow AI agents to perform a wide variety of tasks. Let's look at the key elements needed to implement the tool use design pattern:

- Function/tool calling: This is the primary way LLMs interact with tools. Functions or tools are reusable blocks of code that agents use to carry out tasks. They can range from simple functions, such as a calculator, to API calls to third-party services, such as stock price lookups or weather forecasts.

- Dynamic information retrieval: Agents can query external APIs or databases for the latest data. This is useful for tasks such as data analysis or fetching stock prices and weather information.

- Code execution and interpretation: Agents can execute code or scripts to solve math problems, generate reports, or perform simulations.

- Workflow automation: This involves automating repetitive or multi-step workflows by integrating tools such as task schedulers, email services, or data pipelines.

- Customer support: Agents can interact with CRM systems, ticketing platforms, or knowledge bases to resolve user queries.

- Content generation and editing: Agents can use tools such as grammar checkers, text summarizers, or content safety evaluators to assist with content creation tasks.

Next, let's look at function/tool calls in more detail.

Function/tool calling

Function calling is the primary way we enable LLMs to interact with tools. You will often see "function" and "tool" used interchangeably, because functions (reusable blocks of code) are the tools that agents use to carry out tasks. For a function's code to be invoked, the LLM must compare the user's request against the function's description. To make this possible, a schema containing the descriptions of all available functions is sent to the LLM. The LLM then selects the function best suited to the task and returns its name and arguments. The application invokes the selected function and sends its response back to the LLM, which uses this information to respond to the user's request.
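The round trip described above can be sketched without any network access. This is a minimal illustration only: `fake_model` is a hard-coded stand-in for an LLM, and the `add` tool, the `tool_call` message key, and `run_round_trip` are hypothetical names chosen for this sketch rather than part of any SDK.

```python
import json

# Hypothetical tool: a tiny calculator that adds two numbers.
def add(a: float, b: float) -> str:
    return json.dumps({"result": a + b})

# Schema describing the tool, in the style sent to an LLM.
TOOLS = [{
    "type": "function",
    "function": {
        "name": "add",
        "description": "Add two numbers",
        "parameters": {
            "type": "object",
            "properties": {
                "a": {"type": "number"},
                "b": {"type": "number"},
            },
            "required": ["a", "b"],
        },
    },
}]

def fake_model(messages, tools):
    """Stand-in for an LLM; hard-coded, NOT a real model call.

    First turn: return a tool call. After a tool result: return final text.
    """
    last = messages[-1]
    if last["role"] == "tool":
        result = json.loads(last["content"])["result"]
        return {"role": "assistant", "content": f"The answer is {result}."}
    return {
        "role": "assistant",
        "content": None,
        "tool_call": {
            "name": tools[0]["function"]["name"],
            "arguments": json.dumps({"a": 2, "b": 3}),
        },
    }

def run_round_trip(user_text):
    messages = [{"role": "user", "content": user_text}]
    # 1. Send the request plus the schema; the model answers with a tool call.
    reply = fake_model(messages, TOOLS)
    messages.append(reply)
    # 2. The application, not the model, executes the selected function.
    call = reply["tool_call"]
    args = json.loads(call["arguments"])
    tool_result = {"add": add}[call["name"]](**args)
    messages.append({"role": "tool", "name": call["name"], "content": tool_result})
    # 3. Send the tool result back; the model produces the final answer.
    return fake_model(messages, TOOLS)["content"]
```

The point to notice is that the model only ever returns a name and a JSON string of arguments; running the function and feeding the result back is the application's job.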

To implement function calling for agents, developers need:

- An LLM that supports function calling

- A schema containing function descriptions

- The code for each function described

Let's illustrate with an example of getting the current time in a city:

- Initialize an LLM that supports function calling:

Not all models support function calling, so it is important to check that the LLM you are using does. Azure OpenAI supports function calling. We can start by initializing the Azure OpenAI client.

    import os
    from openai import AzureOpenAI

    # Initialize the Azure OpenAI client
    client = AzureOpenAI(
        azure_endpoint=os.getenv("AZURE_OPENAI_ENDPOINT"),
        api_key=os.getenv("AZURE_OPENAI_API_KEY"),
        api_version="2024-05-01-preview",
    )

- Create a function schema:

Next, we define a JSON schema that contains the function's name, a description of what the function does, and the names and descriptions of its parameters. We then pass this schema to the client created earlier, along with the user's request to find the time in San Francisco. It is important to note that what is returned is a tool call, not the final answer to the question. As mentioned earlier, the LLM returns the name of the function it selected for the task and the arguments that will be passed to it.

    # Function description for the model to read
    tools = [
        {
            "type": "function",
            "function": {
                "name": "get_current_time",
                "description": "Get the current time in a given location",
                "parameters": {
                    "type": "object",
                    "properties": {
                        "location": {
                            "type": "string",
                            "description": "The city name, e.g. San Francisco",
                        },
                    },
                    "required": ["location"],
                },
            }
        }
    ]

    # Initial user message
    messages = [{"role": "user", "content": "What's the current time in San Francisco"}]

    # First API call: Ask the model to use the function
    # (deployment_name must hold the name of your Azure OpenAI model deployment)
    response = client.chat.completions.create(
        model=deployment_name,
        messages=messages,
        tools=tools,
        tool_choice="auto",
    )

    # Process the model's response
    response_message = response.choices[0].message
    messages.append(response_message)

    print("Model's response:")
    print(response_message)

Model's response:

    ChatCompletionMessage(content=None, role='assistant', function_call=None, tool_calls=[ChatCompletionMessageToolCall(id='call_pOsKdUlqvdyttYB67MOj434b', function=Function(arguments='{"location":"San Francisco"}', name='get_current_time'), type='function')])

- The function code required to carry out the task:

Now that the LLM has selected the function that needs to be run, the code that carries out the task must be implemented and executed. We can implement the code to get the current time in Python. We also need to write code that extracts the name and arguments from response_message to get the final result.

    import json
    from datetime import datetime
    from zoneinfo import ZoneInfo

    # Illustrative lookup table (an assumption; the lesson does not show TIMEZONE_DATA)
    TIMEZONE_DATA = {
        "san francisco": "America/Los_Angeles",
        "tokyo": "Asia/Tokyo",
    }

    def get_current_time(location):
        """Get the current time for a given location"""
        print(f"get_current_time called with location: {location}")
        location_lower = location.lower()

        for key, timezone in TIMEZONE_DATA.items():
            if key in location_lower:
                print(f"Timezone found for {key}")
                current_time = datetime.now(ZoneInfo(timezone)).strftime("%I:%M %p")
                return json.dumps({
                    "location": location,
                    "current_time": current_time
                })

        print(f"No timezone data found for {location_lower}")
        return json.dumps({"location": location, "current_time": "unknown"})

    # Handle function calls
    if response_message.tool_calls:
        for tool_call in response_message.tool_calls:
            if tool_call.function.name == "get_current_time":
                function_args = json.loads(tool_call.function.arguments)
                time_response = get_current_time(
                    location=function_args.get("location")
                )
                messages.append({
                    "tool_call_id": tool_call.id,
                    "role": "tool",
                    "name": "get_current_time",
                    "content": time_response,
                })
    else:
        print("No tool calls were made by the model.")

    # Second API call: Get the final response from the model
    final_response = client.chat.completions.create(
        model=deployment_name,
        messages=messages,
    )

    # Print the final answer (a bare return is only valid inside a function)
    print(final_response.choices[0].message.content)

Output:

    get_current_time called with location: San Francisco
    Timezone found for san francisco
    The current time in San Francisco is 09:24 AM.
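The handler above checks for a single function name. Once an agent has several tools, one common approach is a lookup table from tool name to callable, with failures reported back to the model as JSON instead of raising. The sketch below assumes this design; `TOOL_REGISTRY` and `dispatch_tool_call` are hypothetical names, and `get_current_time` is stubbed with a fixed value so the example stays deterministic.

```python
import json

def get_current_time(location: str) -> str:
    # Placeholder body; a fixed value keeps the sketch deterministic.
    return json.dumps({"location": location, "current_time": "09:24 AM"})

# Hypothetical registry mapping tool names to Python callables.
TOOL_REGISTRY = {"get_current_time": get_current_time}

def dispatch_tool_call(name: str, arguments_json: str) -> str:
    """Run one tool call and always return a JSON string for the model."""
    func = TOOL_REGISTRY.get(name)
    if func is None:
        return json.dumps({"error": f"unknown tool: {name}"})
    try:
        args = json.loads(arguments_json)
    except json.JSONDecodeError:
        return json.dumps({"error": "arguments were not valid JSON"})
    if not isinstance(args, dict):
        return json.dumps({"error": "arguments must be a JSON object"})
    try:
        return func(**args)
    except TypeError as exc:
        # Raised for missing or unexpected arguments, among other causes.
        return json.dumps({"error": f"bad arguments: {exc}"})
```

Returning the error as tool content, rather than crashing, gives the model a chance to retry with corrected arguments or to explain the problem to the user.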

Function calling is at the heart of most, if not all, agent tool use designs, but implementing it from scratch can be challenging. As we learned in Lesson 2, agentic frameworks provide pre-built building blocks for implementing tool use.

Examples of tool use with agentic frameworks

Here are some examples of how you can implement the tool use design pattern using different agentic frameworks:

Semantic Kernel

Semantic Kernel is an open-source AI framework for .NET, Python, and Java developers working with Large Language Models (LLMs). It simplifies function calling by automatically describing your functions and their parameters to the model through a process called serialization. It also handles the back-and-forth communication between the model and your code. Another advantage of using an agentic framework like Semantic Kernel is that it gives you access to pre-built tools such as File Search and Code Interpreter.

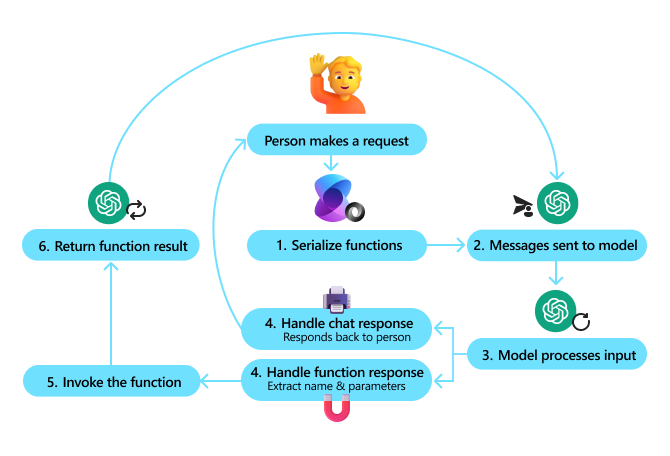

The following figure illustrates the process of making function calls using the Semantic Kernel:

In Semantic Kernel, functions/tools are called plugins. We can convert the get_current_time function we saw earlier into a plugin by turning it into a class with the function inside it. We also import the kernel_function decorator, which takes the description of the function. When you then create a kernel with the GetCurrentTimePlugin, the kernel automatically serializes the function and its parameters, creating the schema to send to the LLM in the process.

    from semantic_kernel.functions import kernel_function

    class GetCurrentTimePlugin:
        # __init__ must be a regular method; an async __init__ cannot construct the object
        def __init__(self, location):
            self.location = location

        @kernel_function(
            description="Get the current time for a given location"
        )
        def get_current_time(self, location: str = ""):
            ...

    from semantic_kernel import Kernel

    # Create the kernel
    kernel = Kernel()

    # Create the plugin (the location value here is only an example)
    get_current_time_plugin = GetCurrentTimePlugin("San Francisco")

    # Add the plugin to the kernel; a plugin_name is given because a plain object is passed
    kernel.add_plugin(get_current_time_plugin, plugin_name="GetCurrentTimePlugin")
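To make the idea of serialization more concrete, the sketch below derives a function-calling schema from a plain Python function signature using the standard `inspect` module. It is an illustration of the general technique only, not Semantic Kernel's actual implementation; `describe_function` and the `_JSON_TYPES` mapping are hypothetical names introduced here.

```python
import inspect

# Assumed mapping from Python annotations to JSON Schema type names.
_JSON_TYPES = {str: "string", int: "integer", float: "number", bool: "boolean"}

def describe_function(func, description: str) -> dict:
    """Build a function-calling schema entry from a function's signature."""
    properties, required = {}, []
    for name, param in inspect.signature(func).parameters.items():
        if name == "self":
            continue
        # Unannotated or unrecognized types fall back to "string".
        properties[name] = {"type": _JSON_TYPES.get(param.annotation, "string")}
        # Parameters without a default value are treated as required.
        if param.default is inspect.Parameter.empty:
            required.append(name)
    return {
        "type": "function",
        "function": {
            "name": func.__name__,
            "description": description,
            "parameters": {
                "type": "object",
                "properties": properties,
                "required": required,
            },
        },
    }

def get_current_time(location: str) -> str:
    ...

schema = describe_function(get_current_time, "Get the current time in a given location")
```

The resulting `schema` has the same shape as the hand-written `tools` entry shown earlier, minus the per-parameter descriptions, which a framework would typically collect from decorators or annotations.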

Azure AI Agent Service

Azure AI Agent Service is a newer agentic framework designed to let developers securely build, deploy, and scale high-quality, extensible AI agents without managing the underlying compute and storage resources. It is particularly useful for enterprise applications because it is a fully managed service with enterprise-grade security.

Azure AI Agent Service offers several advantages over developing directly with the LLM API, including:

- Automatic tool calling - no need to parse a tool call, invoke the tool, and handle the response; all of this is done server-side.

- Securely managed data - instead of managing your own conversation state, you can rely on threads to store all the information you need.

- Out-of-the-box tools - tools you can use to interact with data sources such as Bing, Azure AI Search, and Azure Functions.

The tools available in the Azure AI Agent Service can be divided into two categories:

- Knowledge tools:

  - Grounding with Bing Search

  - File Search

  - Azure AI Search

- Action tools:

  - Function Calling

  - Code Interpreter

  - OpenAPI-defined tools

  - Azure Functions

Agent Service allows us to use these tools together as a toolset. It also uses threads, which keep track of the message history of a particular conversation.

Suppose you are a sales agent for Contoso Corporation. You want to develop a dialog agent that can answer questions about your sales data.

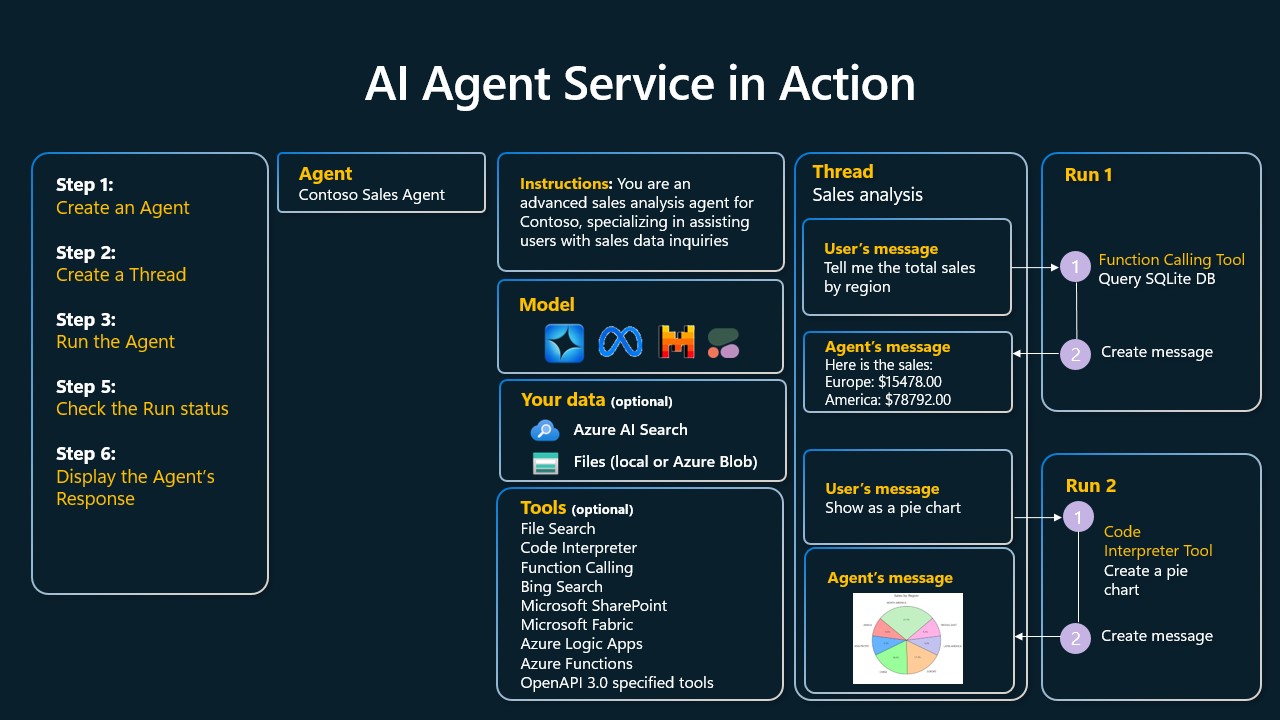

The following figure illustrates how to use the Azure AI Agent Service to analyze your sales data:

To use any of these tools with a service, we can create a client and define a tool or toolset. To actually accomplish this, we can use the following Python code. The Large Language Model (LLM) will be able to look at the toolset and decide whether to use a user-created function or not. fetch_sales_data_using_sqlite_query or a pre-built code interpreter, depending on the user request.

    import os
    from azure.ai.projects import AIProjectClient
    from azure.identity import DefaultAzureCredential
    # fetch_sales_data_using_sqlite_query is defined in the fetch_sales_data_functions.py file
    from fetch_sales_data_functions import fetch_sales_data_using_sqlite_query
    from azure.ai.projects.models import ToolSet, FunctionTool, CodeInterpreterTool

    project_client = AIProjectClient.from_connection_string(
        credential=DefaultAzureCredential(),
        conn_str=os.environ["PROJECT_CONNECTION_STRING"],
    )

    # Wrap fetch_sales_data_using_sqlite_query as a function tool and add it to the toolset
    # (FunctionTool takes a collection of callables, so the function is passed in a set)
    fetch_data_function = FunctionTool({fetch_sales_data_using_sqlite_query})
    toolset = ToolSet()
    toolset.add(fetch_data_function)

    # Initialize the Code Interpreter tool and add it to the same toolset
    # (creating a second ToolSet here would discard the function tool)
    code_interpreter = CodeInterpreterTool()
    toolset.add(code_interpreter)

    agent = project_client.agents.create_agent(
        model="gpt-4o-mini",
        name="my-agent",
        instructions="You are a helpful agent",
        toolset=toolset,
    )
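The fetch_sales_data_using_sqlite_query function is imported above but not shown in this lesson. As a rough idea of what such a function might look like, here is a hypothetical sketch using Python's built-in `sqlite3` module. The table name, columns, and sample rows are invented for illustration, and an in-memory database stands in for the real sales data.

```python
import json
import sqlite3

# Hypothetical in-memory database standing in for the real sales data.
_conn = sqlite3.connect(":memory:")
_conn.execute("CREATE TABLE sales (region TEXT, revenue REAL)")
_conn.executemany(
    "INSERT INTO sales VALUES (?, ?)",
    [("EMEA", 120.0), ("APAC", 80.0)],
)
_conn.commit()

def fetch_sales_data_using_sqlite_query(query: str) -> str:
    """Run a SQLite query against the sales database and return rows as JSON.

    :param query: A SQLite SELECT statement.
    :return: JSON string with the rows, or an error message.
    """
    try:
        cursor = _conn.execute(query)
        # description is None for statements that return no columns.
        columns = [col[0] for col in cursor.description or []]
        rows = [dict(zip(columns, row)) for row in cursor.fetchall()]
        return json.dumps({"rows": rows})
    except sqlite3.Error as exc:
        # Report SQL errors to the model instead of raising.
        return json.dumps({"error": str(exc)})
```

Note that this sketch does not itself restrict the query to reads; limiting what model-generated SQL can do is covered in the considerations section that follows.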

What are the special considerations for using the tool use design pattern to build trustworthy AI agents?

A common concern with SQL dynamically generated by LLMs is security, particularly the risk of SQL injection or malicious actions such as dropping or tampering with the database. While these concerns are valid, they can be effectively mitigated by properly configuring database access permissions. For most databases, this involves configuring the database as read-only. For database services such as PostgreSQL or Azure SQL, the application should be assigned a read-only (SELECT) role.

Running the application in a secure environment further enhances protection. In enterprise scenarios, data is typically extracted from operational systems and transformed into a read-only database or data warehouse with a user-friendly schema. This approach ensures that the data is secure and optimized for performance and accessibility, and that the application has restricted, read-only access.
