Vision Agent: A Visual Intelligence to Solve Multiple Visual Target Detection Tasks

General Introduction

Vision Agent is an open source project developed by LandingAI (Enda Wu's team) and hosted on GitHub, aiming to help users quickly generate code to solve computer vision tasks. It utilizes an advanced agent framework and a multimodal model to generate efficient vision AI code with simple prompts for a variety of scenarios such as image detection, video tracking, object counting, and more. The tool not only supports rapid prototyping, but also seamlessly deploys to production environments and is widely applicable to manufacturing, medical, agriculture, etc. Vision Agent is designed to automate complex vision tasks, and developers can obtain runnable code by simply providing a description of the task, which greatly reduces the threshold of vision AI development. Currently, it has received more than 3,000 star tags, and the community is highly active and continuously updated.

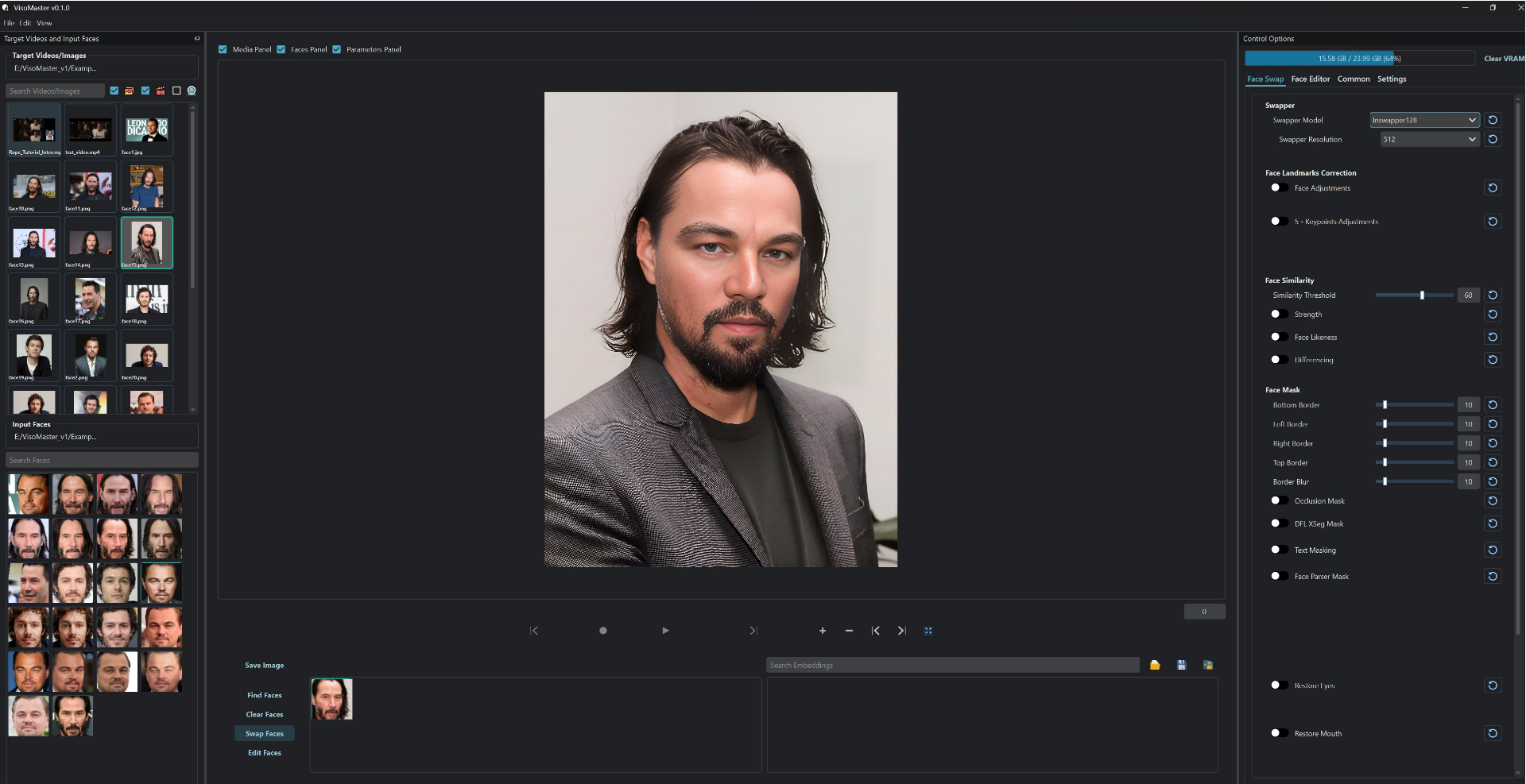

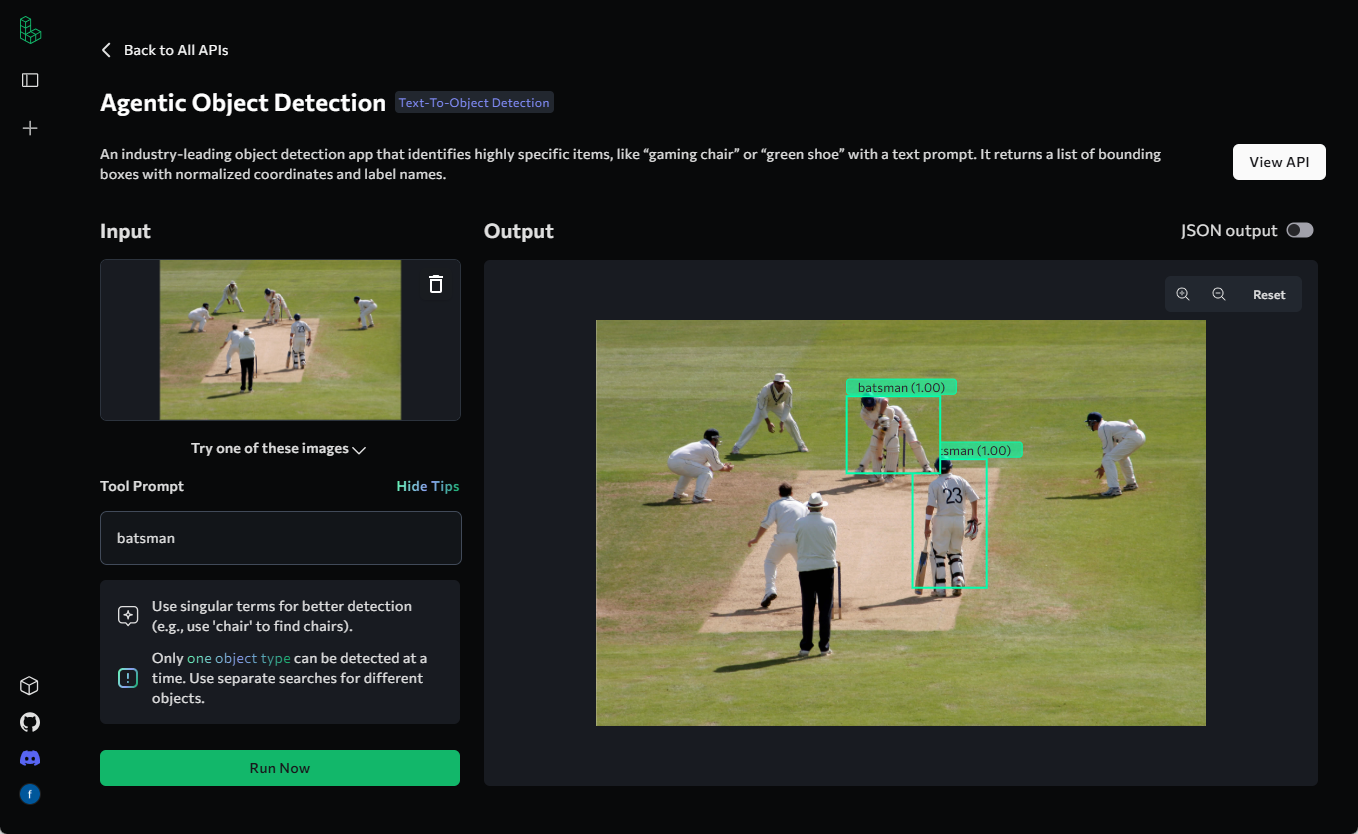

Examples of application can be found in Agentic Object Detection: A Visual Object Detection Tool without Annotation and Training

Function List

- Automatic code generation: Generate visual AI code for an image or video based on a task description entered by the user.

- Object detection and counting: Support for detecting specific objects in an image or video and counting them, e.g. headcount.

- video frame analysis: Extract frames from video and perform object tracking or segmentation.

- Visualization tools: Provides image/video visualization of bounding box overlays and split masks.

- Multi-model support: Integrate a variety of open source visual models that users can switch between according to their needs.

- Customized tool extensions: Allows users to add new tools or models to meet specific task requirements.

- Deployment support: Generate code to support rapid prototyping and deployment in production environments.

Using Help

How to install and use

Vision Agent is a Python based library, users need to get the source code via GitHub and install it in their local environment. Here is the detailed installation and usage procedure:

Installation process

- environmental preparation

- Make sure you have Python 3.8 or later installed on your computer.

- Install Git in order to clone repositories from GitHub.

- Optional: It is recommended to use a virtual environment (e.g.

venvmaybeconda) to isolate dependencies.

- clone warehouse

Open a terminal and run the following command to get the Vision Agent source code:git clone https://github.com/landing-ai/vision-agent.git cd vision-agent

- Installation of dependencies

In the repository directory, install the required dependencies:pip install -r requirements.txtIf you need to use a specific model (e.g. Anthropic or OpenAI), additional configuration of the API key is required, see later.

- Verify Installation

Run the following command to check if the installation was successful:python -c "import vision_agent; print(vision_agent.__version__)"If a version number is returned (e.g. 0.2), the installation is complete.

Using the main functions

The core function of the Vision Agent is to generate code and perform visual tasks from prompts. The following is a detailed step-by-step procedure for the main functions:

1. Generate visual AI code

- procedure::

- Import the Vision Agent module:

from vision_agent.agent import VisionAgentCoderV2 agent = VisionAgentCoderV2(verbose=True) - Prepare the task description and media files. For example, count the number of people in an image:

code_context = agent.generate_code([ {"role": "user", "content": "Count the number of people in this image", "media": ["people.png"]} ]) - Save the generated code:

with open("count_people.py", "w") as f: f.write(code_context.code)

- Import the Vision Agent module:

- Functional Description: After entering an image path and a task description, the Vision Agent automatically generates Python code that contains the object detection and counting logic. The generated code relies on built-in tools such as

countgd_object_detectionThe

2. Object detection and visualization

- procedure::

- Load the image and run the detection:

import vision_agent.tools as T image = T.load_image("people.png") dets = T.countgd_object_detection("person", image) - Overlay the bounding box and save the result:

viz = T.overlay_bounding_boxes(image, dets) T.save_image(viz, "people_detected.png") - Visualize results (optional):

import matplotlib.pyplot as plt plt.imshow(viz) plt.show()

- Load the image and run the detection:

- Functional Description: This function detects a specified object (e.g., "person") in an image and draws a bounding box on the image, which is suitable for quality checking, monitoring, and other scenarios.

3. Video frame analysis and tracking

- procedure::

- Extract video frames:

frames_and_ts = T.extract_frames_and_timestamps("people.mp4") frames = [f["frame"] for f in frames_and_ts] - Run an object trace:

tracks = T.countgd_sam2_video_tracking("person", frames) - Save the visualization video:

viz = T.overlay_segmentation_masks(frames, tracks) T.save_video(viz, "people_tracked.mp4")

- Extract video frames:

- Functional Description: extracts frames from video, detects and tracks objects, and finally generates a visualization of the video with a segmentation mask, suitable for dynamic scene analysis.

4. Configuring other models

- procedure::

- Modify the configuration file to use other models (e.g. Anthropic):

cp vision_agent/configs/anthropic_config.py vision_agent/configs/config.py - Set the API key:

Add the key to the environment variable:export ANTHROPIC_API_KEY="your_key_here" - Rerun the code generation task.

- Modify the configuration file to use other models (e.g. Anthropic):

- Functional Description: Vision Agent supports multiple models by default, and users can switch them according to their needs to enhance the effectiveness of their tasks.

Example of an operation process: Calculating the percentage of coffee bean filling

Suppose you have an image of a jar with coffee beans and want to calculate the fill percentage:

- Write a task description and run it:

agent = VisionAgentCoderV2() code_context = agent.generate_code([ {"role": "user", "content": "What percentage of the jar is filled with coffee beans?", "media": ["jar.jpg"]} ]) - Execute the generated code:

The generated code may be:from vision_agent.tools import load_image, florence2_sam2_image image = load_image("jar.jpg") jar_segments = florence2_sam2_image("jar", image) beans_segments = florence2_sam2_image("coffee beans", image) jar_area = sum(segment["mask"].sum() for segment in jar_segments) beans_area = sum(segment["mask"].sum() for segment in beans_segments) percentage = (beans_area / jar_area) * 100 if jar_area else 0 print(f"Filled percentage: {percentage:.2f}%") - Run the code to get the result.

caveat

- Make sure the input image or video is in the correct format (e.g. PNG, MP4).

- If you encounter dependency issues, try updating the

pip install --upgrade vision-agentThe - Community Support: For help, join LandingAI's Discord community for advice.

© Copyright notes

Article copyright AI Sharing Circle All, please do not reproduce without permission.

Related posts

No comments...