General Introduction

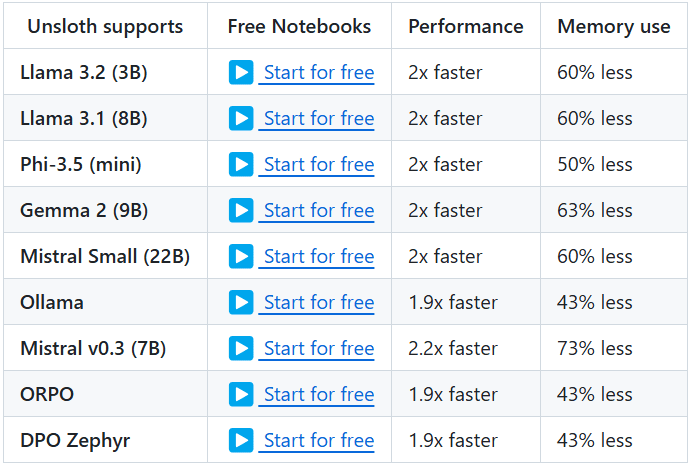

Unsloth is an open-source project that provides efficient tools for fine-tuning and training large language models (LLMs). It supports a wide range of well-known models, including Llama, Mistral, Phi, and Gemma. Its key features are significantly lower memory usage and faster training, so users can fine-tune and train their models in less time. Unsloth also provides extensive documentation and tutorials to help users get started quickly and make full use of its features.

Feature List

- Efficient fine-tuning: Supports models such as Llama, Mistral, Phi, and Gemma, with 2-5x faster fine-tuning and 50-80% less memory usage.

- Free notebooks: Free-to-use notebooks are provided; users add a dataset, run all the cells, and get a fine-tuned model.

- Multiple export formats: Supports exporting fine-tuned models to GGUF, Ollama, or vLLM, or uploading them to Hugging Face.

- Dynamic quantization: Supports dynamic 4-bit quantization, which improves model accuracy over standard 4-bit quantization while using less than 10% more memory.

- Long context support: Supports fine-tuning with context lengths of up to 89K tokens for Llama 3.3 (70B) and 342K tokens for Llama 3.1 (8B) on an 80 GB GPU.

- Vision model support: Supports vision models such as Llama 3.2 Vision (11B), Qwen 2.5 VL (7B), and Pixtral (12B).

- Inference optimization: Provides optimizations that significantly improve inference speed.

Usage Guide

Installation

- Install dependencies: Make sure Python 3.8 or later is installed, then install the following dependencies:

```bash
pip install torch transformers datasets
```

- Clone the repository: Clone the Unsloth repository with Git:

```bash
git clone https://github.com/unslothai/unsloth.git
cd unsloth
```

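- Install Unsloth: From the repository root, run the following command to install Unsloth in editable mode (alternatively, `pip install unsloth` installs the released package from PyPI):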

```bash
pip install -e .
```
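Before moving on, it can help to confirm that the prerequisites are in place. The following is a minimal sketch, not part of Unsloth; the helper name `check_environment` is hypothetical. It only checks that Python meets the 3.8 minimum stated above and that each package can be located on the import path. It does not import the packages, check their versions, or test for a GPU.

```python
import importlib.util
import sys

# Packages named in the installation steps, plus Unsloth itself
REQUIRED_PACKAGES = ("torch", "transformers", "datasets", "unsloth")


def check_environment(packages=REQUIRED_PACKAGES, min_python=(3, 8)):
    """Return a dict mapping each prerequisite to True (found) or False.

    find_spec locates a top-level module without importing it, so this is
    cheap, but it cannot detect broken installs or version mismatches.
    """
    report = {"python_ok": sys.version_info[:2] >= min_python}
    for name in packages:
        report[name] = importlib.util.find_spec(name) is not None
    return report


if __name__ == "__main__":
    for item, ok in check_environment().items():
        print(f"{item:>12}: {'OK' if ok else 'MISSING'}")
```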

Tutorials

- Loading Models: Load the pre-trained model in a Python script:

```python
from transformers import AutoModelForCausalLM, AutoTokenizer

# Hugging Face repo ID; Unsloth publishes its models under the "unsloth" organization
model_name = "unsloth/Llama-3.3-70B-Instruct"
model = AutoModelForCausalLM.from_pretrained(model_name)
tokenizer = AutoTokenizer.from_pretrained(model_name)
```

- Fine-tune the model: Use the notebooks provided by Unsloth for model fine-tuning. Here is a simple example:

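```python
from transformers import DataCollatorForLanguageModeling, Trainer, TrainingArguments

training_args = TrainingArguments(
    output_dir="./results",
    num_train_epochs=3,
    per_device_train_batch_size=4,
    save_steps=10_000,
    save_total_limit=2,
)

# Llama tokenizers may lack a padding token; reuse EOS for padding if so
if tokenizer.pad_token is None:
    tokenizer.pad_token = tokenizer.eos_token

# train_dataset and eval_dataset are tokenized datasets prepared beforehand
trainer = Trainer(
    model=model,
    args=training_args,
    train_dataset=train_dataset,
    eval_dataset=eval_dataset,
    # Pads each batch and builds causal-LM labels from input_ids (mlm=False)
    data_collator=DataCollatorForLanguageModeling(tokenizer, mlm=False),
)
trainer.train()
```

- Export the model: After fine-tuning is complete, the model can be exported to a variety of formats. The simplest option saves the model and tokenizer in Hugging Face format: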

```python
# Save the fine-tuned weights and the tokenizer to the same directory
model.save_pretrained("./finetuned_model")
tokenizer.save_pretrained("./finetuned_model")
```
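The fine-tuning step assumes that `train_dataset` and `eval_dataset` already exist. A common way to build them for instruction tuning is to render each instruction/response pair into a single training string. The sketch below assumes an Alpaca-style template and uses hypothetical helper names (`format_example`, `split_records`); it is an illustration, not Unsloth's own data pipeline, so use whichever prompt format your base model expects.

```python
import random

# Alpaca-style template: an assumption for illustration. Use the prompt
# format expected by the model you are fine-tuning.
PROMPT_TEMPLATE = "### Instruction:\n{instruction}\n\n### Response:\n{response}"


def format_example(example, eos_token="</s>"):
    """Render one instruction/response record as a single training string.

    Appending an end-of-sequence token teaches the model where a response
    stops. "</s>" is a placeholder; pass tokenizer.eos_token in practice.
    """
    text = PROMPT_TEMPLATE.format(
        instruction=example["instruction"], response=example["response"]
    )
    return {"text": text + eos_token}


def split_records(records, eval_fraction=0.1, seed=0):
    """Format records, shuffle them reproducibly, and split into train/eval."""
    rows = [format_example(record) for record in records]
    random.Random(seed).shuffle(rows)
    n_eval = max(1, int(len(rows) * eval_fraction))
    return rows[n_eval:], rows[:n_eval]


records = [
    {"instruction": "Translate 'bonjour' to English.", "response": "Hello."},
    {"instruction": "What is 2 + 2?", "response": "4"},
    {"instruction": "Name a primary color.", "response": "Red."},
]
train_rows, eval_rows = split_records(records, eval_fraction=0.34)
print(train_rows[0]["text"])
```

The `Trainer` in the fine-tuning step expects tokenized inputs, so the `text` field still has to be tokenized, for example with the `datasets` library: `Dataset.from_list(train_rows).map(lambda row: tokenizer(row["text"]), remove_columns=["text"])`.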

Feature Details

- Dynamic quantization: Unsloth supports dynamic 4-bit quantization, which improves model accuracy compared with standard 4-bit quantization while using less than 10% more memory. It is not set in the training arguments. Instead, it comes from the pre-quantized dynamic 4-bit checkpoints that Unsloth publishes, which are loaded with 4-bit loading enabled:

```python
from unsloth import FastLanguageModel

# Example repo ID (assumed); dynamic 4-bit checkpoints use an "unsloth-bnb-4bit" suffix
model, tokenizer = FastLanguageModel.from_pretrained(
    model_name="unsloth/Llama-3.2-1B-Instruct-unsloth-bnb-4bit",
    max_seq_length=2048,
    load_in_4bit=True,
)
```

- Long context support: Unsloth supports fine-tuning Llama 3.3 (70B) with context lengths of up to 89K tokens and Llama 3.1 (8B) with up to 342K tokens on an 80 GB GPU, which makes the models better at handling long text. Users specify the maximum sequence length when loading the model:

```python
from unsloth import FastLanguageModel

model, tokenizer = FastLanguageModel.from_pretrained(model_name, max_seq_length=89000, load_in_4bit=True)
```

- Vision model support: Unsloth also supports vision models such as Llama 3.2 Vision (11B), Qwen 2.5 VL (7B), and Pixtral (12B). These models take images as input and generate text, so they suit image understanding tasks such as captioning and visual question answering:

```python
from unsloth import FastVisionModel

model_name = "unsloth/Llama-3.2-11B-Vision-Instruct"
model, tokenizer = FastVisionModel.from_pretrained(model_name, load_in_4bit=True)
```
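The memory claims for 4-bit and dynamic 4-bit quantization can be sanity-checked with simple arithmetic: weight memory is roughly the parameter count times the bits per parameter, divided by 8. The sketch below is only an illustration, not Unsloth's quantization algorithm. It ignores activations, optimizer state, and the per-block scale factors that real 4-bit formats store. The 3% share of weights kept at 16-bit precision is a hypothetical value, chosen to show how the "less than 10% more memory" bound works out.

```python
# Back-of-the-envelope weight memory: parameters x bits per parameter / 8.
# Real usage is higher: activations, optimizer state, and quantization
# scale factors are all left out of this estimate.

GB = 10**9  # decimal gigabytes, as GPU memory is usually quoted


def weight_memory_gb(n_params, bits):
    """Memory needed to hold n_params weights at the given bit width, in GB."""
    return n_params * bits / 8 / GB


def mixed_memory_gb(n_params, high_fraction, low_bits=4, high_bits=16):
    """Memory when a fraction of weights stays at high precision, in GB.

    high_fraction is a hypothetical share of parameters left unquantized,
    used only to illustrate how selective quantization affects memory.
    """
    high = n_params * high_fraction
    low = n_params - high
    return (low * low_bits + high * high_bits) / 8 / GB


for name, n in [("8B", 8e9), ("70B", 70e9)]:
    print(f"{name}: 16-bit {weight_memory_gb(n, 16):.0f} GB, "
          f"4-bit {weight_memory_gb(n, 4):.0f} GB")

# Mixing 4-bit and 16-bit weights costs (1 + 3 * high_fraction) times the
# pure 4-bit footprint, so a 3% unquantized share adds about 9%.
base = weight_memory_gb(70e9, 4)
mixed = mixed_memory_gb(70e9, high_fraction=0.03)
print(f"70B with 3% kept at 16-bit: {mixed:.2f} GB (+{mixed / base - 1:.0%})")
```

Under these simplifying assumptions, staying below 10% extra memory means keeping at most about 3.3% of the weights unquantized. The roughly 35 GB estimate for 70B weights at 4-bit also suggests why a 70B model can fit on a single 80 GB GPU with memory left over for long contexts.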