SkyReels-V1: An Open Source Video Model for Generating High Quality Human Action Video

General Introduction

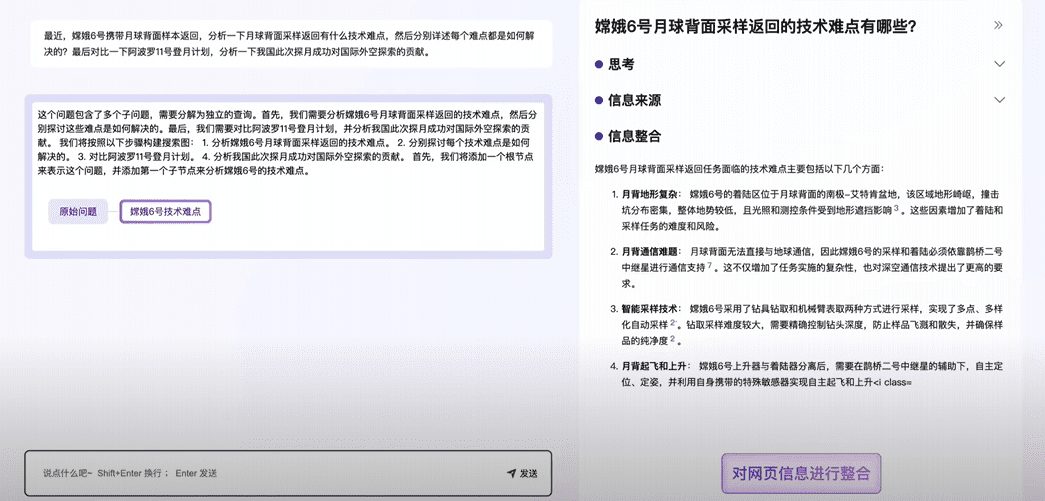

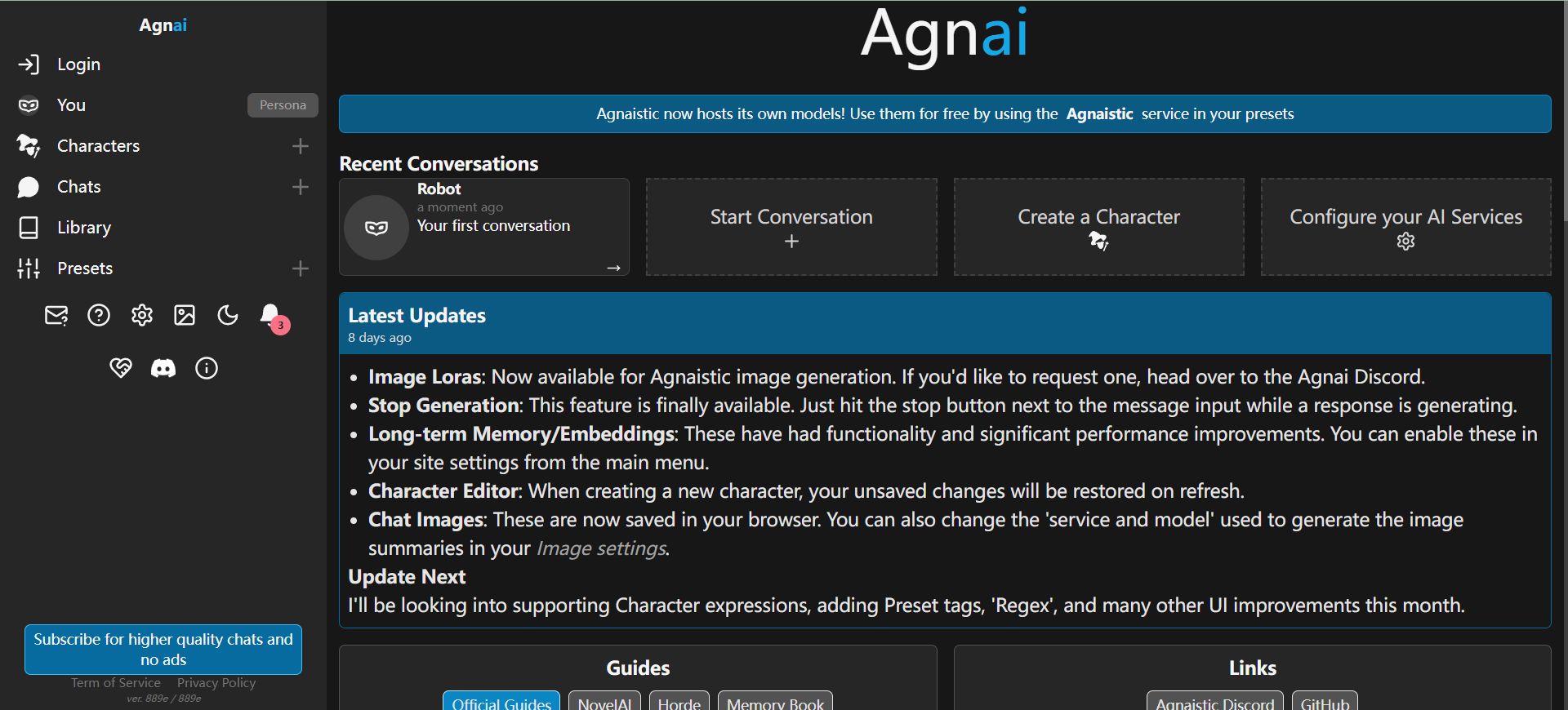

SkyReels-V1 is an open-source project from the SkyworkAI team focused on generating high-quality, human-centric video. It is built on HunyuanVideo and fine-tuned on tens of millions of high-quality film and TV clips, resulting in what the team calls the world's first foundation model for human action video. It supports both text-to-video (T2V) and image-to-video (I2V) generation and produces realistic animation with 33 facial expressions and more than 400 natural movements at cinematic image quality. Being open source sets SkyReels-V1 apart from other tools in its class and makes it suitable for creators, educators, and AI researchers working on short skits, animations, or technical explorations. The project is hosted on GitHub and provides code, model weights, and documentation to help users get started quickly.

Function List

- Text to video (T2V): Generate dynamic videos from user-entered text descriptions, such as "A cat wearing sunglasses works as a lifeguard at the pool".

- Image to video (I2V): Convert still images into motion video, preserving original image features and adding natural movement.

- Advanced Facial Animation: Supports 33 subtle expressions and more than 400 combinations of movements, accurately rendering human emotions and body language.

- Cinema-quality picture: Trained on high-quality film and television data to deliver professional composition, lighting, and camera work.

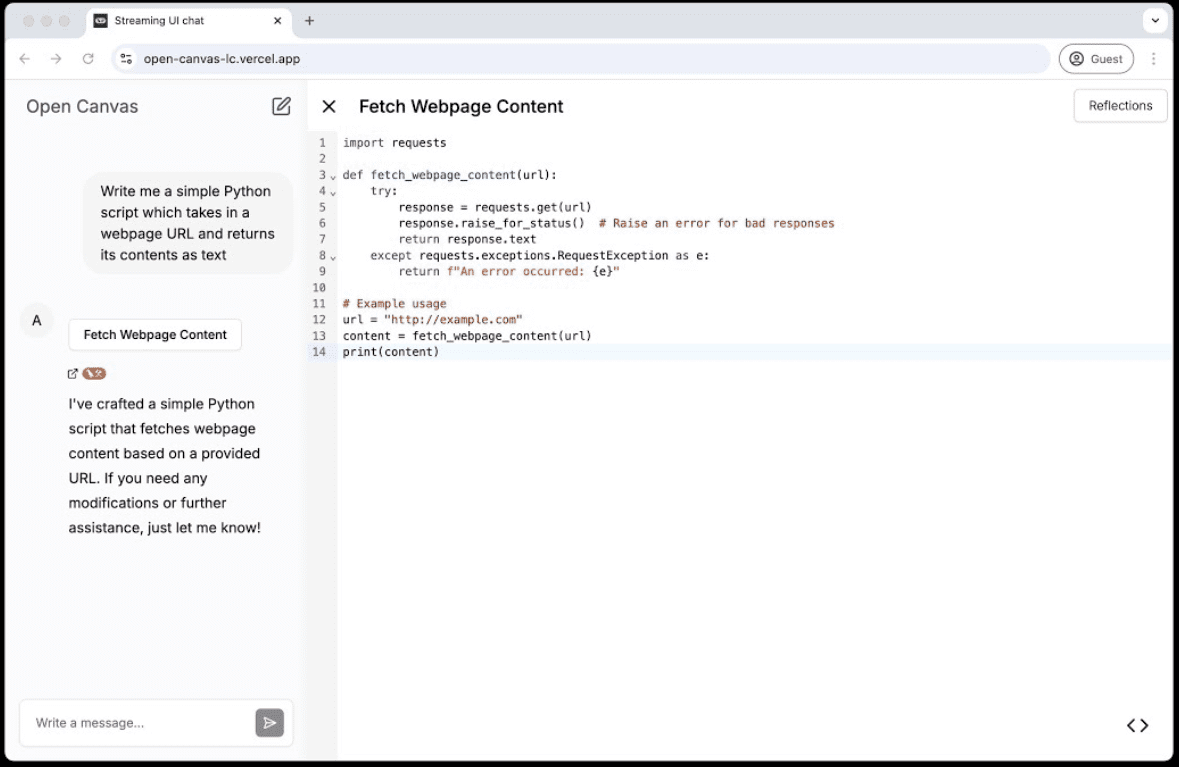

- Efficient inference framework: Fast video generation through SkyReelsInfer, which supports multi-GPU parallel computing to speed up generation.

- Flexible parameter adjustment: Users can set parameters such as video resolution (e.g., 544x960), number of frames (e.g., 97 frames), and guidance scale.

- Open-source model weights: Pre-trained models are available for direct download and further development.

How to Use

Installation process

SkyReels-V1 is a Python-based tool with specific hardware and software requirements. The detailed installation and usage steps are as follows:

Environment requirements

- Hardware: A computer with an NVIDIA GPU, such as an RTX 4090 or A800, is recommended to ensure CUDA support.

- Operating system: Windows, Linux, or macOS (macOS may require additional configuration).

- Software dependencies: Python 3.10+, CUDA 12.2, PyTorch, Git.
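Before installing, a quick self-check can confirm the basics listed above. The sketch below uses only the Python standard library, plus PyTorch if it is already installed. The function names are hypothetical helpers, not part of SkyReels-V1, and finding `nvidia-smi` on the PATH is treated only as a rough sign that an NVIDIA driver is present.

```python
import platform
import shutil
import sys


def python_ok(version=None, minimum=(3, 10)):
    """Return True if the given (or the running) Python version meets the minimum."""
    version = tuple(version or sys.version_info[:2])
    return version[:2] >= minimum


def environment_report():
    """Collect a few quick facts about the local environment."""
    report = {
        "python": platform.python_version(),
        "python_ok": python_ok(),
        "os": platform.system(),
        # nvidia-smi ships with the NVIDIA driver; its presence suggests a usable GPU.
        "nvidia_smi": shutil.which("nvidia-smi") is not None,
        "git": shutil.which("git") is not None,
    }
    try:
        import torch  # only importable once PyTorch has been installed

        report["torch_cuda"] = torch.cuda.is_available()
        report["torch_cuda_version"] = torch.version.cuda
    except ImportError:
        report["torch_cuda"] = None  # PyTorch not installed yet
    return report


if __name__ == "__main__":
    for key, value in environment_report().items():
        print(f"{key}: {value}")
```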

Installation steps

- Clone the repository

Open a terminal and enter the following command to download the SkyReels-V1 project code:

git clone https://github.com/SkyworkAI/SkyReels-V1.git

cd SkyReels-V1

This will create a project folder locally.

- Create a virtual environment (optional but recommended)

To avoid dependency conflicts, a virtual environment is recommended:

conda create -n skyreels python=3.10

conda activate skyreels

- Install dependencies

The project provides a requirements.txt file; run the following command to install the required libraries:

pip install -r requirements.txt

Make sure your network connection is working; the installation may take a few minutes.

- Download model weights

Model weights for SkyReels-V1 are hosted on Hugging Face; you can download them manually or specify the model path directly in the command. On the Hugging Face model page, download the SkyReels-V1-Hunyuan-T2V folder (and SkyReels-V1-Hunyuan-I2V for image-to-video) and place it in the project directory (e.g. /path/to/SkyReels-V1/models).

- Verify the installation

Run the sample command to test that the environment works:

python3 video_generate.py --model_id ./models/SkyReels-V1-Hunyuan-T2V --prompt "FPS-24, A dog running in a park"

If no errors are reported and a video is generated, the installation is successful.
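The manual download step can also be scripted. This is a minimal sketch assuming the `huggingface_hub` package is installed and that the checkpoints live under the repository IDs `Skywork/SkyReels-V1-Hunyuan-T2V` and `Skywork/SkyReels-V1-Hunyuan-I2V`; confirm both on the Hugging Face model pages. `local_model_dir` and `download_weights` are hypothetical names chosen for this example.

```python
from pathlib import Path

# Assumed Hugging Face repository IDs for the two checkpoints (verify on the model pages).
MODEL_REPOS = {
    "t2v": "Skywork/SkyReels-V1-Hunyuan-T2V",
    "i2v": "Skywork/SkyReels-V1-Hunyuan-I2V",
}


def local_model_dir(project_root, task="t2v"):
    """Folder under <project_root>/models where the checkpoint for `task` is stored."""
    repo_id = MODEL_REPOS[task]
    return Path(project_root) / "models" / repo_id.split("/")[-1]


def download_weights(project_root, task="t2v"):
    """Download one checkpoint with huggingface_hub (pip install huggingface_hub)."""
    # Imported lazily so the path helper above works without the optional dependency.
    from huggingface_hub import snapshot_download

    target = local_model_dir(project_root, task)
    target.mkdir(parents=True, exist_ok=True)
    snapshot_download(repo_id=MODEL_REPOS[task], local_dir=str(target))
    return target
```

Calling `download_weights("/path/to/SkyReels-V1")` would place the T2V checkpoint in `/path/to/SkyReels-V1/models/SkyReels-V1-Hunyuan-T2V`, the folder the verification command points at when run from the project root. The download is several gigabytes, so it is deliberately not triggered on import.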

Using the main functions

Text to video (T2V)

- Prepare the prompt

Write a prompt that describes the video content; it must start with "FPS-24", for example:

FPS-24, A cat wearing sunglasses and working as a lifeguard at a pool

- Run the generation command

Enter the following command in the terminal:

python3 video_generate.py \
    --model_id /path/to/SkyReels-V1-Hunyuan-T2V \
    --guidance_scale 6.0 \
    --height 544 \
    --width 960 \
    --num_frames 97 \
    --prompt "FPS-24, A cat wearing sunglasses and working as a lifeguard at a pool" \
    --embedded_guidance_scale 1.0 \
    --quant --offload --high_cpu_memory \
    --gpu_num 1

--guidance_scale: Controls how strongly the video follows the text prompt; 6.0 is recommended.

--height and --width: Set the video resolution; the default is 544x960.

--num_frames: Number of frames to generate; 97 frames is about 4 seconds of video at 24 FPS.

--quant, --offload: Reduce memory usage on lower-end hardware.
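When running many generations, it can help to assemble the command programmatically. The sketch below builds the same argument list as the commands in this guide, using only the flags shown here, and prepends the required "FPS-24, " prefix if it is missing. `build_command` is a hypothetical helper; it assumes it is run from the SkyReels-V1 project root, where `video_generate.py` lives.

```python
import shlex


def build_command(prompt, model_id, task_type="t2v", image=None, height=544, width=960,
                  num_frames=97, guidance_scale=6.0, embedded_guidance_scale=1.0,
                  low_memory=False, gpu_num=1):
    """Return the video_generate.py argument list for one generation run."""
    # This guide requires prompts to start with "FPS-24".
    if not prompt.startswith("FPS-24"):
        prompt = f"FPS-24, {prompt}"
    if task_type == "i2v" and image is None:
        raise ValueError("task_type 'i2v' needs an input image")

    cmd = ["python3", "video_generate.py", "--model_id", str(model_id)]
    if task_type == "i2v":
        # The T2V example in this guide omits --task_type, so it is only added for I2V.
        cmd += ["--task_type", "i2v"]
    cmd += [
        "--guidance_scale", str(guidance_scale),
        "--height", str(height),
        "--width", str(width),
        "--num_frames", str(num_frames),
        "--prompt", prompt,
        "--embedded_guidance_scale", str(embedded_guidance_scale),
    ]
    if task_type == "i2v":
        cmd += ["--image", str(image)]
    if low_memory:
        cmd += ["--quant", "--offload", "--high_cpu_memory"]
    cmd += ["--gpu_num", str(gpu_num)]
    return cmd


if __name__ == "__main__":
    cmd = build_command("A cat wearing sunglasses and working as a lifeguard at a pool",
                        "/path/to/SkyReels-V1-Hunyuan-T2V", low_memory=True)
    print(shlex.join(cmd))  # copy-pasteable shell form of the same command
```

Passing the returned list to `subprocess.run(cmd, check=True)` instead of a shell string avoids quoting problems with prompts that contain commas or quotes.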

- Output

The generated video is saved in the results/skyreels folder, with a filename built from the prompt and the seed value, e.g. FPS-24_A_cat_wearing_sunglasses_42_0.mp4.
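Because the exact filename depends on the prompt and seed, a script that post-processes results may find it easier to pick the newest file. A minimal sketch, assuming outputs are `.mp4` files written to `results/skyreels` relative to the project root; `latest_video` is a hypothetical helper.

```python
from pathlib import Path


def latest_video(results_dir="results/skyreels"):
    """Most recently modified .mp4 in results_dir, or None if there is none."""
    folder = Path(results_dir)
    if not folder.is_dir():
        return None
    videos = [p for p in folder.glob("*.mp4") if p.is_file()]
    if not videos:
        return None
    # Pick the file with the latest modification time.
    return max(videos, key=lambda p: p.stat().st_mtime)


if __name__ == "__main__":
    newest = latest_video()
    print(newest if newest else "no videos found in results/skyreels yet")
```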

Image to video (I2V)

- Prepare the image

Prepare a clear still image (e.g. PNG or JPG), ideally with a resolution close to 544x960.

- Run the command

Add the --task_type i2v and --image parameters, for example:

python3 video_generate.py \
    --model_id /path/to/SkyReels-V1-Hunyuan-I2V \
    --task_type i2v \
    --guidance_scale 6.0 \
    --height 544 \
    --width 960 \
    --num_frames 97 \
    --prompt "FPS-24, A person dancing" \
    --image ./input/cat_photo.png \
    --embedded_guidance_scale 1.0

- View Results

The output video animates the content of the input image and is also saved in the results/skyreels folder.
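For the image preparation step, one way to bring an arbitrary photo close to the recommended size is to center-crop it to the target aspect ratio and then resize it. This sketch assumes a landscape target of 960 pixels wide by 544 high, matching `--width 960 --height 544` in the command above. Center cropping is a choice made for this example, not something SkyReels-V1 requires, and Pillow is needed only for `prepare_image`; both function names are hypothetical.

```python
def center_crop_box(src_w, src_h, target_w=960, target_h=544):
    """Largest centered box with the target aspect ratio, as (left, top, right, bottom)."""
    target_ratio = target_w / target_h
    if src_w / src_h > target_ratio:
        # Source is wider than the target: trim the left and right edges.
        new_w = round(src_h * target_ratio)
        left = (src_w - new_w) // 2
        return (left, 0, left + new_w, src_h)
    # Source is taller than (or equal to) the target: trim the top and bottom edges.
    new_h = round(src_w / target_ratio)
    top = (src_h - new_h) // 2
    return (0, top, src_w, top + new_h)


def prepare_image(src_path, dst_path, target_w=960, target_h=544):
    """Crop and resize an image with Pillow (pip install pillow)."""
    from PIL import Image  # optional dependency, imported lazily

    with Image.open(src_path) as img:
        box = center_crop_box(img.width, img.height, target_w, target_h)
        resized = img.crop(box).resize((target_w, target_h), Image.LANCZOS)
        resized.convert("RGB").save(dst_path)  # RGB so the result can be saved as JPG too
```

For a 1920x1080 photo, `center_crop_box(1920, 1080)` trims 7 pixels from each side before the resize, so very little of the frame is lost.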

Adjusting parameters to optimize results

- Frame rate and duration: Modify --num_frames and --fps (default 24); for example, 240 frames produce a 10-second video at 24 fps.

- Picture quality: Increase --num_inference_steps (default 30), which improves detail but takes longer.

- Multi-GPU support: Set --gpu_num to the number of available GPUs to accelerate processing.
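Duration is the frame count divided by the frame rate, so 97 frames at 24 FPS is about 4.04 seconds and a 10-second clip needs 240 frames. The hypothetical helpers below do that arithmetic and, for --gpu_num, count the GPUs that `nvidia-smi -L` reports. They do not check any frame-count limits the model itself may impose.

```python
import shutil
import subprocess


def duration_seconds(num_frames, fps=24):
    """Clip length in seconds for a given frame count."""
    return num_frames / fps


def frames_for_duration(seconds, fps=24):
    """Frame count for a clip of the given length, rounded to the nearest frame."""
    return round(seconds * fps)


def available_gpu_count():
    """Number of GPUs listed by `nvidia-smi -L`; 0 if the tool is missing or fails."""
    if shutil.which("nvidia-smi") is None:
        return 0
    try:
        result = subprocess.run(["nvidia-smi", "-L"], capture_output=True, text=True, check=True)
    except (subprocess.CalledProcessError, OSError):
        return 0
    # Each GPU is listed on its own line, e.g. "GPU 0: NVIDIA ... (UUID: ...)".
    return sum(1 for line in result.stdout.splitlines() if line.startswith("GPU "))


if __name__ == "__main__":
    print(f"97 frames -> {duration_seconds(97):.2f} s")  # 4.04 s
    print(f"10 s -> {frames_for_duration(10)} frames")  # 240 frames
    print("--gpu_num", max(available_gpu_count(), 1))
```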

Using the featured functions

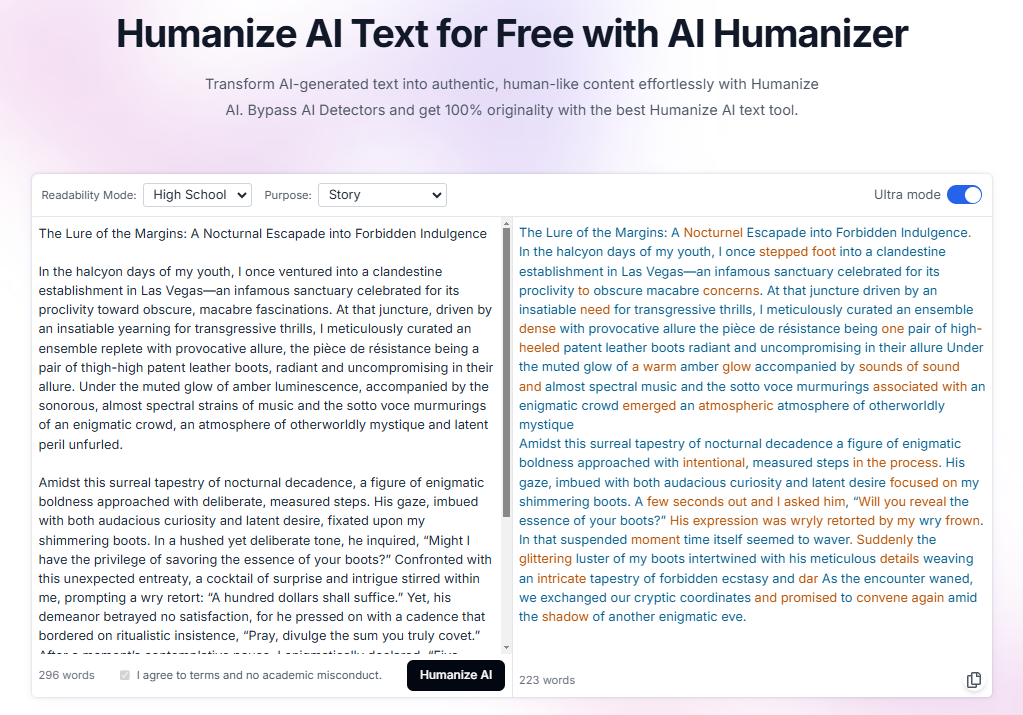

Advanced Facial Animation

The centerpiece of SkyReels-V1 is its facial animation capability. Describe a specific expression in the prompt (e.g., "surprised" or "smiling"), and the model renders the matching expression, drawn from its 33 supported expressions, together with natural movement. For example:

FPS-24, A woman laughing heartily in a cafe

In the generated video, the character shows a realistic smile and subtle body movements, with detail comparable to a live performance.

Movie-quality graphics

With no additional configuration, SkyReels-V1 outputs video with professional lighting and composition by default. Adding a scene description to the prompt (e.g. "under neon lights at night") gives a more cinematic look.
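Since both the expression and the scene are controlled from the prompt, it can be convenient to assemble prompts from parts. `build_prompt` is a hypothetical helper that joins a subject, an action or expression, a setting, and a lighting description behind the "FPS-24, " prefix this guide requires; the split into these four parts is an assumption for illustration, not a format the model defines.

```python
def build_prompt(subject, action=None, setting=None, lighting=None):
    """Join prompt fragments into one "FPS-24, ..." prompt, skipping empty parts."""
    parts = [subject, action, setting, lighting]
    body = " ".join(p.strip() for p in parts if p and p.strip())
    return f"FPS-24, {body}"


if __name__ == "__main__":
    print(build_prompt("A woman", "laughing heartily", "in a cafe", "under neon lights at night"))
```

Here the call prints `FPS-24, A woman laughing heartily in a cafe under neon lights at night`, combining the facial-animation example with the cinematic scene description.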

Notes

- Hardware limitations: If GPU memory is insufficient (e.g., less than 12GB), enable --quant and --offload, or reduce the resolution to 512x320.

- Prompt technique: Concise, specific descriptions work best; avoid vague wording.

- Community support: Visit the GitHub Issues page to submit feedback or browse community discussions.
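The 12GB rule of thumb for limited GPU memory can be applied automatically. The sketch reads each GPU's total memory with `nvidia-smi --query-gpu=memory.total --format=csv,noheader,nounits` (reported in MiB) and suggests --quant and --offload when memory falls below the threshold. The function names and the choice to key off total rather than free memory are assumptions for this example; lowering the resolution remains a manual alternative.

```python
import shutil
import subprocess


def gpu_memory_gb():
    """Total memory of each visible GPU in GiB; empty list if nvidia-smi is unavailable."""
    if shutil.which("nvidia-smi") is None:
        return []
    try:
        result = subprocess.run(
            ["nvidia-smi", "--query-gpu=memory.total", "--format=csv,noheader,nounits"],
            capture_output=True, text=True, check=True,
        )
    except (subprocess.CalledProcessError, OSError):
        return []
    sizes = []
    for line in result.stdout.splitlines():
        line = line.strip()
        if line.isdigit():  # one MiB value per GPU; skip anything else (e.g. "[N/A]")
            sizes.append(int(line) / 1024)
    return sizes


def memory_saving_flags(vram_gb, threshold_gb=12.0):
    """Extra video_generate.py flags suggested for GPUs with less memory than the threshold."""
    if vram_gb < threshold_gb:
        return ["--quant", "--offload"]
    return []


if __name__ == "__main__":
    sizes = gpu_memory_gb()
    smallest = min(sizes) if sizes else 0.0
    print(f"smallest GPU: {smallest:.1f} GiB ->", memory_saving_flags(smallest) or "no extra flags")
```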

With these steps, users can easily get started with SkyReels-V1 and generate high-quality video content, whether it's for short skits or animation experiments.

© Copyright notes

Article copyright: AI Sharing Circle. Please do not reproduce without permission.
