Visual RAG for PDF with Vespa - a Python demo application

Thomas joined Vespa in April 2024 as a Senior Software Engineer. In his previous assignment as an AI consultant, he built a RAG application based on Vespa for a large collection of PDFs.

PDFs are ubiquitous in the corporate world, and being able to search and retrieve information from them is a common use case. The challenge is that many PDFs fall into one or more of the following categories:

- They are scanned documents, so the text cannot be easily extracted and OCR must be used, which adds complexity.

- They contain many charts, tables and diagrams, which are hard to make retrievable even when the text can be extracted.

- They contain many images, which sometimes carry valuable information.

Note that the term ColPali has two meanings:

- A specific model and its accompanying paper. It trains a LoRA adapter on top of a VLM (PaliGemma) to produce joint text and image embeddings (one embedding per image patch) for ColBERT-style late interaction, extending the vision language model.

- A more general approach to visual document retrieval that combines the capabilities of VLMs with efficient late-interaction mechanisms. This approach is not limited to the specific model in the original paper; it can also be applied to other VLMs, such as ColQwen2, as shown in our Vespa notebook.

In this blog post, we'll dive into how we built a live demo application showcasing visual RAG on Vespa using ColPali embeddings. We describe the architecture of the application, the user experience, and the tech stack used to build it.

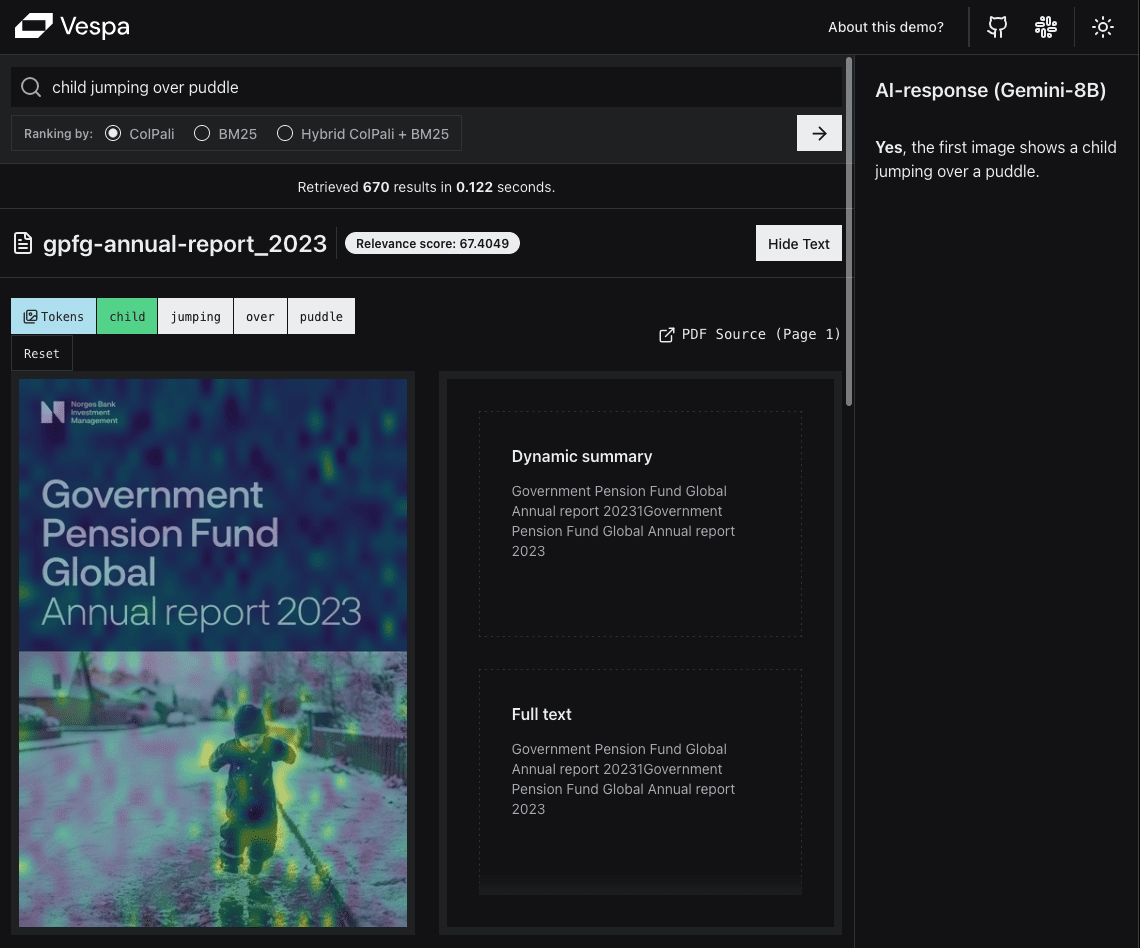

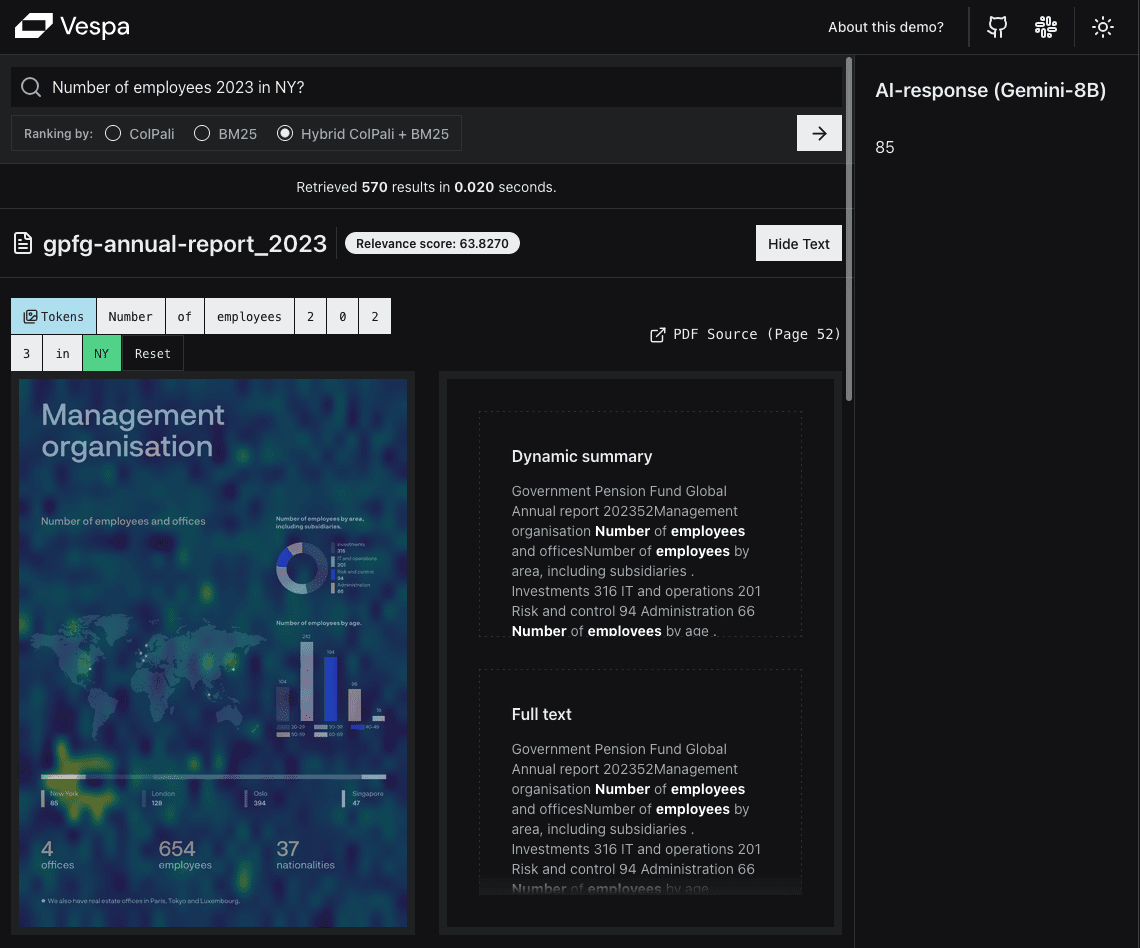

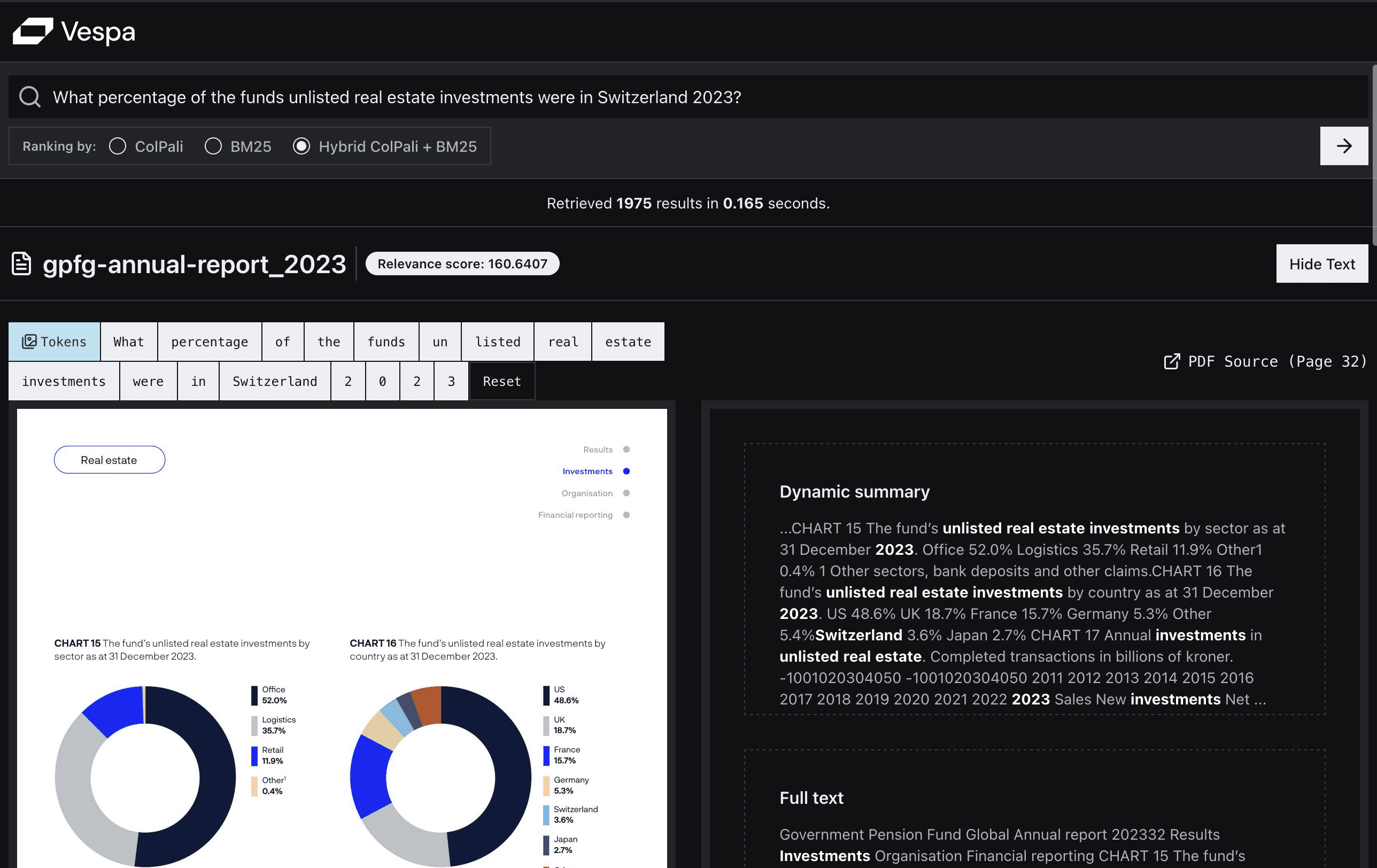

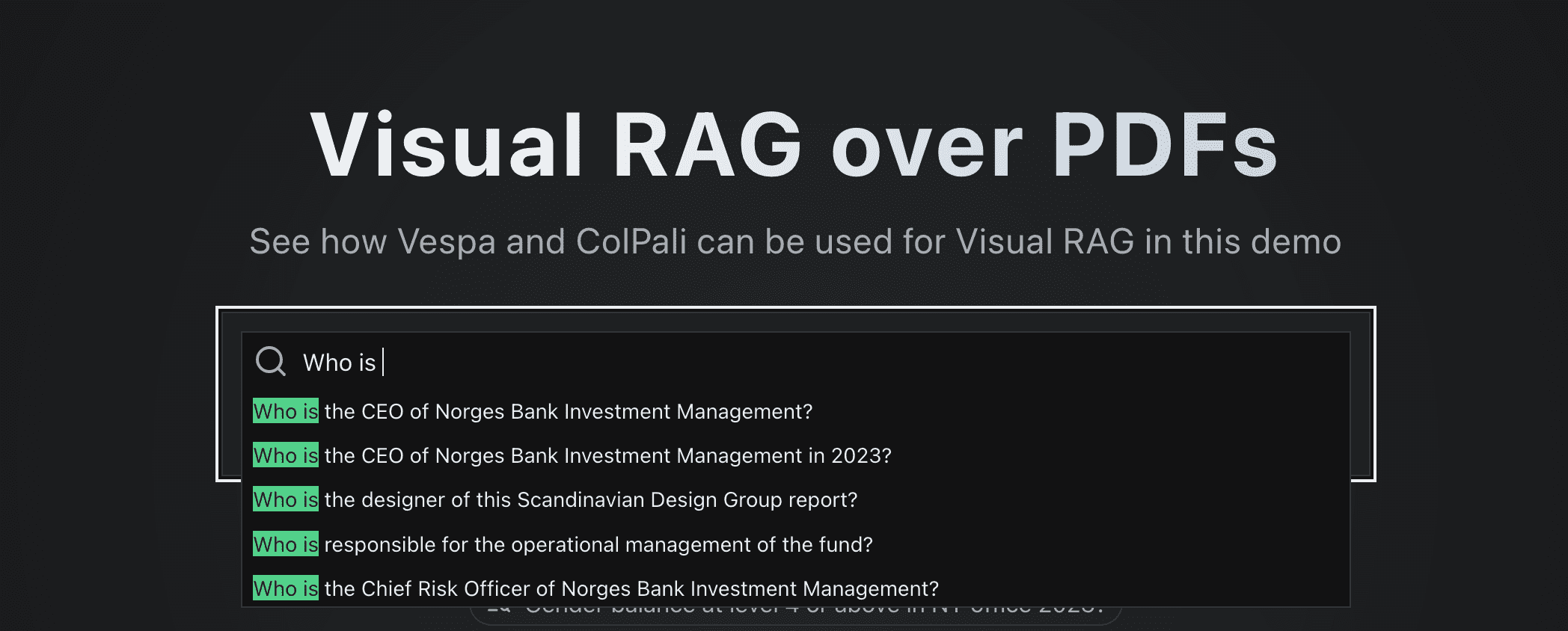

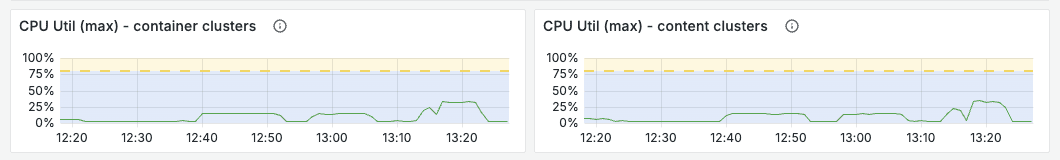

Here are some screenshots of the demo app:

The first example is not a common query, but it demonstrates the power of visual search for certain types of queries. This is a good example of the "What You See Is What You Search (WYSIWYS)" paradigm.

The similarity map highlights the most similar image patches, so users can easily see which parts of the page are most relevant to the query.

The second example, a more common user query, demonstrates the power of ColPali in terms of semantic similarity.

Having experienced first-hand how hard it is to make PDFs searchable, Thomas was particularly interested in the latest advances in vision language models (VLMs).

After reading the previous Vespa blog posts on ColPali and having a series of in-depth discussions with Jo Bergum, he was inspired to propose a project to build a visual RAG application using Vespa.

At Vespa, employees can propose the work they would like to do in each iteration cycle. As long as the proposed work aligns with the company's goals and there are no other urgent priorities, we can get started. For Thomas, coming from the consulting industry, this autonomy was a breath of fresh air.

TL;DR

We built a live demo application showcasing visual RAG over PDFs using ColPali embeddings in Vespa, written entirely in Python with FastHTML.

We also provide code to reproduce it:

- A runnable notebook for setting up your own Vespa application for visual RAG.

- The FastHTML application code, which you can use to set up a web application that interacts with your Vespa application.

Project Objectives

The project has two main objectives:

1. Build a live demo

While developers may be happy with a demo that uses terminal JSON output as the UI, the truth is that most people prefer a web interface.

This would let us showcase visual RAG over PDFs in Vespa with ColPali embeddings, which we believe is relevant across a wide range of domains and use cases, such as legal, finance, construction, academia and healthcare.

We are confident that this will be important going forward, but we had not yet seen any real-world applications demonstrating it.

It would also give us valuable insights into efficiency, scalability and user experience. We were also very curious (and a little nervous) to see whether it would be fast enough to provide a good user experience.

We also wanted to highlight some of Vespa's useful features, for example:

- Phased ranking

- Query suggestions

- Multi-vector MaxSim calculation

2. Create an open source template

We would like to provide a template for others to build their own Visual RAG applications.

The template should be simple enough for others to use, without requiring them to master a large number of programming languages or frameworks.

Creating the dataset

For our demo, we wanted a dataset of PDF documents containing a lot of important information in the form of images, tables and charts. We also needed a dataset large enough to show that uploading all the images directly to the VLM (skipping the retrieval step) is not feasible.

With gemini-1.5-flash-8b, the current maximum number of input images is 3600.

Since there was no public dataset that met our needs, we decided to create our own.

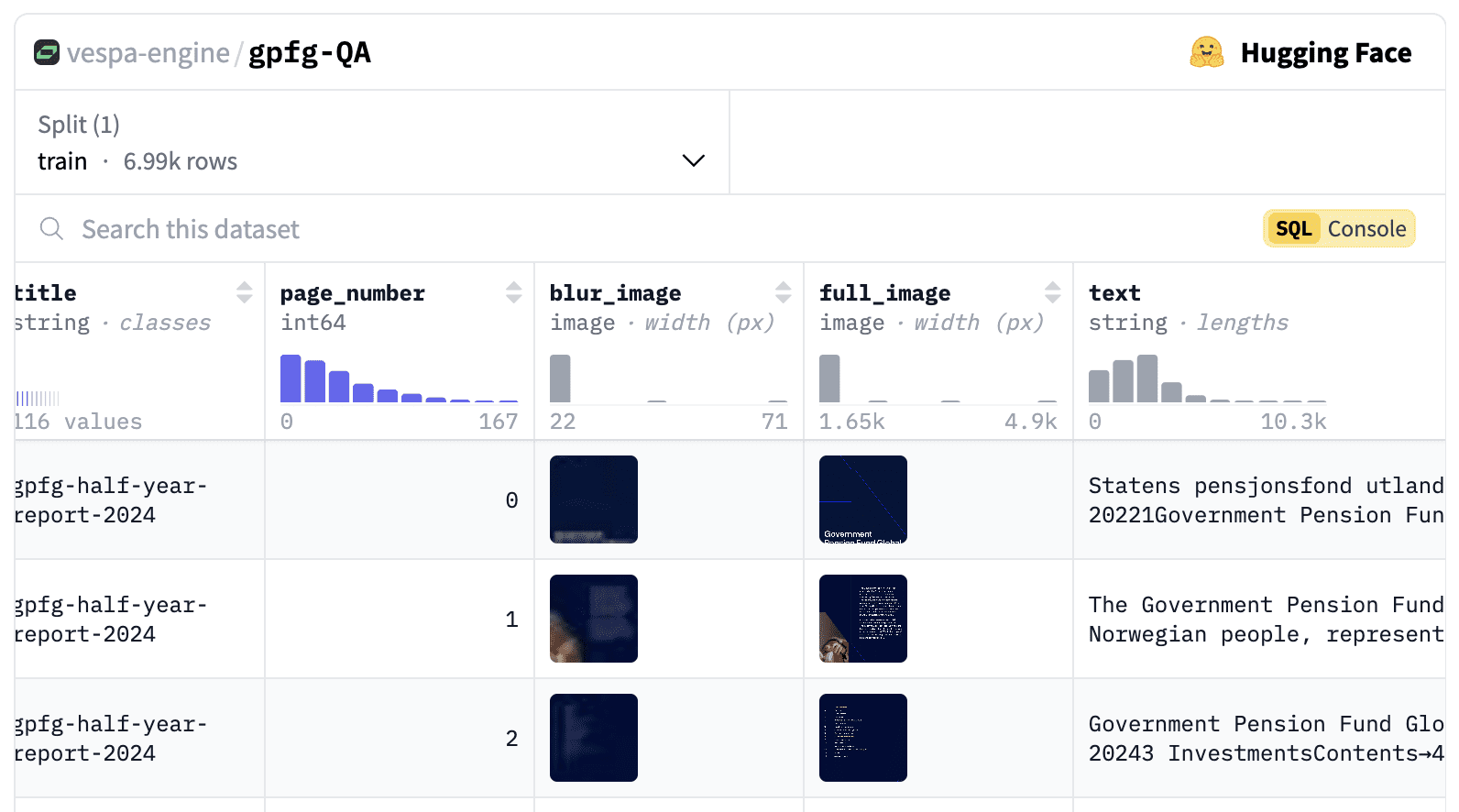

As proud Norwegians, we were pleased to find that the Norwegian Government Pension Fund Global (GPFG, also known as the Oil Fund) has published its annual reports and governance documents on its website since 2000. There is no mention of copyright on the website, and its recent announcement shows that it is the most transparent fund in the world, so we are confident that we can use this data for demonstration purposes.

The dataset consists of 116 different PDF reports from 2000 to 2024, totaling 6992 pages.

The dataset, which includes images, text, URLs, page numbers, generated questions and queries, and ColPali embeddings, is now available here.

Generating synthetic queries and questions

We also generated synthetic queries and questions for each page. These can be used for two purposes:

- Providing query suggestions in the search box as the user types.

- Evaluation.

The prompt we used to generate the questions and queries is adapted from this great blog post by Daniel van Strien:

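You are an investor, stock analyst and financial expert. You will be shown an image of a page from a report published by the Norwegian Government Pension Fund Global (GPFG). The report may be an annual or quarterly report, or a policy report on topics such as responsible investment or risk.

Your task is to generate retrieval queries and questions that could be used to retrieve this document (or ask questions based on it) from a large corpus of documents.

Please generate three different types of retrieval queries and questions.

A retrieval query is a keyword-based query of 2-5 words that you would type into a search engine to find this document.

A question is a natural language question whose answer is contained in the document.

The query types are:

1. Broad topical query: covers the main subject of the document.

2. Specific detail query: covers a specific detail or aspect of the document.

3. Visual element query: covers a visual element of the document, such as a chart, graph or image.

Important guidelines:

- Make sure the queries are relevant to retrieval tasks, not just descriptions of the page content.

- Write the questions in a fact-based, natural language style.

- Frame the queries as if someone were searching for this document in a large corpus.

- Make the queries diverse and representative of different search strategies.

Format your response as a JSON object with the following structure:

{

"broad_topical_question": "What was the responsible investment policy in 2019?",

"broad_topical_query": "2019 responsible investment policy",

"specific_detail_question": "What is the share of investments in renewable energy?",

"specific_detail_query": "renewable energy investment share",

"visual_element_question": "What is the trend of the total holding value over time?",

"visual_element_query": "total holding value trend"

}

If there are no relevant visual elements, provide an empty string for the visual element question and query.

Here is the document image to analyze:

Generate the queries based on this image and provide the response in the specified JSON format.

Only return JSON. Do not return any additional explanatory text.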

We used gemini-1.5-flash-8b to generate the questions and queries.

Note:

On the first run, we found that some very long questions were generated, so we added maxOutputTokens=500 to the generation config, which helped a lot.

We also noticed some oddities in the generated questions and queries, such as "string" appearing multiple times in the questions. We would like to validate the generated questions and queries more thoroughly.
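As a starting point for that validation, a post-processing check could look like the minimal sketch below. It assumes the model returns one JSON object per page with the six keys from the prompt; the placeholder-word heuristic and the name validate_generated are our own hypothetical choices, not part of the demo code.

```python
from __future__ import annotations

import json

# The six keys requested in the generation prompt.
EXPECTED_KEYS = (
    "broad_topical_question",
    "broad_topical_query",
    "specific_detail_question",
    "specific_detail_query",
    "visual_element_question",
    "visual_element_query",
)

# Hypothetical heuristic: words suggesting the model echoed a schema placeholder.
PLACEHOLDER_WORDS = {"string"}


def validate_generated(raw: str) -> dict[str, str]:
    """Parse one model response and keep only the fields that pass basic checks.

    Raises ValueError (json.JSONDecodeError is a subclass) if the response is not a JSON object.
    """
    data = json.loads(raw)
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    clean: dict[str, str] = {}
    for key in EXPECTED_KEYS:
        value = data.get(key, "")
        if not isinstance(value, str) or not value.strip():
            continue  # missing, non-string, or empty (allowed for visual elements)
        value = value.strip()
        words = [w.strip("\"'?.,:;") for w in value.lower().split()]
        if any(w in PLACEHOLDER_WORDS for w in words):
            continue  # e.g. "string" leaking into the output
        if key.endswith("_query") and not 2 <= len(words) <= 5:
            continue  # the prompt asks for 2-5 keyword queries
        clean[key] = value
    return clean
```

Pages where many fields get dropped could then be regenerated or inspected by hand.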

Using Python throughout

Our target audience is the growing data science and AI community. This group is likely one of the main reasons Python is ranked as the most popular (and fastest-growing) programming language on GitHub in the State of the Octoverse report.

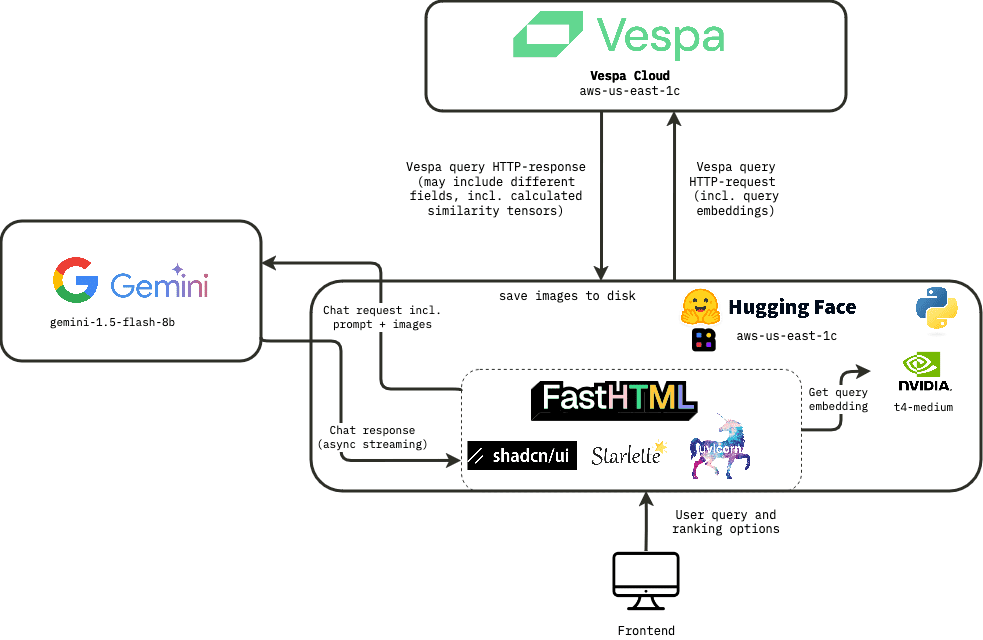

We need Python on the backend for query embedding inference (using the colpali-engine library), at least until Vespa natively supports a ColPali embedder (work in progress, see the GitHub issue). Using another language (and framework) for the frontend would increase the complexity of the project and make it harder for others to reproduce the application.

Therefore, we decided to build the entire application in Python.

Choosing a frontend framework

Streamlit and Gradio

We recognize that it's easy to build simple PoCs (proofs of concept) with Gradio and Streamlit, and we have used them for that purpose in the past. But there were two main reasons why we decided against them:

- We needed a professional-looking UI that could be used in a production environment.

- We needed good performance. Waiting a few seconds, or having the UI freeze intermittently, was not acceptable for the application we wanted to showcase.

As much as we love working out, we are not fans of the "Running" indicator in the top-right corner of Streamlit apps.

FastHTML to the rescue

We are big fans of answer.ai, so when they released FastHTML[3] earlier this year, we were happy to give it a try.

FastHTML is a framework for building modern web applications in pure Python. According to its vision:

FastHTML is a general-purpose, full-stack Web programming system, in the same category as Django, NextJS, and Ruby on Rails. Its vision is to be the easiest way to create rapid prototypes, but also the easiest way to create scalable, powerful, rich applications.

Under the hood, FastHTML uses Starlette and Uvicorn.

It comes with Pico CSS for styling. Leandro, the experienced web developer on our team, wanted to try out Tailwind CSS, so together with our recently discovered shad4fast, we decided to combine FastHTML with shadcn/ui components to make the UI look good.

Pyvespa

Our Vespa Python client, pyvespa, used to be mainly for prototyping Vespa applications. Recently, however, we have worked on supporting more Vespa features through pyvespa. Deploying to production is now supported, and we have added support for advanced configuration of Vespa through the services.xml file. See these examples and the details in the notebook.

As a result, most Vespa applications that do not require custom Java components can be built with pyvespa.

Anecdote:

pyvespa's advanced configuration features were actually inspired by the way FastHTML wraps components as ft components and converts them to HTML tags. In pyvespa, we did something similar with vt components, which are converted to Vespa services.xml tags. Readers interested in this can check out this PR to learn more. Compared with implementing a custom class for every supported tag, this approach saved us a lot of work.

Additionally, building this Vespa application with pyvespa let us validate these features hands-on.
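To illustrate the idea, here is a toy Python version of that approach: one generic tag renderer plus functools.partial for named helpers, instead of one class per tag. This is not pyvespa's code; the names vt, services, container and search are hypothetical stand-ins chosen to mirror the concept.

```python
from functools import partial
from html import escape


def vt(tag: str, *children: str, **attrs: str) -> str:
    """Render a tag with attributes and already-rendered children as XML text.

    Trailing underscores are stripped from attribute names (so class_ works),
    and remaining underscores become hyphens.
    """
    attr_text = "".join(
        f' {name.rstrip("_").replace("_", "-")}="{escape(str(value), quote=True)}"'
        for name, value in attrs.items()
    )
    if not children:
        return f"<{tag}{attr_text} />"
    return f"<{tag}{attr_text}>{''.join(children)}</{tag}>"


# Named helpers are just partially applied vt calls (hypothetical subset of tags).
services = partial(vt, "services")
container = partial(vt, "container")
search = partial(vt, "search")

xml = services(container(search(), id="default", version="1.0"), version="1.0")
print(xml)
```

Adding support for a new tag is then one line rather than a new class, which is the kind of saving described above.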

Hardware

Since native ColPali embedder support in Vespa is still a work in progress, we knew we would need a GPU for inference. From our experiments in Colab, we concluded that a T4 instance is sufficient.

To generate the embeddings for the PDF pages of the dataset before feeding them into Vespa, we considered using a serverless GPU provider (Modal is one of our favorites). However, since the dataset is "only" 6,692 pages, we simply used a MacBook M2 Pro, which took 5-6 hours to create the embeddings.

Hosting

There are a lot of options here. We could go with a traditional cloud provider such as AWS, GCP, or Azure, but that would require more effort on our part to set up and manage the infrastructure and would make it harder for others to replicate the application.

We learned that Hugging Face Spaces offers hosting where you can add GPUs as needed. It also provides a one-click "Clone this space" button that makes it very easy for others to copy the application.

We found that answer.ai had created a reusable library for deploying FastHTML applications on Hugging Face Spaces. On closer inspection, however, we realized that their approach uses the Docker SDK for Spaces, and that there is actually a simpler way.

The simpler way is to use Custom Python Spaces.

From the huggingface-hub documentation:

While this is not an official workflow, you can run your own Python + interface stack in Spaces by choosing Gradio as the SDK and providing a front-end interface on port 7860.

Anecdote 2: There was a typo in the documentation stating that the port to serve on was 7680. Fortunately, it didn't take us long to realize that the correct port is 7860, and we submitted a PR to fix it, which was merged by Julien Chaumond, CTO of Hugging Face. Bucket list item checked!

Vision language model

For the "generation" part of visual RAG, we need a vision language model (VLM) to generate responses based on the top-k ranked documents retrieved from Vespa.

Vespa natively supports LLMs (large language models), both external and integrated, but VLMs (vision language models) are not yet natively supported in Vespa.

With OpenAI, Anthropic and Google all releasing excellent VLMs in the past year, the field is moving fast. For performance reasons, we wanted a smaller model, and since Google's Gemini API has recently improved its developer experience, we chose gemini-1.5-flash-8b.

Of course, we recommend quantitatively evaluating different models before choosing one for a production environment, but that is beyond the scope of this project.

Building the application

With the tech stack in place, we could start building the application. The high-level architecture of the application is as follows:

Vespa application

Key components of the Vespa application include:

- The schema definition, containing the document fields and their types.

- Rank profile definitions.

- The services.xml configuration file.

All of these can be defined in Python using pyvespa, but we recommend also inspecting the generated configuration files, which you can do by calling app.package.to_files(). For details, see the pyvespa documentation.

Rank profiles

One of the most underrated features of Vespa is phased ranking. It lets you define multiple rank profiles, each of which can contain different (or inherited) ranking phases, executed on the content nodes (first and second phase) or on the container nodes (global phase).

This lets us handle many different use cases separately and find the ideal balance between latency, cost and quality for each of them.

Read what our CEO Jon Bratseth has to say about the architectural inversion of moving compute to the data in this blog post.

For this application, we defined three different rank profiles:

Note: the retrieval phase is specified by the YQL sent with the query, while the ranking strategy is specified in the rank profile (which is part of the application package supplied at deployment time).

1. Pure ColPali

The YQL used for this rank profile in our application is:

select title, text from pdf_page where ({targetHits:100}nearestNeighbor(embedding,rq{i})) OR ({targetHits:100}nearestNeighbor(embedding,rq{i+1})) OR ... OR ({targetHits:100}nearestNeighbor(embedding,rq{n})) OR userQuery();

We also set the hnsw.exploreAdditionalHits parameter to 300 to make sure no relevant matches are missed in the retrieval phase. Note that this comes at a performance cost.

Here, rq{i} is the embedding of the i-th query token (which must be provided as a parameter in the HTTP request), and n is the maximum number of query tokens used for retrieval (we use 64 in this application).

This rank profile uses the max_sim_binary ranking expression, which takes advantage of Vespa's optimized Hamming distance calculation (for details, see Scaling ColPali to billions). It is used in the first ranking phase, and the top 100 matches are then re-ranked using the full floating-point representation of the ColPali embeddings.
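Since this YQL grows with the number of query tokens, it is convenient to generate it in code. A minimal sketch, assuming the query tensors are named rq0, rq1, ... (zero-based) and that hnsw.exploreAdditionalHits is set as a nearestNeighbor annotation; build_colpali_yql is a hypothetical helper, not the demo's function:

```python
def build_colpali_yql(
    n_query_tokens: int,
    target_hits: int = 100,
    explore_additional_hits: int = 300,
    max_tokens: int = 64,
) -> str:
    """Build one nearestNeighbor clause per query token, OR-ed with userQuery()."""
    n = min(n_query_tokens, max_tokens)  # cap the number of clauses
    nn_clauses = [
        # Double braces produce a literal "{...}" annotation in the f-string.
        f"({{targetHits:{target_hits}, hnsw.exploreAdditionalHits:{explore_additional_hits}}}"
        f"nearestNeighbor(embedding,rq{i}))"
        for i in range(n)
    ]
    where = " OR ".join(nn_clauses + ["userQuery()"])
    return f"select title, text from pdf_page where {where}"


print(build_colpali_yql(2))
```

The matching query tensors (input.query(rq0), input.query(rq1), ...) would be sent alongside the YQL in the request body.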
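To make the two phases concrete, here is a pure-Python sketch of the scoring logic (not Vespa's implementation). It assumes sign-based binarization (a bit is set when the value is positive) and scores each query-token/patch pair as 1 / (1 + Hamming distance) in the first phase; treat that transform and all function names as assumptions. The second phase is the standard float MaxSim: for each query token, take the maximum dot product over the page's patches, then sum over query tokens.

```python
from __future__ import annotations


def binarize(vec: list[float]) -> int:
    """Pack sign bits into an int: bit i is set when vec[i] > 0."""
    bits = 0
    for i, x in enumerate(vec):
        if x > 0:
            bits |= 1 << i
    return bits


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two packed vectors."""
    return bin(a ^ b).count("1")


def max_sim_binary(query_bits: list[int], patch_bits: list[int]) -> float:
    # Per query token, keep the best patch (smallest Hamming distance), then sum.
    return sum(max(1.0 / (1 + hamming(q, p)) for p in patch_bits) for q in query_bits)


def max_sim(query: list[list[float]], patches: list[list[float]]) -> float:
    # Float MaxSim: per query token, max dot product over patches, summed over tokens.
    return sum(max(sum(a * b for a, b in zip(q, p)) for p in patches) for q in query)


def rank_pages(
    query: list[list[float]],
    pages: dict[str, list[list[float]]],
    rerank_count: int = 100,
) -> list[str]:
    """First phase on binary vectors for all pages, second phase on floats for the top hits."""
    query_bits = [binarize(v) for v in query]
    # In a real system the page bits would be computed once, at feed time.
    page_bits = {pid: [binarize(v) for v in vecs] for pid, vecs in pages.items()}
    first = sorted(pages, key=lambda pid: max_sim_binary(query_bits, page_bits[pid]), reverse=True)
    top = first[:rerank_count]
    return sorted(top, key=lambda pid: max_sim(query, pages[pid]), reverse=True)
```

The cheap binary pass narrows the candidates so that the more expensive float MaxSim only runs on rerank_count pages.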

2. Pure text-based ranking (BM25)

In this case, we retrieve documents based only on weakAnd:

select title, text from pdf_page where userQuery();

In the ranking phase, we use bm25 for first-phase ranking (there is no second phase).

Note that for optimal performance, we would likely want to combine text-based and vision-based ranking features (for example, using reciprocal rank fusion), but in this demo we wanted to show the differences between them rather than find the optimal combination.
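For reference, reciprocal rank fusion combines ranked lists using only positions: each document gets the sum of 1 / (k + rank) over the lists it appears in. A minimal sketch, with k = 60 as a commonly used default (an assumption here, not a value from the demo); we believe Vespa can also do this server-side in the global phase, so check the ranking documentation for the exact function.

```python
def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Fuse several ranked lists of document IDs into one ranking."""
    scores: dict[str, float] = {}
    for ranking in rankings:
        for position, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + position)
    # Highest fused score first; ties broken by ID for a deterministic order.
    return sorted(scores, key=lambda d: (-scores[d], d))


bm25_ranking = ["p1", "p2", "p3"]
colpali_ranking = ["p3", "p1", "p4"]
print(reciprocal_rank_fusion([bm25_ranking, colpali_ranking]))
```

Because it ignores raw scores, RRF sidesteps the fact that BM25 and MaxSim live on very different scales.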

3. Hybrid BM25 + ColPali

In the retrieval phase, we use the same YQL as for the pure ColPali rank profile.

We noticed that for some queries, especially short ones, pure ColPali matched many pages with no text (images only), while many of the answers we were looking for actually appeared on pages with text.

To solve this, we added a second-phase ranking expression that linearly combines the BM25 and ColPali scores: max_sim + 2 * (bm25(title) + bm25(text)).

This is based on a simple heuristic; finding the optimal weights for the different features through ranking experiments would be more beneficial.
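The phased logic of this hybrid profile can be mimicked in plain Python to experiment with weights offline. This sketch assumes you already have per-page first-phase (binary MaxSim), float MaxSim and BM25 scores, for example exported from Vespa's rank features; the Candidate class and the function names are hypothetical. text_weight=2.0 corresponds to the factor 2 in the expression above.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Candidate:
    page_id: str
    max_sim_binary: float  # first-phase score
    max_sim: float         # float ColPali MaxSim
    bm25_title: float
    bm25_text: float


def second_phase_score(c: Candidate, text_weight: float = 2.0) -> float:
    """max_sim + text_weight * (bm25(title) + bm25(text))."""
    return c.max_sim + text_weight * (c.bm25_title + c.bm25_text)


def rerank(candidates: list[Candidate], rerank_count: int = 100, text_weight: float = 2.0) -> list[Candidate]:
    """Order by the first phase, then re-score only the top rerank_count with the hybrid expression.

    Candidates below the cutoff are dropped in this sketch.
    """
    first = sorted(candidates, key=lambda c: c.max_sim_binary, reverse=True)[:rerank_count]
    return sorted(first, key=lambda c: second_phase_score(c, text_weight), reverse=True)


image_only = Candidate("image_only", 10.0, 10.0, 0.0, 0.0)
text_page = Candidate("text_page", 9.0, 9.0, 1.0, 0.5)
print([c.page_id for c in rerank([image_only, text_page])])
```

With these made-up scores, the text page overtakes the image-only page once BM25 contributes, which is the effect described above; sweeping text_weight against a labeled query set is one way to run the ranking experiments.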

Snippet generation in Vespa

In search frontends, it is common to show excerpts from the source text, with certain words displayed in bold (highlighted).

Displaying matching query terms in context lets users quickly judge whether a result is likely to satisfy their information need.

In Vespa, this feature is called "dynamic snippets", and it has various tunable parameters, such as how much surrounding context to include and the tags used to highlight matching terms.

In this demo, we show both the snippet and the full extracted text of the page for comparison.

To minimize visual noise in the results, we removed stop words (and, in, the, etc.) from the user query so that they would not be highlighted.

Learn more about Vespa dynamic snippets.
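A minimal sketch of the stop-word step, assuming a small hand-picked English stop-word list (hypothetical; the demo's list may differ) and that the cleaned string is only used to drive highlighting, not retrieval:

```python
import re

# Hypothetical, deliberately small stop-word list; a real application would use a fuller one.
STOP_WORDS = frozenset(
    {"a", "an", "and", "are", "for", "in", "is", "of", "on", "or", "the", "to", "was", "what"}
)


def strip_stop_words(query: str) -> str:
    """Lowercase, split into word tokens, and drop stop words."""
    tokens = re.findall(r"\w+", query.lower())
    return " ".join(t for t in tokens if t not in STOP_WORDS)


print(strip_stop_words("What is the return of the fund in 2008?"))
```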

Query suggestions in Vespa

A common search feature is "search suggestions", which are shown as the user types.

They are often precomputed from real user queries, but here we had no user traffic to analyze.

In this demo, we provide suggestions with a simple substring search that matches the user's input against the questions generated from the PDF pages.

The YQL query we use to get these suggestions is:

select questions from pdf_page where questions matches (".*{query}.*")
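Because {query} is interpolated into a regular expression inside a quoted YQL string, raw user input should be sanitized first. A conservative sketch (our own assumption, not the demo's code) keeps only word characters and single spaces, which removes regex metacharacters and quotes entirely; build_suggestion_yql is a hypothetical helper:

```python
import re


def build_suggestion_yql(user_input: str) -> str:
    """Build the suggestion query from sanitized user input."""
    # Replace anything that is not a word character or whitespace with a space,
    # then collapse runs of whitespace into single spaces.
    cleaned = " ".join(re.sub(r"[^\w\s]", " ", user_input).split())
    return f'select questions from pdf_page where questions matches (".*{cleaned}.*")'


print(build_suggestion_yql("  returns (2008)?  "))
```

This is stricter than necessary (it also drops harmless punctuation), but it is simple and keeps arbitrary input from changing the query. Non-ASCII letters such as æ, ø and å are kept, since \w matches Unicode word characters.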

One advantage of this approach is that every suggested question is known to have an answer in the available data!

We could have ensured that the page the suggested question was generated from always appeared among the top three results (by adding a similarity measure between the user query and the document's generated questions to the rank profile), but that would have been a bit of a "cheat" from the point of view of demonstrating the capabilities of the ColPali model.

User experience

We are fortunate to have received great UX feedback from Jo Bergum, our Chief Scientist. He pushed us to make the UX "fast and fluid". People are used to Google, so there is no doubt that speed is critical to the user experience in search (and RAG). This is still somewhat underestimated in the AI community, where many people seem happy to wait 5-10 seconds for a response. We wanted response times in the milliseconds.

Based on his feedback, we set up a staged request flow, so that we do not have to wait for the full images and similarity map tensors to be returned from Vespa before showing the results.

The solution is to first fetch only the most important data for the results. For us, this means fetching only the title, url, text and page_no, plus a scaled-down (blurred) version of the image (32x32 pixels), for the initial display of search results. This lets us show the results immediately while the full images and similarity maps load in the background.

The complete UX flow is shown below:

The main sources of delay are:

- Inference time for generating ColPali embeddings (done on the GPU, and dependent on the number of tokens in the query). We therefore use the @lru_cache decorator to avoid recomputing the embedding for the same query multiple times.

- Network latency between Hugging Face Spaces and Vespa (including TCP handshakes).

- Transfer time for the full images, which is significant (about 0.5 MB per image).

- The size of the similarity map tensors, which is large (n_query_tokens x n_images x 1030 patches x 128).

- Creating the blended similarity map images, which is CPU-intensive. This is done in a multithreaded background task using fastcore's @threaded decorator, and each image polls its corresponding endpoint to check whether its similarity map is ready.

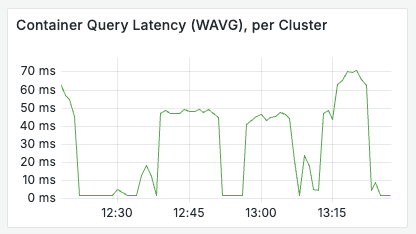

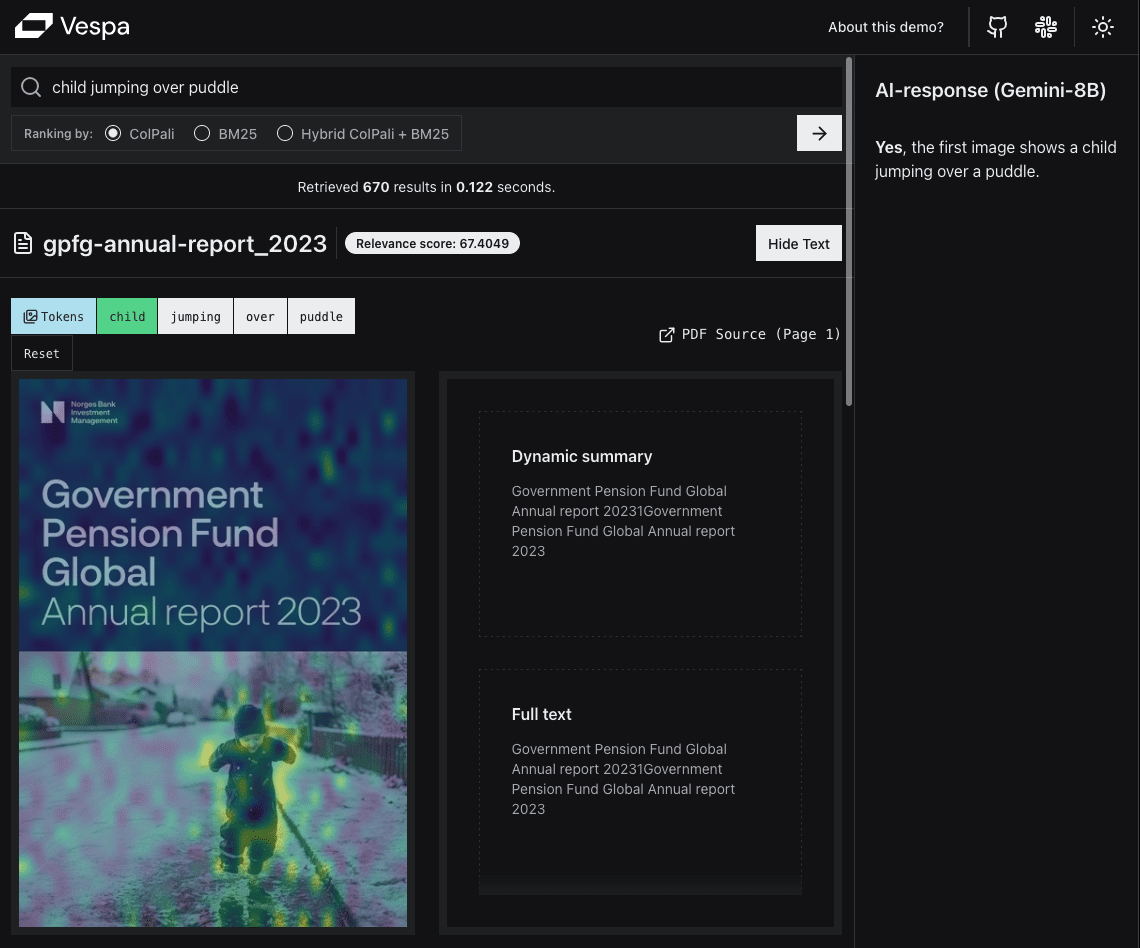

Stress test

We were concerned about how the application would perform during a traffic spike, so we ran a simple stress test. We copied the cURL command for a request to /fetch_results from the browser's developer tools (with caching disabled) and ran it in a loop in 10 parallel terminals. (For this test, we also disabled the @lru_cache decorator.)

Results

While the tests were very basic, they showed that the bottleneck for search throughput was computing the ColPali embeddings on the GPU in the Hugging Face Space, while the Vespa backend could easily handle more than 20 queries per second with very low resource utilization. We think this is more than enough for a demo. If we need to scale, our first step would be to enable a larger GPU instance for the Hugging Face Space.

As the following charts show, the Vespa application performed well under load.
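The same experiment can also be scripted instead of using ten terminals. A minimal sketch with a thread pool, where send_request is any zero-argument callable (for example, a hypothetical wrapper around urllib.request.urlopen that calls /fetch_results); the function name and the reported metrics are our own choices:

```python
from __future__ import annotations

import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable


def load_test(
    send_request: Callable[[], object],
    workers: int = 10,
    requests_per_worker: int = 20,
) -> dict[str, float]:
    """Run send_request in a loop on `workers` threads and report throughput and latency."""

    def worker() -> list[float]:
        latencies = []
        for _ in range(requests_per_worker):
            start = time.perf_counter()
            send_request()
            latencies.append(time.perf_counter() - start)
        return latencies

    wall_start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = [pool.submit(worker) for _ in range(workers)]
        latencies = sorted(lat for f in futures for lat in f.result())
    wall = time.perf_counter() - wall_start
    return {
        "requests": float(len(latencies)),
        "qps": len(latencies) / wall,
        "p50_s": latencies[len(latencies) // 2],
        "p95_s": latencies[int(0.95 * (len(latencies) - 1))],
    }
```

Running it once against the full endpoint and once against Vespa directly is one way to separate GPU embedding time from backend time.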

Reflections on using FastHTML

The main takeaway from using FastHTML is that it breaks down the barriers between front-end and back-end development. The code is tightly integrated, allowing all of us to understand and contribute to every part of the application. This should not be underestimated.

We really enjoyed being able to use the browser's development tools to inspect the front-end code and actually see and understand most of it.

The development and deployment process is significantly simplified compared to using a standalone front-end framework.

It also let us use uv to manage dependencies, which has dramatically changed how we handle dependencies in Python.

Thomas' view:

As a developer with a data science and AI background, preferring Python but having worked with multiple JS frameworks, my experience has been very positive. I felt better able to engage in front-end related tasks without adding too much complexity to the project. I really enjoyed being able to understand every part of the application.

Andreas' view:

I've been working on Vespa for a long time, but haven't done much Python or frontend development. I felt a little overwhelmed for the first day or two, but it was so exciting to work across the full stack and see the effects of my changes in almost real time! With the help of large language models, it's easier than ever to get into an unfamiliar environment. I really liked that we were able to create the similarity maps with much lower latency and resource usage by computing the image patch similarities with tensor expressions inside Vespa (where the vectors are already stored in memory) and returning them with the search results.

Leandro's view:

As a developer with a solid foundation in web development using React, JavaScript, TypeScript, HTML, and CSS, moving to FastHTML was relatively simple. The framework's direct HTML element mapping was highly consistent with my prior knowledge, which reduced the learning curve. The main challenge was adapting to FastHTML's Python-based syntax, which differs from the standard HTML/JS structure.

Is visual technology all you need?

We have seen that token-level late-interaction embeddings from vision language models (VLMs) are very powerful for certain types of queries, but we do not see them as a one-size-fits-all solution; rather, they are a very valuable tool in the toolbox.

In addition to ColPali, we have seen other innovations in visual retrieval over the past year. Two particularly interesting approaches are:

- Document Screenshot Embedding (DSE)[5] - A bi-encoder model that generates dense embeddings for document screenshots and uses these embeddings for retrieval.

- IBM Docling - A library for parsing many types of documents (e.g., PDF, PPT, DOCX) into Markdown, avoiding OCR by using computer vision models instead.

Vespa supports combining these approaches, enabling developers to find the best balance between latency, cost and quality for a given use case.

We can envision an application that combines high-quality text extraction with Docling or similar tools, dense retrieval using document screenshot embeddings, and ranking with text features and MaxSim scores from ColPali-like models. If you really want to push performance, you can even combine all of these features in a GBDT model such as XGBoost or LightGBM.

Therefore, while ColPali is a powerful tool for making information that is hard to extract as text retrievable, it is not a panacea and should be combined with other approaches for optimal performance.

The missing link

Models are temporary; evaluations are permanent.

Adding automated evaluation is beyond the scope of this demo, but we strongly recommend creating an evaluation dataset for your own use case. You can use LLM-as-a-judge to bootstrap one (see this blog post to learn how we did this for search.vespa.ai).

Vespa offers many tunable parameters, and with quantitative feedback from different experiments, you can find the best tradeoffs for your specific use case.
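Before setting up an LLM judge, the synthetic queries generated earlier already give a cheap labeled set: each query comes from a known page, so you can check where that page ranks. A minimal sketch computing recall@k and MRR@k, assuming a search(query, k) callable that returns page IDs (hypothetical; it could wrap any of the rank profiles above):

```python
from __future__ import annotations

from typing import Callable, Sequence


def evaluate(
    search: Callable[[str, int], Sequence[str]],
    labeled: dict[str, str],
    k: int = 10,
) -> dict[str, float]:
    """labeled maps each synthetic query to the page_id it was generated from."""
    hits = 0
    rr_total = 0.0
    for query, expected_page in labeled.items():
        results = list(search(query, k))[:k]
        if expected_page in results:
            hits += 1
            rr_total += 1.0 / (results.index(expected_page) + 1)  # reciprocal rank
    n = len(labeled)
    return {f"recall@{k}": hits / n, f"mrr@{k}": rr_total / n}
```

Comparing these numbers across rank profiles (or text_weight values) gives the kind of quantitative feedback described above, with the caveat that synthetic queries may not reflect real user behavior.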

Conclusion

We have built a live demo application that shows how to do visual RAG over PDFs in Vespa using ColPali embeddings.

If you've read this far, you may be interested in the code. You can find the code for the app here.

Now, go build your own visual RAG app!

For those who want to learn more about visual retrieval, ColPali or Vespa, feel free to join the Vespa Slack community to ask questions, get help from the community, or stay up to date on the latest developments in Vespa.

FAQ

Does ColPali require a GPU for inference?

Currently, we need a GPU to run inference on queries in a reasonable amount of time.

In the future, we expect ColPali-like models to improve in quality and efficiency (e.g., smaller embeddings), and more similar models to emerge, as we have seen with the ColBERT family of models. For example, answer.ai's answerai-colbert-small-v1 outperforms the original ColBERT model despite being less than one-third of its size.

See the Vespa blog post to learn how to use answerai-colbert-small-v1 with Vespa.

Is it possible to combine ColPali with query filters in Vespa?

Yes. In this application, we added a published_year field to the pages, but have not yet exposed it as a filter option in the frontend.

When will Vespa natively support ColPali embeddings?

See this GitHub issue.
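Adding such a filter could be as simple as wrapping the existing retrieval clause. A minimal sketch, assuming published_year is an integer attribute field that Vespa can filter on; with_year_filter is a hypothetical helper:

```python
from __future__ import annotations


def with_year_filter(yql: str, published_year: int | None = None) -> str:
    """AND an exact-year filter onto the WHERE clause of an existing YQL string."""
    if published_year is None:
        return yql
    select_part, where_part = yql.split(" where ", 1)
    # Parenthesize the original clause so the filter applies to all OR-ed branches;
    # int() guards against injecting anything but a number.
    return f"{select_part} where ({where_part}) and published_year = {int(published_year)}"


print(with_year_filter("select title, text from pdf_page where userQuery()", 2020))
```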

Does this scale to billions of documents?

Yes. Vespa supports horizontal scaling and allows you to adjust the tradeoffs between latency, cost, and quality for specific use cases.

Can this demo be adapted to support ColQwen2?

It is possible, but there are some differences in the calculation of similarity maps.

See this notebook as a starting point.

Can I run this demo with my own data?

Absolutely! By pointing the provided notebook to your own data, you can set up your own Vespa application for visual RAG. You can also use the provided web application as a starting point for your own frontend.

References

© Copyright notice

Article copyright AI Sharing Circle. Please do not reproduce without permission.
