Train Your Own DeepSeek R1 Reasoning Model with Unsloth

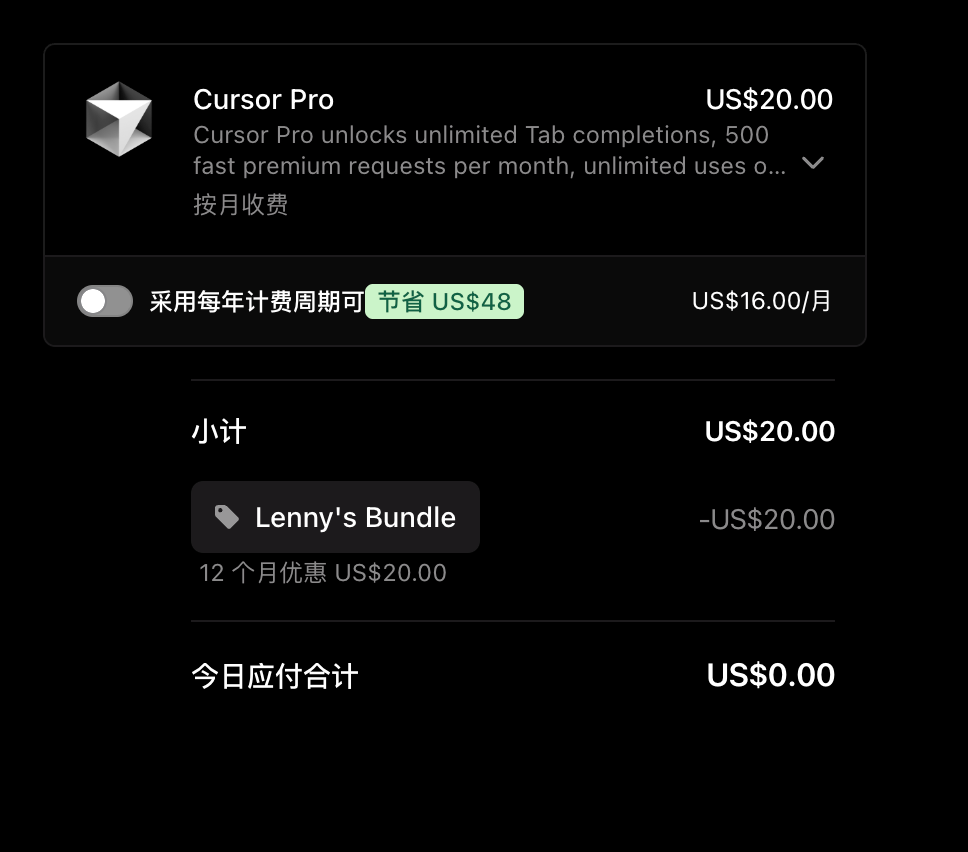

Today, Unsloth is pleased to present reasoning support in Unsloth. DeepSeek's R1 research revealed an "epiphany moment" in which R1-Zero, trained with Group Relative Policy Optimization (GRPO), learned on its own to allocate more thinking time without human feedback.

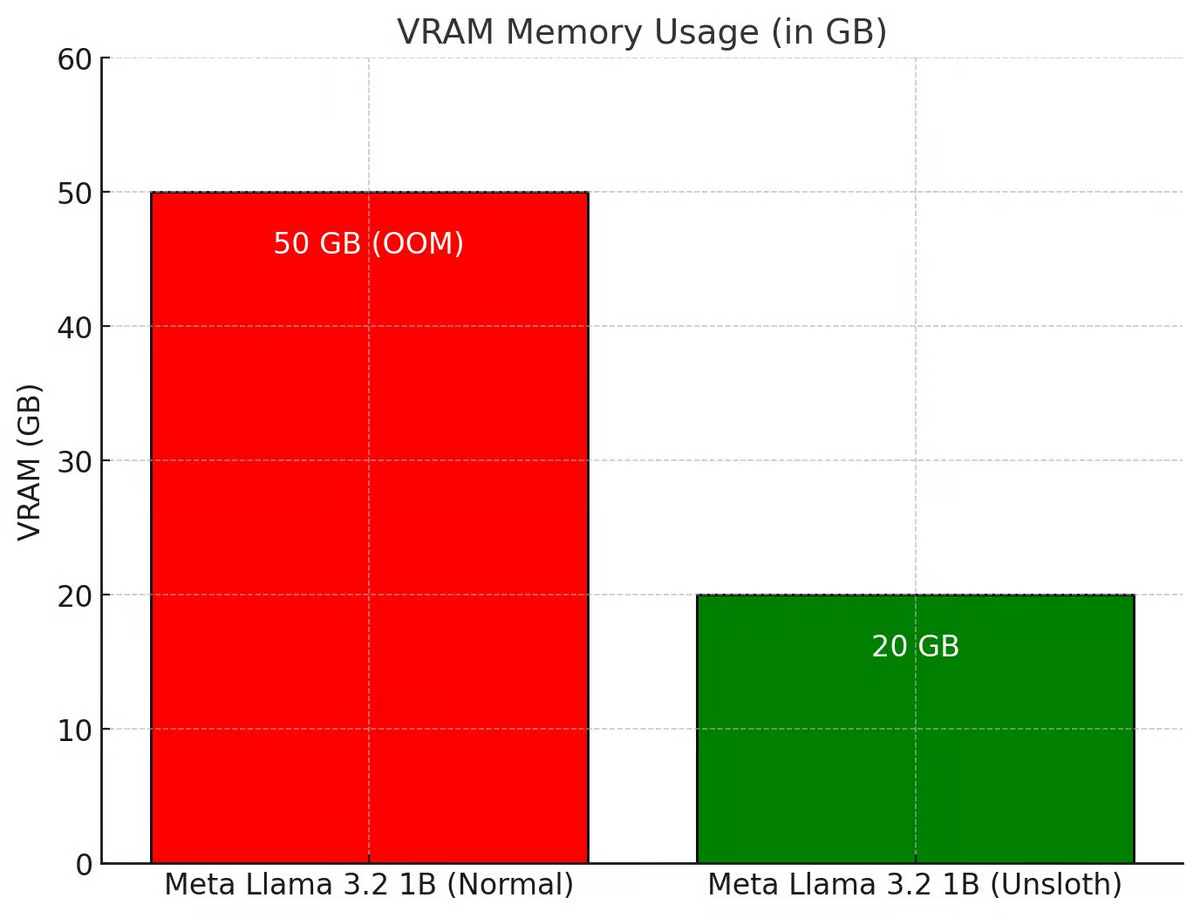

Unsloth has enhanced the entire GRPO process to use 80% less VRAM than Hugging Face + FA2, which allows users to recreate R1-Zero's "epiphany moment" on only 7GB of VRAM using Qwen2.5 (1.5B).

Try Unsloth's free GRPO notebook: Llama 3.1 (8B) on Colab

For GRPO notebooks for other models such as Phi-4, visit the Unsloth documentation.

💡 Key details

- With 15GB of VRAM, Unsloth allows users to convert any model with up to 15B parameters (e.g., Llama 3.1 (8B), Phi-4 (14B), Mistral (7B), or Qwen2.5 (7B)) into a reasoning model.

- Minimum requirements: train your own reasoning models locally with just 7GB of VRAM.

- The brilliant team at Tiny-Zero proved that users could achieve their own epiphany moments using Qwen2.5 (1.5B) - but that required 2 A100 GPUs (160GB VRAM). Now, with Unsloth, users can achieve the same epiphany moment with just one 7GB VRAM GPU!

- Previously, GRPO only supported full fine-tuning, but Unsloth makes it available for QLoRA and LoRA.

- Note that this is not fine-tuning DeepSeek's R1 distilled models, nor is it tuning with distilled data from R1 (which Unsloth already supports). This converts a standard model into a full-fledged reasoning model using GRPO.

- Use cases for GRPO include the following, among others. If a user wants to create a customized model with rewards (e.g., for law or medicine), GRPO can help. If a user has input and output data (e.g., questions and answers) but no chain of thought or reasoning process, GRPO can magically create the reasoning process for the user!

🤔 GRPO + "epiphany" moments

DeepSeek researchers observed an "epiphany moment" while training R1-Zero using pure reinforcement learning (RL). The model learned to extend its thinking time by reevaluating its initial approach without any human guidance or predefined instructions.

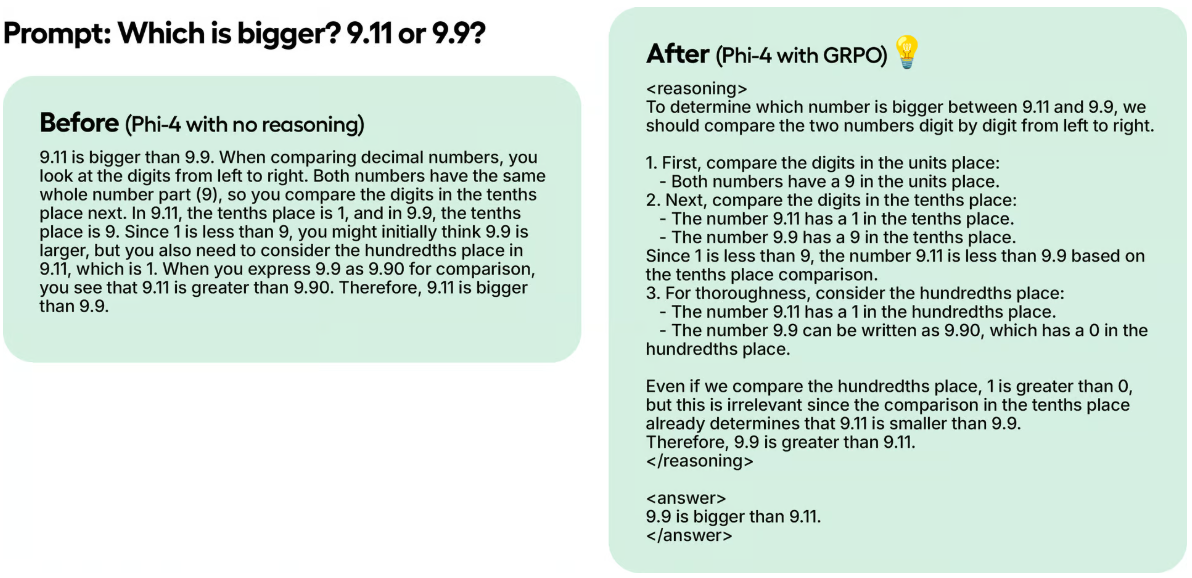

In a test example, even though Unsloth trained Phi-4 for only 100 steps using GRPO, the results are already clear. The model without GRPO does not produce the thinking token, while the model trained with GRPO does and also gets the correct answer.

This magic can be reproduced with GRPO, an RL algorithm that optimizes responses efficiently without a value function, unlike Proximal Policy Optimization (PPO), which relies on one. Unsloth's notebooks use GRPO to train a model so that it autonomously develops its own self-verification and search abilities, creating a mini epiphany moment.

How it works:

- The model generates a group of responses.

- Each response is scored on correctness or some other metric defined by a reward function, rather than by an LLM reward model.

- The average score of the group is computed.

- Each response's score is compared with the group average.

- The model is reinforced to favor higher-scoring responses.
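The comparison against the group average can be sketched in a few lines of Python. This is a minimal illustration, not Unsloth's implementation: the function name `group_relative_advantages` and the `eps` parameter are hypothetical, and dividing by the group's standard deviation is an assumption taken from the usual GRPO formulation rather than from the steps above.

```python
from statistics import mean, pstdev


def group_relative_advantages(rewards, eps=1e-6):
    """Score each response relative to the other responses in its group.

    Assumption: advantages are normalized by the group's standard
    deviation; `eps` avoids division by zero when all rewards are equal.
    """
    group_mean = mean(rewards)
    group_std = pstdev(rewards)
    return [(r - group_mean) / (group_std + eps) for r in rewards]


# Four sampled responses to one prompt: two correct (1.0), two wrong (0.0).
rewards = [1.0, 0.0, 0.0, 1.0]
advantages = group_relative_advantages(rewards)
```

Responses that score above the group average get a positive advantage and are reinforced; those below it get a negative one. No separate value function is needed because the group itself serves as the baseline.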

For example, suppose the goal is for the model to solve:

What is 1+1? >> chain of thought/calculation process >> The answer is 2.

What is 2+2? >> chain of thought/calculation process >> The answer is 4.

Originally, one had to collect large amounts of data to fill in the working out / chain of thought. But GRPO (the algorithm used by DeepSeek) or other RL algorithms can steer the model to exhibit reasoning capabilities automatically and to create the reasoning trace itself. What is needed instead is a set of good reward functions or verifiers. For example, if the model gets the right answer, give it 1 point. If some words are misspelled, subtract 0.1. And so on! Many such functions can be provided to reward the process.
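A reward function along these lines might look like the sketch below. Everything in it is hypothetical: the names `extract_answer` and `reward`, the toy `KNOWN_WORDS` vocabulary standing in for a real spell checker, and the assumption that completions end with "The answer is N". It shows only the scoring idea, not the signature any particular trainer expects.

```python
import re

# Hypothetical toy vocabulary; a real verifier would use a proper dictionary.
KNOWN_WORDS = {"the", "answer", "is", "one", "two", "plus", "equals"}


def extract_answer(completion):
    """Return the number after the last 'The answer is', or None."""
    matches = re.findall(r"The answer is\s*(-?\d+)", completion)
    return matches[-1] if matches else None


def reward(completion, expected):
    """+1 for the correct final answer, -0.1 for each unknown word."""
    score = 0.0
    if extract_answer(completion) == expected:
        score += 1.0
    words = re.findall(r"[A-Za-z]+", completion.lower())
    misspelled = sum(1 for word in words if word not in KNOWN_WORDS)
    return score - 0.1 * misspelled


clean = reward("One plus one equals 2. The answer is 2.", "2")
typo = reward("One pluss one equals 2. The answer is 2.", "2")
wrong = reward("The answer is 3.", "2")
```

Here `clean` scores highest, `typo` loses a small amount for the misspelling, and `wrong` earns nothing for correctness. Keeping each criterion small and separate makes it easier to see which behavior the model is being rewarded for.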

🦥 GRPO in Unsloth

If you are using GRPO with Unsloth locally, please also "pip install diffusers" as it is a dependency.

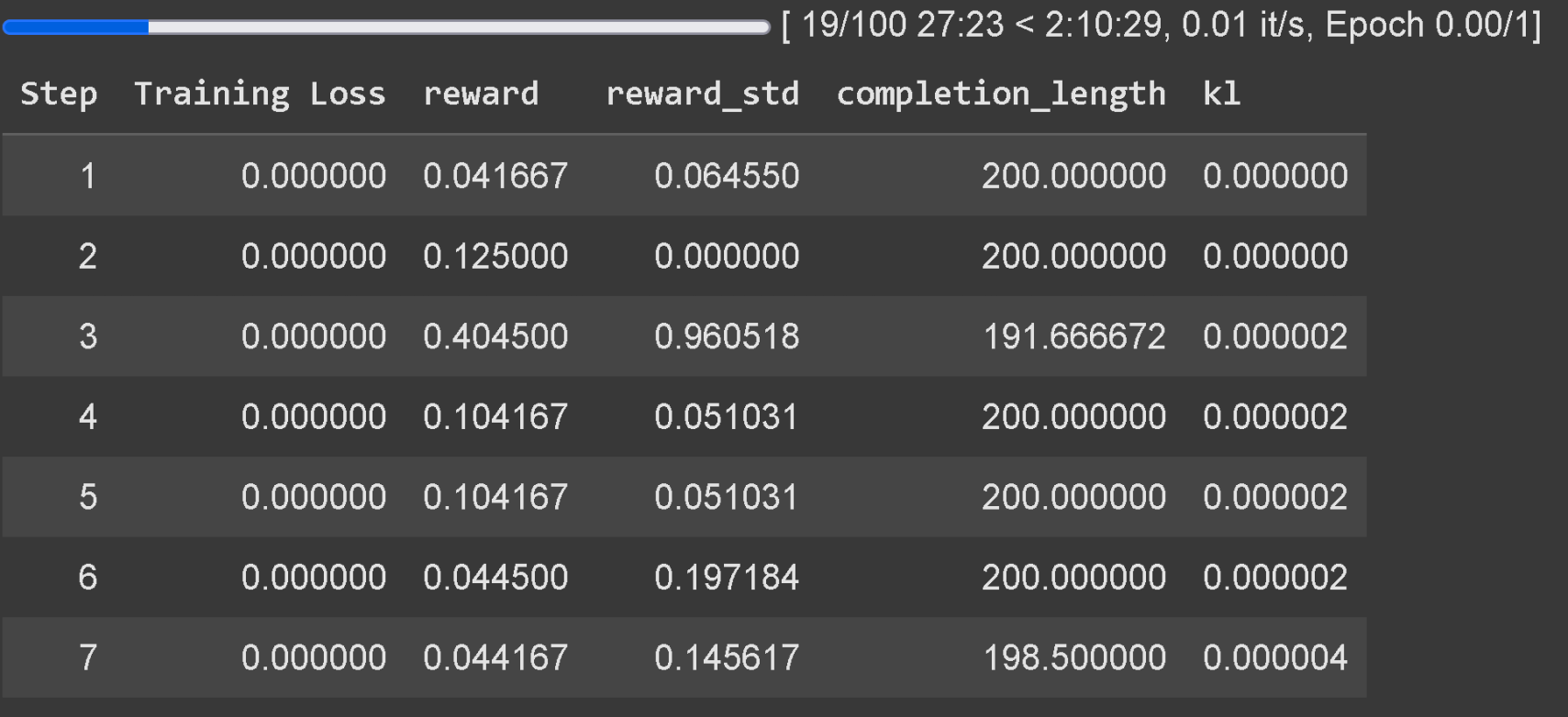

Wait at least 300 steps for the reward to actually increase, and use the latest version of vLLM. Keep in mind that Unsloth's Colab example was trained for only one hour, so its results are subpar. Good results need at least 12 hours of training (that's how GRPO works), but this is not mandatory: training can be stopped at any time.

It is recommended to apply GRPO to models with at least 1.5B parameters so that they generate thinking tokens correctly, as smaller models may not. If the user is using a base model, make sure it has a chat template. Training loss tracking for GRPO is now built directly into Unsloth, eliminating the need for external tools such as wandb.

In addition to GRPO, Unsloth has since added support for Online DPO, PPO, and RLOO! See below for a chart comparing the VRAM consumption of Unsloth's Online DPO with that of standard Hugging Face + FA2.

✨ Unsloth x vLLM

20x higher throughput and 50% VRAM savings:

Users can now use vLLM directly in the fine-tuning stack, which gives much higher throughput and lets users fine-tune and run inference on the model at the same time! On 1 A100 40GB, expect roughly 4000 tokens / s with Unsloth's dynamic 4-bit quantization of Llama 3.2 3B Instruct. On a 16GB Tesla T4 (the free Colab GPU), users get 300 tokens / s.

Unsloth has also magically eliminated double memory usage when loading vLLM and Unsloth together. Unsloth could originally fine-tune Llama 3.3 70B Instruct on one 48GB GPU, with the Llama 3.3 70B weights taking up 40GB of VRAM. Without eliminating double memory usage, loading Unsloth and vLLM at the same time would require >= 80GB of VRAM.

But with Unsloth, users can still fine-tune and get the benefits of fast inference within 48GB of VRAM! To use fast inference, first install vllm and instantiate Unsloth with fast_inference:

```shell
pip install unsloth vllm
```

```python
from unsloth import FastLanguageModel

model, tokenizer = FastLanguageModel.from_pretrained(
    model_name = "unsloth/Llama-3.2-3B-Instruct",
    fast_inference = True,
)
model.fast_generate(["Hello!"])
```

vLLM findings in Unsloth

- vLLM can now load Unsloth Dynamic 4-bit quantization. As with Unsloth's 1.58-bit Dynamic R1 GGUF, Unsloth shows that dynamically quantizing some layers to 4-bit and keeping others in 16-bit can significantly improve accuracy while keeping the model small.

- Unsloth automatically selects several parameters to balance RAM use, VRAM efficiency, and maximum throughput (e.g., the number of chunked prefill tokens and the maximum number of sequences). Unsloth enables -O3 in vLLM and prefix caching by default. Unsloth found that Flashinfer is actually 10% slower on older GPUs. The FP8 KV cache makes things 10% slower but doubles the throughput potential.

- Unsloth allows LoRA to be loaded in vLLM by parsing the state dict instead of loading it from disk, which can make GRPO training runs 1.5x faster. An active area of research is how to edit the LoRA adapters in vLLM directly (Unsloth doesn't know how yet). This could give a large speedup, since Unsloth currently moves data on the GPU unnecessarily.

- vLLM can, strangely, have random VRAM spikes, especially during batch generation. Unsloth added a batch generation function to reduce memory spikes.
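The general idea behind batched generation can be illustrated with a small sketch: split the prompts into fixed-size chunks so that only one chunk is in flight at a time. This is not Unsloth's function. The name `generate_in_batches`, the `batch_size` parameter, and the `generate_fn` callback are all hypothetical, and `fake_generate` is a stand-in for a real model call.

```python
from typing import Callable, List, Sequence


def generate_in_batches(
    prompts: Sequence[str],
    generate_fn: Callable[[List[str]], List[str]],
    batch_size: int = 8,
) -> List[str]:
    """Run `generate_fn` over `prompts` one chunk at a time, in order."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    outputs: List[str] = []
    for start in range(0, len(prompts), batch_size):
        chunk = list(prompts[start:start + batch_size])
        outputs.extend(generate_fn(chunk))
    return outputs


# Stand-in for a real generation call; it records the chunk sizes it sees.
seen_chunk_sizes = []


def fake_generate(batch):
    seen_chunk_sizes.append(len(batch))
    return [prompt.upper() for prompt in batch]


results = generate_in_batches(["a", "b", "c"], fake_generate, batch_size=2)
```

A smaller `batch_size` caps how many sequences are processed together, trading some throughput for a lower peak in memory use.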

© Copyright notes

Copyright of this article belongs to AI Sharing Circle. Please do not reproduce it without permission.
