Creating Next-Generation Chat Assistants with Amazon Bedrock, Amazon Connect, Amazon Lex, LangChain, and WhatsApp

This article was co-authored by Harrison Chase, Erick Friis and Linda Ye of LangChain.

Generative AI will revolutionize the user experience in the coming years. A key step in this process is the introduction of AI assistants that can intelligently use tools to help customers navigate the digital world. In this post, we show how to deploy a context-aware AI assistant. The assistant is built with Amazon Bedrock Knowledge Bases, Amazon Lex, and Amazon Connect, and uses WhatsApp as the interaction channel to give users a familiar and convenient interface.

Amazon Bedrock Knowledge Bases provides contextual information from private enterprise data sources to foundation models (FMs) and agents, supporting Retrieval Augmented Generation (RAG) to deliver more relevant, accurate, and customized responses. This makes it a strong fit for organizations looking to enhance their generative AI applications. Native compatibility with Amazon Lex and Amazon Connect simplifies the integration of domain-specific knowledge. By automating document ingestion, chunking, and embedding, it removes the need to manually set up complex vector databases or custom retrieval systems, substantially reducing development complexity and time.
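To make the retrieval half of RAG concrete, here is a minimal sketch of querying a knowledge base with the boto3 `bedrock-agent-runtime` client's `retrieve` operation. The function names, the `top_k` default, and the idea of passing the client in as an argument are illustrative assumptions, not part of the deployed solution.

```python
from typing import Any


def make_runtime_client() -> Any:
    """Create the Bedrock agent runtime client (needs boto3 and AWS credentials)."""
    import boto3  # imported lazily so retrieve_context can be exercised offline

    return boto3.client("bedrock-agent-runtime")


def retrieve_context(client: Any, knowledge_base_id: str, query: str, top_k: int = 3) -> list[str]:
    """Return the text of up to top_k chunks the knowledge base finds for the query."""
    response = client.retrieve(
        knowledgeBaseId=knowledge_base_id,
        retrievalQuery={"text": query},
        retrievalConfiguration={
            "vectorSearchConfiguration": {"numberOfResults": top_k}
        },
    )
    # Each result carries the chunk text plus metadata such as score and location.
    return [result["content"]["text"] for result in response["retrievalResults"]]
```

A call such as `retrieve_context(make_runtime_client(), "<your-knowledge-base-id>", "How do I reset my device?")` would return the chunk texts to place in the model prompt; the knowledge base ID is a placeholder you supply.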

This solution improves the accuracy of foundation model responses and reduces hallucinations by grounding answers in verified data. It improves cost efficiency by requiring fewer development resources and lower operational costs than maintaining a custom knowledge management system. Built on AWS serverless services, it scales to accommodate growing volumes of data and user queries. It also relies on the AWS security infrastructure to maintain data privacy and compliance. Because the knowledge base can be continuously updated and expanded, AI applications can stay current with the latest information. By choosing Amazon Bedrock Knowledge Bases, organizations can focus on creating value-added AI applications while AWS handles the complexities of knowledge management and retrieval, resulting in faster deployment of more accurate and capable AI solutions with less effort.

Prerequisites

To implement the solution, you need to have the following:

- An AWS account with permissions to create resources in Amazon Bedrock, Amazon Lex, Amazon Connect, and AWS Lambda.

- Model access enabled on Amazon Bedrock for Anthropic's Claude 3 Haiku model. Follow the steps in Access Amazon Bedrock foundation models to enable it.

- A WhatsApp Business account to integrate with Amazon Connect.

- Product documentation, knowledge articles, or other relevant data in a compatible format (e.g., PDF or text) to be imported into the knowledge base.

Solution Overview

This solution uses several key AWS AI services to build and deploy AI assistants:

- Amazon Bedrock - Amazon Bedrock is a fully managed service that offers high-performing foundation models (FMs) from leading AI companies such as AI21 Labs, Anthropic, Cohere, Meta, Mistral AI, Stability AI, and Amazon through a single API, along with the broad set of capabilities needed to build generative AI applications with security, privacy, and responsible AI.

- Amazon Bedrock Knowledge Bases - Provide AI assistants with contextual information from your company's private data sources.

- Amazon OpenSearch Service - Provides the vector store, which Amazon Bedrock Knowledge Bases supports natively.

- Amazon Lex - Builds the conversational interface for the AI assistant, including defining intents and slots.

- Amazon Connect - Integrates with WhatsApp to make the AI assistant available in this popular messaging app.

- AWS Lambda - Run the code to integrate the services and implement the LangChain agent that forms the core logic of the AI assistant.

- Amazon API Gateway - Receive inbound requests triggered from WhatsApp and route the request to AWS Lambda for further processing.

- Amazon DynamoDB - Stores received and generated messages to support dialog memory.

- Amazon SNS - Handles the routing of outbound responses from Amazon Connect.

- LangChain - Provides a powerful abstraction layer for building the LangChain agent that helps foundation models (FMs) perform context-aware reasoning.

- LangSmith - Receives agent execution logs for enhanced observability, including debugging, monitoring, and testing and evaluation capabilities.

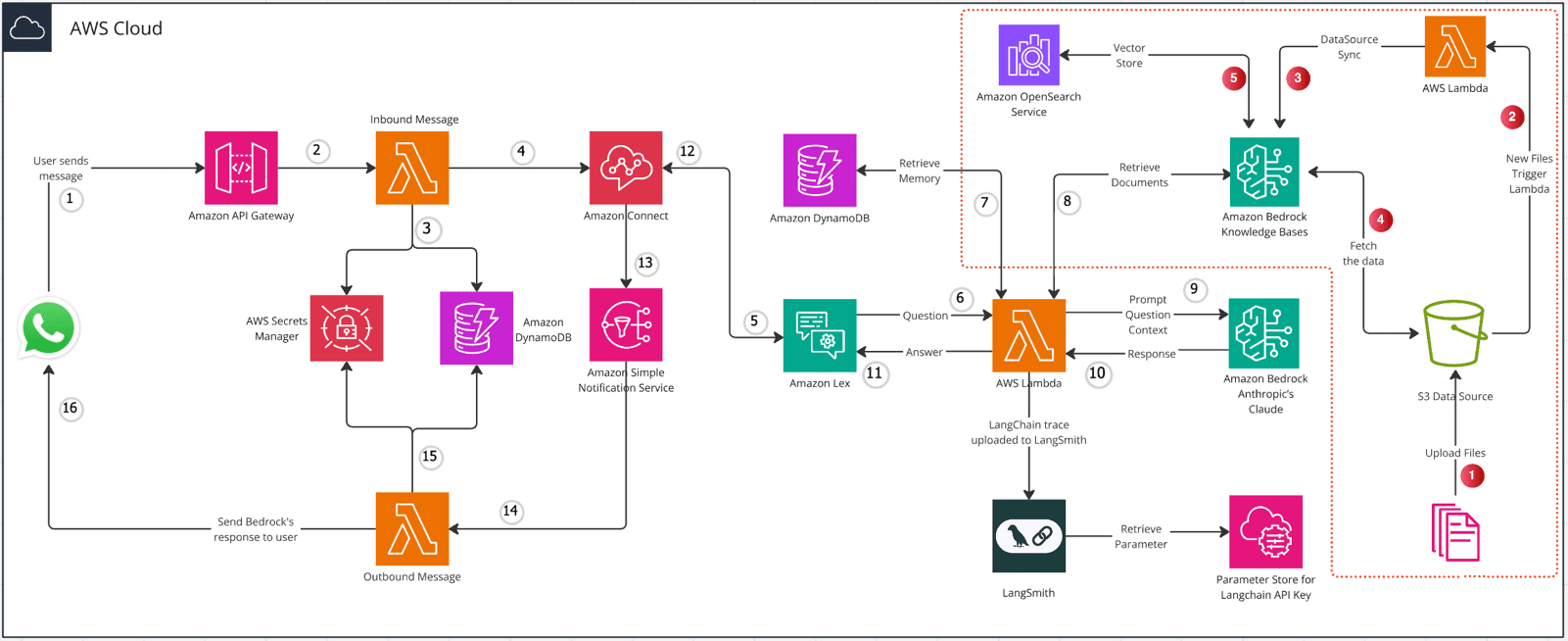

The following illustration shows the architecture.

Process Description

The data ingestion process is indicated by red numbers on the right side of the figure:

- Upload the file to the Amazon Simple Storage Service (Amazon S3) data source.

- The new file triggers the Lambda function.

- The Lambda function invokes a sync operation on the knowledge base data source.

- Amazon Bedrock Knowledge Bases fetches the data from Amazon S3, chunks it, and generates embedding vectors using the selected foundation model (FM).

- Amazon Bedrock Knowledge Bases stores embedding vectors in the Amazon OpenSearch Service.
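The trigger-and-sync steps in the ingestion flow above can be sketched as a small Lambda handler. This is an illustration under stated assumptions rather than the function the template deploys: the environment variable names `KNOWLEDGE_BASE_ID` and `DATA_SOURCE_ID` and the optional `client` argument are hypothetical. The `start_ingestion_job` call is the boto3 `bedrock-agent` operation that starts a data source sync.

```python
import os
from typing import Any, Optional
from urllib.parse import unquote_plus


def uploaded_keys(event: dict) -> list[str]:
    """List the object keys named in an S3 event notification."""
    # S3 URL-encodes keys in event notifications, so decode them.
    return [unquote_plus(r["s3"]["object"]["key"]) for r in event.get("Records", [])]


def start_sync(client: Any, knowledge_base_id: str, data_source_id: str) -> str:
    """Start an ingestion (sync) job and return its ID."""
    response = client.start_ingestion_job(
        knowledgeBaseId=knowledge_base_id,
        dataSourceId=data_source_id,
    )
    return response["ingestionJob"]["ingestionJobId"]


def lambda_handler(event: dict, context: Any, client: Optional[Any] = None) -> dict:
    """Entry point: an S3 upload event triggers a knowledge base sync."""
    if client is None:
        import boto3  # lazy import keeps the helpers above testable offline

        client = boto3.client("bedrock-agent")
    job_id = start_sync(
        client,
        os.environ["KNOWLEDGE_BASE_ID"],  # hypothetical variable name
        os.environ["DATA_SOURCE_ID"],  # hypothetical variable name
    )
    return {"ingestionJobId": job_id, "objects": uploaded_keys(event)}
```

Chunking, embedding, and writing vectors to the store all happen inside the ingestion job, so the handler only needs to start it.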

The messaging flow is indicated by the numbers on the left side of the figure:

- The user initiates communication by sending a message through WhatsApp to a webhook exposed through Amazon API Gateway.

- Amazon API Gateway routes inbound messages to an inbound message processor that runs on AWS Lambda.

- The inbound message processor records the user's contact information in Amazon DynamoDB.

- For first-time users, the inbound message processor creates a new session in Amazon Connect and records it in DynamoDB. For returning users, their existing Amazon Connect session is resumed.

- Amazon Connect forwards user messages to Amazon Lex for natural language processing.

- Amazon Lex triggers the LangChain AI assistant implemented by Lambda functions.

- LangChain AI assistant retrieves conversation history from DynamoDB.

- The LangChain AI assistant uses Amazon Bedrock Knowledge Bases to retrieve relevant contextual information.

- The LangChain AI assistant builds a prompt that combines the contextual data with the user query and submits it to the foundation model running on Amazon Bedrock.

- Amazon Bedrock processes the input and returns the model's response to the LangChain AI assistant.

- The LangChain AI assistant passes the model's response back to Amazon Lex.

- Amazon Lex transmits the model's response to Amazon Connect.

- Amazon Connect publishes the model's response to Amazon Simple Notification Service (Amazon SNS).

- Amazon SNS triggers the outbound message processor Lambda function.

- The outbound message processor retrieves the relevant chat contact information from Amazon DynamoDB.

- The outbound message processor sends the response to the user through Meta's WhatsApp API.
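The Amazon Lex portion of the flow above, where Lex invokes the assistant and receives its reply, can be sketched as a Lambda fulfillment handler. The event fields (`sessionId`, `inputTranscript`, `sessionState.intent.name`) and the `Close` response shape follow the Lex V2 Lambda contract. The `answer` callback is a hypothetical stand-in for the LangChain agent, whose code is not shown in this post.

```python
from typing import Callable


def close_response(intent_name: str, text: str) -> dict:
    """Build a Lex V2 response that ends the turn with a plain-text message."""
    return {
        "sessionState": {
            "dialogAction": {"type": "Close"},
            "intent": {"name": intent_name, "state": "Fulfilled"},
        },
        "messages": [{"contentType": "PlainText", "content": text}],
    }


def handle_lex_event(event: dict, answer: Callable[[str, str], str]) -> dict:
    """Pass the user's text to the assistant and wrap its reply for Lex.

    answer(session_id, user_text) is a hypothetical hook for the agent; the
    session ID lets it look up conversation history for this user.
    """
    session_id = event["sessionId"]
    user_text = event["inputTranscript"]
    intent_name = event["sessionState"]["intent"]["name"]
    return close_response(intent_name, answer(session_id, user_text))
```

Keeping the agent behind a callback means the Lex-specific request and response handling can be tested without calling Amazon Bedrock.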

Deploying this AI assistant involves three main steps:

- Use Amazon Bedrock Knowledge Bases to create a knowledge base and import relevant product documentation, frequently asked questions (FAQs), knowledge articles, and other useful data that will help AI Assistant answer user questions. The data should cover key use cases and topics supported by the AI Assistant.

- Create a LangChain agent to drive the logic of the AI assistant. The agent is implemented in a Lambda function and uses the knowledge base as its primary tool for looking up information. We provide an AWS CloudFormation template that automatically deploys the agent and other resources. See the list of resources in the next section.

- Create an Amazon Connect instance and configure the WhatsApp integration. This lets users chat with the AI assistant through WhatsApp, providing a familiar interface and supporting rich interactions such as images and buttons. The popularity of WhatsApp increases the accessibility of the AI assistant.
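To illustrate what the agent does with retrieved context, the sketch below assembles a prompt and calls the model. It deliberately uses the boto3 `bedrock-runtime` Converse API directly instead of LangChain, so it is a simplified stand-in and not the deployed agent. The system prompt wording, the inference settings, and the function names are assumptions; the model ID is the Amazon Bedrock identifier for Claude 3 Haiku.

```python
from typing import Any

MODEL_ID = "anthropic.claude-3-haiku-20240307-v1:0"

# Illustrative system prompt; the deployed agent's wording is not shown in this post.
SYSTEM_TEMPLATE = (
    "You are a helpful assistant. Answer using only the context below. "
    "If the context does not contain the answer, say you do not know.\n\n"
    "Context:\n{context}"
)


def build_messages(history: list[tuple[str, str]], question: str) -> list[dict]:
    """Convert (role, text) history plus the new question into Converse messages.

    The history is expected to alternate "user" and "assistant" turns,
    starting with "user" and ending with "assistant".
    """
    messages = [{"role": role, "content": [{"text": text}]} for role, text in history]
    messages.append({"role": "user", "content": [{"text": question}]})
    return messages


def generate_answer(
    client: Any,
    context_chunks: list[str],
    history: list[tuple[str, str]],
    question: str,
) -> str:
    """Combine context, history, and the question, then call the model."""
    system_text = SYSTEM_TEMPLATE.format(context="\n---\n".join(context_chunks))
    response = client.converse(
        modelId=MODEL_ID,
        system=[{"text": system_text}],
        messages=build_messages(history, question),
        inferenceConfig={"maxTokens": 512, "temperature": 0.2},  # assumed values
    )
    return response["output"]["message"]["content"][0]["text"]
```

Here `client` would be `boto3.client("bedrock-runtime")`, `context_chunks` the text returned by the knowledge base, and `history` the turns loaded from DynamoDB.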

Solution Deployment

We provide a pre-built AWS CloudFormation template that deploys everything you need in your AWS account.

- Sign in to the AWS console if you haven't already.

- Choose the following Launch Stack button to open the CloudFormation console and create a new stack.

- Enter the following parameters:

  - StackName: Name your stack, for example WhatsAppAIStack

  - LangchainAPIKey: The API key generated through LangChain

| Region | Deployment Button | Template URL (for upgrading an existing stack to a new version) | AWS CDK stack (customizable as needed) |
|---|---|---|---|
| N. Virginia (us-east-1) | Launch Stack | YML | GitHub |

- Select the check box to acknowledge that you are creating AWS Identity and Access Management (IAM) resources, then choose Create stack.

- Wait for the stack to finish creating, which takes about 10 minutes. When it finishes, the following is created:

- LangChain agent

- Amazon Lex bot

- Amazon Bedrock Knowledge Base

- Vector Storage (Amazon OpenSearch Serverless)

- Lambda functions (for data ingestion and providers)

- Data sources (Amazon S3)

- DynamoDB

- Parameter Store parameter that stores the LangChain API key

- IAM roles and permissions

- Upload files to the data source (Amazon S3) created for WhatsApp. Once you upload a file, the data source syncs automatically.

- To test the assistant, open the Amazon Lex console and select the most recently created assistant. Choose English, then choose Test and send a message.
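If you prefer to script the deployment instead of using the console, the same stack could be created with the boto3 CloudFormation client, roughly as sketched below. The template URL must be supplied by you (the link from the table above is not reproduced here), `LangchainAPIKey` is the parameter named in the list above, and passing both IAM capabilities is an assumption about what the template requires.

```python
from typing import Any


def build_stack_request(stack_name: str, template_url: str, langchain_api_key: str) -> dict:
    """Assemble the arguments for CloudFormation create_stack."""
    return {
        "StackName": stack_name,
        "TemplateURL": template_url,
        "Parameters": [
            {"ParameterKey": "LangchainAPIKey", "ParameterValue": langchain_api_key},
        ],
        # Equivalent of the console check box acknowledging IAM resource creation.
        # Listing both capabilities is an assumption; the template may need only one.
        "Capabilities": ["CAPABILITY_IAM", "CAPABILITY_NAMED_IAM"],
    }


def deploy(client: Any, request: dict) -> str:
    """Create the stack, block until creation completes, and return the stack ID."""
    stack_id = client.create_stack(**request)["StackId"]
    client.get_waiter("stack_create_complete").wait(StackName=request["StackName"])
    return stack_id
```

With `client = boto3.client("cloudformation", region_name="us-east-1")`, calling `deploy(client, build_stack_request("WhatsAppAIStack", template_url, api_key))` would mirror the console steps.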

Creating an Amazon Connect Instance and Integrating WhatsApp

Configure Amazon Connect to integrate with your WhatsApp Business account and enable the WhatsApp channel for the AI assistant:

- Navigate to Amazon Connect in the AWS console. If you haven't already, create an instance. Under Distribution settings, copy your Instance ARN. You will need this information later to link your WhatsApp Business account.

- Select your instance, and then in the navigation pane, choose Flows. Scroll down and select Amazon Lex. Select your bot and choose Add Amazon Lex Bot.

- In the navigation pane, choose Overview. Under Access Information, choose Log in for emergency access.

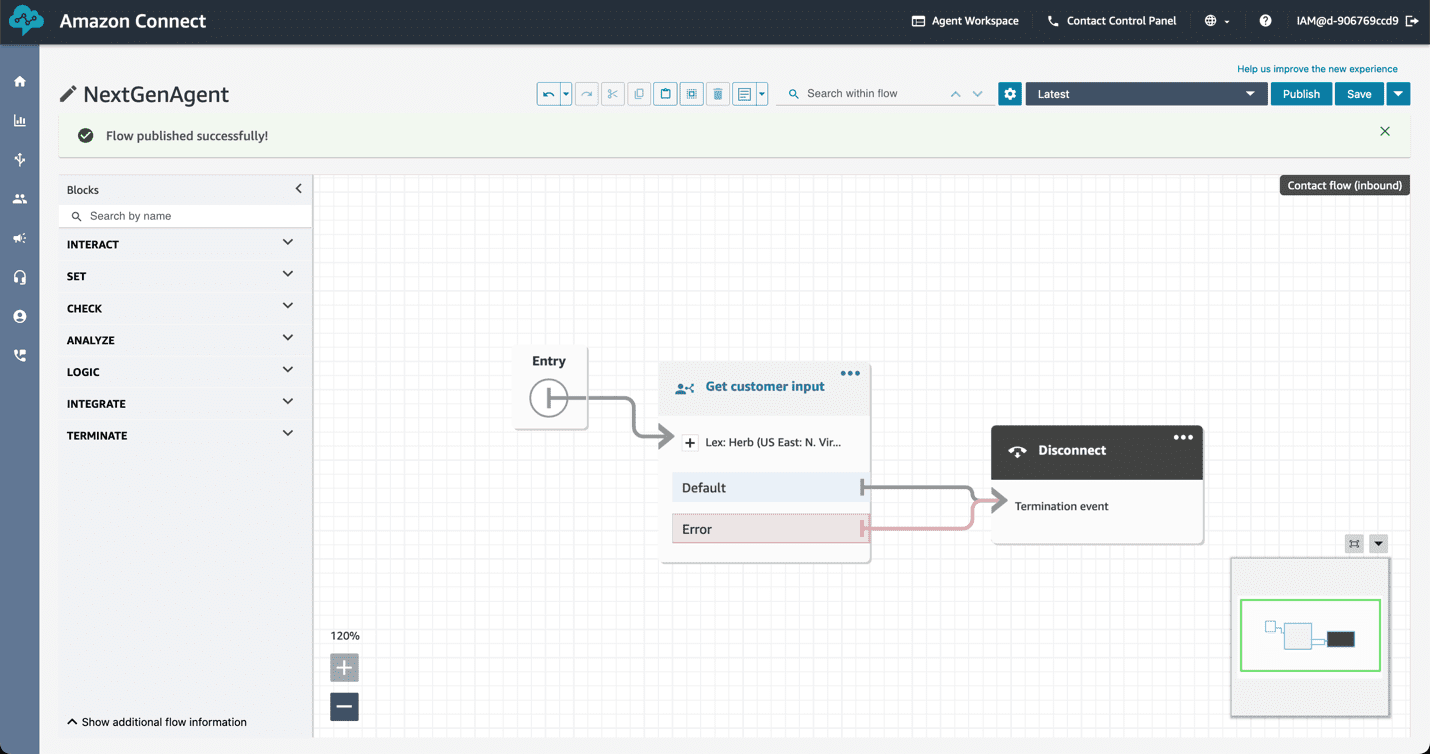

- In the Amazon Connect console, in the navigation panel of the Routing lower option Flows. Selection Create flow. Place a Get customer input Drag the block into the flow. Select the block. Select the Text-to-speech or chat text and add an introductory message such as "Hello, how can I help you today?" Scroll down and choose Amazon LexThen select the Amazon Lex bot you created in step 2.

- After saving the block, add another block named "Disconnect". Place the Entry The arrow connects to the Get customer inputand will Get customer input The arrow connects to the Disconnect. Selection PublishThe

- After publishing, at the bottom of the navigation panel select Show additional flow information. Copy the Amazon Resource Name (ARN) of the process. you will need this information later to deploy the WhatsApp integration. The following screenshot shows the flow of the Amazon Connect console.

- Deploy the WhatsApp integration as detailed in Provide WhatsApp messaging as a channel with Amazon Connect.

Test Solutions

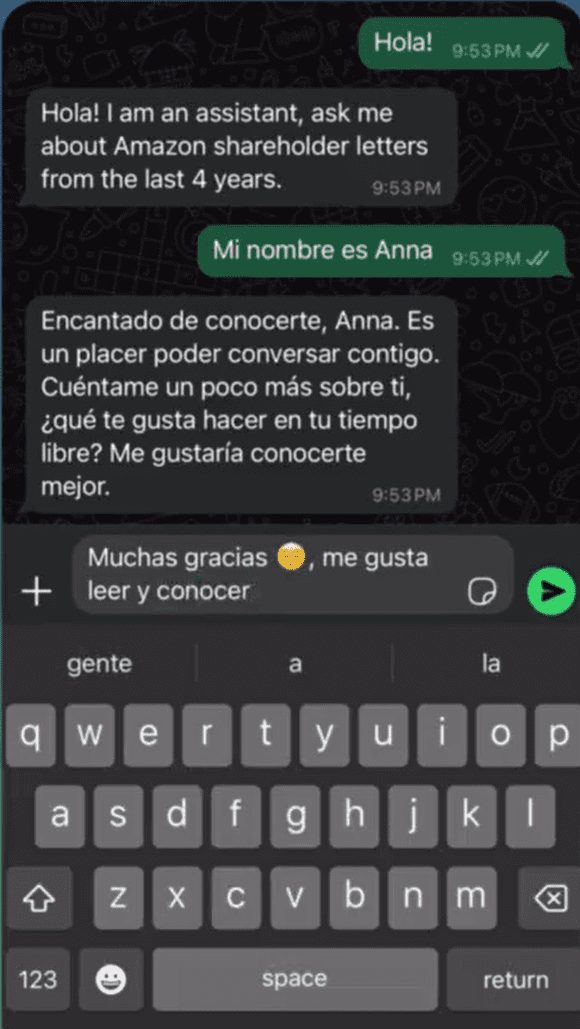

Interact with the AI assistant via WhatsApp, as demonstrated below:

Clean Up

To avoid incurring ongoing costs, delete resources after you have finished using them:

- Delete the CloudFormation stack.

- Delete the Amazon Connect instance.
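Both cleanup steps can also be scripted. The sketch below assumes you pass in a boto3 `cloudformation` client and a `connect` client, along with the stack name and the Instance ARN you copied earlier. The helper names are illustrative.

```python
from typing import Any


def instance_id_from_arn(instance_arn: str) -> str:
    """Extract the instance ID, which is the final path segment of the ARN."""
    return instance_arn.rsplit("/", 1)[-1]


def clean_up(cfn_client: Any, connect_client: Any, stack_name: str, instance_arn: str) -> None:
    """Delete the CloudFormation stack, then the Amazon Connect instance."""
    cfn_client.delete_stack(StackName=stack_name)
    # Wait so that a failed stack deletion surfaces before moving on.
    cfn_client.get_waiter("stack_delete_complete").wait(StackName=stack_name)
    connect_client.delete_instance(InstanceId=instance_id_from_arn(instance_arn))
```

Deleting the Amazon Connect instance is irreversible, so confirm that no other flows or integrations depend on it before running this.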

Conclusion

This post showed how to integrate Amazon Bedrock, Amazon Lex, and Amazon Connect to create an intelligent conversational AI assistant and deploy it to WhatsApp.

The solution ingests relevant data into a knowledge base in Amazon Bedrock Knowledge Bases, implements the assistant as a LangChain agent that answers questions using the knowledge base, and gives users access through WhatsApp. The result is an accessible, intelligent AI assistant that can guide users through your company's products and services.

Possible next steps include customizing the AI assistant for specific use cases, expanding the knowledge base, and using LangSmith to analyze conversation logs to identify problems, fix errors, and break down performance bottlenecks in the FM call sequence.

© Copyright notice

Article copyright belongs to AI Sharing Circle. Please do not reproduce without permission.
