General Introduction

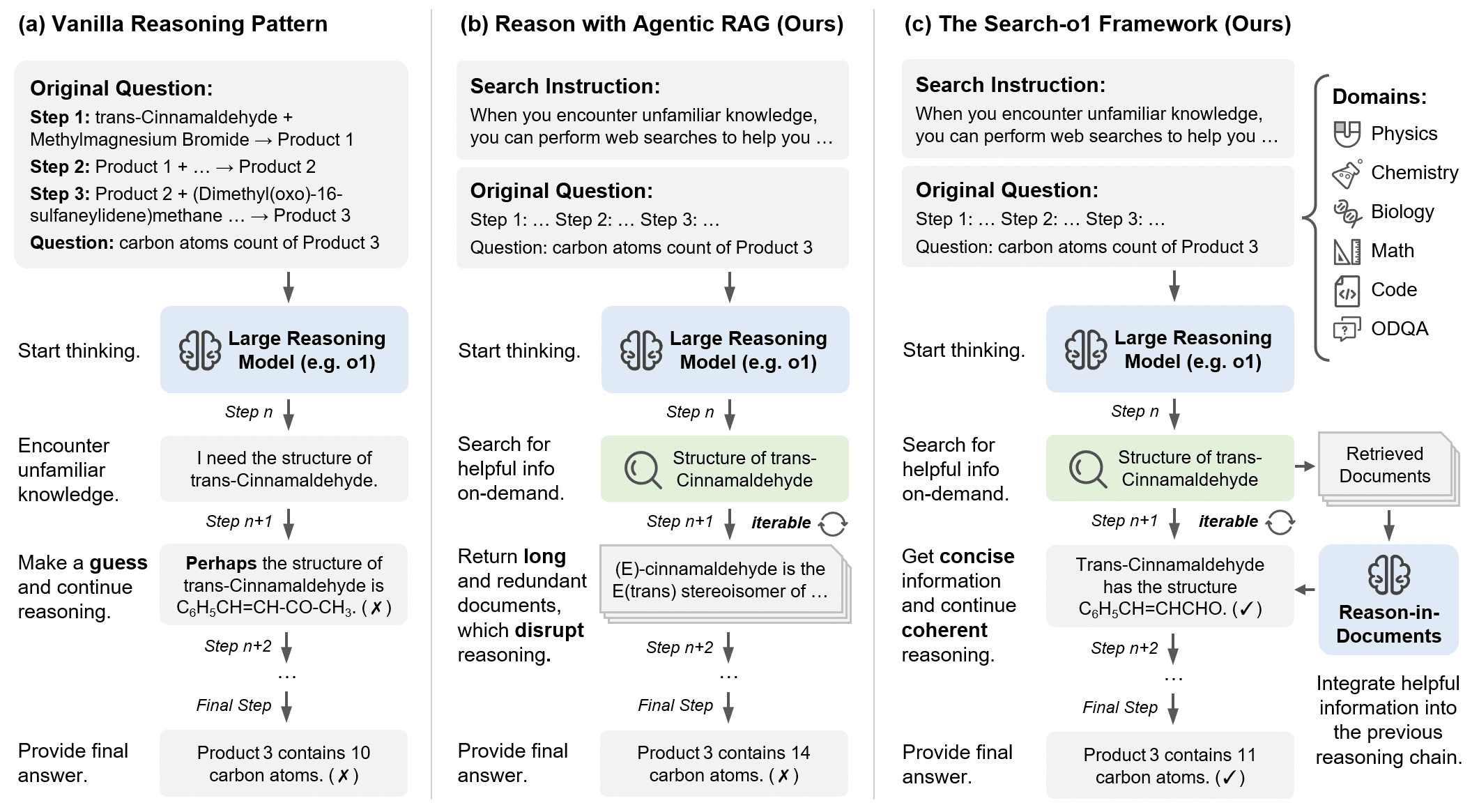

Search-o1 is an open-source project that aims to improve the performance of large reasoning models (LRMs) by integrating advanced search mechanisms. Its core idea is to fill the knowledge gaps that arise during reasoning through dynamic search and knowledge integration. Developed by the sunnynexus team, the project provides a batch generation mechanism and interleaved search, inserting relevant documents into the reasoning process in real time to improve the accuracy and reliability of the reasoning. Search-o1 targets complex scientific, mathematical, and programming questions, supports multiple language environments, and is developed and deployed mainly in Python.

Feature List

- Batch generation mechanism: Generates multiple reasoning sequences at once to improve efficiency.

- Interleaved search: Dynamically searches for relevant information whenever a knowledge gap is detected during reasoning.

- Document integration: Refines retrieved documents and integrates them seamlessly into the reasoning chain.

- Multidisciplinary support: Handles complex questions in fields such as science, mathematics, and coding.

- Real-time knowledge updates: Gives the model access to up-to-date knowledge during reasoning.

User Guide

Installation process

The Search-o1 project is primarily hosted and distributed via GitHub. The installation process is as follows:

1. Clone the repository:

git clone https://github.com/sunnynexus/Search-o1.git

2. Create a virtual environment:

conda create -n search_o1 python=3.9

conda activate search_o1

3. Install the dependencies:

cd Search-o1

pip install -r requirements.txt

4. Preprocess the data: use the code in data/data_pre_process.ipynb to convert the dataset into the standard JSON format.

Usage

Initialize the reasoning sequence

Search-o1 initializes a reasoning sequence by combining the task instruction with the input question. For example:

from search_o1 import initialize_reasoning

init_sequence = initialize_reasoning("Please count the number of primes", "between 1 and 100")
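As a rough illustration of what initialization involves, the sketch below combines a task instruction and a question into a single prompt. The `ReasoningSequence` class, the `build_initial_sequence` function, and the prompt template are hypothetical and are not part of Search-o1's API; the real project may format its prompts differently.

```python
from dataclasses import dataclass


@dataclass
class ReasoningSequence:
    """Hypothetical container for one reasoning trace (not Search-o1's API)."""
    prompt: str
    output: str = ""
    finished: bool = False


def build_initial_sequence(instruction: str, question: str) -> ReasoningSequence:
    # Assumed template: the instruction first, then the question on its own line.
    prompt = f"{instruction.strip()}\n\nQuestion: {question.strip()}\n"
    return ReasoningSequence(prompt=prompt)


seq = build_initial_sequence("Please count the number of primes", "between 1 and 100")
print(seq.prompt)
```

Keeping the prompt, the generated output, and a finished flag together makes it easy to batch many sequences and drop the finished ones later.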

Batch Generation and Search

Search is triggered whenever the model needs external knowledge:

from search_o1 import batch_generate_and_search

results = batch_generate_and_search(init_sequence, max_tokens=500)

- Batch generation: The batch_generate_and_search function generates multiple reasoning paths simultaneously and checks each path for the need for further knowledge queries.

- Search integration: Once a search need is detected, the system queries a predefined search engine (e.g., Google or a custom database) for relevant documents, which are then refined and integrated into the reasoning chain.
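The loop below is a minimal sketch of batched generation with interleaved search, under several assumptions: the model requests a search by wrapping a query in `<|begin_search_query|>` and `<|end_search_query|>`, the refined result is appended between result markers, and `generate` and `search` are caller-supplied functions. The marker strings, `batch_generate_and_search_sketch`, and the keyword-overlap `refine` step are illustrative stand-ins, not Search-o1's actual implementation; `fake_generate` and `fake_search` replace a real model and search engine so the example runs offline.

```python
import re
from typing import Callable, List, Optional

# Hypothetical markers around a search query and around the retrieved text.
BEGIN_Q, END_Q = "<|begin_search_query|>", "<|end_search_query|>"
BEGIN_R, END_R = "<|begin_search_result|>", "<|end_search_result|>"
QUERY_RE = re.compile(re.escape(BEGIN_Q) + r"(.*?)" + re.escape(END_Q), re.DOTALL)


def extract_last_query(text: str) -> Optional[str]:
    """Return the most recent search query in `text`, if any."""
    matches = QUERY_RE.findall(text)
    return matches[-1].strip() if matches else None


def refine(query: str, docs: List[str]) -> str:
    """Stand-in refinement: keep only documents sharing a word with the query."""
    words = set(query.lower().split())
    kept = [d for d in docs if words & set(d.lower().split())]
    return " ".join(kept)


def batch_generate_and_search_sketch(
    prompts: List[str],
    generate: Callable[[List[str]], List[str]],
    search: Callable[[str], List[str]],
    max_rounds: int = 5,
) -> List[str]:
    traces = list(prompts)
    active = list(range(len(traces)))  # indices of unfinished sequences
    for _ in range(max_rounds):
        if not active:
            break
        # One batched call extends every unfinished sequence at once.
        outputs = generate([traces[i] for i in active])
        still_active = []
        for i, out in zip(active, outputs):
            traces[i] += out
            query = extract_last_query(out)
            if query is None:
                continue  # no search requested: treat the sequence as finished
            # Knowledge gap detected: search, refine, and splice the result back in.
            notes = refine(query, search(query))
            traces[i] += f"\n{BEGIN_R}{notes}{END_R}\n"
            still_active.append(i)
        active = still_active
    return traces


def fake_generate(batch: List[str]) -> List[str]:
    # Toy model: asks one question, then answers once a search result is present.
    return [
        " The answer is 25." if BEGIN_R in text
        else f" I need a fact. {BEGIN_Q}number of primes below 100{END_Q}"
        for text in batch
    ]


def fake_search(query: str) -> List[str]:
    return ["There are 25 primes below 100.", "Quicksort is a sorting algorithm."]


traces = batch_generate_and_search_sketch(["Q1:", "Q2:"], fake_generate, fake_search)
print(traces[0])
```

Tracking the indices of active sequences means finished sequences drop out of later batches, so each `generate` call only spends compute on sequences that still need work.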

Iterative reasoning

Reasoning is an iterative process that may require new searches and document integration after each generation step:

from search_o1 import iterate_reasoning

final_answer = iterate_reasoning(results, iterations=5)

- Number of iterations: Adjust the number of iterations to the complexity of the task; harder, multi-step questions may need more rounds of search and integration to reach an accurate answer.
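To see why the iteration budget matters, the toy below models a two-hop question in which each round either issues one search or produces the answer. The `FACTS` table, `toy_step`, and `iterate_reasoning_sketch` are hypothetical, with a dictionary lookup standing in for a real search engine; they illustrate the idea and are not Search-o1's `iterate_reasoning`.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical two-hop task: each hop needs one fact the toy model lacks.
FACTS: Dict[str, str] = {
    "primes below 50": "There are 15 primes below 50.",
    "primes between 50 and 100": "There are 10 primes between 50 and 100.",
}


def toy_step(known: List[str]) -> Tuple[Optional[str], Optional[str]]:
    """One generation round: returns (search_query, None) or (None, answer)."""
    for query, fact in FACTS.items():
        if fact not in known:
            return query, None  # knowledge gap: ask for the first missing fact
    return None, "15 + 10 = 25 primes between 1 and 100"


def iterate_reasoning_sketch(iterations: int) -> Tuple[Optional[str], int]:
    """Run at most `iterations` rounds; return (answer or None, rounds used)."""
    known: List[str] = []
    for round_no in range(1, iterations + 1):
        query, answer = toy_step(known)
        if answer is not None:
            return answer, round_no
        assert query is not None
        known.append(FACTS[query])  # a dictionary lookup stands in for search
    return None, iterations


print(iterate_reasoning_sketch(5))
print(iterate_reasoning_sketch(2))
```

With `iterations=5` the toy returns `('15 + 10 = 25 primes between 1 and 100', 3)`: two search rounds, then the answer. With `iterations=2` it returns `(None, 2)` because the budget runs out before it can answer, which is the failure mode a larger budget guards against.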

Application to practical problems

Search-o1 is particularly well suited to problems that require a lot of background knowledge, such as complex computations in scientific research or algorithm optimization in programming. Examples:

- Math problems: For a question such as "Solve a differential equation using Euler's method", the model can automatically search for information about Euler's method and apply it in its reasoning.

- Programming problems: For a question such as "How can the quicksort algorithm be optimized?", Search-o1 can retrieve suggested algorithmic improvements and reason over them.
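For reference, the forward Euler method named in the math example advances an ODE y' = f(t, y) with a fixed step h using y_next = y + h * f(t, y). The function below is a plain textbook implementation, shown only to illustrate the kind of knowledge the model would retrieve and apply; it is not part of Search-o1.

```python
import math
from typing import Callable, List, Tuple


def euler(
    f: Callable[[float, float], float],
    t0: float,
    y0: float,
    h: float,
    steps: int,
) -> List[Tuple[float, float]]:
    """Forward Euler for y' = f(t, y); returns every (t, y) point visited."""
    t, y = t0, y0
    points = [(t, y)]
    for _ in range(steps):
        y = y + h * f(t, y)  # follow the slope at the current point
        t = t + h
        points.append((t, y))
    return points


# y' = y with y(0) = 1 has the exact solution e^t; 10 steps of h = 0.1 reach t = 1.
approx = euler(lambda t, y: y, 0.0, 1.0, 0.1, 10)[-1][1]
print(approx, math.e)
```

Here each step multiplies y by 1.1, so the result is about 2.594 against e ≈ 2.718; the gap reflects the method's first-order error, which roughly halves when h is halved.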

In this way, users can apply Search-o1 to complex, knowledge-intensive tasks, grounding each reasoning step in the most current and relevant knowledge available.