How to Test LLM Prompts Effectively: A Complete Guide from Theory to Practice

I. Why prompts need testing:

- LLMs are highly sensitive to prompts: subtle changes in wording can produce significantly different outputs.
- Untested prompts may produce:
  - Misinformation
  - Irrelevant replies
  - Unnecessary API costs

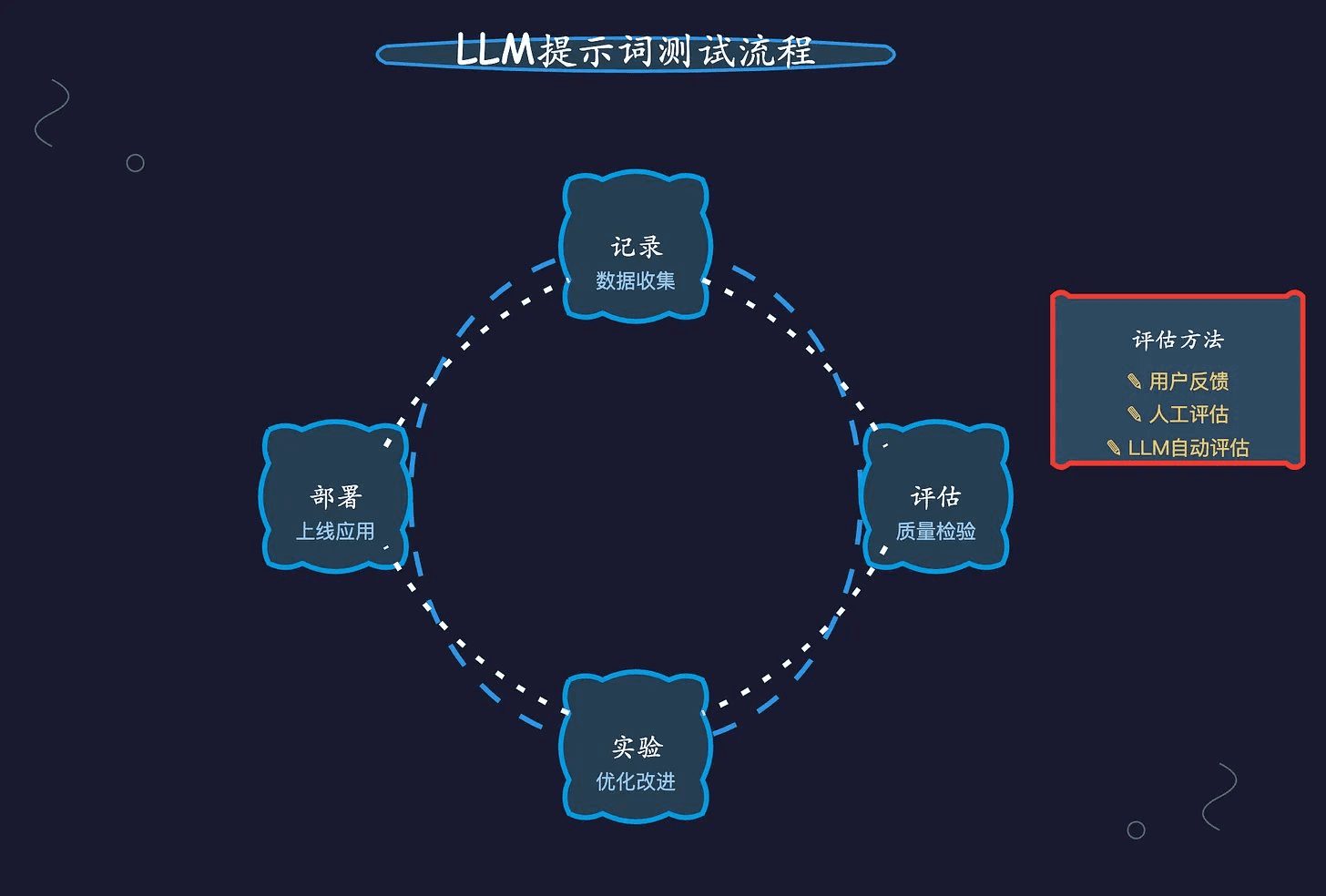

II. A systematic prompt optimization process:

- Preparation phase
  - Log LLM requests with an observability tool
  - Track key metrics: usage, latency, cost, time to first response, etc.
  - Monitor for anomalies: rising error rates, sudden jumps in API costs, declining user satisfaction
- Testing process
  - Create multiple prompt variants, using techniques such as chain-of-thought prompting and few-shot examples
  - Test with real data:
    - Golden datasets: carefully curated inputs paired with expected outputs
    - Sampled production data: more challenging, and more representative of real-world scenarios
  - Compare the results of the different versions
  - Deploy the best-performing version to production
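
The testing loop above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a production harness: `fake_model` is a hypothetical stand-in for a real LLM call, the golden dataset and prompt variants are invented for the example, and cost is approximated per character rather than per token. Each request is logged with its latency, estimated cost, and whether the output matched the expected answer; the variants are then compared on accuracy first and cost second.

```python
import time
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class RequestLog:
    variant: str
    latency_s: float
    cost_usd: float
    correct: bool


def fake_model(prompt: str) -> str:
    # Hypothetical stand-in for a real LLM call: answers verbosely
    # unless the prompt asks for a one-word answer.
    answer = "Paris" if "France" in prompt else "unknown"
    return answer if "one word" in prompt else f"The answer is {answer}."


def run_variant(name: str, template: str, golden: List[Dict[str, str]],
                model: Callable[[str], str],
                usd_per_char: float = 1e-6) -> List[RequestLog]:
    logs = []
    for case in golden:
        prompt = template.format(question=case["input"])
        start = time.perf_counter()
        output = model(prompt)
        latency = time.perf_counter() - start
        # Assumed pricing: a flat rate per character of prompt + output.
        cost = (len(prompt) + len(output)) * usd_per_char
        logs.append(RequestLog(name, latency, cost,
                               output.strip() == case["expected"]))
    return logs


def summarize(logs: List[RequestLog]) -> Dict[str, float]:
    n = len(logs)
    return {
        "accuracy": sum(log.correct for log in logs) / n,
        "avg_cost_usd": sum(log.cost_usd for log in logs) / n,
        "avg_latency_s": sum(log.latency_s for log in logs) / n,
    }


# Invented golden dataset: curated inputs with expected outputs.
golden = [
    {"input": "What is the capital of France?", "expected": "Paris"},
    {"input": "What is the capital of Atlantis?", "expected": "unknown"},
]
variants = {
    "baseline": "{question}",
    "concise": "Answer in one word. {question}",
}

results = {name: summarize(run_variant(name, tpl, golden, fake_model))
           for name, tpl in variants.items()}
# Prefer higher accuracy; break ties with lower average cost.
best = max(results, key=lambda n: (results[n]["accuracy"],
                                   -results[n]["avg_cost_usd"]))
print(best, results[best]["accuracy"])  # prints: concise 1.0
```

In practice `fake_model` would be replaced by a real client call, the logs would go to the observability tool rather than a list, and the golden set would be supplemented with sampled production data.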

III. In-depth analysis of three key evaluation methods:

- Real user feedback
  - Advantage: directly reflects how well the prompt works in real use
  - Characteristics: can be collected through explicit ratings or implicit behavioral signals
  - Limitations: takes time to accumulate, and feedback can be subjective
- Human evaluation
  - Use cases: subjective tasks that require nuanced judgment
  - Evaluation approaches:
    - Yes/no judgments
    - Scoring on a 0-10 scale
    - A/B comparison
  - Limitations: resource-intensive and hard to scale
- Automated evaluation with an LLM
  - Use cases:
    - Classification tasks
    - Structured output validation
    - Constraint checking
  - Key elements:
    - Quality-control the evaluation prompts themselves
    - Guide the evaluator with few-shot examples
    - Set the temperature to 0 to improve consistency
  - Strengths: scalable and efficient
  - Caveat: may inherit the evaluating model's biases

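
The key elements of LLM-based evaluation listed above can be illustrated with a small sketch of a judge prompt. It assembles the grading criteria and a few scored examples (few-shot guidance) into one prompt, builds a request payload with the temperature set to 0, and parses a 0-10 score from the judge's reply. The payload shape, the `Score:` reply format, and all helper names are assumptions for illustration; no model is actually called here.

```python
import re
from dataclasses import dataclass
from typing import Any, Dict, List, Optional


@dataclass
class GradedExample:
    response: str
    score: int
    reason: str


def build_judge_prompt(criteria: str, examples: List[GradedExample],
                       question: str, response: str) -> str:
    lines = [
        "You are grading an assistant's response.",
        f"Criteria: {criteria}",
        "Reply with 'Score: <0-10>' on the first line, then a one-sentence reason.",
        "",
    ]
    # Few-shot guidance: show the judge how earlier responses were scored.
    for i, ex in enumerate(examples, start=1):
        lines += [f"Example {i} response: {ex.response}",
                  f"Score: {ex.score}", f"Reason: {ex.reason}", ""]
    lines += [f"Question: {question}", f"Response to grade: {response}"]
    return "\n".join(lines)


def judge_request(prompt: str) -> Dict[str, Any]:
    # Hypothetical chat-style payload; temperature 0 reduces sampling
    # variation so repeated evaluations agree more closely.
    return {"messages": [{"role": "user", "content": prompt}],
            "temperature": 0}


SCORE_RE = re.compile(r"Score:\s*(\d+)")


def parse_score(reply: str) -> Optional[int]:
    # Return None for missing or out-of-range scores so malformed
    # judge replies can be flagged instead of silently counted.
    match = SCORE_RE.search(reply)
    if match is None:
        return None
    score = int(match.group(1))
    return score if 0 <= score <= 10 else None


examples = [
    GradedExample("Restart the router, then re-run setup.", 9, "Correct and actionable."),
    GradedExample("Networking is complicated.", 2, "Does not address the issue."),
]
prompt = build_judge_prompt("Accuracy and relevance to the user's question",
                            examples, "My Wi-Fi keeps dropping. What should I do?",
                            "Update the router firmware and check for interference.")
request = judge_request(prompt)
print(parse_score("Score: 8\nReason: Accurate but brief."))  # prints: 8
```

Counting how often `parse_score` returns `None` is one cheap way to quality-control the judge prompt itself: a high rate suggests the instructions or examples need revising.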

IV. Practical recommendations for an evaluation framework:

- Define the evaluation dimensions clearly:
  - Accuracy: whether the problem is solved correctly
  - Fluency: grammar and naturalness
  - Relevance: whether the response addresses the user's intent
  - Creativity: imagination and engagement
  - Consistency: alignment with previous outputs
- Tailor the evaluation strategy to the task type:
  - Technical support: focus on accuracy and expertise in solving the problem
  - Creative writing: focus on originality and brand tone
  - Structured tasks: emphasize correct formatting and data accuracy
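
One way to put these recommendations into practice is a weighted rubric per task type, sketched below. The dimension names follow the list above, but the task-type keys and the weights are illustrative assumptions that would need tuning for a real product; brand tone is approximated here by the consistency dimension. For structured tasks, a format check gates the score, so output that fails to parse earns zero regardless of the other dimensions.

```python
import json
import math
from typing import Dict, Iterable

# Hypothetical weights per task type; each row sums to 1.
RUBRICS: Dict[str, Dict[str, float]] = {
    "technical_support": {"accuracy": 0.5, "relevance": 0.3, "fluency": 0.2},
    "creative_writing": {"creativity": 0.5, "consistency": 0.3, "fluency": 0.2},
    "structured": {"accuracy": 0.7, "consistency": 0.3},
}


def is_valid_json_object(raw: str, required_keys: Iterable[str]) -> bool:
    # Format check for structured tasks: the output must parse as a
    # JSON object containing every required key.
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        return False
    return isinstance(data, dict) and set(required_keys).issubset(data)


def rubric_score(task_type: str, scores: Dict[str, float],
                 format_ok: bool = True) -> float:
    # Combine per-dimension scores (0-10) into one weighted score.
    weights = RUBRICS[task_type]
    missing = set(weights) - set(scores)
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    if task_type == "structured" and not format_ok:
        return 0.0
    return sum(w * scores[dim] for dim, w in weights.items())


# Sanity check: every rubric's weights sum to 1.
for name, weights in RUBRICS.items():
    assert math.isclose(sum(weights.values()), 1.0), name

support = rubric_score("technical_support",
                       {"accuracy": 10, "relevance": 10, "fluency": 5})
ok = is_valid_json_object('{"order_id": 42, "status": "shipped"}',
                          ["order_id", "status"])
structured = rubric_score("structured", {"accuracy": 9, "consistency": 8},
                          format_ok=is_valid_json_object("order 42 shipped",
                                                         ["order_id"]))
print(support, ok, structured)
```

The per-dimension scores could come from human raters or from an LLM judge; the rubric only fixes how they are combined for each task type.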

V. Key points for continuous optimization:

- Build a closed feedback loop
- Maintain a mindset of iterative experimentation
- Make data-driven decisions
- Balance quality improvements against resource investment
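
Data-driven decisions and the balance between gains and cost can be made concrete with a simple promotion gate, plus a regression check that closes the feedback loop. Both functions below are a sketch: the `Metrics` fields, thresholds, and example numbers are hypothetical and only show the shape of the decision, not recommended values. `should_promote` adopts a candidate prompt only if its quality gain justifies its extra cost, and `detect_regressions` compares a recent production window with a baseline to surface the anomalies mentioned under the preparation phase (rising errors, cost spikes, falling quality or satisfaction).

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Metrics:
    # Hypothetical metric bundle; all thresholds below are illustrative.
    quality: float           # e.g. mean judge score or user rating, 0-10
    cost_per_request: float  # USD
    error_rate: float        # fraction of failed requests, 0-1


def should_promote(current: Metrics, candidate: Metrics,
                   min_gain: float = 0.5,
                   max_cost_increase: float = 0.2) -> bool:
    # Promote only if quality improves enough, relative cost growth
    # stays within budget, and errors do not get worse.
    gain = candidate.quality - current.quality
    cost_growth = ((candidate.cost_per_request - current.cost_per_request)
                   / current.cost_per_request)
    return (gain >= min_gain and cost_growth <= max_cost_increase
            and candidate.error_rate <= current.error_rate)


def detect_regressions(baseline: Metrics, recent: Metrics,
                       max_error_jump: float = 0.02,
                       max_cost_spike: float = 0.5,
                       max_quality_drop: float = 0.5) -> List[str]:
    # Each alert is a signal to re-enter the testing process.
    alerts = []
    if recent.error_rate - baseline.error_rate > max_error_jump:
        alerts.append("error rate increased")
    if recent.cost_per_request > baseline.cost_per_request * (1 + max_cost_spike):
        alerts.append("cost spike")
    if baseline.quality - recent.quality > max_quality_drop:
        alerts.append("quality dropped")
    return alerts


current = Metrics(quality=7.0, cost_per_request=0.010, error_rate=0.01)
cheap_win = Metrics(quality=7.8, cost_per_request=0.011, error_rate=0.01)
pricey_win = Metrics(quality=7.8, cost_per_request=0.020, error_rate=0.01)
print(should_promote(current, cheap_win), should_promote(current, pricey_win))
print(detect_regressions(current, Metrics(6.0, 0.020, 0.05)))
```

Here the cheaper candidate is promoted while the one that doubles the per-request cost is rejected despite the same quality gain, which is the trade-off the last bullet describes.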

© Copyright notice

This article is copyrighted by AI Sharing Circle. Please do not reproduce it without permission.
