RAGLite: An efficient Retrieval-Augmented Generation (RAG) tool supporting multiple databases and language models.

General Introduction

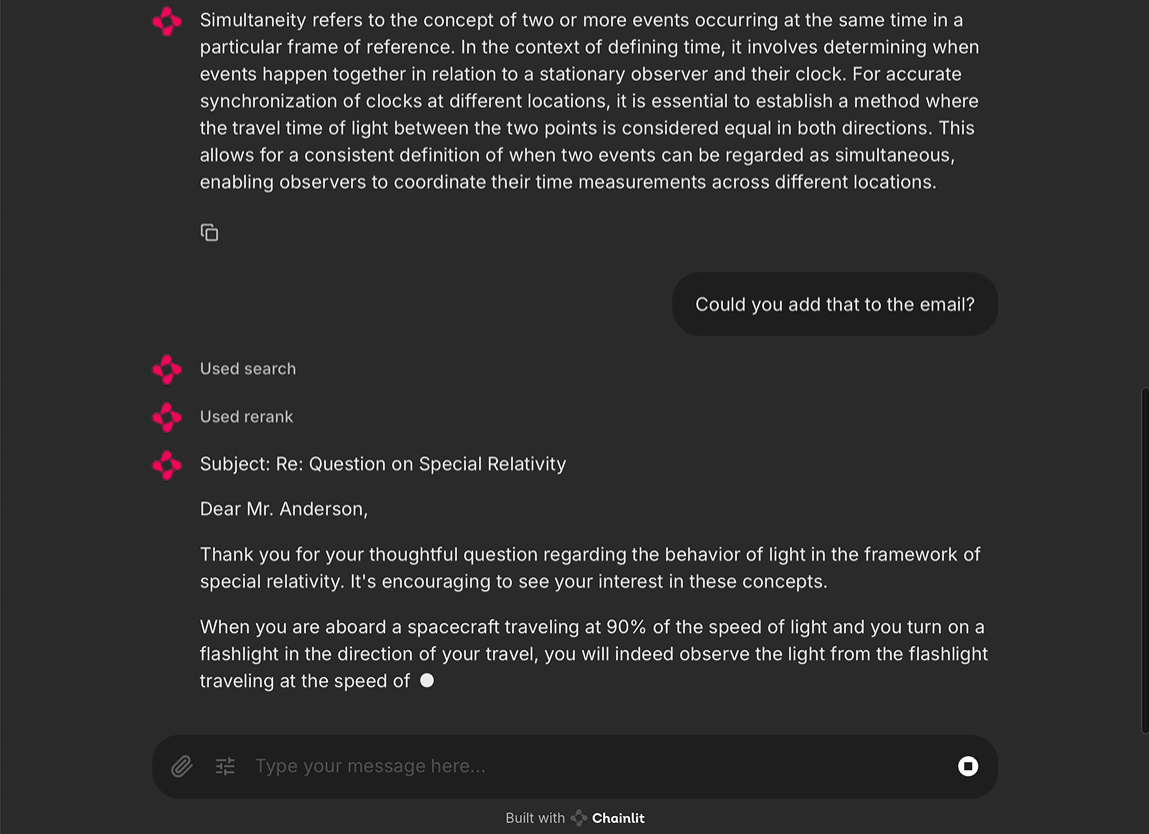

RAGLite is a Python toolkit for Retrieval-Augmented Generation (RAG) that works with PostgreSQL or SQLite databases. Its configuration is flexible, letting users choose among different language models and rerankers. RAGLite is lightweight and efficient, runs on a wide range of operating systems, and supports acceleration options such as Metal and CUDA.

Feature List

- Supports multiple language models, including local llama-cpp-python models

- Supports PostgreSQL and SQLite as keyword and vector search databases

- Provides multiple reranker options, including the multilingual FlashRank

- Lightweight dependencies, with no need for PyTorch or LangChain

- Supports PDF to Markdown conversion

- Multi-vector chunk embedding and contextual chunk headings

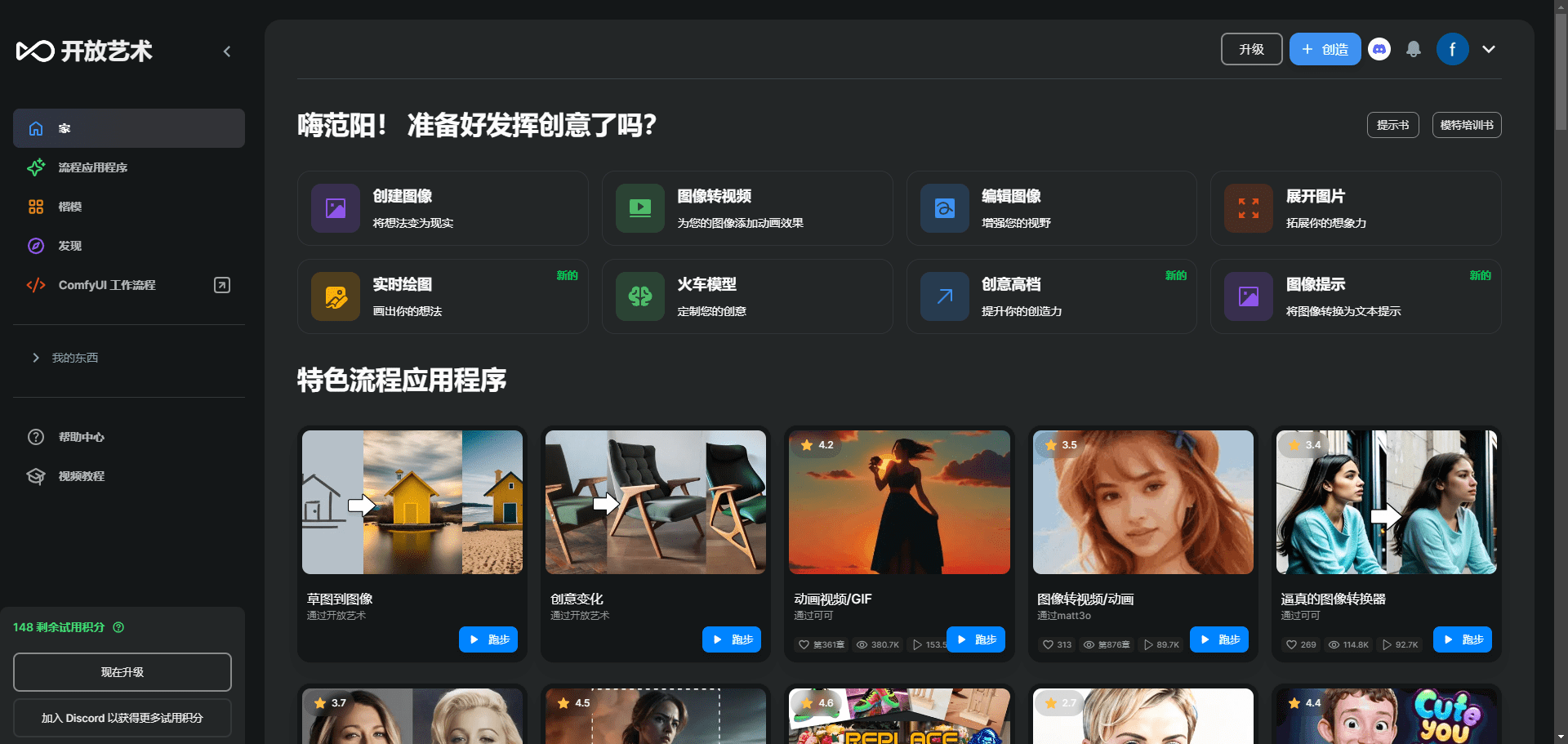

- Provides a customizable ChatGPT-like front-end with support for web, Slack, and Teams

- Supports document insertion and retrieval for multiple file types

- Provides tools for evaluating retrieval and generation performance

Usage Guide

Installation process

- Install spaCy's multilingual sentence model:

      pip install https://github.com/explosion/spacy-models/releases/download/xx_sent_ud_sm-3.7.0/xx_sent_ud_sm-3.7.0-py3-none-any.whl

- Install the accelerated llama-cpp-python precompiled binary (optional but recommended). Set each variable to the one listed value that matches your system:

      # Choose one ACCELERATOR: metal, cu121, cu122, cu123, or cu124
      # Choose one PLATFORM: macosx_11_0_arm64, linux_x86_64, or win_amd64
      LLAMA_CPP_PYTHON_VERSION=0.2.88
      PYTHON_VERSION=310
      ACCELERATOR=metal
      PLATFORM=macosx_11_0_arm64
      pip install "https://github.com/abetlen/llama-cpp-python/releases/download/v$LLAMA_CPP_PYTHON_VERSION-$ACCELERATOR/llama_cpp_python-$LLAMA_CPP_PYTHON_VERSION-cp$PYTHON_VERSION-cp$PYTHON_VERSION-$PLATFORM.whl"

- Install RAGLite:

      pip install raglite

- Install support for the customizable ChatGPT-like front-end:

      pip install "raglite[chainlit]"

- Install support for additional file types:

      pip install "raglite[pandoc]"

- Install evaluation support:

      pip install "raglite[ragas]"
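The wheel URL in the llama-cpp-python step is assembled from four variables. A minimal Python sketch of the same substitution may help when scripting the install. The function name `llama_cpp_wheel_url` is hypothetical, and the sketch does not check that a wheel actually exists for a given combination.

```python
def llama_cpp_wheel_url(version: str, python_version: str, accelerator: str, platform: str) -> str:
    """Fill in the release URL template used in the install step above."""
    base = "https://github.com/abetlen/llama-cpp-python/releases/download"
    wheel = f"llama_cpp_python-{version}-cp{python_version}-cp{python_version}-{platform}.whl"
    return f"{base}/v{version}-{accelerator}/{wheel}"


# Example: Python 3.10 on Apple Silicon with Metal acceleration.
url = llama_cpp_wheel_url("0.2.88", "310", "metal", "macosx_11_0_arm64")
print(url)
```

The printed URL can be passed straight to `pip install`.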

Guidelines for use

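- Configuring RAGLite:

- Configure a PostgreSQL or SQLite database and any supported language models:

      from raglite import RAGLiteConfig

      my_config = RAGLiteConfig(
          # Database connection string (PostgreSQL in this example)
          db_url="postgresql://my_username:my_password@my_host:5432/my_database",
          # Language model used to generate answers
          llm="gpt-4o-mini",
          # Embedding model used to embed documents and queries
          embedder="text-embedding-3-large",
      )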
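Hard-coding credentials in `db_url` is convenient for a demo but awkward to share. The sketch below reads the three settings from environment variables and falls back to a local SQLite file. The variable names (`RAGLITE_DB_URL`, `RAGLITE_LLM`, `RAGLITE_EMBEDDER`) and the helper `config_kwargs_from_env` are hypothetical and not part of RAGLite.

```python
import os


def config_kwargs_from_env(env=os.environ) -> dict:
    """Collect RAGLiteConfig keyword arguments from (hypothetical) environment variables."""
    return {
        # Fall back to a local SQLite file when no database URL is set.
        "db_url": env.get("RAGLITE_DB_URL", "sqlite:///raglite.sqlite"),
        "llm": env.get("RAGLITE_LLM", "gpt-4o-mini"),
        "embedder": env.get("RAGLITE_EMBEDDER", "text-embedding-3-large"),
    }


# Pass an explicit mapping here so the example does not depend on the real environment.
kwargs = config_kwargs_from_env({"RAGLITE_LLM": "gpt-4o-mini"})
print(kwargs)
```

With RAGLite installed, the resulting dictionary could be passed on as `RAGLiteConfig(**kwargs)`.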

- Inserting documents:

- Insert a PDF document, converting and embedding it in the process:

      from pathlib import Path
      from raglite import insert_document

      insert_document(Path("On the Measure of Intelligence.pdf"), config=my_config)
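To insert a whole folder rather than a single file, the PDF paths have to be collected first. The sketch below covers only that collection step using the standard library. The helper `find_pdfs` is hypothetical, and the demo runs on a temporary directory so that it works without RAGLite installed.

```python
from pathlib import Path
import tempfile


def find_pdfs(folder: Path) -> list[Path]:
    """Return all PDF files under `folder`, sorted for a reproducible insert order."""
    return sorted(p for p in folder.rglob("*") if p.is_file() and p.suffix.lower() == ".pdf")


# Demonstrate on a throwaway directory with two PDFs and one unrelated file.
with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    for name in ("b.pdf", "a.PDF", "notes.txt"):
        (root / name).touch()
    found = [p.name for p in find_pdfs(root)]

print(found)
```

Each returned path could then be passed to `insert_document(path, config=my_config)` in a loop.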

- Retrieval and generation:

- Use vector search, keyword search, or hybrid search for your query:

      from raglite import hybrid_search, keyword_search, vector_search

      prompt = "How is intelligence measured?"
      # Hybrid search returns the IDs of the best-matching chunks.
      chunk_ids_hybrid, _ = hybrid_search(prompt, num_results=20, config=my_config)
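Hybrid search has to merge a keyword ranking and a vector ranking into one list. Reciprocal Rank Fusion (RRF) is a common way to do that. The sketch below illustrates the idea on plain lists of chunk IDs; it is not taken from RAGLite's source, and the function name and the constant `k = 60` are assumptions.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Merge several ranked ID lists; each list adds 1 / (k + rank) to an ID's score."""
    scores: dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, chunk_id in enumerate(ranking, start=1):
            scores[chunk_id] += 1.0 / (k + rank)
    # Highest fused score first; ties are broken by ID for determinism.
    return sorted(scores, key=lambda cid: (-scores[cid], cid))


keyword_hits = ["c1", "c2", "c3"]  # hypothetical keyword search results
vector_hits = ["c3", "c1", "c4"]   # hypothetical vector search results
fused = reciprocal_rank_fusion([keyword_hits, vector_hits])
print(fused)
```

Chunks that appear near the top of both lists end up first, while a chunk found by only one method still makes it into the fused list.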

- Reranking and answering questions:

- Rerank the search results and generate an answer:

      from raglite import rerank_chunks, rag

      # Rerank the hybrid search results against the prompt.
      chunks_reranked = rerank_chunks(prompt, chunk_ids_hybrid, config=my_config)

      # Generate an answer from the reranked chunks and print it as it streams.
      stream = rag(prompt, search=chunks_reranked, config=my_config)
      for update in stream:
          print(update, end="")
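The loop above prints the streamed answer but does not keep it. If the full text is needed afterwards, for logging or display, the updates can be collected while printing. The sketch below uses a stand-in generator, `fake_stream`, in place of a real `rag(...)` call; both function names are hypothetical.

```python
from typing import Iterable, Iterator


def fake_stream() -> Iterator[str]:
    """Stand-in for a streaming generator; yields an answer piece by piece."""
    yield from ["Hello", ", ", "world"]


def print_and_collect(stream: Iterable[str]) -> str:
    """Print each update as it arrives and return the full text at the end."""
    parts = []
    for update in stream:
        print(update, end="", flush=True)
        parts.append(update)
    print()  # finish the line once the stream is exhausted
    return "".join(parts)


answer = print_and_collect(fake_stream())
```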

- Evaluating retrieval and generation:

- Use Ragas to evaluate retrieval and generation performance:

      from raglite import answer_evals, evaluate, insert_evals

      # Generate evals and store them in the database.
      insert_evals(num_evals=100, config=my_config)
      # Answer a sample of the stored evals.
      answered_evals_df = answer_evals(num_evals=10, config=my_config)
      # Score the answered evals.
      evaluation_df = evaluate(answered_evals_df, config=my_config)
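To give a feel for what a retrieval score measures, the sketch below computes a simple hit rate: the fraction of questions for which the known relevant chunk appears among the retrieved chunks. This is an illustrative stand-in and not one of the Ragas metrics; the function name and the sample data are hypothetical.

```python
def hit_rate(retrieved: list[list[str]], relevant: list[str]) -> float:
    """Fraction of questions whose relevant chunk ID appears among the retrieved IDs."""
    if not relevant:
        return 0.0
    hits = sum(1 for ids, target in zip(retrieved, relevant) if target in ids)
    return hits / len(relevant)


# One list of retrieved chunk IDs per question, and the known relevant ID for each.
retrieved_ids = [["c1", "c7"], ["c2", "c9"], ["c4", "c5"]]
relevant_ids = ["c7", "c3", "c4"]
score = hit_rate(retrieved_ids, relevant_ids)
print(round(score, 3))
```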

- Deploying a ChatGPT-like front-end:

- Deploy a customizable ChatGPT-like front-end:

      raglite chainlit \
          --db_url sqlite:///raglite.sqlite \
          --llm "llama-cpp-python/bartowski/Llama-3.2-3B-Instruct-GGUF/*Q4_K_M.gguf@4096" \
          --embedder "llama-cpp-python/lm-kit/bge-m3-gguf/*F16.gguf"
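The `--llm` and `--embedder` values above pack several pieces into one string. The sketch below splits such a string under the assumption that it follows the pattern `llama-cpp-python/<repo owner>/<repo name>/<filename pattern>@<context size>`, with the `@...` part optional. This reading is inferred from the example command, and `parse_model_id` is a hypothetical helper, not a RAGLite function.

```python
from typing import NamedTuple, Optional


class ModelId(NamedTuple):
    provider: str
    repo_id: str
    filename: str
    n_ctx: Optional[int]


def parse_model_id(model: str) -> ModelId:
    """Split 'provider/owner/repo/filename[@n_ctx]' into its parts."""
    body, _, ctx = model.partition("@")
    provider, owner, repo, filename = body.split("/", 3)
    return ModelId(provider, f"{owner}/{repo}", filename, int(ctx) if ctx else None)


llm = parse_model_id("llama-cpp-python/bartowski/Llama-3.2-3B-Instruct-GGUF/*Q4_K_M.gguf@4096")
embedder = parse_model_id("llama-cpp-python/lm-kit/bge-m3-gguf/*F16.gguf")
print(llm)
print(embedder)
```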

© Copyright notes

Copyright of this article belongs to AI Sharing Circle. Please do not reproduce it without permission.
