PDL: A Declarative Prompt Programming Language

Abstract

Large Language Models (LLMs) have generated a lot of interest worldwide and have made many previously difficult AI applications possible. LLMs are controlled via highly expressive textual prompts and return textual answers. However, this unstructured text as input and output makes LLM-based applications brittle. This has motivated the rise of prompting frameworks, which mediate the interaction between LLMs and the external world. However, existing prompting frameworks either have a high learning curve or take control over the exact prompts away from the developer. To resolve this dilemma, this paper introduces the Prompt Declaration Language (PDL). PDL is a simple, declarative, data-oriented language based on YAML that puts prompts at the forefront. PDL works well with many LLM platforms and LLMs. It supports writing interactive applications that call LLMs and tools, and it makes common use cases such as chatbots, RAG, and agents easy to implement. We hope PDL will make prompt programming simpler, less brittle, and more enjoyable.

1. Introduction

Large Language Models (LLMs) have made great progress, demonstrating the ability to perform a wide variety of useful tasks. Because LLMs are controlled through natural-language prompts, prompt engineering has become an ad hoc way to improve their accuracy (White et al. 2023). Prompting patterns can unlock further capabilities. Examples include in-context learning (Brown et al. 2020), chains of multiple LLM calls (Chase et al. 2022), retrieval-augmented generation (RAG) (Lewis et al. 2020), tool use (Schick et al. 2023), program-aided language models (PAL) (Gao et al. 2023), and agents (Yao et al. 2023). However, despite their power, LLMs remain brittle: they sometimes hallucinate, or even fail to adhere to the expected syntax and types.

Prompting frameworks (Liu et al. 2023) make it easier for developers to use LLMs and the associated prompting patterns while reducing their brittleness. Some frameworks, such as LangChain (Chase et al. 2022) and AutoGen (Wu et al. 2023), do so by providing dedicated features for popular patterns such as RAG or agents. However, such features can take away the user's control over the underlying prompts and force users to learn many complex framework features. In contrast, low-level prompting frameworks such as Guidance (Microsoft 2023) and LMQL (Beurer-Kellner et al. 2023) provide more control through syntax and types. However, they require users to program in imperative languages such as Python or TypeScript. At the other end of the spectrum, frameworks such as DSPy (Khattab et al. 2023) and Vieira (Li et al. 2024) avoid handwritten prompts altogether by generating prompts automatically. Unfortunately, this takes even more control away from the developer. The question thus becomes how to make LLM programming more robust, keep the developer in the driver's seat, and still keep things simple.

To address this problem, we draw on time-tested ideas from programming-language design. The principle of orthogonality advocates a small, simple set of features that compose to achieve powerful functionality (van Wijngaarden et al. 1977). In this context, orthogonality means avoiding special cases as much as possible. For prompting frameworks, orthogonality is a way to avoid dedicated features for specific patterns. Next, if the language enforces structure through types and roles (Hugging Face 2023), developers will struggle less with brittleness.

A tension remains: on the one hand, we want developers to control the exact prompts; on the other hand, we want a simple declarative language. To reconcile the two, we chose a data-oriented language that deliberately blurs the line between programs (e.g., for chains and tools) and data (for prompts). This was inspired by the old idea of code-as-data (McCarthy 1960) and by seminal work on tierless programming (Cooper et al. 2006).

This paper introduces the Prompt Declaration Language (PDL), an orthogonal, typed, data-oriented language. Unlike other prompting languages, which are embedded in imperative languages, PDL is based on YAML (Ben-Kiki et al. 2004). YAML is a data serialization format that is both human-readable (through a simple syntax for unstructured strings) and structured (JSON-compatible). Variables in PDL hold JSON values and can optionally be typed with JSON Schema (Pezoa et al. 2016). PDL is currently implemented by an interpreter that performs dynamic type checking.

One advantage of representing programs as data is that program transformations, e.g., for optimization, become easy (Mernik et al. 2005). Representing programs in a data format may even make it easier for PDL programs to generate PDL programs via an LLM, similar to PAL (Gao et al. 2023).

A PDL program consists of blocks (YAML objects), each of which contributes data to the prompt context. This mental model suits prompting techniques such as chatbots or agents well: program execution implicitly builds up the conversation or trajectory, without explicit plumbing. That context then becomes the input for the next LLM call. PDL supports locally hosted LLMs as well as LLMs hosted by multiple vendors, including, but not limited to, the open-source Granite models on IBM watsonx and Replicate (Abdelaziz et al. 2024; Granite Team, IBM 2024). PDL provides loops and conditionals as control structures, as well as functions and file inclusion for modularity. PDL uses Jinja2 expressions (Ronacher 2008) to template not just prompts but entire programs.

This paper gives an overview of PDL through an introductory example (Section 2), followed by a detailed description of the language (Section 3). It then describes the tools for running and editing PDL programs (Section 4) and presents case studies that demonstrate further applications of PDL (Section 5). Finally, the paper discusses related work (Section 6) and concludes (Section 7). PDL is open source and available at https://github.com/IBM/prompt-declaration-language. Overall, PDL is a simple yet powerful new language for LLM prompt programming.

2. Overview

This section gives an overview of PDL's features through a chatbot example. A PDL program executes a sequence of blocks, and each block contributes the data it produces to the context. Different kinds of blocks produce data in different ways:

- model calls;
- reading data from stdin or files;
- creating JSON data directly;
- executing code.

In addition, control blocks (if-then-else, for, and repeat) let PDL users express rich data pipelines and AI applications.

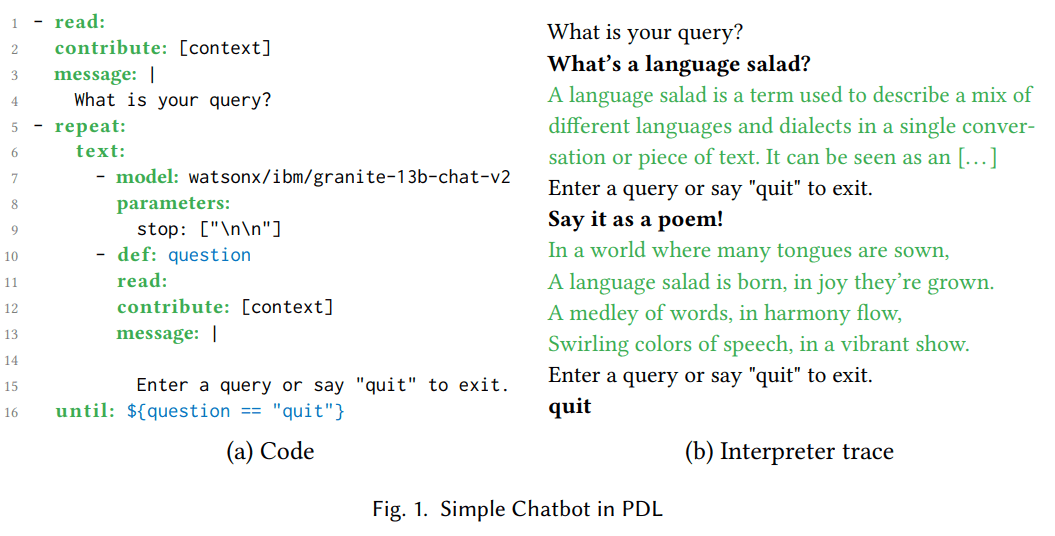

Figure 1(a) shows the PDL code for a simple chatbot. The read: block on lines 1-4 prints a message asking the user to enter a query and reads the query from stdin. Figure 1(b) shows a trace of executing the same program. For example, the user might ask "What is a language salad?". The 'contribute: [context]' clause avoids repetition: it puts the user's response into the context, but not into the result (which is what gets printed to stdout).

The repeat:until: block on lines 5-16 contains a nested text: block, which in turn contains a sequence of two nested blocks. A text: block converts the results of its nested blocks to strings and concatenates them.

The model: block on lines 7-9 calls an LLM, using the context accumulated so far as the prompt. In the first loop iteration, the context consists of just two lines: "What is your query?" and "What is a language salad?". The model parameter 'stop: ["\n\n"]' makes the LLM stop generating tokens once it has produced two consecutive newlines. The interpreter prints the LLM output in green; Figure 1(b) shows that in this example, the LLM generated "A language salad is [...]".

The read: block on lines 10-15 prints its message using YAML's multi-line string syntax, which starts with a vertical bar (|). This example shows how PDL puts prompts front and center, keeping them readable and giving the developer precise control. The interpreter trace on the right shows that the user typed "Say it as a poem!". The code on the left defines this input as the variable question on line 10 and contributes it to the context on line 12.

The until: clause on line 16 specifies the Jinja2 expression '${ question == "quit" }' as the loop's termination condition. PDL embeds Jinja2 expressions with the '${...}' syntax instead of Jinja's usual '{{...}}', because the latter conflicts with YAML's special characters (curly braces).

In the second loop iteration, the context contains the effects of the first iteration. Thus, the second execution of the model: block sees the output of the first execution and can rephrase it as a poem, "In a world where many tongues [...]", as shown in Figure 1(b). Eventually, during the second execution of the read: block on lines 10-15, the user types "quit", which terminates the loop. Now that we have seen some common PDL blocks (read:, repeat:, text:, and model:) in action, we can move on to Section 3, which describes the remaining blocks and language features.
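The following Python sketch gives a rough mental model of how the chatbot in Figure 1 accumulates its context. It is not the PDL interpreter. `fake_model` is a hypothetical stand-in for the LLM call, and the list of scripted inputs stands in for stdin. Following the description of the first iteration above, the sketch assumes that each read: message is appended to the context as well.

```python
from typing import Callable


def run_chatbot(user_inputs: list[str], model: Callable[[list[str]], str]) -> list[str]:
    """Mimic Figure 1: every block appends its data to one shared context list."""
    inputs = iter(user_inputs)
    context: list[str] = []
    # Lines 1-4: the message and the first query both go into the context.
    context.append("What is your query?")
    context.append(next(inputs))
    while True:  # repeat: ... until:
        # Lines 7-9: the model sees the whole accumulated context as its prompt.
        context.append(model(context))
        # Lines 10-15: read the next query into the variable `question`.
        context.append('Enter a query or say "quit" to exit.')
        question = next(inputs)
        context.append(question)
        # Line 16: until: ${ question == "quit" }
        if question == "quit":
            return context


def fake_model(prompt: list[str]) -> str:
    # Hypothetical LLM stand-in: reports how much context it was given.
    return f"answer based on {len(prompt)} context entries"


trace = run_chatbot(["What is a language salad?", "Say it as a poem!", "quit"], fake_model)
print(trace[2])  # answer based on 2 context entries
print(trace[5])  # answer based on 5 context entries
```

The second model call sees five entries, including the first answer. This is why, in Figure 1, the model can rephrase its previous answer as a poem.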

(a) Code

    - read:
      contribute: [context]
      message: |
        What is your query?
    - repeat:
        text:
        - model: watsonx/ibm/granite-13b-chat-v2
          parameters:
            stop: ["\n\n"]
        - def: question
          read:
          contribute: [context]
          message: |

            Enter a query or say "quit" to exit.
      until: ${ question == "quit" }

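(b) Interpreter trace

What is your query?

What is a language salad?

A language salad is a term used to describe the mixing of different languages and dialects in a single conversation or text. It can be seen as [...]

Enter a query or say "quit" to exit.

Say it as a poem!

In a world where many tongues are spoken,

A language salad is born and grows in joy.

Words intertwine, flowing in harmony,

A colorful medley of languages, bursting with life.

Enter a query or say "quit" to exit.

quit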

Figure 1. Simple chatbot in PDL

3. Language

Figure 2. PDL Quick Reference

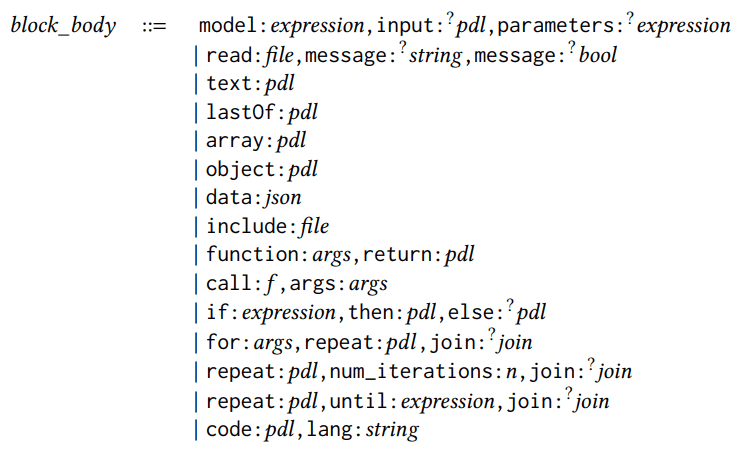

PDL is a language embedded in YAML: every PDL program is a valid YAML document that conforms to the PDL schema. Figure 2 is a quick reference for PDL, which this section explains using grammar rules. A program is either a block or a list of blocks, where a block is either an expression or a structured block:

    pdl   ::= block | [block, ..., block]
    block ::= expression | structured_block

All grammar rules in this section use YAML's flow-style syntax (e.g., [block, ..., block]). The same PDL code can also be written in YAML's block-style syntax, for example:

    - block
    ...
    - block

Each block contains a block body whose keyword indicates the kind of block (e.g., model or read). There are 15 kinds of block bodies (optional fields are marked with a question mark):

    block_body ::=
        model: expression, input:? pdl, parameters:? expression
      | read: file, message:? string, multiline:? bool
      | text: pdl
      | lastOf: pdl
      | array: pdl
      | object: pdl
      | data: json
      | include: file
      | function: args, return: pdl
      | call: f, args: args
      | if: expression, then: pdl, else:? pdl
      | for: args, repeat: pdl, join:? join
      | repeat: pdl, num_iterations: n, join:? join
      | repeat: pdl, until: expression, join:? join
      | code: pdl, lang: string

We have already seen model: and read: blocks in the previous section. A model: block calls an LLM. The prompt is taken from the current context unless the optional input: field is specified. The optional parameters: field configures the model's inference behavior. A read: block reads input from a file, or from stdin if no file name is specified. The optional message: field displays a message to the user, and the optional multiline: field determines whether reading stops at a newline.

There are five kinds of blocks for creating data: text:, lastOf:, array:, object:, and data:. Figure 2 shows each of them with a simple example. A list of blocks without a keyword behaves like lastOf:. The object: and data: blocks differ in one respect: inside a data: block, the PDL interpreter does not interpret PDL keywords and instead treats them as ordinary JSON fields.
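The following toy evaluator illustrates these five block kinds. It works over plain Python dicts and lists that stand in for parsed YAML. It makes simplifying assumptions (no model calls, variables, context, or expressions) and is not PDL's implementation. Its main purpose is to make the difference between object: and data: visible.

```python
import json
from typing import Any


def to_text(value: Any) -> str:
    # Strings are used as-is; other JSON values are serialized.
    return value if isinstance(value, str) else json.dumps(value)


def evaluate(block: Any) -> Any:
    """Evaluate the data-creating blocks (no model calls, variables, or context)."""
    if isinstance(block, list):  # a keyword-less list behaves like lastOf:
        return [evaluate(b) for b in block][-1]
    if isinstance(block, dict):
        if "data" in block:  # literal JSON: PDL keywords inside are NOT interpreted
            return block["data"]
        if "text" in block:  # concatenate nested results as strings
            return "".join(to_text(evaluate(b)) for b in block["text"])
        if "lastOf" in block:  # evaluate all, keep the last result
            return [evaluate(b) for b in block["lastOf"]][-1]
        if "array" in block:  # keep every result, in order
            return [evaluate(b) for b in block["array"]]
        if "object" in block:  # field values ARE interpreted as nested blocks
            return {k: evaluate(v) for k, v in block["object"].items()}
    return block  # strings, numbers, and booleans evaluate to themselves


print(evaluate({"text": ["n = ", {"array": [1, 2]}]}))  # n = [1, 2]
# -> {'greeting': 'Hello, world'}
print(evaluate({"object": {"greeting": {"text": ["Hello", ", world"]}}}))
# -> {'greeting': {'text': ['Hello', ', world']}}
print(evaluate({"data": {"greeting": {"text": ["Hello", ", world"]}}}))
```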

For modularity, PDL supports include: blocks and functions. An include: block opens the PDL program at the given relative path and adds its output at the location where the include: appears. The syntax for function arguments is:

    args ::= {x: expression, ..., x: expression}

Each x: expression maps a parameter name to a type specification (in a function: definition) or to a value (in a call:). The return: keyword provides the function body, which may contain nested blocks; Figure 2 shows an example whose body is a simple Jinja2 expression. The optional pdl_context: keyword resets the context during a call, e.g., to the empty context [].
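Below is a Python sketch of how function: and call: pair up. It rests on two assumptions: a function body can be modeled as a Python callable, and argument types are checked dynamically at call time. The second assumption is in the spirit of PDL's dynamic type checking, but the details here are illustrative. `define_function`, `call_function`, and the small `TYPES` table are hypothetical names.

```python
from typing import Any, Callable

# Hypothetical subset of shorthand type names, mapped to Python classes.
TYPES: dict[str, type] = {"str": str, "int": int, "float": float, "bool": bool}


def define_function(params: dict[str, str], body: Callable[..., Any]) -> dict[str, Any]:
    # function: maps parameter names to type names; return: holds the body.
    return {"function": params, "return": body}


def call_function(fn: dict[str, Any], args: dict[str, Any]) -> Any:
    # call: maps the same parameter names to values, checked before the body runs.
    params: dict[str, str] = fn["function"]
    if set(args) != set(params):
        raise TypeError(f"expected arguments {sorted(params)}, got {sorted(args)}")
    for name, type_name in params.items():
        expected = TYPES[type_name]
        value = args[name]
        # bool is a subclass of int in Python, so reject it unless bool is expected.
        if (isinstance(value, bool) and expected is not bool) or not isinstance(value, expected):
            raise TypeError(f"{name}: expected {type_name}, got {type(value).__name__}")
    return fn["return"](**args)


greet = define_function(
    {"name": "str", "times": "int"},
    lambda name, times: " ".join([f"Hello {name}!"] * times),
)
print(call_function(greet, {"name": "Ada", "times": 2}))  # Hello Ada! Hello Ada!
```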

There are three kinds of control blocks: if:, for:, and the various repeat: forms. They can contain nested blocks or simple expressions. If they contain a list of blocks, the list behaves like lastOf: by default. When lastOf: behavior is not wanted, a common approach is to wrap the loop body in a text: block. Another is to combine the results of the loop iterations using the join: keyword:

    join ::= as:? (text | array | lastOf), with:? string
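Here is a small Python sketch of the three join: variants, combining a list of per-iteration results. The trailing underscores in `as_` and `with_` only avoid Python keywords. Two behaviors are assumptions of this sketch rather than documented PDL behavior: the empty default separator, and returning None for an empty lastOf:.

```python
import json
from typing import Any


def join_iterations(results: list[Any], as_: str, with_: str = "") -> Any:
    """Combine loop-iteration results as described by join: (as:, with:)."""
    if as_ == "text":
        # Render each result as a string and put the with: separator between them.
        return with_.join(r if isinstance(r, str) else json.dumps(r) for r in results)
    if as_ == "array":
        return list(results)
    if as_ == "lastOf":
        return results[-1] if results else None
    raise ValueError(f"unsupported join kind: {as_!r}")


# For example, the results of three iterations of a for: loop.
rows = ["Q: a", "Q: b", "Q: c"]
print(join_iterations(rows, "text", "\n"))
```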

The 15 block bodies above can be combined with zero or more optional keywords that apply to any block:

    structured_block ::= { block_body,
                           description:? string,
                           def:? x,
                           defs:? defs,
                           role:? string,
                           contribute:? contribute,
                           parser:? parser,
                           spec:? type }

description: is a special comment. def: assigns the result of a block to a variable; line 10 of Figure 1 shows an example. In contrast, defs: creates multiple variable definitions, each with its own name x and a value given by a nested PDL program:

    defs ::= {x: pdl, ..., x: pdl}

role: assigns a role, such as 'user', 'assistant', or 'system', to the data produced by a block. Following common practice in modern chat APIs, PDL calls chat models by passing a sequence of {content: str, role: str} pairs as the prompt rather than plain text. The model API then applies the model-specific chat template and flattens the sequence by inserting the appropriate control tokens. This gives PDL programs some independence from the specific model. If a block does not specify role: explicitly, model blocks default to 'assistant' and all other blocks default to 'user'. Nested blocks have the same role as their enclosing block. In future work, we also plan to use roles to implement a permission-based security mechanism.
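The Python sketch below shows how block outputs could be turned into such a {content, role} sequence using the default roles described above. The tuple representation and the function names are hypothetical, and the sketch ignores nesting. As in the text, applying the model's chat template is left to the model API.

```python
from typing import Optional, TypedDict


class Message(TypedDict):
    role: str
    content: str


def block_role(kind: str, explicit: Optional[str] = None) -> str:
    # An explicit role: wins; otherwise model blocks default to "assistant", others to "user".
    if explicit is not None:
        return explicit
    return "assistant" if kind == "model" else "user"


def to_messages(outputs: list[tuple[str, str, Optional[str]]]) -> list[Message]:
    """Turn (block kind, output, role) triples into the chat-API message sequence."""
    return [{"role": block_role(kind, role), "content": text} for kind, text, role in outputs]


messages = to_messages([
    ("text", "You are a concise assistant.", "system"),
    ("read", "What is a language salad?", None),
    ("model", "A mix of languages in one text.", None),
])
print([m["role"] for m in messages])  # ['system', 'user', 'assistant']
```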

The contribute: keyword specifies a (possibly empty) subset of two destinations, 'result' and 'context'. By default, every block contributes both to its own result and to the context used for subsequent LLM calls. Line 2 of Figure 1 shows an example that restricts a block's contribution to the context only, to keep the output uncluttered.
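In Python terms, contribute: amounts to a per-block routing decision, as the sketch below shows. It assumes that each block's output has already been computed and stored under a hypothetical `output` key. Only the default and the two destination names come from the text.

```python
from typing import Any


def route(blocks: list[dict[str, Any]]) -> tuple[list[str], list[str]]:
    """Send each block's output to the result, the context, both, or neither."""
    result: list[str] = []
    context: list[str] = []
    for block in blocks:
        # By default a block contributes to both destinations.
        targets = block.get("contribute", ["result", "context"])
        if "result" in targets:
            result.append(block["output"])
        if "context" in targets:
            context.append(block["output"])
    return result, context


result, context = route([
    {"output": "What is your query?\n", "contribute": ["context"]},  # like Figure 1, line 2
    {"output": "A language salad is ..."},                            # default: both
    {"output": "debug info", "contribute": []},                       # neither
])
print(result)   # ['A language salad is ...']
print(context)  # ['What is your query?\n', 'A language salad is ...']
```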

The parser: keyword lets a block that normally produces only a flat string (e.g., an LLM call) produce structured data. Supported parsers include json, yaml, regex, and jsonl. The spec: keyword specifies a type. PDL types are a subset of JSON Schema (Pezoa et al. 2016), and Figure 2 briefly shows the common shorthand syntax. For example, the type '{questions: [str], answers: [str]}' denotes an object with two fields, questions and answers, each of which holds an array of strings. Section 5 shows how parser: and spec: work together. Future work will also use these keywords for constrained decoding (Scholak et al. 2021).
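The sketch below illustrates how parser: and spec: fit together. It expands the shorthand type syntax into a JSON Schema dict, then checks a JSON-parsed model output against it. The scope is narrow: it covers only base types, [T], and {field: T}. It writes the shorthand types as Python strings (e.g., ["str"]), and it assumes every listed field is required. A real JSON Schema validator covers much more.

```python
import json
from typing import Any

# Shorthand base types and their JSON Schema names (a subset, for illustration).
BASE = {"str": "string", "int": "integer", "float": "number", "bool": "boolean"}


def to_json_schema(spec: Any) -> dict[str, Any]:
    """Expand shorthand such as {questions: [str]} into a JSON Schema dict."""
    if isinstance(spec, str):
        return {"type": BASE[spec]}
    if isinstance(spec, list):  # [T] means "array of T"
        (item,) = spec
        return {"type": "array", "items": to_json_schema(item)}
    if isinstance(spec, dict):  # {f: T, ...} means "object with these fields"
        return {
            "type": "object",
            "properties": {k: to_json_schema(v) for k, v in spec.items()},
            "required": list(spec),  # assumption: every listed field is required
        }
    raise ValueError(f"unsupported spec: {spec!r}")


def conforms(value: Any, schema: dict[str, Any]) -> bool:
    """Check a value against the small schemas produced above (not full JSON Schema)."""
    kind = schema["type"]
    if kind == "object":
        return isinstance(value, dict) and all(
            k in value and conforms(value[k], s) for k, s in schema["properties"].items()
        )
    if kind == "array":
        return isinstance(value, list) and all(conforms(v, schema["items"]) for v in value)
    if kind == "boolean":
        return isinstance(value, bool)
    if kind == "integer":
        return isinstance(value, int) and not isinstance(value, bool)
    if kind == "number":
        return isinstance(value, (int, float)) and not isinstance(value, bool)
    return isinstance(value, str)


def parse_and_check(llm_output: str, spec: Any) -> Any:
    # parser: json turns the model's string into data; spec: then checks its type.
    value = json.loads(llm_output)
    if not conforms(value, to_json_schema(spec)):
        raise TypeError(f"output does not match spec {spec!r}: {value!r}")
    return value


qa_spec = {"questions": ["str"], "answers": ["str"]}
print(parse_and_check('{"questions": ["Why?"], "answers": ["Because."]}', qa_spec))
```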

An atomic block is an expression:

    expression ::= bool | number | string | ${jinja_expression} | string_expression

Expressions can be basic values, Jinja2 expressions (Ronacher 2008), or strings containing Jinja2 expressions. Jinja2 is a convenient way to specify prompt templates, in which some parts of the prompt are hard-coded and other parts are filled in by expressions. PDL extends this use of Jinja2: developers can template not just individual prompts, but entire chains of model calls and other blocks. We refer readers to the Jinja2 documentation for the full list of possible expressions; Figure 2 shows some examples. PDL uses only Jinja2 expressions. It does not use Jinja2 statements such as {% if .. %} or {% for .. %}, because those overlap with PDL's if: and for: blocks.
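The sketch below illustrates the two expression forms in the grammar. A string that is exactly one ${...} keeps its value's JSON type; this is what lets a for: loop iterate over ${retrieved} in Figure 3. A string with embedded expressions is instead rendered as text. The sketch supports only dotted variable lookups, a tiny subset of Jinja2. In practice, one would hand the expression to Jinja2 itself.

```python
import json
import re
from typing import Any

# ${ ... } with no nested braces; real Jinja2 expressions can be richer than this.
EXPR = re.compile(r"\$\{\s*([^}]*?)\s*\}")


def lookup(path: str, env: dict[str, Any]) -> Any:
    # Resolve a dotted name such as "few_shot_sample.query" (no operators or filters).
    value: Any = env
    for part in path.split("."):
        value = value[part]
    return value


def eval_expression(expr: str, env: dict[str, Any]) -> Any:
    whole = EXPR.fullmatch(expr)
    if whole:  # the entire string is one ${...}: keep its JSON value (list, dict, ...)
        return lookup(whole.group(1), env)

    def render(m: re.Match) -> str:  # otherwise splice each value into the string
        v = lookup(m.group(1), env)
        return v if isinstance(v, str) else json.dumps(v)

    return EXPR.sub(render, expr)


def to_jinja(template: str) -> str:
    # PDL's ${ e } corresponds to Jinja2's {{ e }}, which YAML would misread as a mapping.
    return EXPR.sub(lambda m: "{{ " + m.group(1) + " }}", template)


env = {"retrieved": [{"query": "q1", "answer": "a1"}], "sample": {"query": "q1"}, "n": 5}
print(eval_expression("${retrieved}", env))       # [{'query': 'q1', 'answer': 'a1'}]
print(eval_expression("Q: ${sample.query}", env))  # Q: q1
print(to_jinja('${ question == "quit" }'))         # {{ question == "quit" }}
```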

Last but not least, PDL has a code: block that executes code in a given programming language (at the time of writing, only Python is supported). The next section describes PDL's tools, including the interpreter, which provides sandboxing to mitigate the risk of executing arbitrary code. For more information, see the tutorial linked from PDL's GitHub repository.

4. Tools

PDL provides tools that make PDL programs easy to write, run, and understand.

First, the PDL interpreter is an execution engine with a command-line interface, as one would expect from a scripting language. The interpreter supports a streaming mode, in which LLM output becomes visible incrementally as it is generated, for a more interactive chat experience. The interpreter also supports sandboxing: it can be launched inside a container, which is recommended when executing LLM-generated actions or code.

PDL's IDE support extends VSCode to make PDL code easier to write, with syntax highlighting, auto-completion, tooltips for PDL keywords, and error checking. These features are driven in part by PDL's meta-schema, the JSON Schema that defines what constitutes a valid PDL program.

PDL's %%pdl cell magic extends Jupyter notebooks so that developers can write code cells directly in PDL. This turns hosted notebook platforms into a simple playground for exploring prompts interactively. When a notebook contains multiple PDL code cells, later cells can use variables defined in earlier cells. In addition, the context of a later cell carries over from earlier cells; when this is not desired, developers can override it with %%pdl --reset-context.

PDL's live document visualizer displays a concrete execution trace of a PDL program as colored nested boxes, similar to the diagrams typically found in papers or blog posts about LLM prompting. The user can click on a box to display the corresponding PDL code. This works much like a spreadsheet, which shows data in its cells but lets the user click on a cell to inspect the formula that produced the data. The live view thus lets users first understand the concrete data and then move on to the code that produced it.

Finally, PDL has an SDK (software development kit), a small Python library for calling PDL from Python. The SDK is useful for extending larger Python applications with prompt-based programs such as agents. As discussed in Section 3, PDL files can contain Python in code blocks. When developing larger applications with PDL, we found it useful to keep this code to a few lines by defining functions in a separate Python file and calling them from PDL. A good practice is to pass data between PDL and Python as JSON objects. Optionally, this data can be type-checked with PDL's spec: keyword on the PDL side and with TypedDict or Pydantic on the Python side, as Figure 3 shows.
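On the Python side, the check that complements PDL's spec: [{query: str, answer: str}] could look like the sketch below. It parses JSON coming from PDL and validates it against a TypedDict at runtime. It is a hand-rolled stand-in for what Pydantic would do; `from_json` is a hypothetical helper, not part of the PDL SDK.

```python
import json
from typing import Any, TypedDict, get_type_hints


class QA(TypedDict):
    query: str
    answer: str


def from_json(text: str, td: type) -> list[Any]:
    """Parse JSON from PDL and check it is a list of dicts matching `td`'s fields.

    TypedDict is not enforced at runtime, so the check is done by hand here; it only
    handles fields annotated with plain classes such as str or int.
    """
    values = json.loads(text)
    hints = get_type_hints(td)
    if not isinstance(values, list):
        raise TypeError("expected a JSON array")
    for item in values:
        if not isinstance(item, dict) or set(item) != set(hints):
            raise TypeError(f"expected keys {sorted(hints)}, got {item!r}")
        for key, typ in hints.items():
            if not isinstance(item[key], typ):
                raise TypeError(f"{key}: expected {typ.__name__}, got {item[key]!r}")
    return values


rows = from_json('[{"query": "reverse a string", "answer": "s[::-1]"}]', QA)
print(rows[0]["answer"])  # s[::-1]
```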

(a) PDL code

     1  text:
     2  - lang: python
     3    code: |
     4      import rag_mbpp
     5      PDL_SESSION.mbpp = rag_mbpp.initialize()
     6      result = ""
     7  - defs:
     8      test_query: >-
     9        Write a Python function to remove the first
    10        and last occurrence of a given character
    11        from a string.
    12      retrieved:
    13        lang: python
    14        spec: [{query: str, answer: str}]
    15        code: |
    16          import rag_mbpp
    17          result = rag_mbpp.retrieve(
    18            PDL_SESSION.mbpp, "${test_query}", 5
    19          )
    20    text: >
    21      Given the text after "Q:",
    22      generate a Python function
    23      after "A:".
    24      Here are some examples, complete the last one:
    25  - for:
    26      few_shot_sample: ${retrieved}
    27    repeat: |
    28      Q: ${few_shot_sample.query}
    29      A: ```${few_shot_sample.answer}```
    30  - |-
    31    Q: ${test_query}
    32    A:
    33  - model: watsonx/ibm/granite-3-8b-instruct
    34    parameters:
    35      stop: ["Q:", "A:"]

(b) Python code

    from typing import TypedDict

    import datasets
    from sklearn.feature_extraction.text import TfidfVectorizer


    def initialize():
        # Load the "sanitized" MBPP training split and index its prompts.
        train_in = datasets.load_dataset("mbpp", "sanitized", split="train")
        corpus = [row["prompt"] for row in train_in]
        tfidf = TfidfVectorizer().fit(corpus)

        def embed(text):
            # Dense TF-IDF vector for a single piece of text.
            sparse_result = tfidf.transform(raw_documents=[text])
            return sparse_result.toarray().flatten()

        train_em = train_in.map(lambda row: {"em": embed(row["prompt"])})
        # Build a FAISS index over the "em" column for nearest-neighbor search.
        vec_db = train_em.add_faiss_index("em")
        return vec_db, embed


    QA = TypedDict("QA", {"query": str, "answer": str})


    def retrieve(mbpp, query, n: int) -> list[QA]:
        # Return the n training examples whose prompts are closest to the query.
        vec_db, embed = mbpp
        key = embed(query)
        nearest = vec_db.get_nearest_examples("em", key, n)
        queries = nearest.examples["prompt"]
        answers = nearest.examples["code"]
        return [{"query": q, "answer": a} for q, a in zip(queries, answers)]

Figure 3. RAG Example in PDL

5. Case studies

Section 2 showed a simple example of a PDL chatbot. This section shows some slightly more complex use cases for PDL: RAG, agents, and generating PDL from PDL.

5.1. Retrieval-Augmented Generation

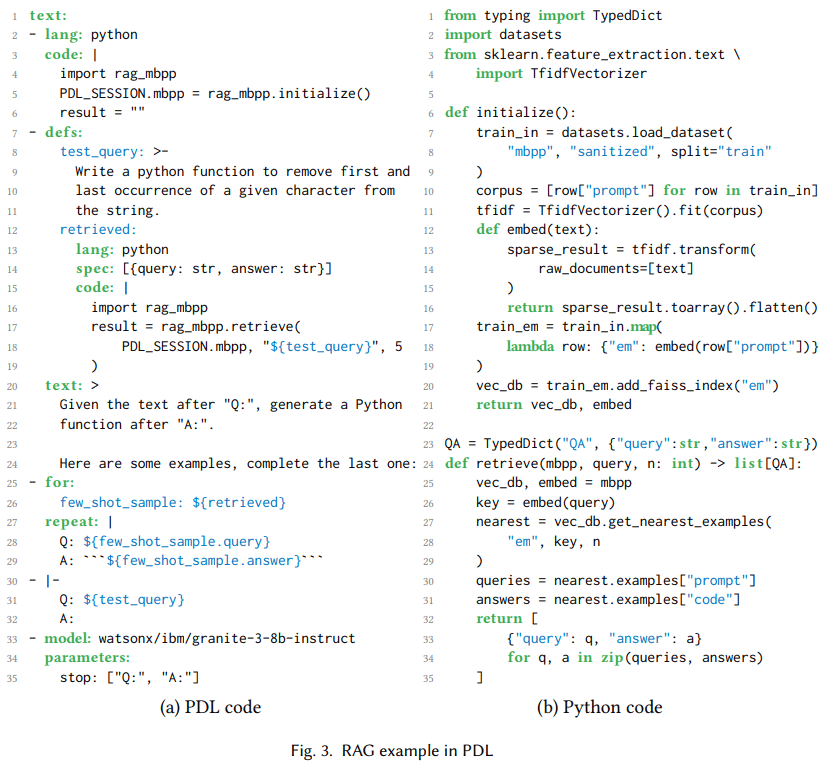

Retrieval-augmented generation (RAG) works by first retrieving relevant context and then adding it to the model's prompt for generating the answer (Lewis et al. 2020). Figure 3(a) shows a PDL program that uses RAG to retrieve few-shot examples for a code-generation task. The code: block on lines 2-6 uses Python to initialize a vector database over the training split of the MBPP ("Mostly Basic Python Programs") dataset (Austin et al. 2021). It uses the Python functions defined in Figure 3(b) and the special variable PDL_SESSION, which makes it possible to pass state to later code blocks. Lines 8-11 of Figure 3(a) initialize the variable test_query to the natural-language request for the Python code to generate. Lines 12-19 initialize the variable retrieved to the five most similar examples from the training data.

Lines 20-24 add instructions to the context, lines 25-29 add few-shot examples to the context, and lines 30-32 add the test query to the context. The for: loop on line 25 is a common way of generating data in PDL, in this case for in-context learning. Finally, lines 33-35 call a Granite 3 model (Granite Team, IBM 2024) with the accumulated context, causing it to generate a Python function for the test query. This is a simple example, but we have also combined PDL with Codellm-Devkit (Krishna et al. 2024), a tool that statically analyzes source code in a variety of programming languages. There, Codellm-Devkit retrieves further relevant context when prompting LLMs for coding tasks.
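The retrieve-then-prompt pattern of Figure 3 can also be sketched without dependencies. The Python below replaces the TF-IDF vectors and FAISS index of Figure 3(b) with bag-of-words cosine similarity over a tiny, made-up corpus. It then assembles the prompt in the same order as lines 20-32 of Figure 3(a). All names and data here are illustrative.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    # Bag-of-words "embedding": lower-cased word counts.
    return Counter(re.findall(r"[a-z0-9_]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(count * b[word] for word, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(corpus: list[dict[str, str]], query: str, n: int) -> list[dict[str, str]]:
    # Return the n examples whose "query" field is most similar to the test query.
    key = embed(query)
    return sorted(corpus, key=lambda ex: cosine(key, embed(ex["query"])), reverse=True)[:n]


def build_prompt(examples: list[dict[str, str]], test_query: str) -> str:
    # Instructions, then few-shot Q/A pairs (the for: loop), then the test query.
    parts = ['Given the text after "Q:", generate a Python function after "A:". '
             "Here are some examples, complete the last one:\n"]
    for ex in examples:
        parts.append(f"Q: {ex['query']}\nA: ```{ex['answer']}```\n")
    parts.append(f"Q: {test_query}\nA:")
    return "".join(parts)


corpus = [
    {"query": "Write a function to reverse a string.", "answer": "def rev(s): return s[::-1]"},
    {"query": "Write a function to add two numbers.", "answer": "def add(a, b): return a + b"},
    {"query": "Remove the first occurrence of a character from a string.",
     "answer": "def rm(s, c): return s.replace(c, '', 1)"},
]
test_query = "Remove the first and last occurrence of a given character from the string."
print(build_prompt(retrieve(corpus, test_query, 2), test_query))
```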

(a) Code

     1  text:
     2  - read: react_few_shot_samples.txt
     3  - |
     4
     5    When was the discoverer of the Hudson River born?
     6  - repeat:
     7      text:
     8      - def: thought
     9        model: watsonx/ibm/granite-34b-code-instruct
    10        parameters:
    11          stop: ["Act:"]
    12          include_stop_sequence: true
    13      - def: action
    14        model: watsonx/ibm/granite-34b-code-instruct
    15        parameters:
    16          stop: ["\n"]
    17        parser: json
    18        spec: {name: str, arguments: {topic: str}}
    19      - def: observation
    20        if: ${ action.name == "Search" }
    21        then:
    22          text:
    23          - "Obs: "
    24          - lang: python
    25            code: |
    26              import wikipedia
    27              query = "${ action.arguments.topic }"
    28              result = wikipedia.summary(query)
    29    until: ${ action.name != "Search" }

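(b) Interpreter trace

What is the elevation range of the eastern sector of the Colorado orogeny?

Tho: I need to search Colorado orogeny and find the area that the eastern sector extends into.

Act: {"name": "Search", "arguments": {"topic": "Colorado orogeny"}}

Obs: The Colorado orogeny was an episode [...]

[...]

When was the discoverer of the Hudson River born?

Tho: I need to search the discoverer of the Hudson River and find out when he was born.

Act: {"name": "Search", "arguments": {"topic": "discoverer of the Hudson River"}}

Obs: The Hudson River is a 315-mile [...]

Tho: The discoverer of the Hudson River is Henry Hudson. I need to search Henry Hudson and find out when he was born.

Act: {"name": "Search", "arguments": {"topic": "Henry Hudson"}}

Obs: Henry Hudson (c. 1565 – disappeared [...]

Tho: Henry Hudson was born in 1565.

Act: {"name": "Finish", "arguments": {"topic": "1565"}}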

Figure 4. ReAct agent

5.2. ReAct Agent

An LLM-based agent lets the LLM choose and configure actions that are executed in an environment. The outputs of those actions are fed back to the LLM as observations. There are different patterns for such agents, such as ReAct (Yao et al. 2023) and ReWOO (Xu et al. 2023). Actions are based on LLM tool calls (Schick et al. 2023), and agents chain multiple tool calls together in a dynamic, LLM-guided sequence. The goal is to make AI-based applications less prescriptive and more goal-oriented. In addition, an agent can use observations as feedback to recover when actions go awry.

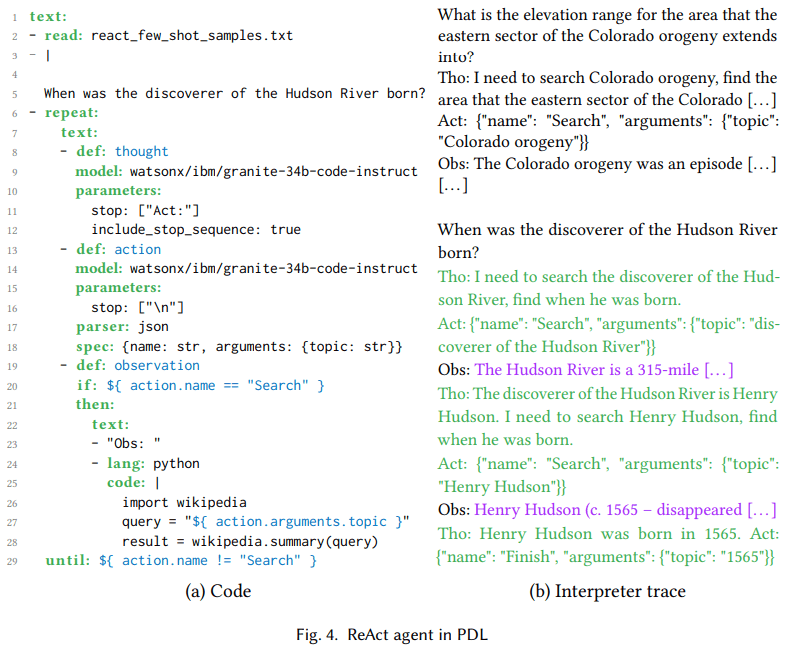

Figure 4 shows a PDL example of a simple ReAct agent. At the heart of ReAct is a think-act-observe loop, which appears in the code as variable definitions for thought (line 8), action (line 13), and observation (line 19).

- The thought is natural language generated by the model. An example from the interpreter trace in Figure 4(b) is "I need to search the discoverer of the Hudson River and find out when he was born".
- The action is JSON generated by the model, matching the tool-calling training data of the Granite models (Abdelaziz et al. 2024). Lines 17 and 18 on the left ensure that the LLM output is parsed as JSON and conforms to the {name, arguments} schema. The interpreter trace on the right shows that the model indeed generates such objects. This makes it possible to use Jinja2 to access fields of the object, such as ${ action.arguments.topic } on line 27.
- The observation is produced by the environment, in this case Python code that calls Wikipedia.

The code on line 27 is partially generated by an LLM, since it embeds the model's chosen topic. As discussed in Section 4, we recommend using PDL's sandboxing capabilities whenever running such code.
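Stripped of PDL syntax, the loop in Figure 4 amounts roughly to the Python sketch below. Two parts are stand-ins: `scripted_model` replays canned replies instead of calling Granite, and the `search` argument replaces the Wikipedia call on lines 26-28. The `max_steps` limit is an added safeguard that Figure 4 does not have.

```python
import json
from typing import Any, Callable


def check_action(action: Any) -> dict[str, Any]:
    # Mirrors spec: {name: str, arguments: {topic: str}} on line 18 of Figure 4.
    ok = (
        isinstance(action, dict)
        and isinstance(action.get("name"), str)
        and isinstance(action.get("arguments"), dict)
        and isinstance(action["arguments"].get("topic"), str)
    )
    if not ok:
        raise TypeError(f"action does not match spec: {action!r}")
    return action


def react(
    question: str,
    model: Callable[[list[str]], str],
    search: Callable[[str], str],
    max_steps: int = 10,
) -> tuple[str, list[str]]:
    """Think-act-observe loop; returns the Finish topic and the accumulated context."""
    context = [question]
    for _ in range(max_steps):
        context.append(model(context))                     # thought (stops after "Act:")
        action = check_action(json.loads(model(context)))  # action, parser: json
        context.append(json.dumps(action))
        if action["name"] != "Search":                     # until: action.name != "Search"
            return action["arguments"]["topic"], context
        context.append("Obs: " + search(action["arguments"]["topic"]))  # observation
    raise RuntimeError("agent did not finish within max_steps")


def scripted_model(replies: list[str]) -> Callable[[list[str]], str]:
    # Hypothetical LLM stand-in: returns canned replies in order and ignores the prompt.
    it = iter(replies)
    return lambda context: next(it)


answer, trace = react(
    "When was the discoverer of the Hudson River born?",
    scripted_model([
        "Tho: The discoverer is Henry Hudson. I need to search Henry Hudson.\nAct:",
        '{"name": "Search", "arguments": {"topic": "Henry Hudson"}}',
        "Tho: Henry Hudson was born in 1565.\nAct:",
        '{"name": "Finish", "arguments": {"topic": "1565"}}',
    ]),
    search=lambda topic: f"(summary of {topic})",  # stands in for wikipedia.summary
)
print(answer)  # 1565
```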

The interpreter trace in Figure 4(b) shows that this execution goes through two iterations of the agent loop. This is a simple example, but we have also used PDL to implement a code-editing agent, which was part of a submission to the SWE-bench Lite leaderboard (Jimenez et al. 2024). The submission resolves 23.7% of the instances using only open-source models. This is higher than any previous result with open-source models and comparable to results obtained with frontier models.

5.3. Generating PDL from PDL with an LLM

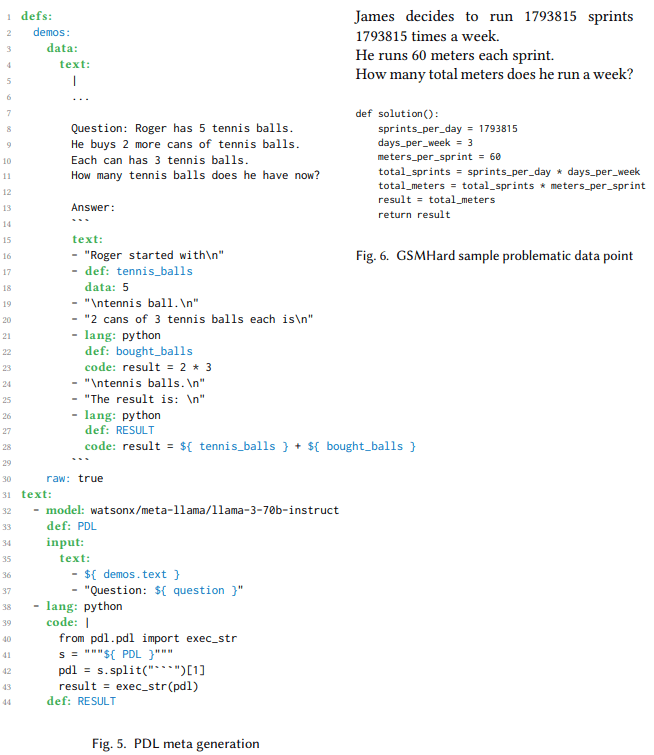

The previous sections showed how human developers can use PDL to encode different prompting patterns. This section turns to LLMs and shows how they, too, can be used to generate PDL. Such meta-generation of PDL is useful when an LLM needs to create a plan for solving a problem, e.g., as part of an agentic workflow. Traditionally, such plans have been plain text, JSON, or Python code only. With PDL, a plan can be a fully executable combination of model calls and code. This section explores PDL meta-generation on the GSMHard dataset.

GSMHard is a harder version of GSM8k, which contains grade-school math problems requiring simple arithmetic or symbolic reasoning. Each GSMHard entry has an input, the math problem statement, and an output, the Python code that solves the problem. We implemented the PAL approach (Gao et al. 2023), but instead of generating Python code, we ask the LLM to generate PDL. The textual chain of thought is represented as PDL text blocks, and arithmetic is performed with PDL code blocks.

Figure 5 shows a PDL program that generates PDL code and executes it within the same program. The variable demos holds few-shot examples that teach the model how to generate PDL code. On line 32, a model call block takes these examples and a problem with the free variable question as input. The result is a PDL program that solves the problem. The code block starting on line 38 extracts the generated PDL program and executes it from Python. The program is applied to the GSMHard dataset, where each problem statement populates question.
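Line 42 of Figure 5 recovers the generated program by splitting the model output on triple backticks and taking the second piece. The Python sketch below performs the same extraction with two refinements of our own. It fails loudly when no complete fenced block is present, and it drops a language tag such as yaml after the opening fence. Running the extracted program (exec_str in Figure 5) is deliberately left out.

```python
def extract_fenced(llm_output: str) -> str:
    """Return the text between the first pair of triple backticks, like s.split(...)[1]."""
    parts = llm_output.split("```")
    if len(parts) < 3:  # Figure 5 would raise IndexError or pick up the wrong text
        raise ValueError("model output contains no complete fenced block")
    body = parts[1]
    # A fence such as ```yaml would leave the tag "yaml" on the first line; drop it.
    first, newline, rest = body.partition("\n")
    if newline and first.strip().isidentifier():
        return rest
    return body


generated = 'Answer:\n```\ntext:\n- "Roger started with 5 tennis balls."\n```\n'
print(extract_fenced(generated))
```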

This experiment revealed that 10% of the GSMHard dataset is actually wrong, because the ground truth is inconsistent with the question asked. Figure 6 shows an example of such an inconsistency. PDL helped uncover this because the generated PDL code is human-readable. We could therefore easily inspect the data points whose results did not match the ground truth, and we found that in some cases the ground truth itself was wrong. We then used an LLM to go over the entire dataset and systematically flag examples that appeared inconsistent. Finally, we manually screened the results to remove false positives, which identified 10% of the data points as having this problem.

     1  defs:
     2    demos:
     3      data:
     4        text:
     5          |
     6          ...
     7
     8          Question: Roger has 5 tennis balls.
     9          He buys 2 more cans of tennis balls.
    10          Each can has 3 tennis balls.
    11          How many tennis balls does he have now?
    12
    13          Answer:
    14          ```
    15          text:
    16          - "Roger started with\n"
    17          - def: tennis_balls
    18            data: 5
    19          - "\ntennis balls.\n"
    20          - "2 cans of 3 tennis balls each is\n"
    21          - lang: python
    22            def: bought_balls
    23            code: result = 2 * 3
    24          - "\ntennis balls.\n"
    25          - "The answer is:\n"
    26          - lang: python
    27            def: RESULT
    28            code: result = ${ tennis_balls } + ${ bought_balls }
    29          ```
    30      raw: true
    31  text:
    32  - model: watsonx/meta-llama/llama-3-70b-instruct
    33    def: PDL
    34    input:
    35      text:
    36      - ${ demos.text }
    37      - "Question: ${ question }"
    38  - lang: python
    39    code: |
    40      from pdl.pdl import exec_str
    41      s = """${ PDL }"""
    42      pdl = s.split("```")[1]
    43      result = exec_str(pdl)
    44    def: RESULT

Figure 5. PDL meta-generation

James decides to run 1793815 sprints a week.

He runs 60 meters each sprint.

How many total meters does he run a week?

    def solution():
        sprints_per_day = 1793815
        days_per_week = 3
        meters_per_sprint = 60
        total_sprints = sprints_per_day * days_per_week
        total_meters = total_sprints * meters_per_sprint
        result = total_meters
        return result

Figure 6. Sample problematic GSMHard data point
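A quick computation makes the mismatch in Figure 6 concrete. Read literally as worded above (1793815 sprints a week, 60 meters each), the question has a different answer than the ground-truth code. The code multiplies by a days_per_week = 3 that the question does not mention. The Python below just evaluates both products.

```python
SPRINTS = 1793815
METERS_PER_SPRINT = 60

# Ground truth from Figure 6: it multiplies by days_per_week = 3.
ground_truth = SPRINTS * 3 * METERS_PER_SPRINT
# Literal reading of the question as worded here: SPRINTS sprints a week, 60 m each.
from_question = SPRINTS * METERS_PER_SPRINT

print(ground_truth)   # 322886700
print(from_question)  # 107628900
```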

6. Related work

A recent survey defines prompting frameworks as a layer that manages, simplifies, and facilitates interaction between LLMs and users, tools, or other models (Liu et al. 2023). The survey identifies a steep learning curve as a major shortcoming of prompting frameworks.

Probably the most popular prompting framework today is LangChain (Chase et al. 2022), whose rich functionality makes it both powerful and complex. The main motivation of MiniChain is precisely to avoid this complexity (Rush 2023): it offers fewer, simpler features that can be composed for advanced applications. However, both LangChain and MiniChain are Python-based frameworks. This makes them less declarative, since developers need to write imperative code. PDL shares MiniChain's motivation but goes a step further by building on YAML rather than Python.

Like other prompting frameworks, PDL aims to make LLMs more robust. Guidance (Microsoft 2023) is a Python-based framework that provides more structure, but it is lower-level than LangChain. Similarly, LMQL (Beurer-Kellner et al. 2023) is a domain-specific language embedded in Python that uses types and constrained decoding. PDL takes some inspiration from LMQL in intertwining prompting and programming, but unlike LMQL, it relies less on imperative Python code. Crouse et al. use finite-state machines to formally specify the internal flow of various agent loops (Crouse et al. 2024). This is a fascinating endeavor, but it does not introduce a full prompting language.

One advantage of domain-specific languages is that they enable program transformations, e.g., for optimization (Mernik et al. 2005). The DSPy prompting framework (Khattab et al. 2023) has the motto "programming, not prompting": it generates prompts automatically, so developers do not need to write them by hand. Similarly, Vieira (Li et al. 2024) extends Prolog to use LLMs as probabilistic relations and generates prompts automatically. DSPy and Vieira are both very advanced frameworks, but unlike PDL, they reduce the developer's control over the exact prompts. Lale (Baudart et al. 2021) is a language that lets users gradually adjust the trade-off between automation and control in AI pipelines, but it does not focus on LLM prompts.

DSPy, Vieira, and Lale optimize for predictive performance; another optimization target is computational performance. SGLang (Zheng et al. 2023) improves computational performance by making better use of the prefix cache, yielding more hits in the KV cache (Kwon et al. 2023). Future work will explore whether PDL's declarative nature enables similar computational performance optimizations.

Recently, a number of LLM-based prompting frameworks focusing on LLM agents have emerged. AutoGen (Wu et al. 2023) is a multi-agent framework in which everything consists of agents and conversations. Other multi-agent frameworks include CrewAI (Moura 2023) and GPTSwarm (Zhuge et al. 2024). These frameworks prioritize support for agents over other LLM-based use cases. PDL also supports agents, but it takes a more balanced stance, treating agents as one of many prompting techniques.

7. Conclusion

PDL is a declarative, data-oriented language: programs consist of YAML blocks, each of which is either literal data or produces data. In the mental model, executing a block appends its data to the context, which then serves as the prompt for subsequent LLM calls. This paper introduced the language through example programs and a guided tour of its syntax and tools. The declarative nature of the language also makes it easier to implement automatic optimizations for speed, accuracy, and security, which future work will add incrementally. PDL is ready for use and is open source at https://github.com/IBM/prompt-declaration-language.

© Copyright notice

Article copyright: AI Sharing Circle. All rights reserved; please do not reproduce without permission.
