Parler-TTS: Generating speech in a described speaker style from input text

General Introduction

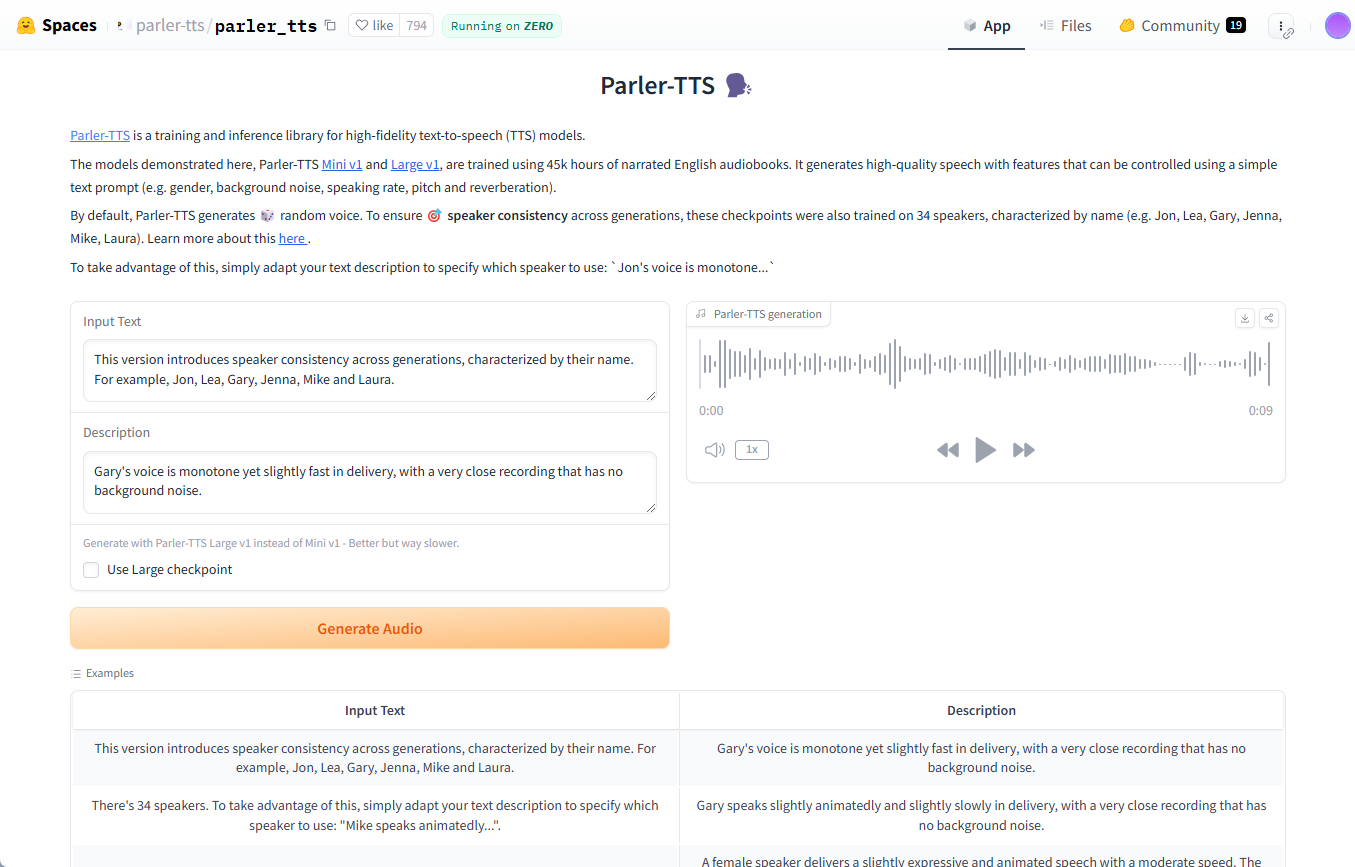

Parler-TTS is an open-source text-to-speech (TTS) library and model family developed by Hugging Face for generating high-quality, natural-sounding speech. Given the text to speak and a short natural-language description of the voice, it generates speech with specific speaker characteristics (e.g. gender, pitch, speaking style). Parler-TTS is based on the work in the paper "Natural language guidance of high-fidelity text-to-speech with synthetic annotations" and is completely open source: all datasets, preprocessing, training code, and weights are publicly available, so the community can build on and improve them.

Feature List

- High-quality speech generation: generates natural, fluent speech in a wide range of speaker styles.

- Open source: all code and model weights are publicly available for the community to develop and improve.

- Lightweight dependencies: simple to install and use, with few dependencies.

- Multiple model versions: checkpoints with different parameter counts are available, e.g. Parler-TTS Mini and Parler-TTS Large.

- Fast generation: generation speed is optimized, with support for SDPA and Flash Attention 2.

- Datasets and weights: the training datasets and pre-trained weights are provided for training and fine-tuning.
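To check the generation-speed claim on your own hardware, a common yardstick is the real-time factor (RTF): wall-clock generation time divided by the duration of the audio produced, so values below 1.0 mean faster than real time. The sketch below is a generic helper, not part of Parler-TTS; `real_time_factor` is a hypothetical name, and in practice the sample count would come from your generated waveform and the rate from `model.config.sampling_rate`.

```python
def real_time_factor(elapsed_seconds: float, num_samples: int, sample_rate: int) -> float:
    """Return generation time divided by audio duration (hypothetical helper).

    RTF < 1.0 means the audio was produced faster than it takes to play back.
    """
    if num_samples <= 0 or sample_rate <= 0:
        raise ValueError("num_samples and sample_rate must be positive")
    audio_seconds = num_samples / sample_rate  # duration of the generated audio
    return elapsed_seconds / audio_seconds


# Example numbers only: 1.5 s of compute for 3 s of audio sampled at 44.1 kHz.
print(real_time_factor(1.5, 3 * 44100, 44100))  # 0.5
```

To measure `elapsed_seconds`, wrap the `model.generate(...)` call with `time.perf_counter()`; run it once beforehand as a warm-up so model loading and first-call overhead are not counted.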

How to Use

Installation

- Make sure Python is installed.

- Install the Parler-TTS library from GitHub:

```shell
pip install git+https://github.com/huggingface/parler-tts.git
```

- On Apple Silicon, also install a nightly PyTorch build to get bfloat16 support:

```shell
pip3 install --pre torch torchaudio --index-url https://download.pytorch.org/whl/nightly/cpu
```
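After installing, a quick sanity check is to confirm that the packages used in this guide can be imported. This is a generic sketch using only the standard library; the module names are the import names used in the examples that follow (`parler_tts`, `torch`, `transformers`, `soundfile`), and `check_installation` is a hypothetical helper, not part of Parler-TTS.

```python
import importlib.util


def check_installation(modules=("parler_tts", "torch", "transformers", "soundfile")):
    """Map each top-level module name to True if it can be imported, else False."""
    # find_spec locates a module without importing it, so this check is cheap.
    return {name: importlib.util.find_spec(name) is not None for name in modules}


if __name__ == "__main__":
    for name, ok in check_installation().items():
        print(f"{name:12s} {'OK' if ok else 'MISSING'}")
```

Any module reported as `MISSING` needs to be installed (for example with `pip`) before the generation code will run.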

Usage

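Generate speech with a random voice

The description controls features such as gender, pitch and speed, but when it does not name a particular speaker, the exact voice can differ from one generation to the next.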

- Import the necessary libraries:

```python
import torch
from parler_tts import ParlerTTSForConditionalGeneration
from transformers import AutoTokenizer
import soundfile as sf
```

- Load the model and tokenizer:

```python
device = "cuda:0" if torch.cuda.is_available() else "cpu"

model = ParlerTTSForConditionalGeneration.from_pretrained("parler-tts/parler-tts-mini-v1").to(device)
tokenizer = AutoTokenizer.from_pretrained("parler-tts/parler-tts-mini-v1")
```
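The device line above only distinguishes CUDA from CPU. The sketch below also considers Apple Silicon's MPS backend; whether Parler-TTS runs well on MPS in your particular setup is an assumption to verify. `choose_device` is a hypothetical helper that takes the availability flags as arguments, so the selection logic can be tested without a GPU.

```python
def choose_device(cuda_available: bool, mps_available: bool = False) -> str:
    """Pick a PyTorch device string: CUDA first, then Apple MPS, then CPU."""
    if cuda_available:
        return "cuda:0"  # first NVIDIA GPU
    if mps_available:
        return "mps"     # Apple Silicon GPU backend
    return "cpu"
```

With PyTorch installed, call it as `device = choose_device(torch.cuda.is_available(), torch.backends.mps.is_available())` and pass `device` to `.to(device)` as before.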

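- Tokenize the voice description and the text to speak separately, then generate and save the audio:

```python
prompt = "Hey, how are you doing today?"
description = "A female speaker delivers a slightly expressive and animated speech with a moderate speed and pitch."

# The description conditions the voice; the prompt is the text that gets spoken.
input_ids = tokenizer(description, return_tensors="pt").input_ids.to(device)
prompt_input_ids = tokenizer(prompt, return_tensors="pt").input_ids.to(device)

generation = model.generate(input_ids=input_ids, prompt_input_ids=prompt_input_ids)
audio_arr = generation.cpu().numpy().squeeze()
sf.write("output.wav", audio_arr, model.config.sampling_rate)  # use the model's own sampling rate
```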
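`sf.write` turns the float waveform returned by the model into a WAV file at the given sampling rate. To see what that step involves, or to avoid the `soundfile` dependency, the following sketch does the same for mono audio with only the standard library. It assumes the waveform is a flat sequence of floats in [-1.0, 1.0] and writes 16-bit PCM; `write_wav_pcm16` is a hypothetical helper, not part of Parler-TTS or soundfile.

```python
import array
import sys
import wave


def write_wav_pcm16(path, samples, sample_rate):
    """Write mono float samples in [-1.0, 1.0] to a 16-bit PCM WAV file.

    Values outside [-1.0, 1.0] are clipped before scaling to int16.
    """
    pcm = array.array("h", (int(max(-1.0, min(1.0, float(s))) * 32767) for s in samples))
    if sys.byteorder == "big":
        pcm.byteswap()  # WAV data is little-endian
    with wave.open(path, "wb") as wav:
        wav.setnchannels(1)   # mono
        wav.setsampwidth(2)   # 2 bytes = 16 bits per sample
        wav.setframerate(sample_rate)
        wav.writeframes(pcm.tobytes())
```

In the generation example it would replace the `sf.write` call as `write_wav_pcm16("output.wav", audio_arr, model.config.sampling_rate)`; the int16 scaling is the main design choice, trading some precision for a universally readable format.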

Generate speech in a specific speaker style

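- To get a different voice, change only the description, for example:

```python
description = "A male speaker with a deep voice and slow pace."

input_ids = tokenizer(description, return_tensors="pt").input_ids.to(device)
prompt_input_ids = tokenizer(prompt, return_tensors="pt").input_ids.to(device)

generation = model.generate(input_ids=input_ids, prompt_input_ids=prompt_input_ids)
sf.write("output_specific.wav", generation.cpu().numpy().squeeze(), model.config.sampling_rate)
```

- For a voice that stays consistent across generations, the v1 checkpoints were also trained on a set of named speakers (e.g. Jon, Lea, Gary); naming one of them in the description selects that voice.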
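Because the voice is controlled entirely by the description string, it can help to build descriptions from a fixed set of attributes so you can vary one at a time. The template below is an assumption modeled on the example descriptions in this guide, not an official Parler-TTS format; `build_description` and its parameter names are hypothetical.

```python
def build_description(gender="female", expressiveness="slightly expressive",
                      speed="moderate", pitch="moderate",
                      recording="very clear", speaker_name=None):
    """Compose a voice description from a few attributes (hypothetical template)."""
    # A speaker name, if given, replaces the generic "A <gender> speaker" subject.
    subject = speaker_name if speaker_name else f"A {gender} speaker"
    return (
        f"{subject} delivers a {expressiveness} speech with a {speed} speed "
        f"and {pitch} pitch. The recording is {recording}."
    )


print(build_description(gender="male", speed="slow", pitch="low"))
# A male speaker delivers a slightly expressive speech with a slow speed and low pitch. The recording is very clear.
```

The returned string is passed to the tokenizer exactly like the hand-written `description` values above.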

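Model training

- Download and prepare the dataset.

- Train with the code in the repository's `training/` directory. The main script is `training/run_parler_tts_training.py`, launched with `accelerate`; `training/README.md` documents its arguments and example configuration files:

```shell
accelerate launch ./training/run_parler_tts_training.py /path/to/config.json
```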
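Parler-TTS is trained on paired data: each example needs the audio, its transcript, and a natural-language description of the voice. A quick consistency check on the prepared data before launching training can save a failed run. This sketch assumes a hypothetical JSON Lines manifest with `audio`, `text` and `description` fields; adapt the field names to whatever your training configuration expects. `validate_manifest` is not part of the Parler-TTS training code.

```python
import json

REQUIRED_FIELDS = ("audio", "text", "description")  # hypothetical field names


def validate_manifest(lines):
    """Return (line_number, problem) pairs for a JSON Lines manifest.

    Each non-blank line must be a JSON object with a non-empty string
    value for every field in REQUIRED_FIELDS.
    """
    problems = []
    for lineno, line in enumerate(lines, start=1):
        if not line.strip():
            continue  # ignore blank lines
        try:
            record = json.loads(line)
        except json.JSONDecodeError as exc:
            problems.append((lineno, f"invalid JSON: {exc.msg}"))
            continue
        if not isinstance(record, dict):
            problems.append((lineno, "not a JSON object"))
            continue
        for field in REQUIRED_FIELDS:
            value = record.get(field)
            if not isinstance(value, str) or not value.strip():
                problems.append((lineno, f"missing or empty field '{field}'"))
    return problems
```

Usage: `with open("train.jsonl") as f: print(validate_manifest(f))`; an empty list means every record has all three fields.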

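Optimized inference

- Select SDPA or Flash Attention 2 through the `attn_implementation` argument when loading the model. Flash Attention 2 additionally requires the `flash-attn` package, a supported CUDA GPU, and half precision:

```python
model = ParlerTTSForConditionalGeneration.from_pretrained(
    "parler-tts/parler-tts-mini-v1",
    attn_implementation="flash_attention_2",  # or "sdpa"
    torch_dtype=torch.float16,
).to(device)
```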
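Since Flash Attention 2 is only usable when its package and a CUDA GPU are present, a small fallback keeps the same loading code working everywhere. This sketch is an assumption about how you might choose the `attn_implementation` value; `pick_attn_implementation` and `has_flash_attn` are hypothetical helpers, and they do not check whether your GPU architecture is supported by flash-attn.

```python
import importlib.util


def has_flash_attn() -> bool:
    """True if the flash-attn package (imported as `flash_attn`) is installed."""
    return importlib.util.find_spec("flash_attn") is not None


def pick_attn_implementation(cuda_available: bool, flash_attn_installed: bool) -> str:
    """Return an `attn_implementation` value for from_pretrained.

    Use Flash Attention 2 only with a CUDA GPU and flash-attn installed;
    otherwise fall back to PyTorch's scaled dot-product attention (SDPA).
    """
    if cuda_available and flash_attn_installed:
        return "flash_attention_2"
    return "sdpa"
```

Usage: `attn = pick_attn_implementation(torch.cuda.is_available(), has_flash_attn())`, then pass `attn_implementation=attn` and, when `attn == "flash_attention_2"`, `torch_dtype=torch.float16` to `from_pretrained`.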

Article copyright: AI Sharing Circle. Please do not reproduce without permission.
