OpenAI-o3 and Monte-Carlo Ideas

o3 is here, so let me share some personal thoughts. Progress on the test-time scaling law has been much faster than we expected. But I'd argue the path is actually a roundabout one: it is OpenAI's indirect route in its pursuit of AGI.

Reinforcement Learning and Shortcut Thinking

Why is that so? Let's explore this through two examples.

The first example comes from reinforcement learning (RL). In RL, the discount factor plays a key role: a reward is worth less the more decision steps it takes to obtain. The goal of reinforcement learning is therefore usually to maximize reward in the shortest possible time and the fewest possible steps. At the heart of this strategy is an emphasis on "shortcuts", i.e. getting the reward as quickly as possible.
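As a rough illustration of why discounting favors short paths, here is a minimal sketch. The function name `discounted_return`, the value `gamma=0.9`, and the two reward sequences are assumptions chosen for this example, not taken from any particular system.

```python
def discounted_return(rewards, gamma=0.9):
    """Sum of rewards, each weighted by gamma**t, where t is its step index."""
    return sum(r * gamma**t for t, r in enumerate(rewards))

# The same terminal reward of 1.0, reached at the 3rd step vs. the 10th step.
short_path = [0.0, 0.0, 1.0]
long_path = [0.0] * 9 + [1.0]

print(discounted_return(short_path))  # about 0.81  (0.9 ** 2)
print(discounted_return(long_path))   # about 0.387 (0.9 ** 9)
```

Both paths end with the same reward, but the longer one is worth less than half as much after discounting, so an agent that maximizes discounted return is pushed toward the shortcut.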

The second example is the fine-tuning of a large model. A pre-trained model that has not been fine-tuned often has no clear direction or control. When we ask it "What is the capital of China?", it may first say "That's a good question," and then go off on a tangent before finally answering "Beijing". When the same question is put to a fine-tuned model, the answer is straightforward and clear: "Beijing".

The fine-tuned model shows how optimization produces shortcuts. Much like human evolution, it always strives for the lowest energy consumption and the shortest path.

Why Reasoning?

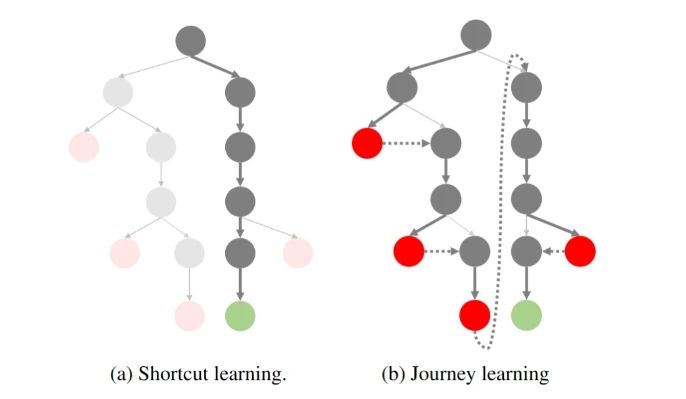

If you visualize the Reasoning sampling process as a tree:

(Figure source: O1 Replication Journey: Part 1)

On the left is the shortcut learning we have pursued in the past: reach the right result in the fewest steps. On the right is the "reflective, backtracking" paradigm represented by OpenAI o1.

We know that when o1 searches, the model constantly reflects and backtracks, and this comes with extra overhead. The question is: who would spend time and money on a complex search if the model could reliably give the right answer directly? OpenAI is not stupid, and we all know that shortcuts are better!

The harder the problem, the wider the potential tree of thoughts, the larger the search space at each step, and the less likely a shortcut is to reach the right answer. So what can be done? An intuitive idea is pruning: cut off, ahead of time, the tree nodes that are unlikely to reach the end point, compressing the search space and turning the tree back into a narrow one. Many current efforts try to do exactly this, for example:

Chain of Preference Optimization (CPO)

Chain of Preference Optimization constructs preference data naturally from the thought tree, then optimizes on it with DPO so that the model is more likely to select the tree nodes that reach the end point.

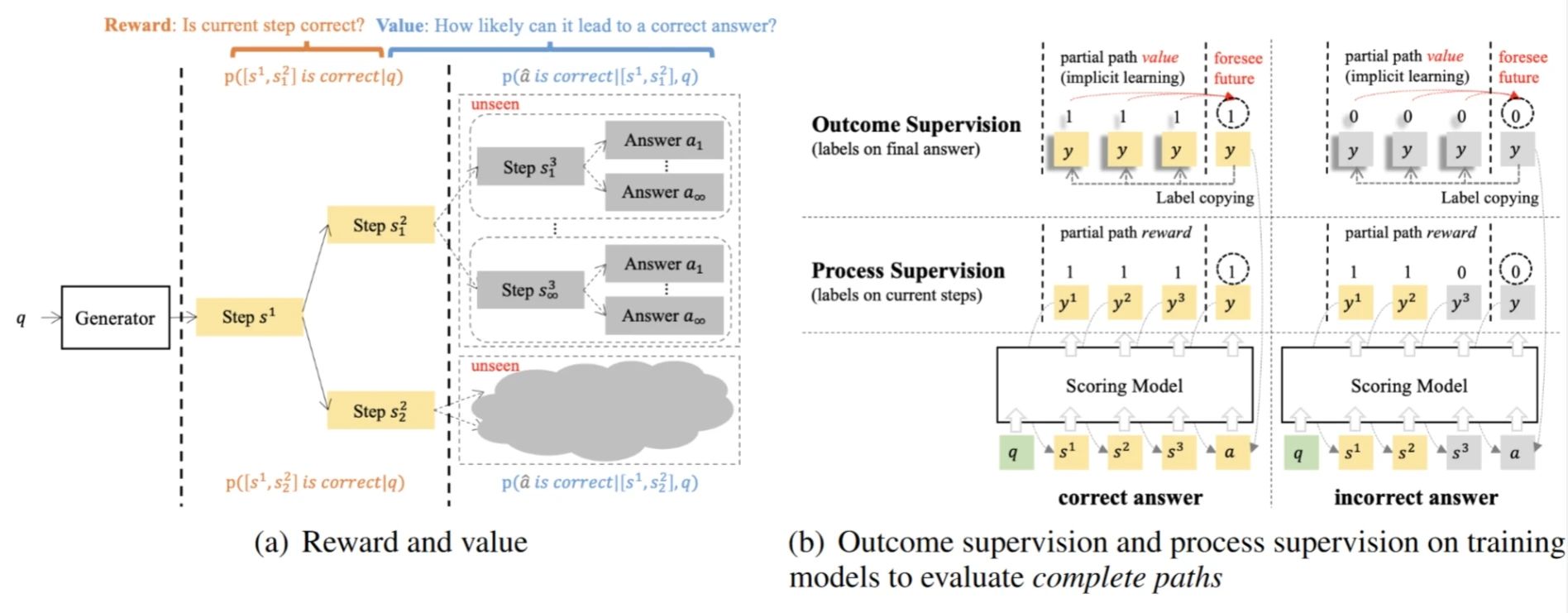
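To make "constructing preference data from the thought tree" concrete, here is a minimal sketch. The `Node` class, the `selected` flag, the toy arithmetic tree, and the `prompt` / `chosen` / `rejected` field names are all assumptions made for illustration; this is not the CPO authors' code. At each step along the path the search kept, the kept thought is paired against every sibling that was discarded.

```python
from dataclasses import dataclass, field

@dataclass
class Node:
    thought: str
    selected: bool = False  # True if the search kept this thought on its final path
    children: list = field(default_factory=list)

def preference_pairs(root):
    """Walk the selected path; pair each kept thought with its discarded siblings."""
    pairs, prefix, node = [], [root.thought], root
    while node.children:
        chosen = next((c for c in node.children if c.selected), None)
        if chosen is None:
            break  # the selected path ends here
        for sibling in node.children:
            if sibling is not chosen:
                pairs.append({
                    "prompt": " ".join(prefix),   # reasoning so far
                    "chosen": chosen.thought,     # step on the final path
                    "rejected": sibling.thought,  # step that was pruned
                })
        prefix.append(chosen.thought)
        node = chosen
    return pairs

# Hypothetical two-level thought tree for a toy question.
tree = Node("Q: 2 + 3 * 4 = ?", True, [
    Node("First compute 3 * 4 = 12.", True, [
        Node("Then 2 + 12 = 14.", True),
        Node("Then 12 - 2 = 10."),
    ]),
    Node("First compute 2 + 3 = 5."),
])

pairs = preference_pairs(tree)
for pair in pairs:
    print(pair)
```

Each resulting record is a step-level preference that a DPO-style objective could train on, which is how the tree gets "narrowed" inside the model itself rather than at search time.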

Outcome-supervised Value Models (OVM)

Outcome-supervised Value Models treat reasoning as a Markov decision process (MDP) and use the probability (value) of reaching the correct answer from the current step to guide policy optimization.
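The pruning idea behind a value model can be sketched with a toy search. Everything here is an assumption for illustration: `toy_value` is a hand-written stand-in for a learned value model, and the task (pick three steps from 1, 2, 3 that sum to 8) is a placeholder for a real reasoning problem. At every depth, only the `beam_width` highest-valued partial paths survive.

```python
def expand(path):
    """Hypothetical step generator: extend a partial path by 1, 2, or 3."""
    return [path + [step] for step in (1, 2, 3)]

def toy_value(path, target=8, depth=3):
    """Stand-in for a learned value model: score in [0, 1] for reaching target."""
    remaining = depth - len(path)
    gap = target - sum(path)
    if gap < remaining * 1 or gap > remaining * 3:
        return 0.0  # the target can no longer be reached from this prefix
    return 1.0 / (1.0 + abs(gap - 2 * remaining))

def value_guided_search(beam_width=2, depth=3, target=8):
    beam = [[]]
    for _ in range(depth):
        candidates = [p for path in beam for p in expand(path)]
        candidates.sort(key=lambda p: toy_value(p, target, depth), reverse=True)
        beam = candidates[:beam_width]  # prune everything below the top-k values
    return beam

result = value_guided_search()
print(result)
```

With a beam width of 2 the search scores 15 partial paths instead of all 39 nodes of the full tree, and both surviving paths still sum to 8. In a real system the hand-written heuristic would be replaced by a model trained on final-answer outcomes.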

Why did OpenAI choose to break away from traditional shortcuts?

Back to o1: why did OpenAI abandon the traditional shortcut idea and take the "detour" of tree search?

In the past, we tended to exploit (Exploit) the model's basic capabilities, on the assumption that existing GPT-4-class models could already satisfy most conversational and simple reasoning needs. For these tasks, responses can be sampled easily, preferences evaluated, and the model optimized iteratively.

But this perspective ignores more complex tasks, such as mathematical reasoning (AIME, FrontierMath) and code generation (SWE-Bench, Codeforces), for which rewards are hard to obtain in the short run. Their rewards are very sparse and only appear once the correct answer is finally reached.

As a result, traditional shortcut learning no longer suits this type of complex task: how can you talk about optimizing the probability that the model chooses the correct path when you cannot even sample a correct path?

Returning to the "Monte-Carlo idea" in the title of this article, we can see that this is the same issue: the core of the Monte-Carlo approach to reinforcement learning is to estimate the value of a policy through repeated sampling, and then use that estimate to optimize the model. However, this method has a natural limitation: if the sampling policy never samples the optimal path, optimization can only end up at a local optimum. This is why MC learning calls for a more exploratory policy.

So OpenAI chose to tip the balance of reinforcement learning, abandoning the traditional shortcut thinking and strengthening exploration (Explore) instead.
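The limitation is easy to see in a small simulation. This is a sketch under simplifying assumptions: each reasoning step is modeled as independently correct with probability `p_correct_step`, the reward is 1 only when every step is correct, and the function names and numbers are illustrative only.

```python
import random

def rollout(p_correct_step, n_steps, rng):
    """One sampled path: reward 1.0 only if every step is right (sparse reward)."""
    ok = all(rng.random() < p_correct_step for _ in range(n_steps))
    return 1.0 if ok else 0.0

def mc_value(p_correct_step, n_steps, n_samples, seed=0):
    """Monte-Carlo value estimate: mean return over n_samples sampled paths."""
    rng = random.Random(seed)
    total = sum(rollout(p_correct_step, n_steps, rng) for _ in range(n_samples))
    return total / n_samples

# Easy task: 3 steps, each right 90% of the time (true value 0.9**3 = 0.729).
print(mc_value(0.9, 3, 1000))

# Hard task: 30 steps, each right 50% of the time (true value 0.5**30, about 9e-10).
print(mc_value(0.5, 30, 1000))
```

On the easy task the estimate lands close to the true value. On the hard task a thousand samples will almost always contain no successful path at all, so the estimate is exactly 0 and gives the optimizer nothing to reinforce.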

Breakthroughs in o1: from exploration to optimization

In this context, OpenAI proposed the o1 paradigm. With this change, the model can gradually start to obtain sparse rewards on complex tasks, and those rewards allow the policy to be optimized continuously. Although this exploration process may seem tedious and inefficient, it lays the foundation for further optimization.

So where did o1 come from? A lot of work on replicating o1 has appeared recently; what is it doing? If the behavior policy used for exploration is on-policy, that is, sampling with the current model (e.g., GPT-4o), it is still too inefficient.

So everyone has converged on off-policy methods:

OpenAI spends heavily to hire current PhD students to annotate long CoT data.

No money for that? Then use human-machine collaboration to annotate the data (manual distillation of o1), which lowers the requirements for annotators.

No money even for annotators? Then distill R1 / QwQ, or think of other ways (Critique, PRM, etc.).

Here I would also like to remind the big companies and labs that are actively reproducing o1: do not forget that the ultimate goal of exploration is still optimization!

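As an aside: everyone is criticizing o1 for hiding its real chain of thought and showing only the shortcut version, the Summary. Little do they realize that this Summary is precisely the key data for optimizing the policy! Yet OpenAI is not afraid of others distilling these Summaries, because distilling this data has a prerequisite: the base model must be capable enough, otherwise taking too big a step can easily backfire.

OpenAI has also shifted the cost of exploration onto its users. Although it spent heavily on annotating exploratory data early on, now that o1 exists, users implicitly annotate more data for it as they use the model. OpenAI has once again built a great data flywheel!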

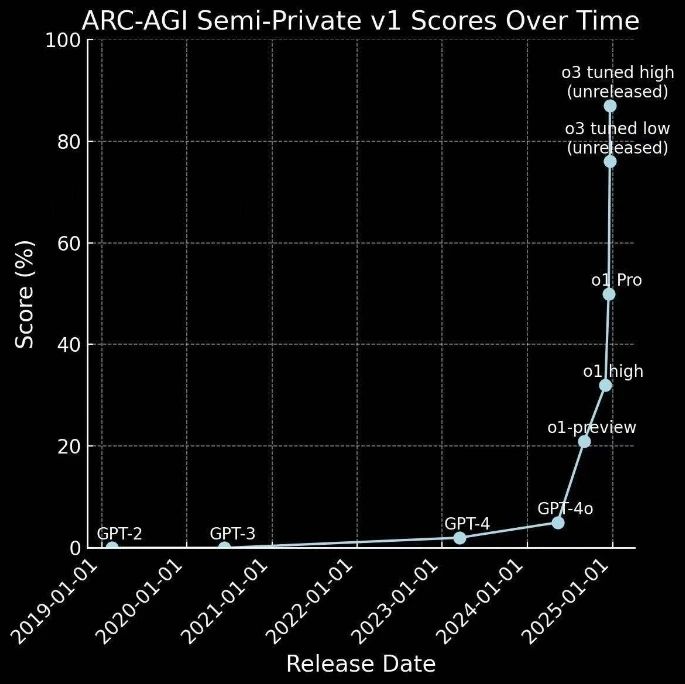

Rapid evolution from o1 to o3

o1 was released only a few months ago, and o3 is already here.

In fact, this indirectly supports the earlier conjecture: if GPT-4 represents the progression from 0 to 1 (from simple tasks to obtaining rewards), then o1 represents the leap from 1 to 10: by exploring complex tasks and obtaining rare rewards, it provides an unprecedented amount of high-quality data for further policy optimization.

It has therefore progressed faster than anyone expected.

This is not only a successful application of the exploration strategy, but also an important step for AI technology towards AGI.

© Copyright notes

Copyright of this article belongs to AI Sharing Circle. Please do not reproduce without permission.
