Open-Reasoner-Zero: Open Source Large-Scale Reasoning Reinforcement Learning Training Platform

General Introduction

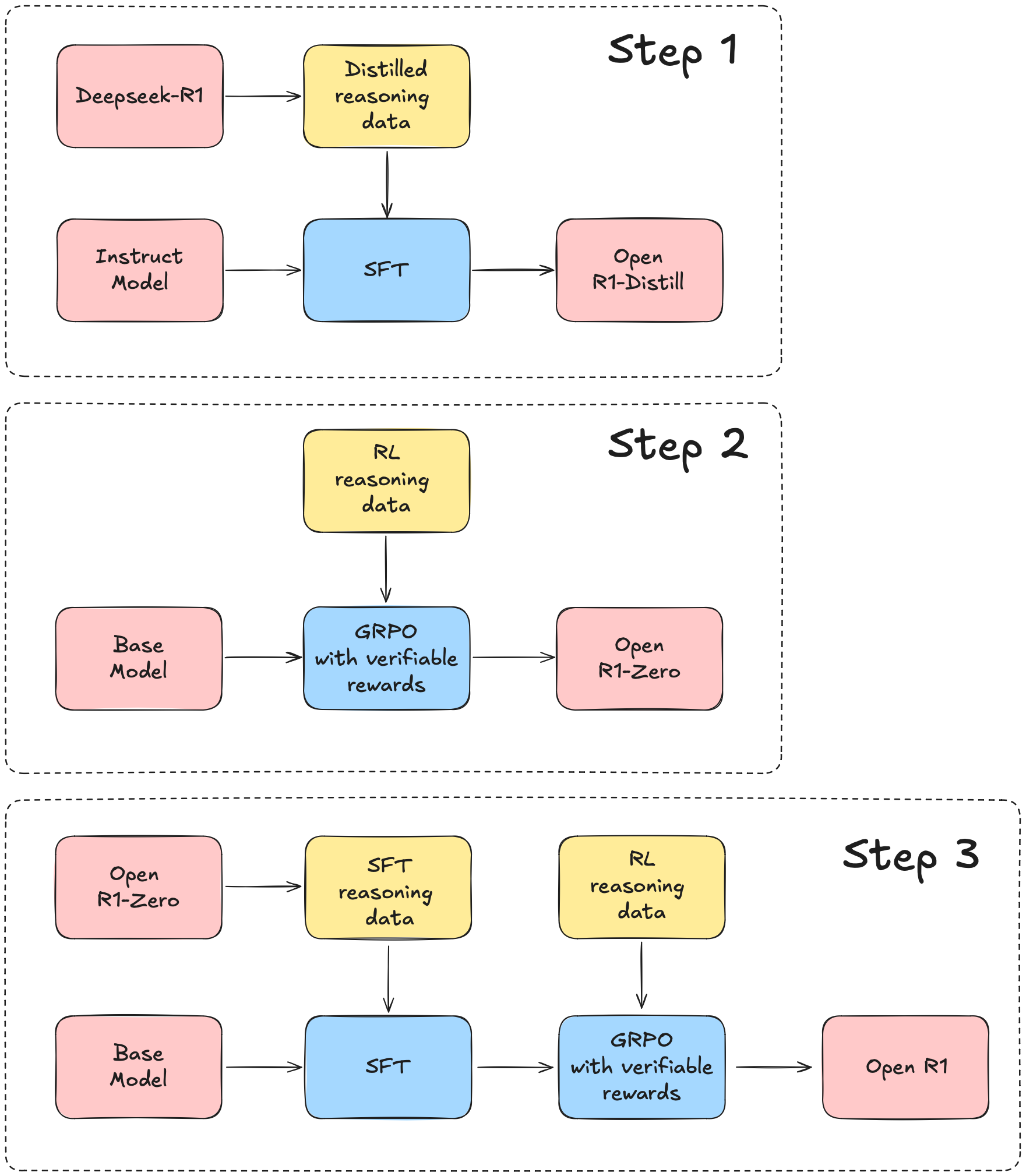

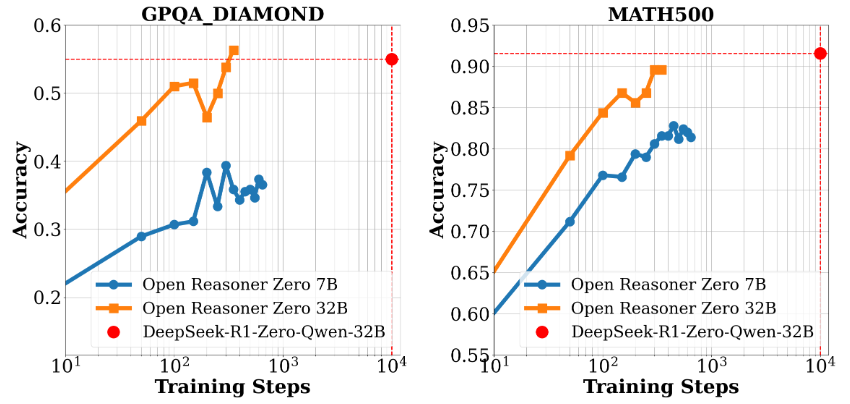

Open-Reasoner-Zero is an open-source reinforcement learning (RL) research project developed by the Open-Reasoner-Zero team and hosted on GitHub. By providing an efficient, scalable, and easy-to-use training framework, it aims to accelerate research in artificial intelligence (AI), especially the exploration toward artificial general intelligence (AGI). The project builds on the Qwen2.5 models (7B and 32B parameter versions), combines OpenRLHF, vLLM, DeepSpeed, and Ray, and releases its complete source code, training data, and model weights. Notably, it achieves a similar level of performance in less than 1/30 of the training steps required by DeepSeek-R1-Zero, which demonstrates efficient use of resources. The project is released under the MIT license, so users are free to use and modify it, which makes it well suited to collaboration among researchers and developers.

Feature List

- Efficient Reinforcement Learning Training: Supports training and generation on a single controller to maximize GPU utilization.

- Complete Open-Source Resources: 57k high-quality training samples, source code, parameter settings, and model weights are provided.

- High-Performance Model Support: Based on Qwen2.5-7B and Qwen2.5-32B, delivering strong reasoning performance.

- Flexible research framework: A modular design makes it easy for researchers to adjust and expand their experiments.

- Docker Support: Provides a Dockerfile to ensure the training environment can be reproduced.

- Performance Evaluation Tools: Includes benchmark data and presentations of evaluation results, such as performance comparisons on GPQA Diamond.

Usage Guide

Installation process

Using Open-Reasoner-Zero requires some technical background. The following is a detailed installation and usage guide for Linux or other Unix-like systems.

Environment preparation

- Install basic dependencies:

- Ensure that Git, Python 3.8+, and the NVIDIA GPU driver (CUDA support is required) are installed on your system.

- Install Docker (recommended version 20.10 or higher) for rapid deployment of the training environment.

sudo apt update
sudo apt install git python3-pip docker.io

- Clone the project repository:

- Run the following command in the terminal to download the project locally:

git clone https://github.com/Open-Reasoner-Zero/Open-Reasoner-Zero.git
cd Open-Reasoner-Zero

- Configure the environment with Docker:

- The project provides a Dockerfile that simplifies building the training environment.

- Run the following in the project root directory:

docker build -t open-reasoner-zero -f docker/Dockerfile .

- After the build is complete, start the container:

docker run -it --gpus all open-reasoner-zero bash

- This opens a shell in a GPU-enabled container with the necessary dependencies pre-installed.

- Install dependencies manually (optional):

- If you are not using Docker, you can install the dependencies manually:

pip install -r requirements.txt

- Make sure OpenRLHF, vLLM, DeepSpeed, and Ray are installed; refer to the project documentation for the specific versions.
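Before training, it can help to confirm these prerequisites in one pass. The script below is a hypothetical helper, not part of the project: it checks that the interpreter is Python 3.8+, that git, docker, and nvidia-smi are on PATH, and that the four frameworks can be found. It assumes the import names openrlhf, vllm, deepspeed, and ray, and it checks presence only, not versions or whether CUDA actually works.

```python
import importlib.util
import shutil
import sys

# Command-line tools named in the prerequisites; nvidia-smi ships with the NVIDIA driver.
TOOLS = ["git", "docker", "nvidia-smi"]
# Assumed import names of the frameworks listed above.
FRAMEWORKS = ["openrlhf", "vllm", "deepspeed", "ray"]


def check_environment(min_python=(3, 8), tools=TOOLS, frameworks=FRAMEWORKS):
    """Return {requirement: bool}; checks presence only, not versions."""
    report = {"python>=%d.%d" % min_python: sys.version_info[:2] >= min_python}
    for tool in tools:
        # shutil.which returns None when the executable is not on PATH
        report[tool] = shutil.which(tool) is not None
    for module in frameworks:
        # find_spec returns None for a top-level module that is not installed
        report[module] = importlib.util.find_spec(module) is not None
    return report


if __name__ == "__main__":
    for name, ok in check_environment().items():
        print(f"{name:<12} {'OK' if ok else 'MISSING'}")
```

Run it both inside and outside the container to see which dependencies the Docker image provides.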

Workflow

1. Training models

- Prepare the training data:

- The project ships with 57k high-quality training samples, located in the data folder.

- If you need custom data, format it as described in the project documentation and replace the default data.

- Start training:

- Run the following command in the container or local environment:

python train.py --model Qwen2.5-7B --data-path ./data

- Parameters:

--model: Selects the model (e.g., Qwen2.5-7B or Qwen2.5-32B).
--data-path: Specifies the training data path.

- The training log is displayed on the master node terminal for easy monitoring of progress.
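To show how the two documented flags might be parsed, here is a mirror of them using Python's argparse. This is a hypothetical sketch, not the project's actual train.py, which may accept additional options; restricting --model to the two Qwen2.5 sizes is an assumption based on the models named above.

```python
import argparse

# Hypothetical mirror of the two documented flags; the real train.py may differ.
SUPPORTED_MODELS = ["Qwen2.5-7B", "Qwen2.5-32B"]


def build_parser():
    parser = argparse.ArgumentParser(description="Sketch of the documented training CLI")
    parser.add_argument("--model", choices=SUPPORTED_MODELS, required=True,
                        help="base model to train")
    parser.add_argument("--data-path", required=True,
                        help="directory containing the training data")
    return parser


if __name__ == "__main__":
    args = build_parser().parse_args()
    # argparse exposes "--data-path" as the attribute "data_path"
    print(f"Training {args.model} on data from {args.data_path}")
```

Using choices makes argparse reject an unsupported model name with a usage error before any expensive setup begins.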

2. Performance evaluation

- Run benchmark tests:

- Compare model performance using the provided evaluation scripts:

python evaluate.py --model Qwen2.5-32B --benchmark gpqa_diamond

- The output shows the model's accuracy on benchmarks such as GPQA Diamond.

- View the evaluation report:

- The project includes charts (e.g., Figure 1 and Figure 2) showing how performance and training time scale; they can be found in the docs folder.
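Accuracy on a multiple-choice benchmark such as GPQA Diamond is the fraction of questions answered correctly. The sketch below illustrates that calculation. The answer-extraction rule (take the last standalone capital letter A to D in the model's response) is an assumption for illustration and is not necessarily what evaluate.py does.

```python
import re

# Hypothetical extraction rule: the last standalone capital letter A-D in the response.
CHOICE_PATTERN = re.compile(r"\b([A-D])\b")


def extract_choice(response):
    """Return the predicted option letter, or None if no option letter appears."""
    matches = CHOICE_PATTERN.findall(response)
    return matches[-1] if matches else None


def accuracy(responses, gold_answers):
    """Fraction of responses whose extracted letter equals the gold letter."""
    if not gold_answers or len(responses) != len(gold_answers):
        raise ValueError("need equal-length, non-empty responses and gold_answers")
    correct = sum(extract_choice(r) == g for r, g in zip(responses, gold_answers))
    return correct / len(gold_answers)


if __name__ == "__main__":
    preds = ["The answer is B.", "I think (C)", "No idea"]
    print(accuracy(preds, ["B", "A", "D"]))
```

A response with no recognizable letter counts as wrong, which is the usual convention for strict multiple-choice scoring.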

3. Modifications and extensions

- Adjust parameters:

- Edit the config.yaml file to change hyperparameters such as the learning rate and batch size:

learning_rate: 0.0001
batch_size: 16

- Add new features:

- Thanks to the modular design, new modules can be added in the src folder. For example, add a new data preprocessing script:

# custom_preprocess.py
def preprocess_data(input_file):
    # custom logic goes here
    pass

Precautions

- Hardware Requirements: GPUs with at least 24GB of memory (e.g., NVIDIA A100) are recommended. Training Qwen2.5-32B requires multiple such GPUs, since its bf16 weights alone occupy about 64GB.

- Log Monitoring: Keep the terminal open during training and check the logs regularly to troubleshoot problems.

- Community Support: Questions can be submitted via GitHub Issues or by contacting the team at hanqer@stepfun.com.
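To make the hardware note concrete, here is back-of-the-envelope memory arithmetic. It is a rough estimate under stated assumptions: nominal parameter counts, 2 bytes per parameter for bf16 weights, and the 16 bytes per parameter commonly cited for mixed-precision Adam training (the accounting used in the DeepSpeed ZeRO paper). It ignores activations, the vLLM KV cache, and any additional models an RL setup may hold, so real requirements are higher.

```python
# Nominal parameter counts (the exact counts are slightly higher).
MODELS = {"Qwen2.5-7B": 7e9, "Qwen2.5-32B": 32e9}

BYTES_BF16_WEIGHTS = 2   # bf16 weights only
BYTES_MIXED_ADAM = 16    # 2 (weights) + 2 (grads) + 12 (fp32 master weights, momentum, variance)


def memory_gb(num_params, bytes_per_param):
    """Memory in GB (10**9 bytes); excludes activations, KV cache, and overhead."""
    return num_params * bytes_per_param / 1e9


if __name__ == "__main__":
    for name, n in MODELS.items():
        print(f"{name}: weights {memory_gb(n, BYTES_BF16_WEIGHTS):.0f} GB, "
              f"training state {memory_gb(n, BYTES_MIXED_ADAM):.0f} GB")
```

For the 32B model this gives 64 GB for the weights and 512 GB for the full training state, which is why such training is sharded across many GPUs (for example with DeepSpeed ZeRO) rather than run on a single card.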

Practical Examples

Suppose you want to train a model based on Qwen2.5-7B:

- Enter the Docker container.

- Run:

python train.py --model Qwen2.5-7B --data-path ./data

- Wait a few hours (depending on your hardware). When training finishes, run:

python evaluate.py --model Qwen2.5-7B --benchmark gpqa_diamond

- Check the output to confirm the performance improvement.
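The same walkthrough can be scripted. The wrapper below is a hypothetical convenience, not part of the project: it assumes it runs from the project root inside the container, where train.py and evaluate.py accept the flags shown above, and it starts the evaluation only if training exits successfully.

```python
import subprocess

# The two commands from the walkthrough above, as argument lists.
PIPELINE = [
    ["python", "train.py", "--model", "Qwen2.5-7B", "--data-path", "./data"],
    ["python", "evaluate.py", "--model", "Qwen2.5-7B", "--benchmark", "gpqa_diamond"],
]


def run_pipeline(steps=PIPELINE, dry_run=False):
    """Run steps in order; check=True raises CalledProcessError on the first failure."""
    executed = []
    for cmd in steps:
        print("$", " ".join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)
        executed.append(cmd)
    return executed


if __name__ == "__main__":
    run_pipeline()
```

Calling run_pipeline(dry_run=True) prints the commands without executing them, which is a cheap way to check them before a multi-hour run.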

With these steps, users can quickly and efficiently get started with Open-Reasoner-Zero, whether to reproduce experiments or to develop new features.

© Copyright notes

Article copyright belongs to AI Sharing Circle; please do not reproduce without permission.
