OpenAI o1 (Reasoning Model) User Guide and Prompting Advice

The OpenAI o1 series models are new large language models trained with reinforcement learning to perform complex reasoning. o1 models think before they answer and can produce a long internal chain of thought before responding to the user. They excel at scientific reasoning: they rank in the 89th percentile on competitive programming questions (Codeforces), place among the top 500 students in the U.S. in a qualifier for the USA Math Olympiad (AIME), and exceed human PhD-level accuracy on a benchmark of physics, biology, and chemistry problems (GPQA).

Two reasoning models are available in the API:

1. o1-preview: an early preview of the o1 model, designed to reason about hard problems using broad general knowledge about the world.

2. o1-mini: a faster and cheaper version of o1 that is particularly good at coding, math, and science tasks that don't require extensive general knowledge.

The o1 models offer significant advances in reasoning, but they are not intended to replace GPT-4o in all use cases.

For applications that need image inputs, function calling, or consistently fast response times, the GPT-4o and GPT-4o mini models remain the right choice. However, if you are developing applications that require deep reasoning and can accommodate longer response times, the o1 models may be an excellent choice. We are looking forward to seeing what you create with them!

🧪 The o1 models are currently in beta

The o1 models are currently in beta, with limited features. Access is limited to developers in usage tier 5 (check your usage tier to confirm), with low rate limits. We are working on adding more features, raising rate limits, and expanding access to more developers in the coming weeks!

Quick Start

Both o1-preview and o1-mini can be accessed through the chat completions endpoint.

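from openai import OpenAI

# The client reads the API key from the OPENAI_API_KEY environment variable.
client = OpenAI()

response = client.chat.completions.create(
    model="o1-preview",
    messages=[
        {
            "role": "user",
            "content": "Write a bash script that takes a matrix represented as a string with format '[1,2],[3,4],[5,6]' and prints the transpose in the same format."
        }
    ]
)

# Only the visible answer is returned; the reasoning tokens are not exposed.
print(response.choices[0].message.content)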

Depending on the amount of reasoning required by the model to solve the problem, these requests can take anywhere from a few seconds to a few minutes.

Beta Restrictions

During the beta, many chat completions API parameters are not yet available. Most notably:

- Modalities: Only text is supported, not images.

- Message types: Only user and assistant messages are supported, not system messages.

- Streaming: Not supported.

- Tools: Tools, function calling, and response format parameters are not supported.

- Logprobs: Not supported.

- Other: `temperature`, `top_p` and `n` are fixed at `1`, while `presence_penalty` and `frequency_penalty` are fixed at `0`.

- Assistants and Batch: These models are not supported in the Assistants API or the Batch API.

Support for some of these parameters will be added as we move out of beta. Future models in the o1 series will include features such as multimodality and tool usage.
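The restrictions above can be sketched as a small pre-flight helper that rewrites an existing chat completions request before it is sent to an o1 model. This is only an illustration: the helper name `adapt_for_o1_beta` is hypothetical, and both dropping the restricted parameters and folding system text into the first user message are assumptions about what an application might want, not behavior described in the guide. It also assumes every message `content` is a plain string.

```python
from typing import Any

# Request parameters that the beta either rejects or fixes to a default value.
UNSUPPORTED_PARAMS = {
    "stream", "tools", "tool_choice", "functions", "function_call",
    "response_format", "logprobs", "top_logprobs",
    "temperature", "top_p", "n", "presence_penalty", "frequency_penalty",
}


def adapt_for_o1_beta(request: dict[str, Any]) -> dict[str, Any]:
    """Return a copy of a chat completions request that fits the beta limits."""
    adapted = {k: v for k, v in request.items() if k not in UNSUPPORTED_PARAMS}

    system_parts = []
    messages = []
    for message in request.get("messages", []):
        if message["role"] == "system":
            # System messages are not supported, so collect their text.
            system_parts.append(message["content"])
        else:
            messages.append(dict(message))  # copy, leave the input untouched

    if system_parts:
        preamble = "\n\n".join(system_parts)
        first_user = next((m for m in messages if m["role"] == "user"), None)
        if first_user is not None:
            first_user["content"] = preamble + "\n\n" + first_user["content"]
        else:
            messages.insert(0, {"role": "user", "content": preamble})

    adapted["messages"] = messages
    return adapted


request = {
    "model": "o1-mini",
    "temperature": 0.2,
    "stream": True,
    "messages": [
        {"role": "system", "content": "Be terse."},
        {"role": "user", "content": "Hi"},
    ],
}
adapted = adapt_for_o1_beta(request)
print(adapted["messages"])
```

The adapted dictionary could then be passed as keyword arguments to `client.chat.completions.create`.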

How reasoning works

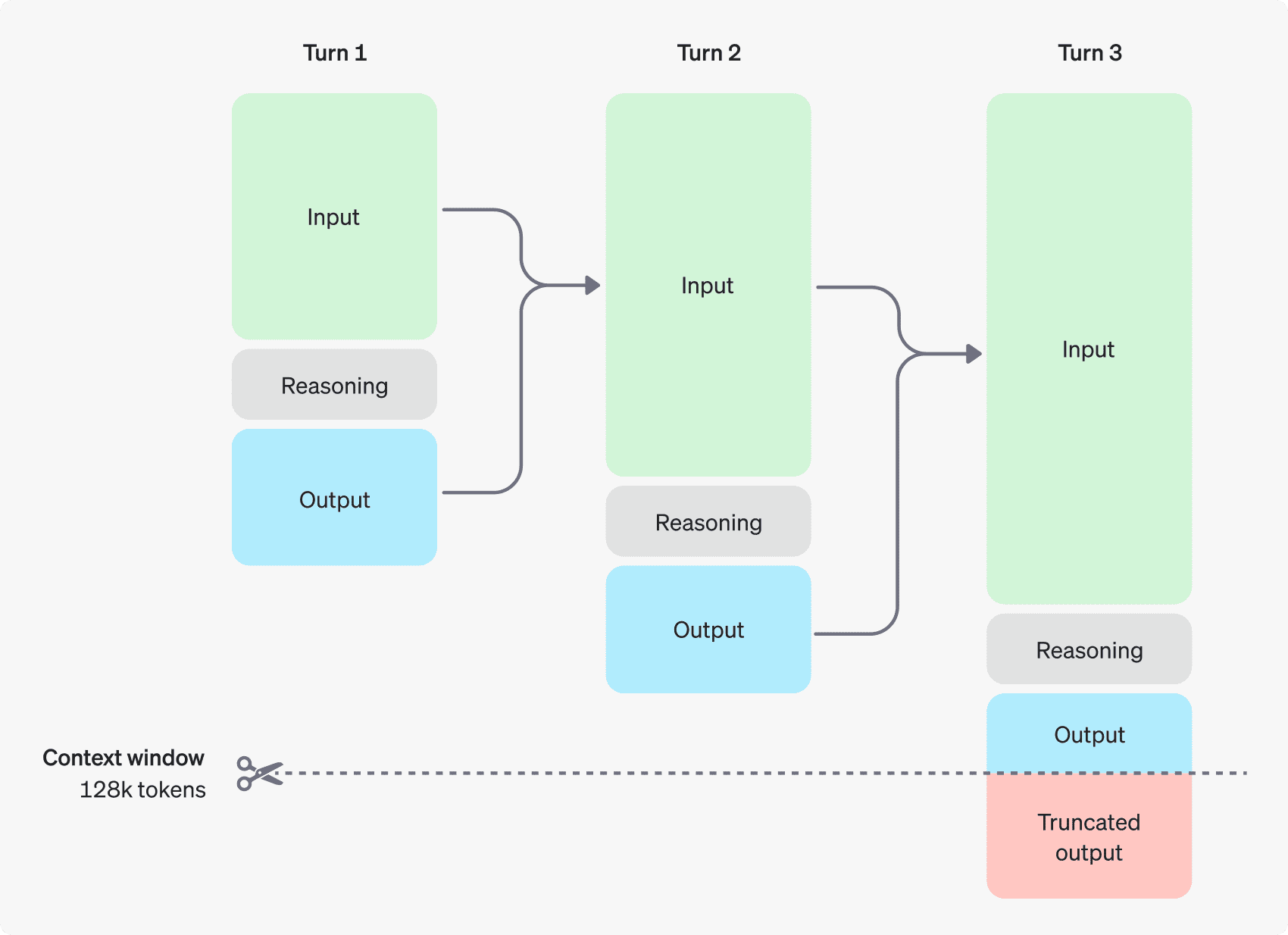

The o1 models introduce reasoning tokens. The models use these reasoning tokens to "think", breaking down their understanding of the prompt and considering multiple approaches to generating a response. After generating reasoning tokens, the model produces an answer as visible completion tokens and discards the reasoning tokens from its context.

Consider a multi-step conversation between a user and an assistant: the input and output tokens from each step are carried over, while the reasoning tokens are discarded.

Reasoning tokens are not retained in context

Although reasoning tokens are not visible via the API, they still occupy space in the model's context window and are billed as output tokens.
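To make the accounting concrete, here is a minimal simulation of the behavior described above. The `Turn` class, the `simulate` function, and all token counts are invented for illustration; the only rules taken from the text are that reasoning tokens occupy the context window during a turn, are billed as output tokens, and are not carried into the next turn.

```python
from dataclasses import dataclass


@dataclass
class Turn:
    input_tokens: int      # new user input added in this turn
    reasoning_tokens: int  # hidden tokens, discarded once the turn ends
    output_tokens: int     # visible completion tokens


def simulate(turns):
    """Return (context_used_during_turn, billed_output_tokens) per turn."""
    carried = 0  # tokens retained from earlier turns
    results = []
    for turn in turns:
        # While the model is answering, reasoning tokens still take up space.
        context_used = (
            carried + turn.input_tokens + turn.reasoning_tokens + turn.output_tokens
        )
        # Reasoning tokens are billed together with the visible output.
        billed_output = turn.reasoning_tokens + turn.output_tokens
        results.append((context_used, billed_output))
        # Only the input and the visible output carry over to the next turn.
        carried += turn.input_tokens + turn.output_tokens
    return results


results = simulate([Turn(100, 500, 50), Turn(20, 300, 40)])
print(results)  # [(650, 550), (510, 340)]
```

In the second turn only 150 tokens are carried over from the first, even though the first turn used 650 tokens of context while it was running.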

Manage Context Window

The o1-preview and o1-mini models offer a context window of 128,000 tokens. Each completion has an upper limit on the number of output tokens, which includes both the invisible reasoning tokens and the visible completion tokens. The maximum output token limits are:

- o1-preview: up to 32,768 tokens

- o1-mini: up to 65,536 tokens
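The limits above can be combined into a quick budget check. The sketch below is hypothetical (the function name `available_output_tokens` is not part of any API) and assumes you already know the prompt's token count, for example from a tokenizer or from a previous response's usage data.

```python
CONTEXT_WINDOW = 128_000
MAX_OUTPUT_TOKENS = {"o1-preview": 32_768, "o1-mini": 65_536}


def available_output_tokens(model: str, prompt_tokens: int) -> int:
    """Tokens left for reasoning plus visible output, given the prompt size."""
    remaining_context = CONTEXT_WINDOW - prompt_tokens
    # The output is capped both by the per-model limit and by what is left
    # of the context window after the prompt.
    return max(0, min(MAX_OUTPUT_TOKENS[model], remaining_context))


print(available_output_tokens("o1-preview", 10_000))  # 32768
print(available_output_tokens("o1-mini", 100_000))    # 28000
```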

When generating a completion, it is important to ensure that there is enough room in the context window for reasoning tokens. Depending on the complexity of the problem, the model may generate anywhere from a few hundred to tens of thousands of reasoning tokens. The exact number of reasoning tokens used is visible in the usage object of the chat completion response object, under `completion_tokens_details`:

usage: {
  total_tokens: 1000,
  prompt_tokens: 400,
  completion_tokens: 600,
  completion_tokens_details: {
    reasoning_tokens: 500
  }
}
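As a small worked example using the numbers above, the visible part of the completion is the difference between `completion_tokens` and `reasoning_tokens`. The helper name `split_completion_tokens` is made up for this sketch, and it assumes the usage data is available as a plain dictionary; with an SDK the usage object may need converting first.

```python
def split_completion_tokens(usage: dict) -> tuple[int, int]:
    """Return (reasoning_tokens, visible_tokens) from a usage dictionary."""
    details = usage.get("completion_tokens_details") or {}
    reasoning = details.get("reasoning_tokens", 0)
    # Visible tokens are whatever remains of the completion after reasoning.
    visible = usage["completion_tokens"] - reasoning
    return reasoning, visible


usage = {
    "total_tokens": 1000,
    "prompt_tokens": 400,
    "completion_tokens": 600,
    "completion_tokens_details": {"reasoning_tokens": 500},
}
print(split_completion_tokens(usage))  # (500, 100)
```

Here 500 of the 600 billed completion tokens were spent on reasoning, and only 100 were returned as visible text.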

Control costs

To manage costs with the o1 series models, you can limit the total number of tokens the model generates (both reasoning and completion tokens) with the `max_completion_tokens` parameter.

In previous models, the `max_tokens` parameter controlled both the number of tokens generated and the number of tokens visible to the user, which were always equal. In the o1 series, however, the total number of generated tokens can exceed the number of visible tokens because of the internal reasoning tokens.

Because some applications may rely on `max_tokens` matching the number of tokens received from the API, the o1 series introduces `max_completion_tokens` to explicitly control the total number of tokens generated by the model, including both reasoning and visible completion tokens. This explicit opt-in ensures that existing applications do not break when they switch to the new models. For all previous models, the `max_tokens` parameter continues to work as before.
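An application that talks to both model families might pick the parameter name per model, roughly as sketched here. The helper `token_limit_kwargs` is hypothetical, and matching on the `o1` name prefix is an assumption made for this example, not an official way to detect the series.

```python
def token_limit_kwargs(model: str, limit: int) -> dict[str, int]:
    """Choose the token-limit parameter that matches the model family."""
    if model.startswith("o1"):
        # Caps reasoning tokens and visible completion tokens together.
        return {"max_completion_tokens": limit}
    # Earlier models: generated tokens and visible tokens are the same thing.
    return {"max_tokens": limit}


print(token_limit_kwargs("o1-mini", 5000))  # {'max_completion_tokens': 5000}
print(token_limit_kwargs("gpt-4o", 500))    # {'max_tokens': 500}

# Possible usage with the client from the Quick Start example:
# client.chat.completions.create(
#     model=model, messages=messages, **token_limit_kwargs(model, 5000)
# )
```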

Allow room for reasoning

If the generated tokens reach the context window limit or the `max_completion_tokens` value you have set, you will receive a chat completion response with `finish_reason` set to `length`. This can happen before any visible completion tokens are produced, meaning you may pay for input and reasoning tokens without receiving a visible response.

To prevent this, make sure there is enough space in the context window, or raise the `max_completion_tokens` value. OpenAI recommends reserving at least 25,000 tokens for reasoning and output when you start experimenting with these models. Once you are familiar with the number of reasoning tokens your prompts require, you can adjust this buffer accordingly.
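One way to act on this is to detect the "ran out before answering" case and retry with a larger budget. The sketch below is an assumption-laden illustration: the function names are invented, the doubling policy is just one possible choice, and a `SimpleNamespace` stands in for a real response object so that the example runs without calling the API.

```python
from types import SimpleNamespace
from typing import Optional

STARTING_BUDGET = 25_000  # the recommended initial reservation from the text


def ran_out_before_answering(response) -> bool:
    """True if the completion hit the token limit with no visible text."""
    choice = response.choices[0]
    content = choice.message.content or ""
    return choice.finish_reason == "length" and not content.strip()


def next_budget(current: int, ceiling: int) -> Optional[int]:
    """Double the budget up to the model's output ceiling, or give up."""
    if current >= ceiling:
        return None
    return min(current * 2, ceiling)


# Stand-in for a response whose budget was consumed entirely by reasoning.
truncated = SimpleNamespace(
    choices=[
        SimpleNamespace(
            finish_reason="length",
            message=SimpleNamespace(content=""),
        )
    ]
)

if ran_out_before_answering(truncated):
    print(next_budget(STARTING_BUDGET, 32_768))  # 32768
```

A caller would resend the request with the returned value as `max_completion_tokens`, and stop retrying once `next_budget` returns `None`.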

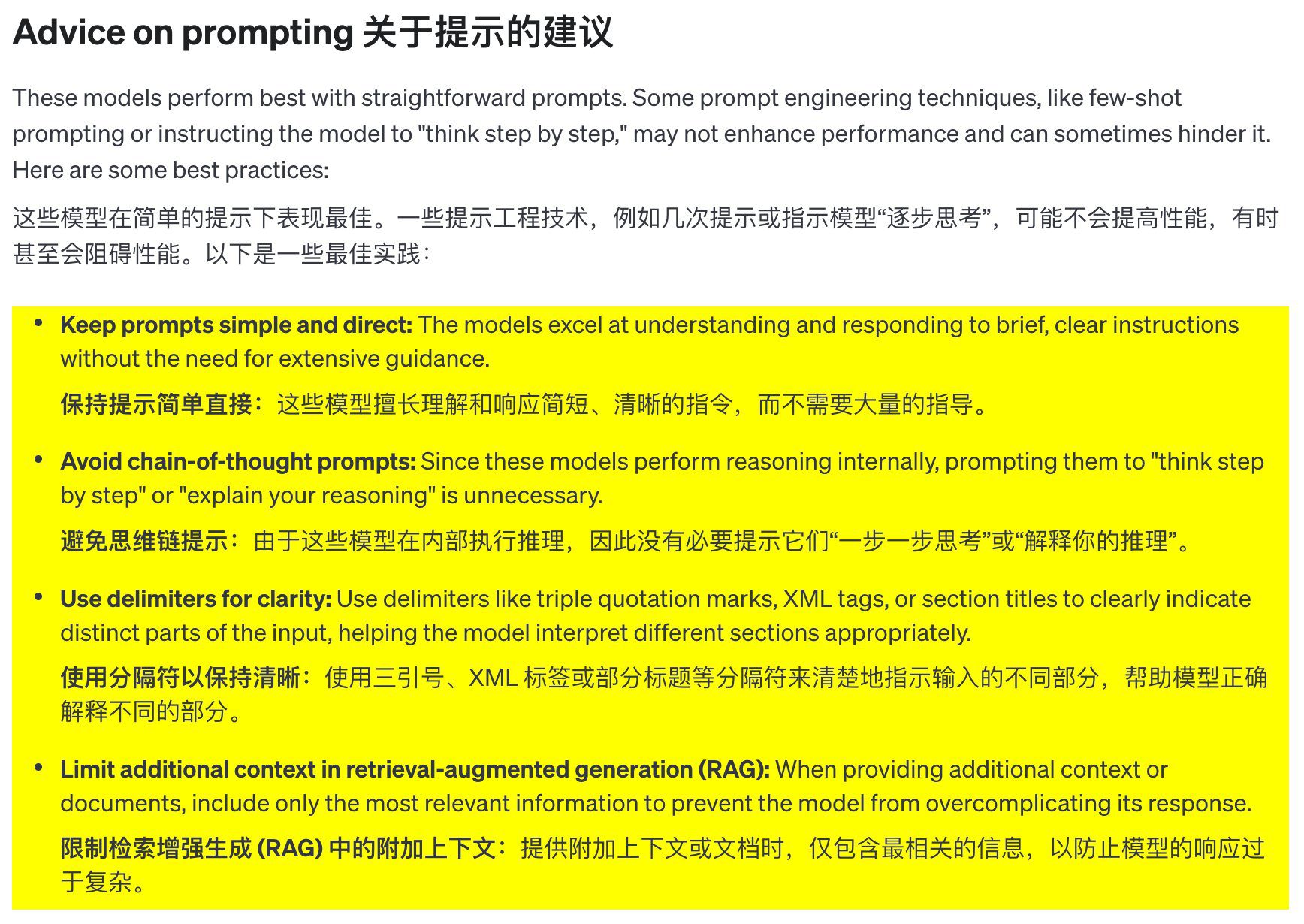

Advice on prompting

These models perform best with clear, straightforward prompts. Some prompt engineering techniques (such as few-shot prompting or instructing the model to "think step by step") may not improve performance and can sometimes be counterproductive. Here are some best practices:

- Keep prompts simple and direct: These models excel at understanding and responding to brief, clear instructions without extensive guidance.

- Avoid chain-of-thought prompts: Since these models reason internally, prompting them to "think step by step" or "explain your reasoning" is unnecessary.

- Use delimiters for clarity: Delimiters such as triple quotation marks, XML tags, or section titles clearly mark distinct parts of the input and help the model interpret each part correctly.

- Limit additional context in retrieval-augmented generation (RAG): When providing additional context or documents, include only the most relevant information to avoid overcomplicating the model's response.
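Two of the practices above, delimiters and limited RAG context, can be sketched together in a small prompt builder. Everything here is illustrative: the function name `build_prompt`, the tag names, and the idea that a retriever supplies `(relevance_score, text)` pairs are assumptions for the example rather than a prescribed format.

```python
def build_prompt(
    instructions: str,
    documents: list[tuple[float, str]],
    max_docs: int = 3,
) -> str:
    """Assemble a prompt with XML-style delimiters and only the top documents.

    `documents` holds (relevance_score, text) pairs from a hypothetical
    retriever; higher scores mean more relevant.
    """
    # Keep only the most relevant documents to avoid cluttering the prompt.
    top = sorted(documents, key=lambda doc: doc[0], reverse=True)[:max_docs]

    parts = ["<instructions>", instructions.strip(), "</instructions>"]
    for index, (_, text) in enumerate(top, start=1):
        parts += [f'<document index="{index}">', text.strip(), "</document>"]
    return "\n".join(parts)


docs = [(0.2, "Office opening hours."), (0.9, "Refund policy."), (0.5, "Shipping times.")]
prompt = build_prompt("Answer the customer's refund question.", docs, max_docs=2)
print(prompt)
```

The instruction text itself stays short and direct, with no "think step by step" wording.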

Examples of prompts

Coding (refactoring)

The OpenAI o1 series models can implement complex algorithms and generate code. The following prompt asks o1 to refactor a React component based on some specific criteria.

from openai import OpenAI

client = OpenAI()

# The prompt combines short, direct instructions with the code to refactor.
prompt = """
Instructions:
- Modify the React component below so that nonfiction books have red text.
- Return only the code in your reply, without any additional formatting such as markdown code blocks.
- For formatting, use four-space indentation and keep lines of code within 80 columns.

const books = [
  { title: 'Dune', category: 'fiction', id: 1 },
  { title: 'Frankenstein', category: 'fiction', id: 2 },
  { title: 'Moneyball', category: 'nonfiction', id: 3 },
];

export default function BookList() {
  const listItems = books.map(book =>
    <li>
      {book.title}
    </li>
  );

  return (
    <ul>{listItems}</ul>
  );
}
"""

response = client.chat.completions.create(
    model="o1-mini",
    messages=[
        {
            "role": "user",
            "content": [
                {
                    "type": "text",
                    "text": prompt
                },
            ],
        }
    ]
)

print(response.choices[0].message.content)

Coding (planning)

The OpenAI o1 family of models also excels at creating multi-step plans. This sample prompt asks o1 to create the file system structure of a complete solution and provide the Python code that implements the required use cases.

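from openai import OpenAI

client = OpenAI()

# A single request asks for both the plan and the full file contents.
prompt = """
I want to build a Python app that takes user questions and looks them up in a database where they are mapped to answers. If there is a close match, it returns the matched answer. If there is no match, it asks the user to provide an answer and stores the question/answer pair in the database. Make a plan for the directory structure this requires, then return the full contents of each file. Only supply your reasoning at the beginning and end, not in the middle of the code.
"""

response = client.chat.completions.create(
    model="o1-preview",
    messages=[
        {
            "role": "user",
            "content": [
                {
                    "type": "text",
                    "text": prompt
                },
            ],
        }
    ]
)

print(response.choices[0].message.content)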

STEM research

The OpenAI o1 family of models performs well in STEM research. Prompts used to support basic research tasks typically show strong results.

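from openai import OpenAI

client = OpenAI()

# An open-ended research question, stated plainly without extra guidance.
prompt = """
What are three compounds we should consider investigating to advance research into new antibiotics? Why should we consider them?
"""

response = client.chat.completions.create(
    model="o1-preview",
    messages=[
        {
            "role": "user",
            "content": prompt
        }
    ]
)

print(response.choices[0].message.content)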

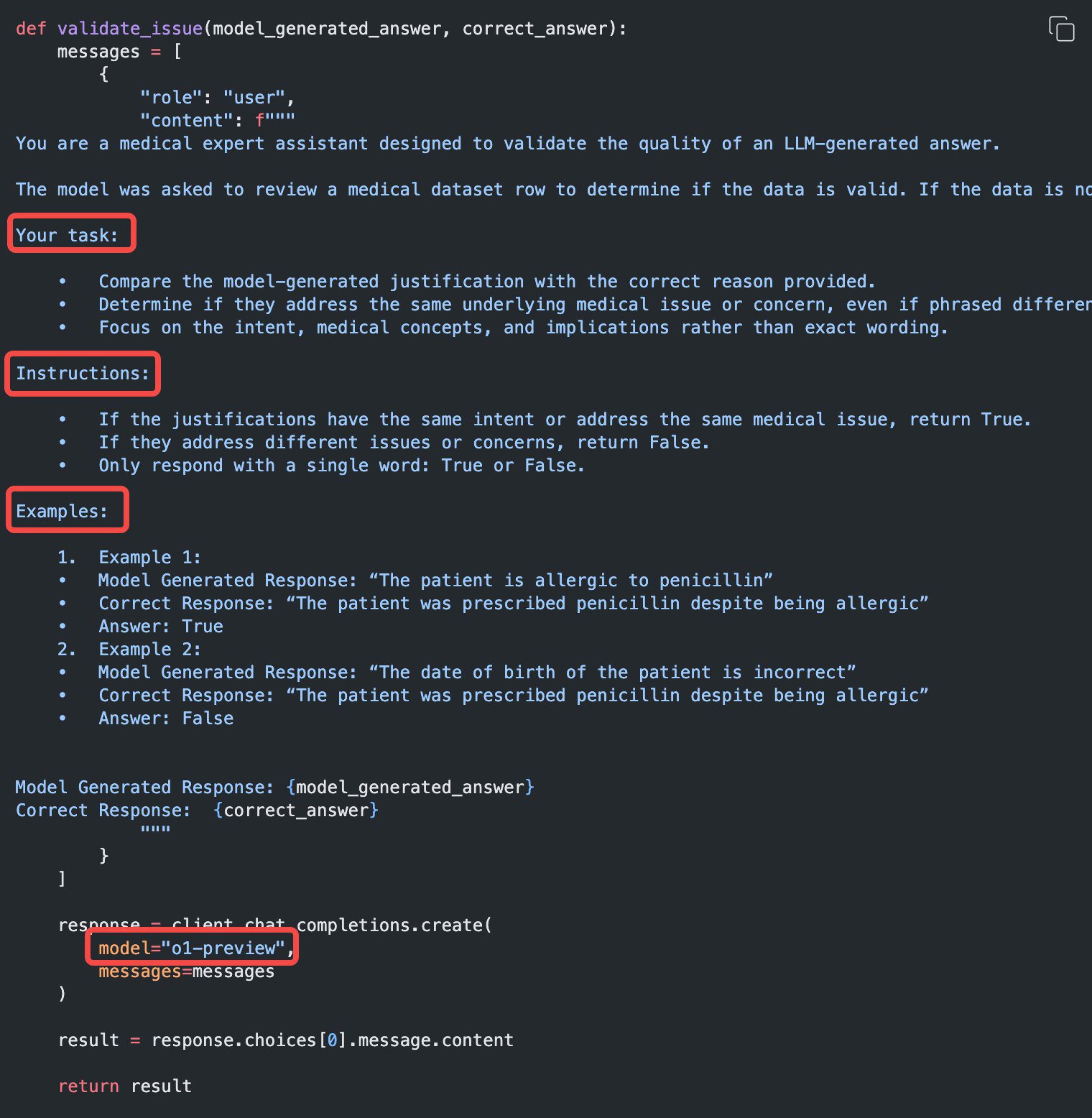

Examples of use cases

Some examples of real-world use cases for o1 can be found in the cookbook.

© Copyright notes

Copyright of this article belongs to AI Sharing Circle; please do not reproduce it without permission.
