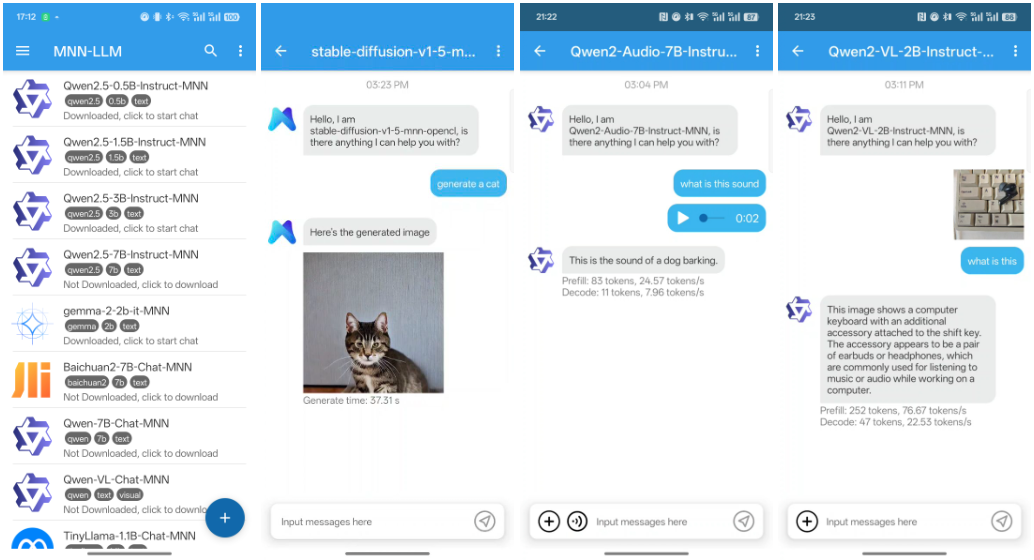

MNN-LLM-Android: MNN Multimodal Language Model for Android Applications

General Introduction

MNN (Mobile Neural Network) is an efficient, lightweight deep learning framework developed by Alibaba and optimized for mobile devices. MNN not only enables fast inference on mobile devices but also supports multimodal tasks, including text generation, image generation, and audio processing. MNN has been integrated into several Alibaba applications, such as Taobao, Tmall, Youku, DingTalk, and Xianyu (Idle Fish), covering over 70 usage scenarios such as live streaming, short video capture, search recommendation, and product image search.

Feature List

- Multimodal support: Supports a wide range of tasks such as text generation, image generation, and audio processing.

- CPU inference optimization: Achieves excellent CPU inference performance on mobile devices.

- Lightweight framework: The framework is designed to be lightweight and suited to the resource constraints of mobile devices.

- Widely used: It is integrated into several Alibaba applications, covering a wide range of business scenarios.

- Open source: The full source code and documentation are provided for easy integration and further development.

Usage Guide

Installation Process

- Download and install: Clone the project code from the GitHub repository.

```bash
git clone https://github.com/alibaba/MNN.git
cd MNN
```

- Build the project: Follow the project's README to configure the build environment, then compile the project.

```bash
mkdir build
cd build
cmake ..
make -j4
```

- Integrate into an Android application: Add the compiled library files to the Android project and configure them by modifying the build.gradle file.

Usage

Multimodal Functions

MNN supports a variety of multimodal tasks, including text generation, image generation, and audio processing. The following are examples of how to use these features:

- Text generation: Generate text with a pre-trained language model.

```python
import MNN

# Load the model and create an inference session
interpreter = MNN.Interpreter("text_model.mnn")
session = interpreter.createSession()
input_tensor = interpreter.getSessionInput(session)

# Preprocess the input text (preprocess_text is a placeholder that this article does not define)
input_data = preprocess_text("input text")
input_tensor.copyFrom(input_data)

# Run inference, then fetch and post-process the output
interpreter.runSession(session)
output_tensor = interpreter.getSessionOutput(session)
output_data = output_tensor.copyToHostTensor()
result = postprocess_text(output_data)
print(result)
```

- Image generation: Generate images with a pre-trained generative model.

```python
import MNN

# Load the model and create an inference session
interpreter = MNN.Interpreter("image_model.mnn")
session = interpreter.createSession()
input_tensor = interpreter.getSessionInput(session)

# Preprocess the input data (preprocess_image is a placeholder that this article does not define)
input_data = preprocess_image("input image")
input_tensor.copyFrom(input_data)

# Run inference, then fetch and post-process the output
interpreter.runSession(session)
output_tensor = interpreter.getSessionOutput(session)
output_data = output_tensor.copyToHostTensor()
result = postprocess_image(output_data)
print(result)
```

- Audio processing: Use a pre-trained audio model for audio generation or processing.

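```python
import MNN

# Load the model and create an inference session
interpreter = MNN.Interpreter("audio_model.mnn")
session = interpreter.createSession()
input_tensor = interpreter.getSessionInput(session)

# Preprocess the input audio (preprocess_audio is a placeholder that this article does not define)
input_data = preprocess_audio("input audio")
input_tensor.copyFrom(input_data)

# Run inference, then fetch and post-process the output
interpreter.runSession(session)
output_tensor = interpreter.getSessionOutput(session)
output_data = output_tensor.copyToHostTensor()
result = postprocess_audio(output_data)
print(result)
```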
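The examples above call helpers such as preprocess_text and postprocess_text without defining them. As a rough illustration of what such a pair might do, here is a minimal sketch for the text case. It assumes a whitespace tokenizer and a four-entry toy vocabulary, both hypothetical; a real language model ships its own tokenizer and vocabulary, and the output would normally need to be wrapped in an MNN tensor before being copied into the session input.

```python
from typing import Dict, List

# Hypothetical toy vocabulary for illustration only; not part of MNN or any real model.
VOCAB: Dict[str, int] = {"<pad>": 0, "<unk>": 1, "hello": 2, "world": 3}
ID_TO_TOKEN: Dict[int, str] = {idx: tok for tok, idx in VOCAB.items()}


def preprocess_text(text: str, max_len: int = 8) -> List[int]:
    """Lowercase, split on whitespace, map tokens to ids, then truncate or pad to max_len."""
    tokens = text.lower().split()
    ids = [VOCAB.get(tok, VOCAB["<unk>"]) for tok in tokens][:max_len]
    ids += [VOCAB["<pad>"]] * (max_len - len(ids))
    return ids


def postprocess_text(ids: List[int]) -> str:
    """Drop padding ids and map the remaining ids back to tokens."""
    tokens = [ID_TO_TOKEN.get(i, "<unk>") for i in ids if i != VOCAB["<pad>"]]
    return " ".join(tokens)


if __name__ == "__main__":
    ids = preprocess_text("Hello world", max_len=4)
    print(ids)
    print(postprocess_text(ids))
```

Padding to a fixed length reflects the common case where a model expects a fixed input shape; whether that applies depends on the model actually being loaded.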

Detailed Operation Procedure

- Create an inference instance: Initialize the MNN model and create an inference session.

```python
import MNN

interpreter = MNN.Interpreter("model.mnn")
session = interpreter.createSession()
```

- Preprocess the input data: Preprocess the input data according to the model type.

```python
input_tensor = interpreter.getSessionInput(session)
# preprocess_data is a placeholder for model-specific preprocessing
input_data = preprocess_data("input data")
input_tensor.copyFrom(input_data)
```

- Run inference: Run the session to perform inference.

```python
interpreter.runSession(session)
```

- Post-process the output data: Get the output and post-process it.

```python
output_tensor = interpreter.getSessionOutput(session)
output_data = output_tensor.copyToHostTensor()
# postprocess_data is a placeholder for model-specific post-processing
result = postprocess_data(output_data)
print(result)
```
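The four steps above can be bundled into one helper. The following is a minimal sketch rather than MNN's own API: run_pipeline, EchoInterpreter, and EchoTensor are hypothetical names introduced here. The stand-in classes only mimic the method names used in this article, so the sketch runs without MNN installed. With a real MNN.Interpreter, the tensor calls may require MNN tensor objects instead of plain Python lists, and copyToHostTensor may expect a destination tensor, depending on the MNN version.

```python
from typing import Any, Callable


class EchoTensor:
    """Hypothetical stand-in for a tensor: stores whatever is copied into it."""

    def __init__(self) -> None:
        self.data: Any = None

    def copyFrom(self, data: Any) -> None:
        self.data = data

    def copyToHostTensor(self) -> Any:
        return self.data


class EchoInterpreter:
    """Hypothetical stand-in for an interpreter: its 'model' copies input to output."""

    def __init__(self) -> None:
        self._input = EchoTensor()
        self._output = EchoTensor()

    def createSession(self) -> object:
        return object()

    def getSessionInput(self, session: object) -> EchoTensor:
        return self._input

    def runSession(self, session: object) -> None:
        self._output.copyFrom(self._input.data)

    def getSessionOutput(self, session: object) -> EchoTensor:
        return self._output


def run_pipeline(interpreter: Any,
                 raw_input: Any,
                 preprocess: Callable[[Any], Any],
                 postprocess: Callable[[Any], Any]) -> Any:
    """Create a session, feed preprocessed input, run it, and post-process the output."""
    session = interpreter.createSession()
    input_tensor = interpreter.getSessionInput(session)
    input_tensor.copyFrom(preprocess(raw_input))
    interpreter.runSession(session)
    output_tensor = interpreter.getSessionOutput(session)
    return postprocess(output_tensor.copyToHostTensor())


if __name__ == "__main__":
    result = run_pipeline(
        EchoInterpreter(),
        "abc",
        preprocess=lambda s: [ord(c) for c in s],
        postprocess=lambda codes: "".join(chr(c) for c in codes),
    )
    print(result)
```

Passing the preprocessing and post-processing functions as arguments keeps the session handling identical across text, image, and audio models, which is the pattern the three examples in this article repeat.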

© Copyright notes

This article is copyrighted by AI Sharing Circle; please do not reproduce it without permission.
