Maestro: A tool that simplifies fine-tuning of mainstream open-source vision-language models

Overview

Maestro is a tool developed by Roboflow to simplify and accelerate the fine-tuning of multimodal models so that everyone can train their own vision-language models. It provides ready-made recipes for fine-tuning popular vision-language models (VLMs) such as Florence-2, PaliGemma 2, and Qwen2.5-VL. Maestro makes fine-tuning more efficient by encapsulating best practices in a core module that handles configuration, data loading, reproducibility, and training loop setup.

Features

- Configuration management: Automatically handles the model's configuration file, simplifying setup.

- Data loading: Supports multiple data formats and automates data preprocessing and loading.

- Training loop setup: Provides standardized training loops to keep the training process reproducible.

- Ready-made recipes: Provides a variety of fine-tuning recipes that users can apply to supported models directly.

- Command Line Interface (CLI): Starts the fine-tuning process with a single command.

- Python API: Provides a flexible Python interface that allows you to customize the fine-tuning process.

- Cookbooks: Detailed tutorials and examples to help users get started quickly.

Usage Guide

Installation

- Creating a Virtual Environment: Since different models may have conflicting dependencies, it is recommended to create a dedicated Python environment for each model.

python -m venv maestro_env
source maestro_env/bin/activate

- Installing dependencies: Install model-specific dependencies as needed.

pip install "maestro[paligemma_2]"

Using the Command Line Interface (CLI)

- Starting fine-tuning: Launch the fine-tuning process from the command line, specifying key parameters such as the dataset location, number of epochs, batch size, optimization strategy, and evaluation metrics.

maestro paligemma_2 train \
  --dataset "dataset/location" \
  --epochs 10 \
  --batch-size 4 \
  --optimization_strategy "qlora" \
  --metrics "edit_distance"
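When the same run has to be launched for several datasets or settings, it can help to assemble the command programmatically. The following is a minimal sketch, not part of Maestro: `build_train_command` and `FLAG_NAMES` are hypothetical names introduced here, and the flag spellings are copied verbatim from the command above (including the mix of hyphen and underscore).

```python
import shlex

# Flag spellings copied from the command shown above.
# This mapping is an illustration, not an official list of Maestro options.
FLAG_NAMES = {
    "dataset": "--dataset",
    "epochs": "--epochs",
    "batch_size": "--batch-size",
    "optimization_strategy": "--optimization_strategy",
    "metrics": "--metrics",
}


def build_train_command(model: str, params: dict) -> list[str]:
    """Hypothetical helper: turn a parameter dict into an argv list."""
    argv = ["maestro", model, "train"]
    for key, value in params.items():
        if key not in FLAG_NAMES:
            raise KeyError(f"unknown parameter: {key}")
        argv += [FLAG_NAMES[key], str(value)]
    return argv


params = {
    "dataset": "dataset/location",
    "epochs": 10,
    "batch_size": 4,
    "optimization_strategy": "qlora",
    "metrics": "edit_distance",
}

command = build_train_command("paligemma_2", params)

# Print a shell-safe version of the command for inspection or logging.
print(shlex.join(command))
```

The resulting list can be passed to `subprocess.run(command, check=True)` inside an environment where Maestro is installed; building a list rather than a string avoids shell-quoting problems with dataset paths that contain spaces.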

Using the Python API

- Importing the training function: Import the training function from the corresponding model module and define the configuration in a dictionary.

from maestro.trainer.models.paligemma_2.core import train

config = {
    "dataset": "dataset/location",
    "epochs": 10,
    "batch_size": 4,
    "optimization_strategy": "qlora",
    "metrics": ["edit_distance"]
}

train(config)
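Because the configuration is a plain dictionary, a typo in a key or a wrong value type may only surface once training has started. The sketch below shows one way to check the dictionary beforehand. `validate_config` is a hypothetical helper, not part of Maestro, and the choice of which keys are required and which types they take is an assumption based only on the example above.

```python
def validate_config(config: dict) -> dict:
    """Hypothetical pre-flight check for the config dictionary shown above."""
    # Assumed required keys and types, inferred from the example only.
    required = {"dataset": str, "epochs": int, "batch_size": int}
    for key, expected_type in required.items():
        if key not in config:
            raise ValueError(f"missing required key: {key}")
        if not isinstance(config[key], expected_type):
            raise TypeError(f"{key} must be of type {expected_type.__name__}")

    # Epoch count and batch size only make sense as positive integers.
    if config["epochs"] < 1 or config["batch_size"] < 1:
        raise ValueError("epochs and batch_size must be at least 1")

    # The example passes metrics as a list of metric names.
    metrics = config.get("metrics", [])
    if not isinstance(metrics, list) or not all(isinstance(m, str) for m in metrics):
        raise TypeError("metrics must be a list of strings")

    return config


config = validate_config({
    "dataset": "dataset/location",
    "epochs": 10,
    "batch_size": 4,
    "optimization_strategy": "qlora",
    "metrics": ["edit_distance"],
})
```

The validated dictionary can then be handed to `train(config)` exactly as in the example above.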

Using Cookbooks

Maestro provides detailed Cookbooks that show how to fine-tune different VLMs on a variety of vision tasks. For example:

- Fine-tuning Florence-2 for Object Detection with LoRA

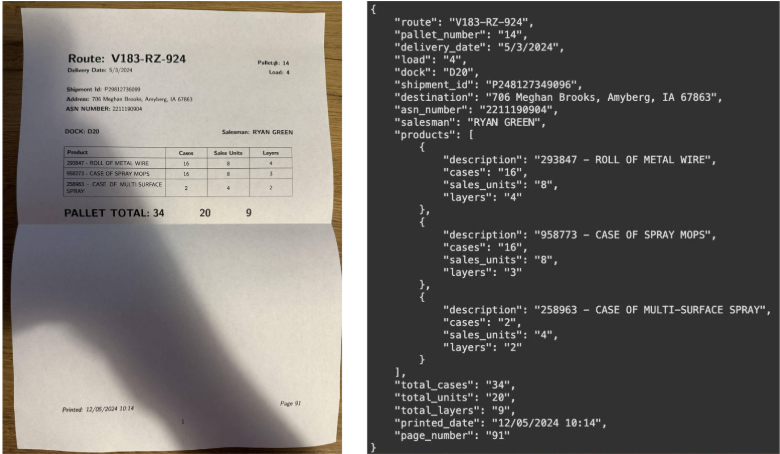

- Fine-tuning PaliGemma 2 for JSON Data Extraction with LoRA

- Fine-tuning Qwen2.5-VL for JSON Data Extraction with QLoRA

© Copyright notice

The copyright of this article belongs to AI Sharing Circle. Please do not reproduce it without permission.
