llama.cpp: An Efficient Inference Tool That Supports Multiple Hardware Platforms and Makes LLM Inference Easy

General Introduction

llama.cpp is a library written in pure C/C++ that simplifies inference with Large Language Models (LLMs). It supports a wide range of hardware platforms, including Apple Silicon, NVIDIA GPUs, and AMD GPUs, and offers several quantization options that increase inference speed and reduce memory usage. The project's goal is high-performance LLM inference with minimal setup, both locally and in the cloud.

Feature List

- Supports multiple hardware platforms including Apple Silicon, NVIDIA GPUs and AMD GPUs

- Provides integer quantization options from 1.5 to 8 bits

- Supports many LLMs, such as LLaMA, Mistral, and Falcon

- Provides an OpenAI-compatible REST API for easy integration

- Supports hybrid CPU+GPU inference

- Provides bindings for multiple programming languages, such as Python, Go, and Node.js

- Provides supporting tools and infrastructure, such as model conversion tools and load balancers
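To make the quantization feature more concrete, here is a minimal Python sketch of block-wise 4-bit quantization, loosely modeled on llama.cpp's Q4_0 format, where blocks of 32 weights share one scale. The function names are hypothetical, and this is an illustration of the idea rather than llama.cpp's actual implementation, which is written in C and stores the scale as fp16.

```python
# Hypothetical helpers illustrating Q4_0-style quantization; not llama.cpp code.
BLOCK_SIZE = 32  # weights per block, as in Q4_0
# Storage per block: 32 x 4-bit values + one 16-bit scale = 144 bits.
BITS_PER_WEIGHT = (BLOCK_SIZE * 4 + 16) / BLOCK_SIZE  # 4.5


def quantize_block(block):
    """Quantize a block of floats to (scale, list of ints in 0..15)."""
    # Use the value with the largest magnitude, keeping its sign,
    # so that it maps to q = 0, i.e. -8 once the offset is removed.
    amax_val = max(block, key=abs)
    scale = amax_val / -8 if amax_val != 0 else 0.0
    inv = 1.0 / scale if scale != 0 else 0.0
    # Shift by 8 so zero maps to 8; +0.5 rounds to nearest; clamp to 4 bits.
    quants = [min(15, max(0, int(x * inv + 8.5))) for x in block]
    return scale, quants


def dequantize_block(scale, quants):
    """Reconstruct approximate floats as (q - 8) * scale."""
    return [(q - 8) * scale for q in quants]


def quantize(weights):
    """Quantize a flat list whose length is a multiple of BLOCK_SIZE."""
    if len(weights) % BLOCK_SIZE:
        raise ValueError("length must be a multiple of BLOCK_SIZE")
    return [quantize_block(weights[i:i + BLOCK_SIZE])
            for i in range(0, len(weights), BLOCK_SIZE)]
```

Each block costs 32 × 4 bits plus a 16-bit scale, about 4.5 bits per weight instead of 16 for fp16, which is where most of the memory saving comes from. The price is a reconstruction error of at most one scale step per weight.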

How to Use

Installation

- Clone the repository:

git clone https://github.com/ggerganov/llama.cpp.git

cd llama.cpp

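- Compile the project. Current versions of llama.cpp are built with CMake, which places the binaries in build/bin/:

cmake -B build

cmake --build build --config Release

Older releases could also be compiled by running make in the repository root, which places the binaries in that directory.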

Guidelines for use

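Model Conversion

llama.cpp provides a variety of tools to convert and quantize models so they run efficiently on different hardware. For example, a Hugging Face model can be converted to GGUF format with the following command (install the Python dependencies from requirements.txt first):

python3 convert_hf_to_gguf.py <model_dir> --outfile <model-f16.gguf> --outtype f16

The converted file can then be quantized, for example to 4-bit Q4_0, with the llama-quantize tool:

./llama-quantize <model-f16.gguf> <model-q4_0.gguf> Q4_0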
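After conversion, a quick sanity check is to read the fixed header at the start of the .gguf file. The sketch below assumes the GGUF v2/v3 layout (little-endian: the 4-byte magic GGUF, a uint32 version, then uint64 tensor and metadata key/value counts). The function names are hypothetical; the gguf Python package maintained in the llama.cpp repository is the more complete option for inspecting files.

```python
import struct
from typing import NamedTuple

# Hypothetical helper names; layout assumed from the GGUF v2/v3 spec.
HEADER_FORMAT = "<4sIQQ"  # magic, version, tensor count, metadata KV count
HEADER_SIZE = struct.calcsize(HEADER_FORMAT)  # 24 bytes


class GGUFHeader(NamedTuple):
    version: int
    tensor_count: int
    metadata_kv_count: int


def parse_gguf_header(data: bytes) -> GGUFHeader:
    """Decode the first HEADER_SIZE bytes of a GGUF file."""
    if len(data) < HEADER_SIZE:
        raise ValueError("data too short to be a GGUF header")
    magic, version, n_tensors, n_kv = struct.unpack_from(HEADER_FORMAT, data)
    if magic != b"GGUF":
        raise ValueError(f"bad magic {magic!r}; not a GGUF file")
    return GGUFHeader(version, n_tensors, n_kv)


def read_gguf_header(path: str) -> GGUFHeader:
    """Read and decode the header of the GGUF file at `path`."""
    with open(path, "rb") as f:
        return parse_gguf_header(f.read(HEADER_SIZE))
```

For example, read_gguf_header("model-f16.gguf") returns the version and counts, or raises ValueError if the file is not GGUF (such as an older GGML file).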

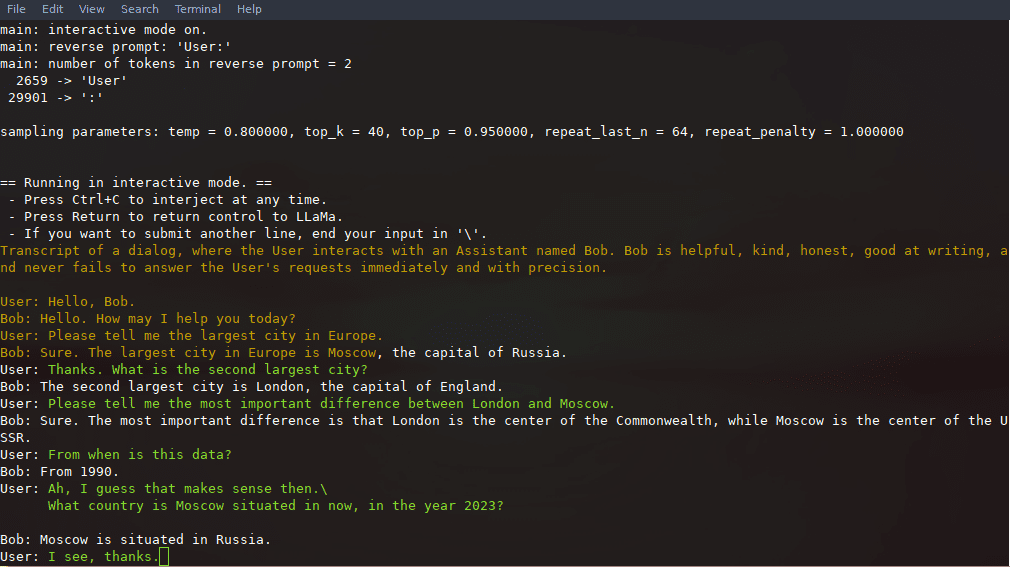

Inference Example

After compiling, run inference from the directory that contains the compiled binaries, for example:

./llama-cli -m models/llama-13b-v2/ggml-model-q4_0.gguf -p "Hello, world!"
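When calling llama-cli from a script, it can help to assemble the arguments programmatically. Below is a minimal Python sketch; build_llama_cli_args is a hypothetical helper, and it uses only the llama-cli options -m, -p, -n (tokens to generate), -c (context size), -b (batch size), and -ngl (number of layers offloaded to the GPU, which enables mixed CPU+GPU inference). The binary and model paths are assumptions to adjust for your build.

```python
import shlex
from typing import List, Optional


def build_llama_cli_args(
    model: str,
    prompt: str,
    n_predict: int = 128,
    ctx_size: Optional[int] = None,
    batch_size: Optional[int] = None,
    gpu_layers: Optional[int] = None,
    binary: str = "./llama-cli",  # assumed location of the compiled binary
) -> List[str]:
    """Assemble an argv list for llama-cli (hypothetical helper)."""
    args = [binary, "-m", model, "-p", prompt, "-n", str(n_predict)]
    if ctx_size is not None:
        args += ["-c", str(ctx_size)]  # context length in tokens
    if batch_size is not None:
        args += ["-b", str(batch_size)]  # batch size for prompt processing
    if gpu_layers is not None:
        args += ["-ngl", str(gpu_layers)]  # layers offloaded to the GPU
    return args


if __name__ == "__main__":
    args = build_llama_cli_args(
        "models/llama-13b-v2/ggml-model-q4_0.gguf", "Hello, world!",
        ctx_size=4096, gpu_layers=99,
    )
    # Print a shell-safe command line; subprocess.run(args) would launch it.
    print(shlex.join(args))
```

Passing a list rather than a single string avoids shell-quoting problems with prompts that contain spaces or punctuation. Depending on the llama.cpp version, llama-cli may start an interactive chat session instead of exiting after one answer, so check ./llama-cli --help before relying on it in automation.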

REST API Usage

llama.cpp also provides an OpenAI API-compatible HTTP server that can be used for local model inference services. Start the server:

./llama-server -m models/llama-13b-v2/ggml-model-q4_0.gguf --port 8080

You can then open the built-in Web UI in a browser at http://localhost:8080, or send inference requests to the API:

curl http://localhost:8080/v1/chat/completions -H "Content-Type: application/json" -d '{"messages": [{"role": "user", "content": "Hello, world!"}]}'
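The same request can be sent from Python using only the standard library. This is a minimal sketch, assuming the server is listening on localhost:8080 and returns the OpenAI-style response shape (choices[0].message.content); the helper names are hypothetical.

```python
import json
import urllib.request

# Assumed endpoint: the OpenAI-compatible chat route on the port used above.
SERVER_URL = "http://localhost:8080/v1/chat/completions"


def build_chat_request(prompt: str, url: str = SERVER_URL) -> urllib.request.Request:
    """Build a POST request carrying a single user message."""
    body = {"messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def extract_reply(response: dict) -> str:
    """Pull the assistant text out of an OpenAI-style chat completion."""
    return response["choices"][0]["message"]["content"]


def chat(prompt: str) -> str:
    """Send one prompt to the running server and return the reply text."""
    with urllib.request.urlopen(build_chat_request(prompt)) as resp:
        return extract_reply(json.load(resp))
```

Calling chat("Hello, world!") requires the server to be running; build_chat_request and extract_reply can be exercised without it, which keeps the request format and response parsing easy to test separately.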

Detailed Workflow

- Model loading: Download a model file in GGUF format (or convert one as described above), place it in a directory such as models/, and load it with the -m option of the command-line tools.

- Inference configuration: Set inference parameters such as context length and batch size with command-line options, for example -c (context size) and -b (batch size).

- API integration: Use the REST API to integrate llama.cpp into existing applications and provide automated inference services.

- Performance optimization: Quantization and hardware acceleration, such as offloading model layers to the GPU, can significantly improve inference speed and efficiency.
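As a rough guide to how quantization and context length affect memory, weight memory is approximately parameters × bits per weight ÷ 8, and an f16 KV cache takes 2 × layers × context length × KV embedding width × 2 bytes. Below is a minimal Python sketch of that arithmetic. The LLaMA-2-13B-like shape (40 layers, embedding width 5120, about 13 billion parameters) and the roughly 4.5 bits per weight for Q4_0 are assumptions for illustration. Real usage is higher because some tensors stay at higher precision and the runtime allocates extra compute buffers.

```python
GIB = 2**30


def weight_bytes(n_params: float, bits_per_weight: float) -> float:
    """Approximate size of the quantized weights in bytes."""
    return n_params * bits_per_weight / 8


def kv_cache_bytes(n_layers: int, n_ctx: int, n_embd_kv: int,
                   bytes_per_elem: int = 2) -> int:
    """Key/value cache size: one K and one V vector per layer per token (f16 by default)."""
    return 2 * n_layers * n_ctx * n_embd_kv * bytes_per_elem


if __name__ == "__main__":
    # Assumed LLaMA-2-13B-like shape; Q4_0 taken as ~4.5 bits per weight.
    w = weight_bytes(13e9, 4.5)
    kv = kv_cache_bytes(n_layers=40, n_ctx=4096, n_embd_kv=5120)
    print(f"weights ~{w / GIB:.2f} GiB, KV cache {kv / GIB:.3f} GiB")
```

Under these assumptions the weights take about 6.8 GiB and a 4096-token context adds 3.125 GiB of KV cache. Halving the context length halves the cache, while moving from fp16 to Q4_0 shrinks the weights by roughly 3.5×.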

© Copyright Notice

Article copyright belongs to AI Sharing Circle. Please do not reproduce without permission.
