Light-R1: 360's open-source, high-performance reasoning model for mathematics

General Introduction

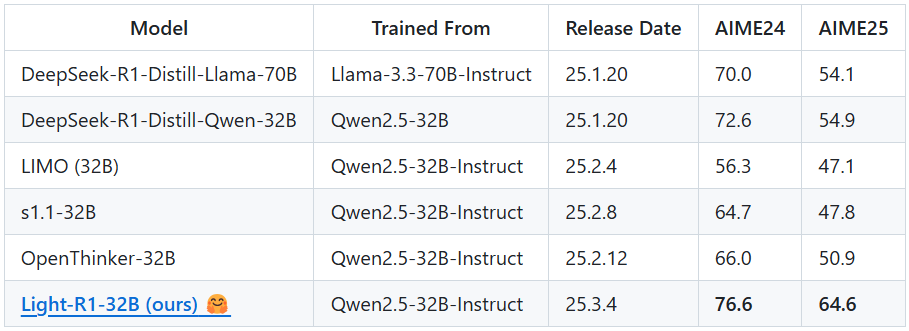

Light-R1 is an open-source AI model developed by the Qihoo360 team that focuses on long chain-of-thought (CoT) reasoning in mathematics. It is built on Qwen2.5-32B-Instruct and trained with curriculum-based supervised fine-tuning (SFT) followed by direct preference optimization (DPO). It scores 76.6 on AIME24 and 64.6 on AIME25, outperforming the previous top performer, DeepSeek-R1-Distill-Qwen-32B (72.6 and 54.9), at a training cost of only about $1,000 (6 hours on 12 H800 machines). The highlight of Light-R1 is that it achieves this efficient, low-cost performance gain starting from a model with no long-chain reasoning capability, using decontaminated data and model merging. The project releases not only the model but also all training datasets and code, with the aim of making long-chain reasoning models more widely available and advancing their development.

Feature List

- Mathematical reasoning: Accurately solves problems from difficult math competitions such as AIME.

- Long-chain reasoning support: A hard-coded `<think>` tag forces the model to reason step by step through complex problems.

- Open-source resources: The complete SFT and DPO training datasets and training scripts based on 360-LLaMA-Factory are released.

- Efficient inference deployment: Supports the vLLM and SGLang frameworks for faster inference and lower resource usage.

- Model evaluation tools: Integrates the DeepScaleR evaluation code to reproduce benchmark results such as AIME24.

- Data decontamination: The training data is decontaminated against benchmarks such as MATH-500 and AIME24/25 to keep evaluation fair.

Usage Guide

Download and Installation

Light-R1 is an open-source project hosted on GitHub. To obtain and deploy the model, follow the steps below:

- Visit the GitHub repository

Open https://github.com/Qihoo360/Light-R1 in your browser to go to the project home page, which contains an introduction to the model, links to the datasets, and descriptions of the code.

- Clone the repository

Clone the project locally by typing the following command in a terminal or command line:

```bash
git clone https://github.com/Qihoo360/Light-R1.git
```

Once the cloning is complete, go to the project directory:

```bash
cd Light-R1
```

- Download model files

The Light-R1-32B model is hosted on Hugging Face at https://huggingface.co/Qihoo360/Light-R1-32B. Follow the instructions on that page to download the model weights, then place them in a suitable local directory (e.g. `models/`); the exact path can be found in the project documentation.

- Install dependencies

Light-R1 recommends vLLM or SGLang for inference, which requires installing the corresponding dependencies. Using vLLM as an example:

- Ensure that Python 3.8 or later is installed.

- Install vLLM:

```bash
pip install vllm
```

- If you need GPU support, make sure CUDA is configured (12 H800 or equivalent GPUs are recommended).

- Prepare the datasets (optional)

To reproduce the training or run custom fine-tuning, download the SFT and DPO datasets from the GitHub page (linked in the "Curriculum SFT & DPO datasets" section). Unzip them into the `data/` directory.

Main Workflows

1. Mathematical reasoning with Light-R1

The core function of Light-R1 is solving math problems, especially complex ones that require long chains of reasoning. The steps are as follows:

- Start the inference service

Go to the project directory in the terminal and run the following command to start the vLLM inference service:

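```bash
python -m vllm.entrypoints.openai.api_server --model path/to/Light-R1-32B --served-model-name Light-R1-32B
```

Replace `path/to/Light-R1-32B` with the actual path to the model files. The `vllm.entrypoints.openai.api_server` entry point exposes the OpenAI-compatible `/v1/completions` endpoint used in the request below, and `--served-model-name` sets the model name that requests must use. Once started, the service listens on port 8000 by default.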

- Send an inference request

Send math problems to the model with a Python script or the curl command. Using curl as an example:

```bash
curl http://localhost:8000/v1/completions \
  -H "Content-Type: application/json" \
  -d '{
    "model": "Light-R1-32B",
    "prompt": "<think>Solve the equation: 2x + 3 = 7</think>",
    "max_tokens": 200
  }'
```

The model returns its step-by-step reasoning followed by the answer, for example:

```json
{
  "choices": [{
    "text": "<think>First, subtract 3 from both sides: 2x + 3 - 3 = 7 - 3, so 2x = 4. Then, divide both sides by 2: 2x / 2 = 4 / 2, so x = 2.</think> The solution is x = 2."
  }]
}
```
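The request can also be sent from a Python script instead of curl. The sketch below uses only the standard library and assumes the vLLM server started above is reachable at `http://localhost:8000` and serves the model under the name `Light-R1-32B`. The helper names `build_payload`, `send_request`, and `split_reasoning` are hypothetical and not part of the Light-R1 repository.

```python
import json
import urllib.request
from typing import Any, Dict, Tuple

# Assumption: an OpenAI-compatible vLLM server is listening here and serves
# the model under the name "Light-R1-32B".
API_URL = "http://localhost:8000/v1/completions"


def build_payload(problem: str, max_tokens: int = 200,
                  model: str = "Light-R1-32B") -> Dict[str, Any]:
    """Build the same request body as the curl example, wrapping the problem in <think> tags."""
    return {
        "model": model,
        "prompt": f"<think>{problem}</think>",
        "max_tokens": max_tokens,
    }


def send_request(payload: Dict[str, Any], url: str = API_URL,
                 timeout: float = 600.0) -> Dict[str, Any]:
    """POST the payload as JSON and return the decoded JSON response."""
    req = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))


def split_reasoning(text: str) -> Tuple[str, str]:
    """Split generated text into (reasoning, answer) at the closing </think> tag.

    If the closing tag is missing (e.g. generation was cut off), everything is
    returned as reasoning and the answer is an empty string.
    """
    body = text.strip()
    if body.startswith("<think>"):
        body = body[len("<think>"):]
    reasoning, sep, answer = body.partition("</think>")
    return reasoning.strip(), (answer.strip() if sep else "")
```

For example, `split_reasoning(send_request(build_payload("Solve the equation: 2x + 3 = 7"))["choices"][0]["text"])` returns the reasoning and the final answer as separate strings. The 600-second timeout is an arbitrary choice that leaves room for long reasoning chains.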

- Notes

The `<think>` tag is hard-coded and must be included in the input to trigger long-chain reasoning.

For AIME-level problems, increase `max_tokens` (e.g., to 500 or more; long-chain solutions to AIME problems often run to thousands of tokens) so the reasoning can complete.
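Because `max_tokens` caps the length of the output, a long reasoning chain can be cut off before the answer appears. The sketch below detects this, assuming the OpenAI-style response format returned by vLLM's `/v1/completions` endpoint, where a choice's `finish_reason` is `"length"` when generation stops at the token limit. The function names and the doubling retry policy with a 32768-token cap are hypothetical illustrations, not project settings.

```python
from typing import Any, Dict

# Hypothetical upper bound on the token budget; not a Light-R1 setting.
MAX_BUDGET = 32768


def reasoning_truncated(response: Dict[str, Any]) -> bool:
    """Return True if the first choice appears to stop before the reasoning finished."""
    choice = response["choices"][0]
    # OpenAI-style responses report finish_reason == "length" when max_tokens was hit.
    if choice.get("finish_reason") == "length":
        return True
    text = choice.get("text", "")
    # A <think> block that was opened but never closed also signals an unfinished chain.
    return "<think>" in text and "</think>" not in text


def next_max_tokens(current: int, factor: int = 2, cap: int = MAX_BUDGET) -> int:
    """Hypothetical retry policy: grow the token budget geometrically, up to a cap."""
    return min(current * factor, cap)
```

A client could, for instance, resend the request with `next_max_tokens(500)` tokens whenever `reasoning_truncated` returns `True`.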

2. Reproducing model training

To reproduce the training process of Light-R1 or build on it, follow the steps below:

- Prepare the training environment

Training is based on the 360-LLaMA-Factory framework. Install the dependencies:

```bash
pip install -r train-scripts/requirements.txt
```

- Run SFT Stage 1

Edit `train-scripts/sft_stage1.sh` to make sure the model and dataset paths are correct, then run:

```bash
bash train-scripts/sft_stage1.sh
```

This stage uses a 76k-example dataset and takes about 3 hours on 12 H800s.

- Run SFT Stage 2

Similarly, run:

```bash
bash train-scripts/sft_stage2.sh
```

This stage uses a harder 3k-example dataset to further improve performance.

- Run DPO

Run:

```bash
bash train-scripts/dpo.sh
```

Starting from the SFT Stage 2 model, DPO further improves reasoning ability.
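The three training stages must run in order, each building on the previous one. The sketch below is a hypothetical Python driver, not part of the repository, that runs the scripts named above one after another and stops at the first failure. It assumes each script has already been edited to point at the previous stage's checkpoint.

```python
import subprocess
from typing import List, Sequence

# Stage scripts named in the steps above, in the order they must run.
STAGES: List[str] = [
    "train-scripts/sft_stage1.sh",
    "train-scripts/sft_stage2.sh",
    "train-scripts/dpo.sh",
]


def run_pipeline(stages: Sequence[str] = STAGES, dry_run: bool = False) -> List[List[str]]:
    """Run each stage with bash in order and return the commands used.

    With dry_run=True nothing is executed; the commands are only returned.
    """
    commands = [["bash", script] for script in stages]
    if dry_run:
        return commands
    for cmd in commands:
        # check=True raises CalledProcessError on a non-zero exit code, so a
        # failed stage never hands a broken checkpoint to the next one.
        subprocess.run(cmd, check=True)
    return commands
```

Calling `run_pipeline(dry_run=True)` first is a cheap way to confirm the stage order before committing GPU hours.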

- Merge the models

Use the provided merge script (e.g. `merge_models.py`) to merge the SFT and DPO models:

```bash
python merge_models.py --sft-model sft_stage2 --dpo-model dpo --output Light-R1-32B
```
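The article does not specify how the merge script combines the checkpoints. One common merging technique is linear weight averaging, where each parameter of the merged model is a weighted mean of the corresponding parameters in the input models. The sketch below illustrates that technique on plain dictionaries standing in for model state dicts; treating it as Light-R1's actual merge method is an assumption, and the function name `linear_merge` is hypothetical.

```python
from typing import Dict, List, Sequence

# Toy stand-in for a model state dict: parameter name -> flat list of weights.
StateDict = Dict[str, List[float]]


def linear_merge(models: Sequence[StateDict], weights: Sequence[float]) -> StateDict:
    """Return the weighted average of state dicts that share names and shapes."""
    if not models or len(models) != len(weights):
        raise ValueError("need at least one model and one weight per model")
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must sum to a positive value")
    norm = [w / total for w in weights]  # normalize so the weights sum to 1
    names = models[0].keys()
    for m in models[1:]:
        if m.keys() != names:
            raise ValueError("models must have the same parameter names")
    merged: StateDict = {}
    for name in names:
        length = len(models[0][name])
        if any(len(m[name]) != length for m in models):
            raise ValueError(f"shape mismatch for parameter {name!r}")
        # Element-wise weighted mean across all input models.
        merged[name] = [
            sum(w * m[name][i] for w, m in zip(norm, models)) for i in range(length)
        ]
    return merged
```

With equal weights this reduces to a plain average of the SFT and DPO parameters; unequal weights let one checkpoint dominate.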

3. Evaluating model performance

Light-R1 provides evaluation tools for benchmarks such as AIME24:

- Run the evaluation script

In the `deepscaler-release/` directory, run:

```bash
python evaluate.py --model Light-R1-32B --benchmark AIME24
```

The results are written to the log, and the score averaged over 64 runs should be close to 76.6.
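The reported score is an average over 64 sampled runs rather than a single pass. Assuming each run yields a correct/incorrect verdict for every benchmark problem, the averaged score is the mean per-run accuracy expressed as a percentage. The input layout and the function name `average_score` below are assumptions for illustration, not the format of the repository's logs.

```python
from typing import Sequence


def average_score(runs: Sequence[Sequence[bool]]) -> float:
    """Mean accuracy in percent across runs.

    runs[k][i] is True if run k solved problem i; every run must cover the
    same problems.
    """
    if not runs:
        raise ValueError("need at least one run")
    n = len(runs[0])
    if n == 0 or any(len(r) != n for r in runs):
        raise ValueError("every run must cover the same, non-empty problem set")
    # Accuracy of each individual run, in percent.
    per_run = [100.0 * sum(r) / n for r in runs]
    return sum(per_run) / len(per_run)
```

Averaging over many samples reduces the run-to-run variance that a single pass over only 30 AIME problems would show.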

Key Features

Long-chain reasoning optimization

Light-R1 uses the special markers `<think>` and `</think>` to ensure that the model reasons step by step through a math problem. For example, given the input:

```
<think>Find the number of positive integers less than 100 that are divisible by 3 or 5.</think>
```

Model output:

```
<think>Let’s use inclusion-exclusion. Numbers divisible by 3: 99 ÷ 3 = 33. Numbers divisible by 5: 99 ÷ 5 = 19. Numbers divisible by 15 (LCM of 3 and 5): 99 ÷ 15 = 6. Total = 33 + 19 - 6 = 46.</think> Answer: 46.
```
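The arithmetic in this example can be checked directly. The divisions in the output are floor divisions, so the count is ⌊99/3⌋ + ⌊99/5⌋ - ⌊99/15⌋ = 33 + 19 - 6. The snippet below compares that inclusion-exclusion formula with a brute-force count; it is a check on the example, not part of Light-R1.

```python
from math import gcd


def count_divisible(limit: int, a: int, b: int) -> int:
    """Count positive integers n < limit divisible by a or b (inclusion-exclusion)."""
    m = limit - 1               # largest integer below the limit
    lcm = a * b // gcd(a, b)    # numbers divisible by both are multiples of lcm(a, b)
    return m // a + m // b - m // lcm


def count_brute_force(limit: int, a: int, b: int) -> int:
    """Direct count, used to cross-check the formula."""
    return sum(1 for n in range(1, limit) if n % a == 0 or n % b == 0)


print(count_divisible(100, 3, 5), count_brute_force(100, 3, 5))  # → 46 46
```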

Data decontamination safeguards

The training data is decontaminated to keep benchmarks such as AIME24/25 fair. Users can inspect the datasets in the `data/` directory to verify that they contain no duplicate problems.
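The article does not describe the exact decontamination procedure. One simple way to check for leaked problems is to normalize each problem's text and flag training items that either match a benchmark problem exactly or share a long word n-gram with one. The sketch below illustrates that idea; the ASCII-only normalization, the n-gram length of 8, and the function names are assumptions, not the project's settings.

```python
import re
from typing import Iterable, List, Set, Tuple


def normalize(text: str) -> List[str]:
    """Lowercase, replace non-alphanumeric ASCII characters with spaces, split into words."""
    return re.sub(r"[^a-z0-9\s]", " ", text.lower()).split()


def ngrams(words: List[str], n: int) -> Set[Tuple[str, ...]]:
    """All contiguous n-word windows (empty if the text has fewer than n words)."""
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def find_contaminated(train: Iterable[str], benchmark: Iterable[str], n: int = 8) -> List[int]:
    """Indices of training problems that match or overlap a benchmark problem."""
    bench_exact: Set[str] = set()
    bench_grams: Set[Tuple[str, ...]] = set()
    for problem in benchmark:
        words = normalize(problem)
        bench_exact.add(" ".join(words))
        bench_grams |= ngrams(words, n)
    flagged = []
    for i, problem in enumerate(train):
        words = normalize(problem)
        # Exact match catches short problems that have no n-grams of length n.
        if " ".join(words) in bench_exact or ngrams(words, n) & bench_grams:
            flagged.append(i)
    return flagged
```

Longer n-grams reduce false positives from common phrasing such as "find the number of", at the cost of missing lightly paraphrased duplicates.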

A template for low-cost training

Light-R1 shows that efficient, low-cost training is feasible, and users can adapt its training scripts to customize models for other domains (e.g., physics).

Tips for use

- Improve reasoning accuracy: Increase `max_tokens`, or average results over multiple runs.

- Debug issues: Review the evaluation logs to analyze the model's reasoning process on specific problems.

- Community Support: Join the WeChat group on the GitHub page to connect with developers.

© Copyright notice

Article copyright: AI Sharing Circle. Please do not reproduce without permission.
