LangChain Plan-and-Execute Agents

Plan-and-execute agents promise faster, cheaper, and more performant task execution than previous agent designs. This article walks through three planning agents built in LangGraph.

We have introduced three "plan-and-execute" style agents on the LangGraph platform. These agents offer several improvements over the classic Reasoning and Acting (ReAct) style agent.

⏰ First, they can execute multistep workflows faster, because the large agent does not need to be consulted again after every action. Each subtask can be completed without an additional large language model (LLM) call, or with a call to a lighter-weight LLM.

💸 Second, they can reduce costs compared to ReAct agents. When subtasks do need LLM calls, these usually go to smaller, domain-specific models. The large model is only invoked for (re)planning steps and for generating the final response.

🏆 Finally, because the planner is forced to explicitly "think through" all the steps needed to complete the entire task, these agents can perform better in terms of task completion rate and quality. Generating the full reasoning steps is a proven prompting technique for improving outcomes. Breaking the problem into subtasks also allows more focused and efficient task execution.

Background

Over the past year, agents and state machines based on language models have emerged as a promising design paradigm for building flexible and effective AI products.

At its core, an agent uses a language model as a generalized problem-solving tool, connecting it to external resources for answering questions or performing tasks.

Typically, agents based on language models go through the following main steps:

1. Propose action: the language model generates text that either responds directly to the user or is passed to a function.

2. Execute action: your code invokes other software to do things such as query a database or call an API.

3. Observe: react to the response from the tool call, either by calling another function or by responding to the user.

The ReAct agent is a good example of this. It guides the language model through a repeated loop of thought, action, and observation:

Thought: I should call Search() to look up the score of the current match.

Action: Search("What is the score of the current X match?")

Observation: Current score is 24-21

... (Repeat N times)

This is a typical ReAct-style agent trajectory.
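The loop behind such a trajectory can be sketched in a few lines of plain Python. This is only an illustration, not LangGraph code: `fake_llm`, `TOOLS`, and `react_loop` are hypothetical names, and the "LLM" is a scripted stand-in so the example runs without any model. The point it shows is that every pass through the loop costs one model call.

```python
import re

# Hypothetical stand-in for a language model: it replays a scripted trajectory.
def fake_llm(transcript: str) -> str:
    if "Observation:" not in transcript:
        return 'Thought: I should look up the score.\nAction: Search("current score")'
    return "Final Answer: The score is 24-21."

# Hypothetical tool registry; a real agent would call a search API here.
TOOLS = {"Search": lambda query: "Current score is 24-21"}

def react_loop(question: str, max_steps: int = 5):
    """Run thought/action/observation cycles until the model answers."""
    transcript = f"Question: {question}"
    llm_calls = 0
    for _ in range(max_steps):
        step = fake_llm(transcript)  # one model call per loop iteration
        llm_calls += 1
        transcript += "\n" + step
        if step.startswith("Final Answer:"):
            return step.split("Final Answer:", 1)[1].strip(), llm_calls
        match = re.search(r'Action: (\w+)\("(.*)"\)', step)
        if match is None:
            raise ValueError(f"could not parse an action from: {step!r}")
        tool_name, tool_input = match.groups()
        observation = TOOLS[tool_name](tool_input)  # execute the action
        transcript += f"\nObservation: {observation}"
    raise RuntimeError("no final answer within max_steps")

answer, llm_calls = react_loop("What is the score of the current match?")
print(answer, llm_calls)
```

Even in this toy run, one tool call requires two model calls: one to choose the action and one to read the observation and answer.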

This approach uses chain-of-thought prompting so that only a single action decision is made at each step. While this can be effective for simple tasks, it has some major drawbacks:

1. Each tool call requires a language model call.

2. The language model plans only one subproblem at a time. This can lead to sub-optimal trajectories, because the model is never forced to "reason" about the whole task.

An effective way to overcome these two shortcomings is to include an explicit planning step. Below are designs of this kind that we have implemented in LangGraph.

Plan-and-Execute

Plan-and-execute agent

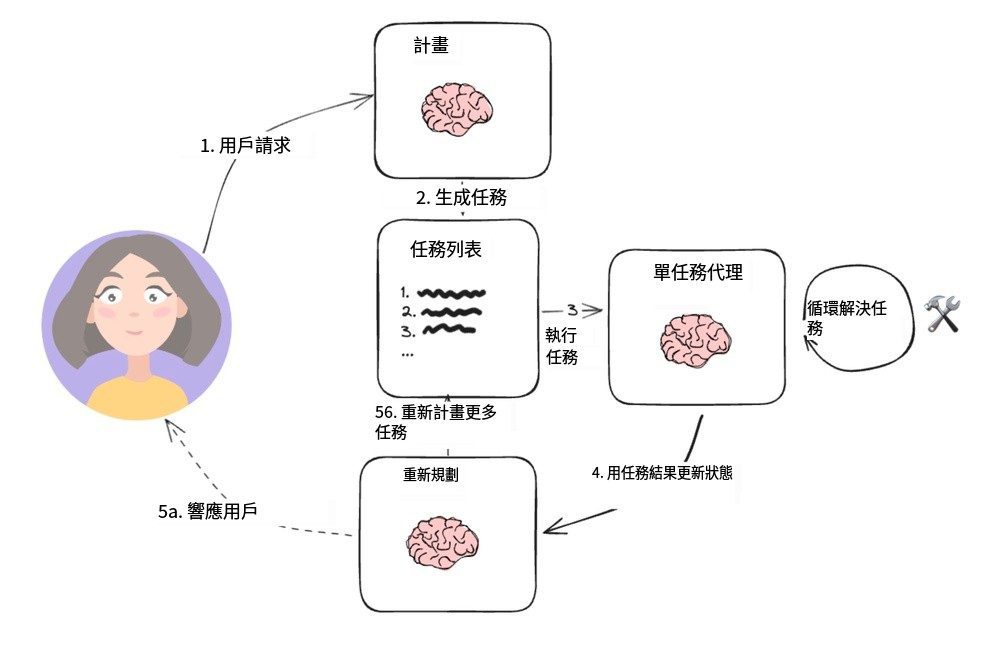

This simple architecture is based on the [Plan-and-Solve Prompting] paper by Wang et al. and draws on Yohei Nakajima's [BabyAGI] project. It has become the typical planning agent architecture and contains two main components:

1. A planner, which prompts a language model (LLM) to generate a multi-step plan for completing a complex task.

2. One or more executors, which take the user's query and a single step of the plan and invoke one or more tools to complete that step.

Once execution is finished, the agent is called again with a re-planning prompt. At that point it decides whether to finish with a response or to generate a follow-up plan (if the original plan did not reach its goal).

This agent design avoids calling the large planner LLM for every tool invocation. However, tools must still be invoked serially, and because the design does not support variable assignment, an LLM call is still needed for each task.
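The control flow described above can be sketched as follows. This is a minimal illustration in plain Python rather than the LangGraph implementation; `plan_and_execute` and the three stub callables are hypothetical names, and the stubs stand in for what would be LLM and tool calls in a real agent.

```python
from __future__ import annotations

from typing import Callable

def plan_and_execute(
    task: str,
    planner: Callable[[str], list[str]],
    executor: Callable[[str, str], str],
    replanner: Callable[[str, list[tuple[str, str]]], str | list[str]],
    max_rounds: int = 3,
) -> str:
    """Plan once, run every step, then let the replanner finish or extend the plan."""
    past_steps: list[tuple[str, str]] = []
    plan = planner(task)  # large-model call
    for _ in range(max_rounds):
        for step in plan:  # steps run one after another
            past_steps.append((step, executor(task, step)))
        decision = replanner(task, past_steps)  # large-model call
        if isinstance(decision, str):
            return decision  # a string means "final response"
        plan = decision  # a list means "follow-up plan"
    raise RuntimeError("no final response within max_rounds")

# Hypothetical stubs so the sketch runs without a model or tools.
def stub_planner(task: str) -> list[str]:
    return ["find the two teams", "find each team's quarterback"]

def stub_executor(task: str, step: str) -> str:
    return f"result of '{step}'"

def stub_replanner(task: str, past_steps: list[tuple[str, str]]) -> str:
    return f"Answered after {len(past_steps)} steps"

result = plan_and_execute("Who are the quarterbacks?", stub_planner, stub_executor, stub_replanner)
print(result)
```

In this sketch the planner and replanner are the only places a large model would be needed; the executor could be backed by a smaller model or by tools alone.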

Reasoning WithOut Observations (ReWOO)

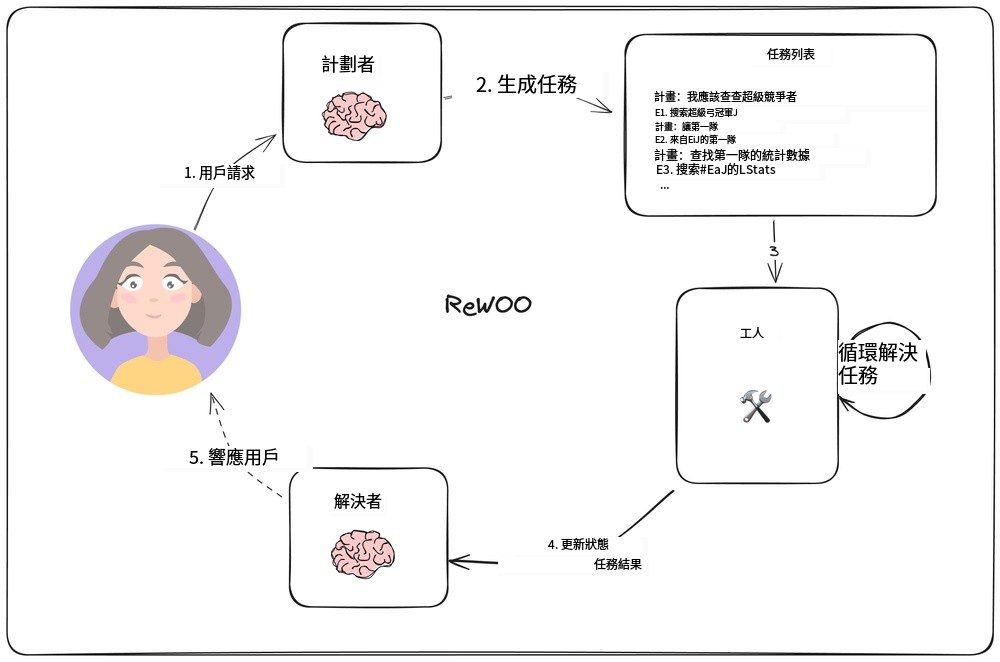

In the [ReWOO] paper, Xu et al. propose an agent that removes the need to call a large language model (LLM) for every task while still allowing later tasks to depend on the results of earlier ones. They achieve this by permitting variable assignment in the planner's output. The accompanying diagram shows the design of this agent.

ReWOO agent model

The planner generates a plan list in which "Plan" (reasoning) lines alternate with "E#" lines. For example, for the query "What are the quarterback statistics for this year's Super Bowl contenders?", the planner might generate the following plan:

Plan: I need to know the teams playing in the Super Bowl this year

E1: Search[Who is playing in the Super Bowl?]

Plan: I need to know the quarterback of each team

E2: LLM[Quarterback of the first team in #E1]

Plan: I need to know the quarterback of each team

E3: LLM[Quarterback of the second team in #E1]

Plan: I need to look up the stats of the first quarterback

E4: Search[Stats for #E2]

Plan: I need to look up the stats of the second quarterback

E5: Search[Stats for #E3]

Note how the planner can refer to previous outputs through syntax such as `#E2`. This means it can execute a series of tasks without having to re-plan each time.

The "worker" node loops through each task and assigns its output to the corresponding variable. When later tasks are called, these variables are replaced with their results.

Finally, the "solver" integrates all the outputs into a final answer.
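The worker's variable substitution can be sketched with a regular expression over a plan in the format shown earlier. This is an illustrative sketch, not the ReWOO or LangGraph code: `STEP`, `run_worker`, and the two stub tools are hypothetical names, and the plan text is a shortened version of the example.

```python
import re

# Shortened example plan in the "Plan" / "E#" format.
PLAN = """\
Plan: I need to know the teams playing in the Super Bowl this year
E1: Search[Who is playing in the Super Bowl?]
Plan: I need to know the first team's quarterback
E2: LLM[Quarterback of the first team in #E1]
Plan: I need to look up the stats of that quarterback
E3: Search[Stats for #E2]
"""

# Matches lines such as "E2: LLM[...]" and captures name, tool, and argument.
STEP = re.compile(r"^(E\d+):\s*(\w+)\[(.*)\]$", re.MULTILINE)

def run_worker(plan: str, tools: dict) -> dict:
    """Run each E# step in order, replacing #E references with earlier results."""
    results = {}
    for name, tool, arg in STEP.findall(plan):
        arg = re.sub(r"#(E\d+)", lambda m: results[m.group(1)], arg)
        results[name] = tools[tool](arg)
    return results

# Hypothetical stub tools; real ones would call a search API or a small LLM.
tools = {
    "Search": lambda query: f"<search results for: {query}>",
    "LLM": lambda prompt: f"<llm answer to: {prompt}>",
}

results = run_worker(PLAN, tools)
for name, value in results.items():
    print(name, "=", value)
```

A solver step would then pass the plan together with `results` to an LLM in a single call to produce the final answer.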

This agent design can be more efficient than a naive plan-and-execute agent, since each task receives only the context it needs (its inputs and variable values).

However, this design is still based on sequential task execution, which may result in a longer overall runtime.

LLMCompiler

LLMCompiler Agent

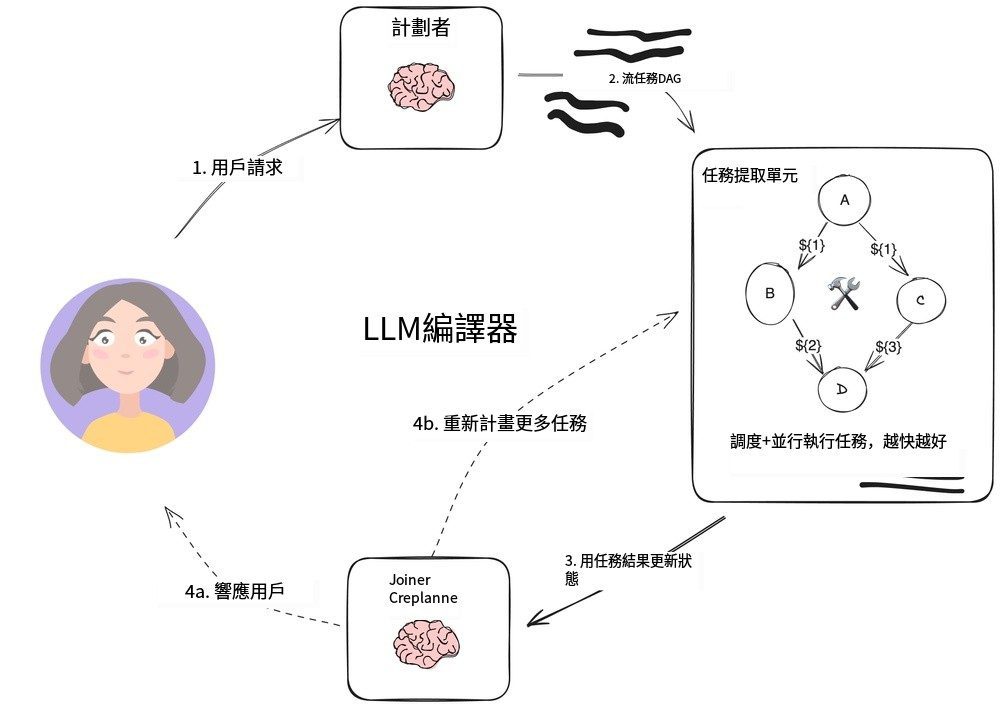

LLMCompiler, by [Kim et al.], is an agent architecture designed to further speed up task execution beyond the plan-and-execute and ReWOO agents described above, and even beyond OpenAI's parallel tool calling.

The LLMCompiler consists of the following main components:

1. Planner: generates a directed acyclic graph (DAG) of tasks. Each task contains a tool, its arguments, and a list of dependencies.

2. Task Fetching Unit (TFU): schedules and executes the tasks. It accepts a stream of tasks and schedules each one as soon as its dependencies are satisfied. Since many tools involve further calls to search engines or LLMs, this parallel execution can significantly increase speed (up to 3.6x, according to the paper).

3. Joiner: dynamically re-plans or finishes based on the entire history of the run, including the task execution results. This is an LLM step that decides whether to respond with the final answer or to pass the progress back to the planner for further work.

The key ideas that make this architecture faster are:

- The planner's output is streamed; task parameters and their dependencies are emitted as soon as they are produced.

- The task fetching unit receives the streamed tasks and schedules each one once all of its dependencies are satisfied.

- Task arguments can be variables, i.e., the outputs of previous tasks in the directed acyclic graph. For example, the model can call search("${1}") to search for the content generated by task 1. This lets the agent work more efficiently than OpenAI's ordinary parallel tool calls.

By organizing tasks into a directed acyclic graph, the agent saves valuable time when invoking tools, which leads to a better overall user experience.
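The dependency-driven scheduling can be sketched with `asyncio`. This is a simplified illustration under stated assumptions, not the LLMCompiler implementation: the task dictionaries, `run_dag`, and the stub tools are hypothetical, and the full task list is given up front instead of being streamed from a planner.

```python
import asyncio
import re

# Hypothetical planner output: each task has a tool, arguments, and dependencies.
TASKS = [
    {"id": 1, "tool": "search", "args": "team A quarterback", "deps": []},
    {"id": 2, "tool": "search", "args": "team B quarterback", "deps": []},
    {"id": 3, "tool": "join", "args": "${1} vs ${2}", "deps": [1, 2]},
]

async def search(query: str) -> str:
    await asyncio.sleep(0.01)  # stands in for a slow network call
    return f"result({query})"

async def join(text: str) -> str:
    return text

TOOLS = {"search": search, "join": join}

async def run_dag(tasks, tools):
    """Start every task at once; each waits only for its own dependencies."""
    done = {task["id"]: asyncio.Event() for task in tasks}
    results = {}

    async def run(task):
        for dep in task["deps"]:
            await done[dep].wait()
        # Replace ${n} placeholders with the output of task n.
        args = re.sub(r"\$\{(\d+)\}", lambda m: results[int(m.group(1))], task["args"])
        results[task["id"]] = await tools[task["tool"]](args)
        done[task["id"]].set()

    await asyncio.gather(*(run(task) for task in tasks))
    return results

results = asyncio.run(run_dag(TASKS, TOOLS))
print(results[3])
```

Tasks 1 and 2 have no dependencies, so they run concurrently; task 3 starts only after both have finished and their outputs have been substituted into its arguments.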

Conclusion

These three agent architectures are prototypes of the plan-and-execute design pattern, which separates the LLM-driven "planner" from the tool execution runtime. If your application requires multiple tool or API calls, these approaches can shorten the time it takes to reach a final result and help you cut costs by reducing the number of calls to more powerful LLMs.

© Copyright notice

Copyright of this article belongs to AI Sharing Circle. Please do not reproduce it without permission.
