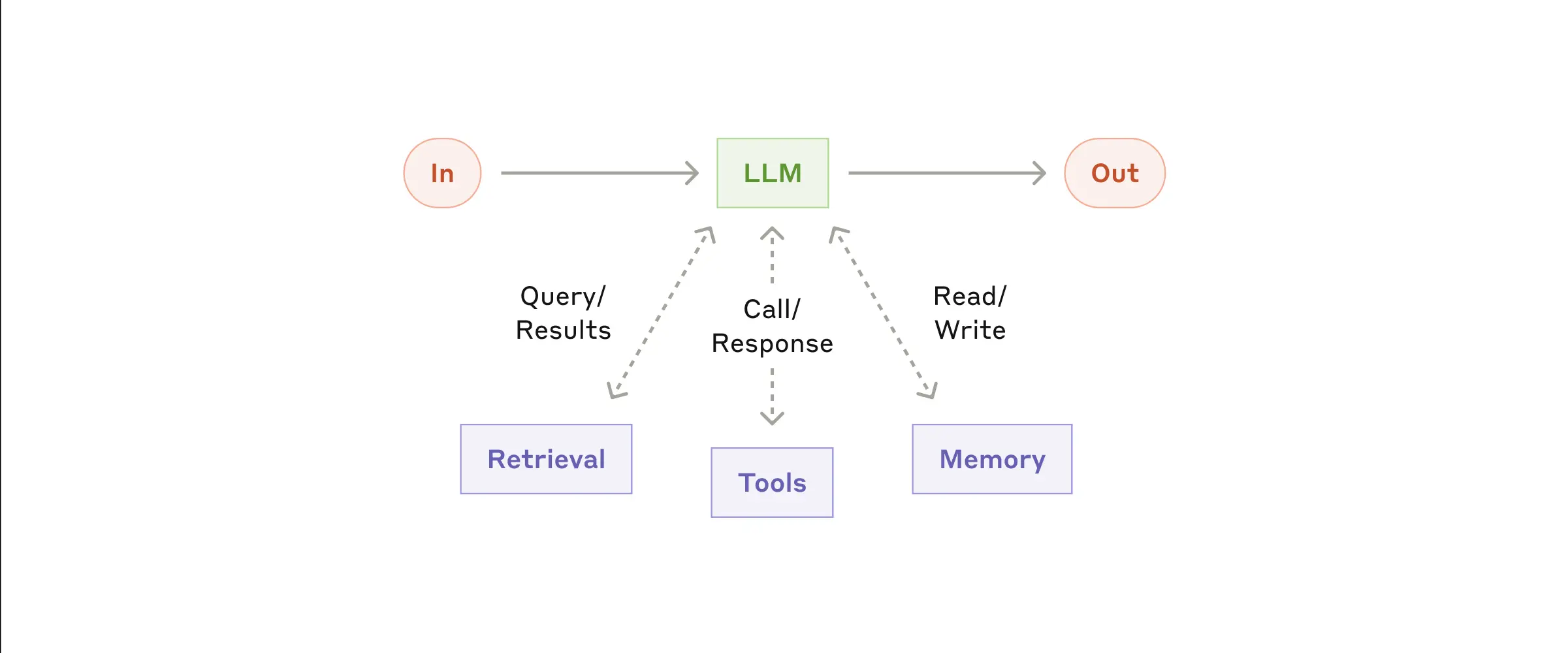

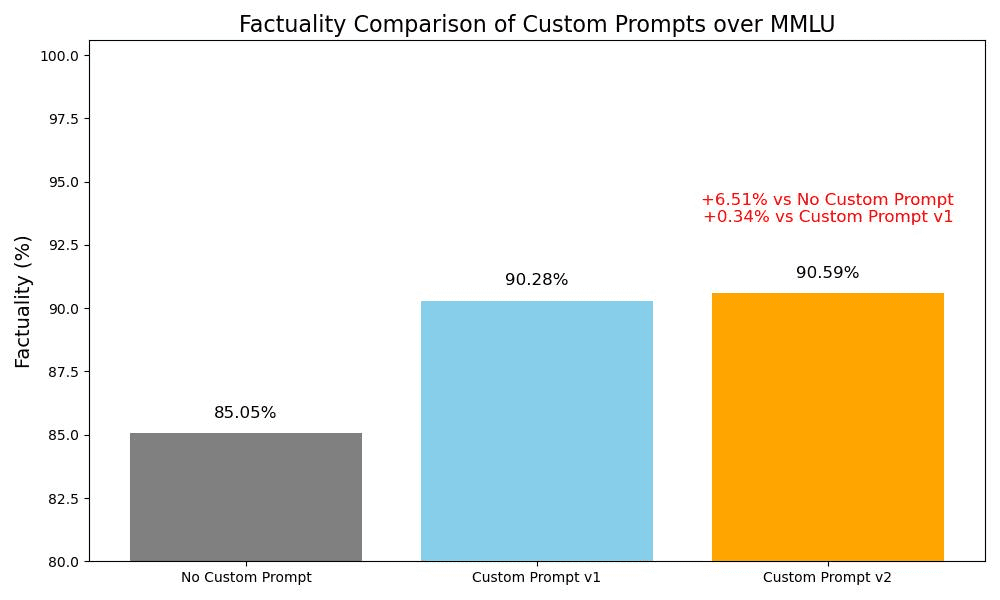

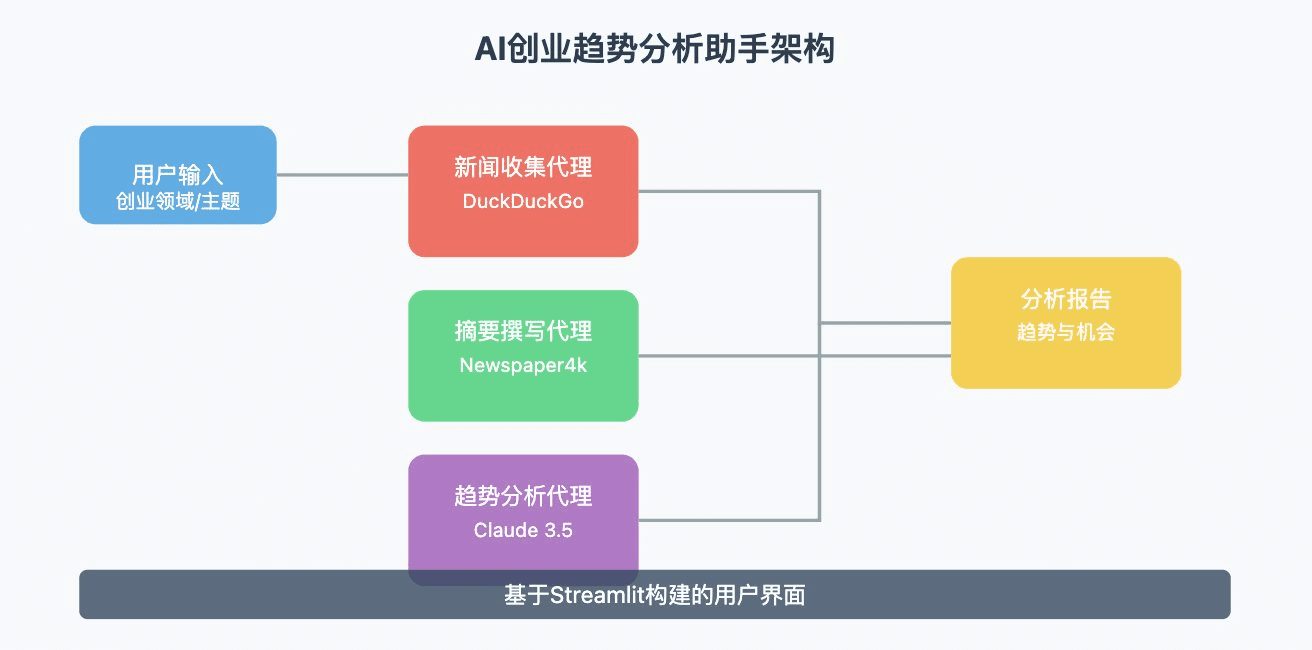

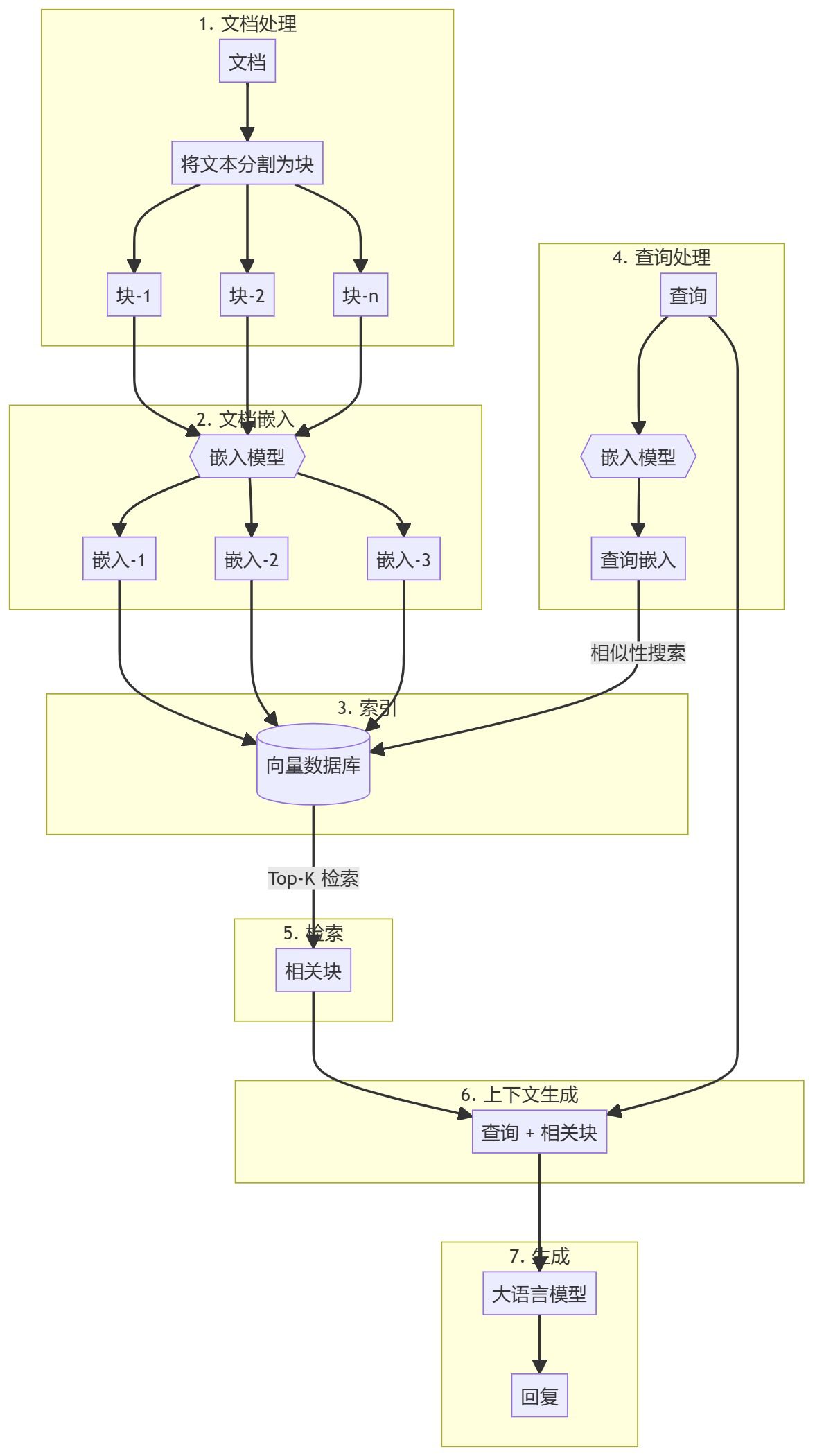

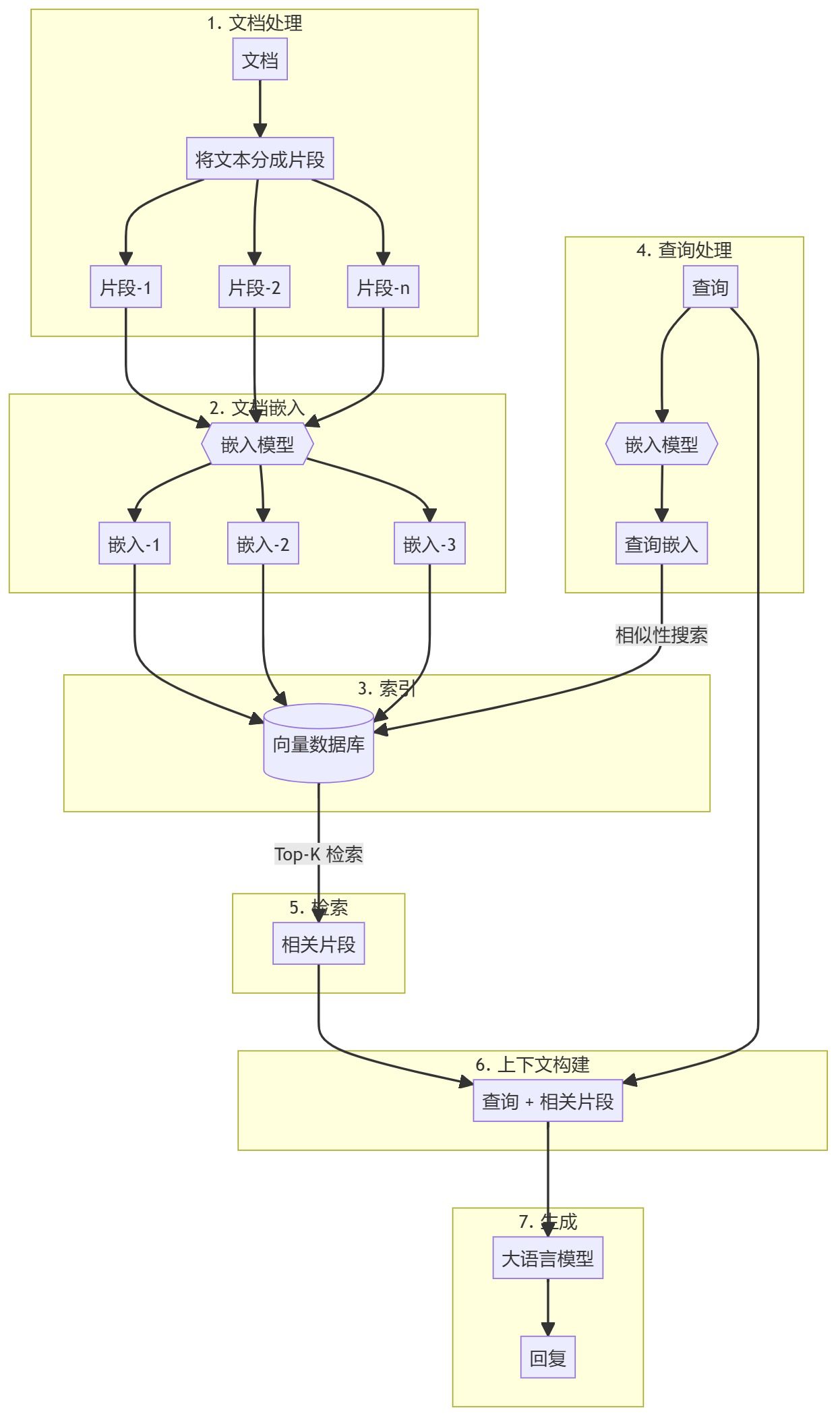

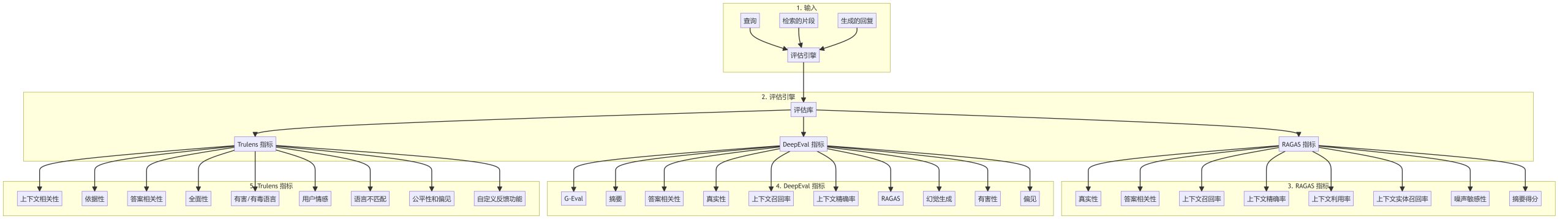

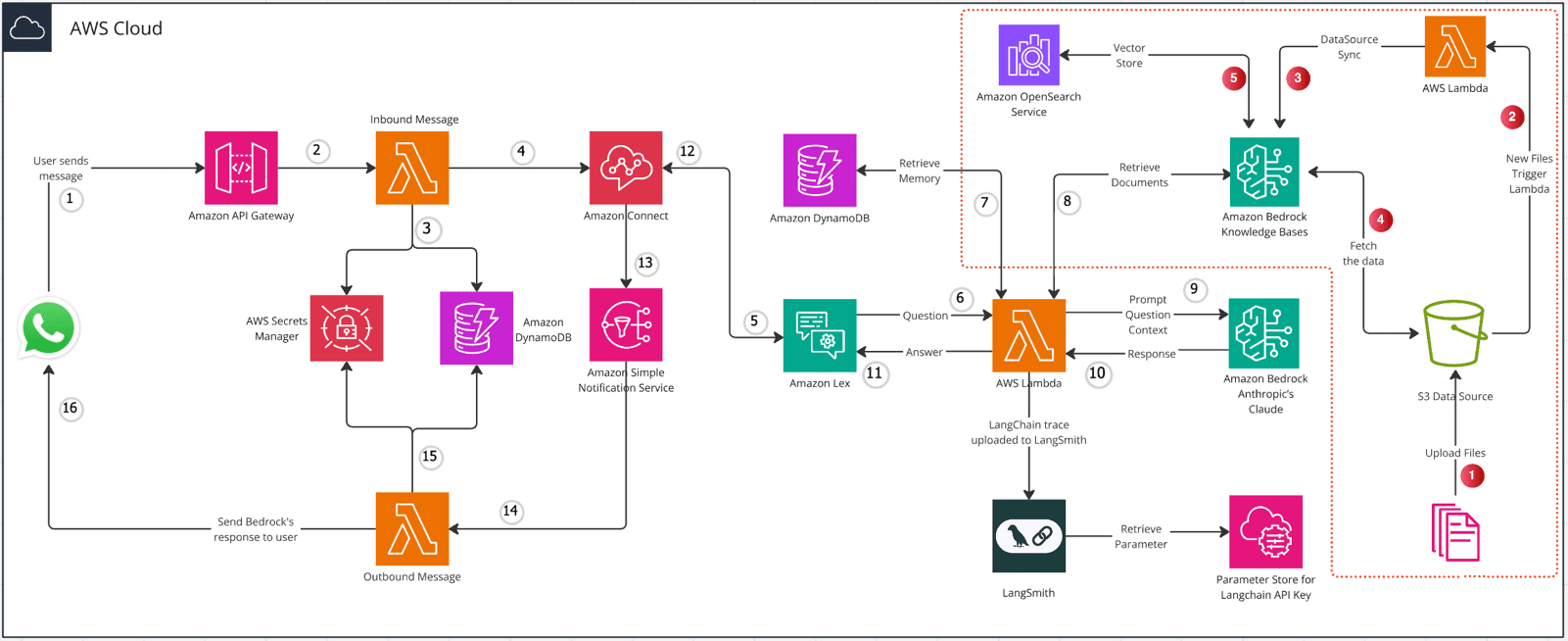

Positive, multi-dimensional scoring of both answers makes it easier to determine which answer is better.
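As a rough illustration of how per-dimension scores for two answers might be combined into a single verdict, here is a minimal sketch. The dimension names (`relevance`, `completeness`, `clarity`), the 1 to 5 scale, and the helper names `combined_score` and `pick_better` are all hypothetical and are not taken from the prompt itself; the weighted mean is just one plausible way to aggregate.

```python
from typing import Dict, Optional, Tuple


def combined_score(
    scores: Dict[str, float],
    weights: Optional[Dict[str, float]] = None,
) -> float:
    """Weighted mean of per-dimension scores (equal weights by default)."""
    if not scores:
        raise ValueError("scores must not be empty")
    if weights is None:
        weights = {dim: 1.0 for dim in scores}
    total_weight = sum(weights[dim] for dim in scores)
    return sum(scores[dim] * weights[dim] for dim in scores) / total_weight


def pick_better(
    scores_a: Dict[str, float],
    scores_b: Dict[str, float],
    weights: Optional[Dict[str, float]] = None,
) -> Tuple[str, float, float]:
    """Return ("A" | "B" | "tie", total_a, total_b) for two scored answers."""
    if scores_a.keys() != scores_b.keys():
        raise ValueError("both answers must be scored on the same dimensions")
    total_a = combined_score(scores_a, weights)
    total_b = combined_score(scores_b, weights)
    if total_a > total_b:
        winner = "A"
    elif total_b > total_a:
        winner = "B"
    else:
        winner = "tie"
    return winner, total_a, total_b


# Hypothetical scores on an assumed 1-5 scale; the dimensions are illustrative only.
answer_a = {"relevance": 4, "completeness": 3, "clarity": 5}
answer_b = {"relevance": 5, "completeness": 4, "clarity": 4}

winner, total_a, total_b = pick_better(answer_a, answer_b)
print(winner, round(total_a, 2), round(total_b, 2))
```

Keeping the per-dimension scores separate until the final step makes it possible to see why one answer wins, and passing a `weights` mapping lets a particular dimension count for more when comparing RAG strategies.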

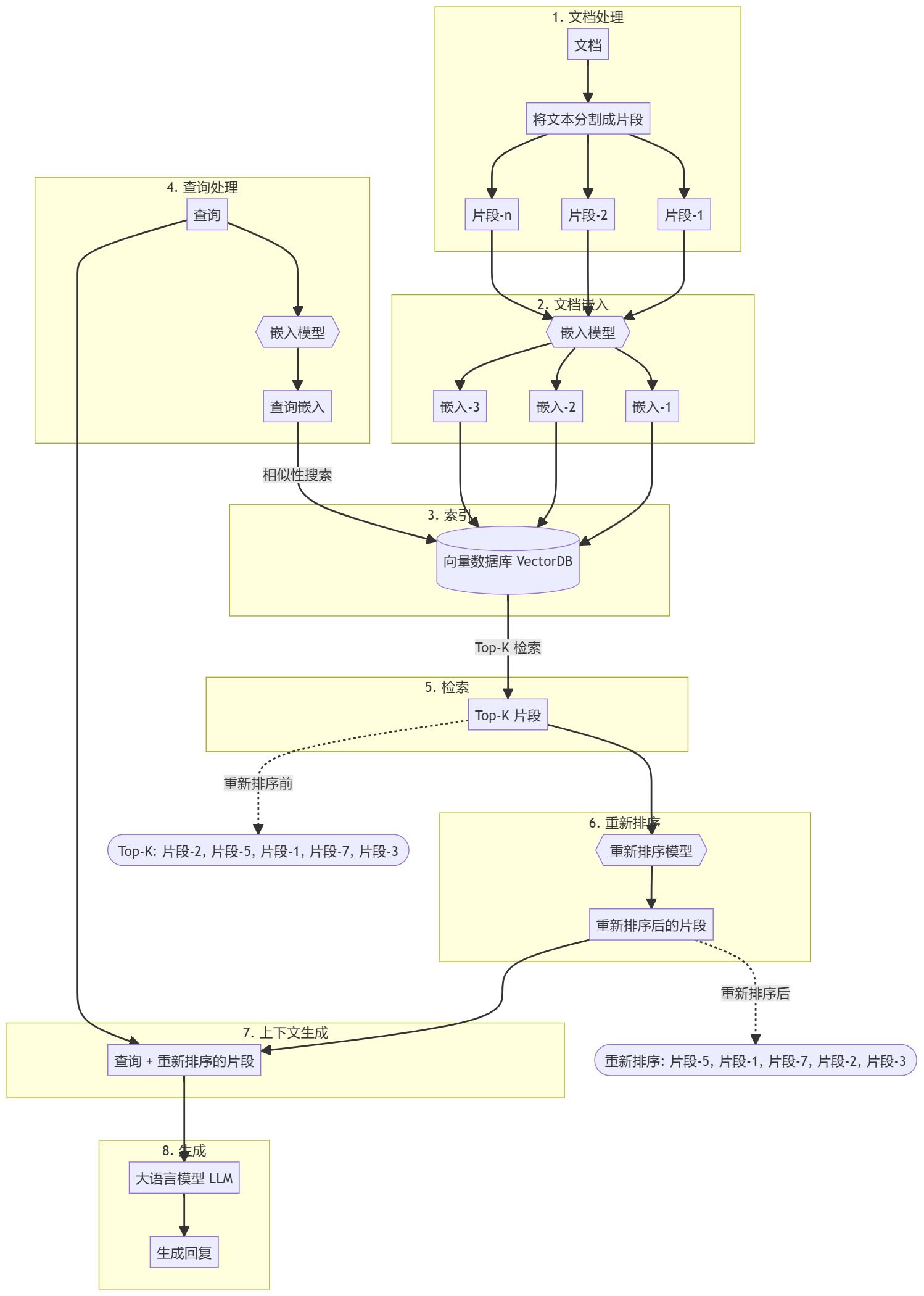

The #1 Chinese-language prompt on LangChain Hub. Released over a year ago, it is used to evaluate the combined scores of different RAG strategies, and it has since been translated and adapted for use in multiple languages.

Usage help: Evaluate which answer is better, assuming both answers are correct. Take ...